Validating Spectrophotometric Methods: A Complete Guide for Pharmaceutical Analysis

Abstract

This article provides a comprehensive guide to the validation of spectrophotometric methods for researchers, scientists, and drug development professionals. It explores the foundational principles and regulatory requirements from ICH, FDA, and USP, details the practical application of key validation parameters for UV-Vis techniques, and offers strategies for troubleshooting common issues and optimizing method performance. The content also covers the lifecycle management of validated methods, including transfer and revalidation, and discusses the role of spectrophotometry as a reliable, cost-effective alternative to chromatographic techniques in the quality control of pharmaceuticals and clinical research.

Core Principles and Regulatory Frameworks for Spectrophotometric Validation

In the highly regulated landscape of pharmaceutical development and manufacturing, method validation serves as a critical bridge between scientific innovation and patient safety. It provides the documented evidence that an analytical procedure is suitable for its intended purpose, ensuring that the data generated about a drug's quality, safety, and efficacy are reliable and reproducible. Regulatory authorities worldwide mandate method validation as a fundamental requirement under Good Manufacturing Practice (GMP) and Good Clinical Practice (GCP) frameworks to ensure that products consistently meet predetermined quality attributes throughout their lifecycle [1] [2]. For spectrophotometric techniques, which are widely employed for both drug substance analysis and clinical trial sample testing, rigorous validation demonstrates that these methods can accurately measure analyte concentration, detect impurities, and provide trustworthy data for critical decisions.

The current regulatory environment emphasizes a lifecycle approach to method validation, with recent updates to ICH guidelines Q2(R2) and Q14 refining requirements to accommodate technological advances and emerging analytical challenges [2]. These guidelines, integrated into GMP regulations such as 21 CFR Parts 210 and 211, establish method validation as non-negotiable for drug approval and commercial manufacturing [3]. Similarly, under GCP principles, validated analytical methods are essential for generating reliable data in clinical trials, where they may be used to analyze biological samples for therapeutic drug monitoring or to assess drug stability under various conditions [4] [5]. This application note examines the regulatory basis for method validation requirements and provides detailed protocols for validating spectrophotometric methods within the context of modern pharmaceutical quality systems.

Regulatory Foundations: GMP/GCP Requirements for Method Validation

Current Good Manufacturing Practice (cGMP) Provisions

The United States Food and Drug Administration (FDA) mandates through Current Good Manufacturing Practice (cGMP) regulations that all test methods used in pharmaceutical manufacturing must be verified for suitability under actual conditions of use. According to 21 CFR Part 211, which governs finished pharmaceuticals, laboratory controls must include "the calibration of instruments, apparatus, gauges, and recording devices at suitable intervals in accordance with an established written program containing specific directions, schedules, limits for accuracy and precision, and provisions for remedial action" [3]. The European Medicines Agency (EMA) similarly requires validated test methods as specified in EudraLex Volume 4, which references ICH guidelines for validation content [2].

These requirements are not merely administrative but are fundamentally designed to build quality into pharmaceutical products. As stated in cGMP principles, "quality, safety, and efficacy are built into the product" through validated processes and methods, recognizing that "the quality of the product cannot be adequately assured by in-process and finished-product inspection" alone [1]. Method validation thus represents a proactive approach to quality assurance, demonstrating that analytical procedures can consistently detect deviations from critical quality attributes before they impact product safety or performance.

Good Clinical Practice (GCP) and Data Integrity Requirements

Under Good Clinical Practice (GCP) frameworks, particularly the updated ICH E6(R3) guideline effective July 2025, method validation supports the generation of reliable clinical trial results through appropriate data management systems and processes [5] [6]. The guideline emphasizes that "computerized systems used to create, modify, maintain, archive, retrieve, or transmit source data should assure data quality and reliability," which inherently requires validated analytical methods when these systems are used for generating clinical trial data [6] [7].

For spectrophotometric methods used in clinical trial settings, compliance with electronic records requirements under 21 CFR Part 11 may also be necessary when automated spectrophotometers generate electronic data [8]. This regulation requires that systems be "validated to ensure accuracy, reliability, consistent intended performance, and the ability to discern invalid or altered records" [8], creating an additional layer of validation requirements beyond the analytical procedure itself. The integration of method validation within broader data integrity frameworks ensures that results from clinical samples are trustworthy and traceable throughout the data lifecycle.

ICH Guideline Updates: Q2(R2) and Q14

The recent adoption of ICH Q2(R2) "Validation of Analytical Procedures" and ICH Q14 "Analytical Procedure Development" represents the most significant evolution in method validation requirements in nearly two decades [2]. These complementary guidelines provide an updated framework for demonstrating method suitability, with Q2(R2) extending the scope of validation to include:

- Intermediates and in-process controls within the overall product control strategy

- Phase-appropriate validation recognizing different requirements during drug development lifecycle

- Multivariate and bio(techno)logical test methods beyond traditional chromatographic procedures

- Product-related range concept emphasizing validation across the entire reportable range [2]

These updates address previous shortcomings in linearity assessment and technology applicability, providing a more robust foundation for validating modern analytical techniques, including advanced spectrophotometric methods.

Method Validation Parameters for Spectrophotometric Techniques

Core Validation Characteristics

For spectrophotometric methods, validation must demonstrate adequacy of specific performance characteristics that collectively establish method suitability. The following table summarizes these key parameters, their definitions, and acceptance criteria for a hypothetical spectrophotometric assay:

Table 1: Key Validation Parameters for Spectrophotometric Methods

| Parameter | Definition | Typical Acceptance Criteria | Experimental Approach |

|---|---|---|---|

| Accuracy | Closeness between measured value and true value | Recovery: 98-102% for API; 95-105% for formulations | Spiked recovery experiments using placebo or synthetic mixtures |

| Precision | Repeatability under normal operating conditions | RSD ≤ 2% for assay of drug substance | Multiple measurements of homogeneous sample (n≥6) |

| Linearity | Ability to obtain results proportional to analyte concentration | Correlation coefficient (r) ≥ 0.998 | Minimum 5 concentrations across specified range (e.g., 50-150% of target) |

| Range | Interval between upper and lower concentration with suitable precision, accuracy, and linearity | Dependent on application; typically 80-120% of test concentration | Established from linearity and precision data |

| Specificity | Ability to measure analyte accurately in presence of potential interferents | No interference from placebo, degradation products, or matrix components | Compare samples with and without potential interferents |

| Detection Limit (LOD) | Lowest detectable amount of analyte | Signal-to-noise ratio ≥ 3:1 or calculated from standard deviation of blank | Successive dilution until signal disappears in noise |

| Quantitation Limit (LOQ) | Lowest quantifiable amount with acceptable precision and accuracy | Signal-to-noise ratio ≥ 10:1; RSD ≤ 5% at LOQ | Analysis of low concentration samples with precision evaluation |

| Robustness | Capacity to remain unaffected by small, deliberate variations in method parameters | System suitability criteria still met despite variations | Deliberate changes to wavelength, pH, extraction time, etc. |

These parameters align with ICH Q2(R2) recommendations and should be demonstrated experimentally for each spectrophotometric method based on its specific application within the pharmaceutical quality system [2]. The validation scope may vary between drug substances, finished products, and clinical trial samples, but the fundamental principles remain consistent across these applications.

Application to Spectrophotometric Methods

Spectrophotometric methods present unique validation considerations due to their reliance on light absorption properties and potential matrix effects. Specificity must be carefully established, particularly for formulations with multiple components or complex biological matrices in clinical trial samples [4]. The use of reagents such as complexing agents, oxidizing/reducing agents, pH indicators, or diazotization reagents introduces additional validation requirements to demonstrate reaction completeness and stability of the resulting chromophores [4].

For example, when employing complexing agents like ferric chloride for phenolic drugs or potassium permanganate as an oxidizing agent, validation must confirm that the complex formation is complete, reproducible, and stable throughout the analysis period [4]. Similarly, methods using pH indicators must demonstrate that color development is consistent across the specified pH range and unaffected by minor variations in buffer composition [4]. These method-specific considerations highlight the importance of tailoring validation protocols to the particular spectrophotometric technique and its intended application.

Experimental Protocols: Validation of Spectrophotometric Methods

Protocol for Method Validation: UV-Vis Spectrophotometric Assay

This protocol provides a standardized approach for validating spectrophotometric methods used in pharmaceutical analysis, adaptable to various drug substances and formulations.

Scope and Applications

This procedure applies to the validation of UV-Vis spectrophotometric methods for quantification of active pharmaceutical ingredients (APIs) in bulk drug substances, finished pharmaceutical products, and stability test samples. The protocol covers validation parameters per ICH Q2(R2) requirements [2].

Equipment and Reagents

- Double-beam UV-Vis spectrophotometer with validated performance (installation qualification/operational qualification/performance qualification documented)

- Matched quartz cells (1 cm pathlength)

- Analytical balance (calibrated)

- Reference standard of analyte (certified purity)

- Pharmaceutical grade reagents and solvents

- Appropriate volumetric glassware

Procedure for Linearity and Range

- Prepare stock solution of reference standard at known concentration (e.g., 1000 μg/mL)

- Dilute stock solution to prepare at least five standard solutions covering the range of 50-150% of target test concentration

- Scan each solution to determine wavelength of maximum absorption (λmax)

- Measure absorbance of each standard solution at λmax against blank solvent

- Plot absorbance versus concentration and calculate regression statistics

- Determine range from linearity data where correlation coefficient ≥ 0.998 and residuals show no systematic pattern
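
The regression step above can be sketched in a few lines of Python. This is a minimal illustration, not a validated calculation tool: the function name `linear_fit` and the absorbance readings are hypothetical, and only the 0.998 threshold comes from the procedure itself.

```python
from statistics import mean

def linear_fit(conc, absorbance):
    """Ordinary least-squares fit of absorbance = slope * conc + intercept."""
    x_bar, y_bar = mean(conc), mean(absorbance)
    sxx = sum((x - x_bar) ** 2 for x in conc)
    syy = sum((y - y_bar) ** 2 for y in absorbance)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(conc, absorbance))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    r = sxy / (sxx * syy) ** 0.5  # Pearson correlation coefficient
    residuals = [y - (slope * x + intercept) for x, y in zip(conc, absorbance)]
    return slope, intercept, r, residuals

# Hypothetical data: five standards at 50-150% of a 100 µg/mL target
conc = [50.0, 80.0, 100.0, 120.0, 150.0]
absorbance = [0.251, 0.402, 0.499, 0.601, 0.748]

slope, intercept, r, residuals = linear_fit(conc, absorbance)
print(f"slope={slope:.5f} AU per µg/mL, intercept={intercept:.4f} AU, r={r:.5f}")
print("residuals:", [round(e, 4) for e in residuals])
print("meets r >= 0.998:", r >= 0.998)
```

The residuals are printed alongside r because a high correlation coefficient alone does not rule out curvature; a run of residuals with the same sign at one end of the range is the kind of systematic pattern the procedure asks you to look for.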

Procedure for Accuracy (Recovery Studies)

- Prepare placebo mixture (excluding API) representing formulation composition

- Spike placebo with known quantities of API at three levels (80%, 100%, 120% of target)

- Prepare each level in triplicate and analyze using the proposed method

- Calculate recovery percentage for each spike level: (Measured Concentration/Theoretical Concentration) × 100

- Mean recovery should be 98-102% with RSD ≤ 2% for method acceptance
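
As a rough sketch of the recovery calculation, the snippet below applies the formula from the procedure to three spike levels in triplicate. The measured values and helper names (`percent_recovery`, `summarize`) are made up for illustration; the 98-102% and RSD ≤ 2% limits are the ones stated above.

```python
from statistics import mean, stdev

def percent_recovery(measured, theoretical):
    """(Measured concentration / theoretical concentration) x 100."""
    return measured / theoretical * 100.0

def summarize(values):
    """Return (mean, %RSD) using the sample standard deviation."""
    m = mean(values)
    return m, stdev(values) / m * 100.0

# Hypothetical spiked-placebo results in µg/mL, keyed by theoretical concentration
spikes = {
    80.0: [79.6, 80.3, 79.9],
    100.0: [99.5, 100.4, 100.1],
    120.0: [119.2, 120.6, 120.1],
}

all_recoveries = []
for theoretical, measured_values in spikes.items():
    recoveries = [percent_recovery(m, theoretical) for m in measured_values]
    all_recoveries.extend(recoveries)
    level_mean, level_rsd = summarize(recoveries)
    print(f"{theoretical:.0f} µg/mL: mean recovery {level_mean:.2f}%, RSD {level_rsd:.2f}%")

overall_mean, overall_rsd = summarize(all_recoveries)
accepted = 98.0 <= overall_mean <= 102.0 and overall_rsd <= 2.0
print(f"overall: {overall_mean:.2f}% recovery, RSD {overall_rsd:.2f}%, accepted={accepted}")
```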

Procedure for Precision

- Prepare six independent sample preparations from a homogeneous bulk sample

- Analyze all six preparations following the complete analytical procedure

- Calculate mean, standard deviation, and relative standard deviation (RSD) of results

- For intermediate precision, repeat the study on a different day with different analyst and equipment

- RSD should be ≤ 2% for both repeatability and intermediate precision
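
The RSD calculation can be illustrated as follows. The assay values are hypothetical, and pooling the two sets of six results is only one simple way to estimate intermediate precision (an assumption of this sketch); a variance-components analysis is a common alternative.

```python
from statistics import mean, stdev

def rsd_percent(values):
    """Relative standard deviation (%), using the sample standard deviation."""
    return stdev(values) / mean(values) * 100.0

# Hypothetical assay results (% of label claim) for six independent preparations
day1_analyst_a = [99.8, 100.4, 99.5, 100.9, 100.2, 99.7]
day2_analyst_b = [100.6, 99.9, 101.1, 100.3, 99.4, 100.8]

repeatability_rsd = rsd_percent(day1_analyst_a)
# Assumption: pooled RSD over both sets as a simple intermediate-precision estimate
intermediate_rsd = rsd_percent(day1_analyst_a + day2_analyst_b)

print(f"repeatability RSD: {repeatability_rsd:.2f}%")
print(f"intermediate precision RSD (pooled): {intermediate_rsd:.2f}%")
print("both within 2%:", repeatability_rsd <= 2.0 and intermediate_rsd <= 2.0)
```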

Procedure for Specificity

- Prepare solutions of placebo, API, stressed API (forced degradation), and finished product

- Record absorbance spectra of each solution from 200-400 nm

- Compare spectra to demonstrate no interference from placebo or degradation products at analyte λmax

- For methods using derivatization, demonstrate complete reaction and specificity of chromophore
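
One way to put a number on the spectral comparison is to express the placebo absorbance at the analyte λmax as a percentage of the analyte absorbance. The sketch below assumes spectra stored as wavelength-to-absorbance dictionaries; the readings and function names are hypothetical, and any pass/fail limit would have to come from the validation protocol rather than from this example.

```python
def lambda_max(spectrum):
    """Wavelength (nm) with the highest absorbance in a {wavelength: absorbance} map."""
    return max(spectrum, key=spectrum.get)

def interference_percent(interferent, analyte, wavelength):
    """Interferent absorbance as a percentage of analyte absorbance at one wavelength."""
    return abs(interferent[wavelength]) / analyte[wavelength] * 100.0

# Hypothetical, coarsely sampled spectra (nm -> absorbance units)
api = {240: 0.210, 250: 0.380, 260: 0.512, 270: 0.430, 280: 0.250}
placebo = {240: 0.012, 250: 0.006, 260: 0.004, 270: 0.003, 280: 0.002}

peak = lambda_max(api)
placebo_pct = interference_percent(placebo, api, peak)
print(f"analyte lambda max: {peak} nm")
print(f"placebo contributes {placebo_pct:.2f}% of analyte absorbance at {peak} nm")
```

The same comparison can be repeated with the stressed-API spectrum to check degradation products at the analytical wavelength.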

Enhanced Spectrophotometric Techniques: Derivative and Reagent-Based Methods

For spectrophotometric methods requiring reagent-based detection, additional validation elements are necessary. The studies below outline the development and validation work that these enhanced techniques call for:

Reagent Compatibility and Stability Studies

- Complexing Agent Evaluation: Test multiple complexing agents (ferric chloride, ninhydrin, etc.) for sensitivity and selectivity with target analyte [4]

- Reaction Time Course: Monitor chromophore development over time to establish optimal measurement window and stability duration

- pH Optimization: Evaluate method performance across pH range to establish optimal and tolerable ranges

- Temperature Dependence: Assess impact of temperature variations on reaction rate and completeness

Specificity Enhancement Protocols

- Placebo Interference Testing: Analyze complete placebo mixture with and without spiked analyte to detect potential interactions

- Forced Degradation Studies: Stress drug substance and product under acid/base, oxidative, thermal, and photolytic conditions, then analyze to demonstrate method stability-indicating capability

- Matrix Effect Evaluation: For biological samples, compare calibration in solvent versus biological matrix to quantify matrix effects
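
The solvent-versus-matrix comparison is often summarized by comparing calibration slopes. The snippet below is a sketch under that assumption: the slope-ratio formula is a common convention rather than something prescribed by the text above, and all data are hypothetical.

```python
from statistics import mean

def slope(x, y):
    """Least-squares slope of y against x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    return sxy / sxx

def matrix_effect_percent(slope_matrix, slope_solvent):
    """Negative values indicate signal suppression, positive values enhancement."""
    return (slope_matrix / slope_solvent - 1.0) * 100.0

# Hypothetical calibration data (µg/mL vs absorbance)
conc = [2.0, 4.0, 6.0, 8.0, 10.0]
abs_solvent = [0.100, 0.200, 0.300, 0.400, 0.500]
abs_matrix = [0.093, 0.186, 0.279, 0.372, 0.465]

me = matrix_effect_percent(slope(conc, abs_matrix), slope(conc, abs_solvent))
print(f"matrix effect: {me:+.1f}%")
```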

The Scientist's Toolkit: Essential Reagents and Materials

Successful development and validation of spectrophotometric methods requires carefully selected reagents and materials. The following table details key research reagent solutions used in spectrophotometric analysis of pharmaceuticals:

Table 2: Essential Reagents for Spectrophotometric Method Development

| Reagent Category | Specific Examples | Primary Function | Application Notes |

|---|---|---|---|

| Complexing Agents | Ferric chloride, Ninhydrin, Potassium permanganate | Form colored complexes with target analytes to enhance detection | Enables analysis of compounds lacking inherent chromophores; requires optimization of stoichiometry and pH [4] |

| Oxidizing/Reducing Agents | Ceric ammonium sulfate, Sodium thiosulfate | Modify oxidation state to create detectable chromophores | Essential for drugs lacking chromophores; useful for stability testing of oxidation-prone drugs [4] |

| pH Indicators | Bromocresol green, Phenolphthalein | Create color changes dependent on solution pH | Critical for acid-base titrations and ensuring proper pH for complex formation [4] |

| Diazotization Reagents | Sodium nitrite with HCl, N-(1-naphthyl)ethylenediamine | Form azo dyes with primary aromatic amines for sensitive detection | Highly sensitive for drugs containing primary aromatic amines; requires careful control of reaction conditions [4] |

| Solvents | Methanol, Ethanol, Water, Buffer solutions | Dissolve analytes and maintain optimal chemical environment | Must be spectrophotometric grade to minimize background absorption; affects λmax and sensitivity |

Implementation in Regulated Environments

Documentation and Compliance Requirements

Implementation of validated spectrophotometric methods in GMP/GCP environments requires comprehensive documentation, including:

- Validation Protocol: Pre-approved document defining scope, parameters, acceptance criteria, and experimental design

- Validation Report: Complete summary of experimental data, statistical analysis, and conclusion regarding method suitability

- Standard Operating Procedure (SOP): Detailed instructions for method execution, including system suitability criteria

- Electronic Data Integrity: For computerized spectrophotometers, compliance with 21 CFR Part 11 requirements for audit trails, electronic signatures, and data security [8]

Lifecycle Management and Ongoing Verification

Method validation is not a one-time event but requires ongoing monitoring throughout the method lifecycle. ICH Q2(R2) and Q14 encourage continued method verification through:

- System Suitability Tests: Routine checks to ensure method performance during actual use

- Change Control: Formal assessment of any modifications to method parameters

- Periodic Revalidation: Scheduled reevaluation based on risk assessment and historical performance data

- Method Transfer Protocol: Documented procedures for transferring validated methods between laboratories or sites [2]

Method validation stands as a cornerstone of pharmaceutical quality systems because it provides the scientific evidence that analytical methods consistently produce reliable results capable of supporting quality decisions. For spectrophotometric techniques, which offer simplicity, cost-effectiveness, and broad applicability across drug development and clinical testing, rigorous validation transforms basic analytical procedures into trustworthy tools for protecting patient safety and product quality. The evolving regulatory landscape, particularly with the implementation of ICH Q2(R2), Q14, and ICH E6(R3), continues to reinforce method validation's essential role in demonstrating both scientific validity and regulatory compliance. By implementing the protocols and principles outlined in this application note, researchers and pharmaceutical scientists can ensure their spectrophotometric methods generate data worthy of trust in high-stakes decisions about drug quality, safety, and efficacy.

For researchers and scientists employing spectrophotometric techniques, navigating the regulatory environment is fundamental to ensuring the quality, safety, and efficacy of pharmaceutical products. The core guidelines governing this space—ICH Q2, USP General Chapter <1225>, and FDA guidance documents—have recently undergone significant harmonization and modernization [9] [10]. A profound understanding of these guidelines is not merely a regulatory obligation but a critical component of robust scientific practice. This document frames the key principles and updated requirements within the context of the analytical procedure life cycle, providing detailed application notes and protocols tailored to spectrophotometric methods used in drug development.

The landscape is shifting from traditional, parameter-centric checklists towards a more holistic, risk-based approach focused on the "fitness for purpose" of an analytical procedure [9]. A pivotal change is the full adoption of ICH Q2(R2), which was implemented in key regions like Canada in October 2025 [11]. Concurrently, the United States Pharmacopeia (USP) has proposed a comprehensive revision of its general chapter <1225>, retitling it "Validation of Analytical Procedures" to better reflect its application for both compendial and non-compendial methods and to create stronger connectivity with the life cycle approach described in USP <1220> [9]. The U.S. Food and Drug Administration (FDA) has also updated its long-standing guidance on analytical method validation to align with ICH Q2(R2), streamlining traditional requirements and providing flexibility for modern techniques [10]. For scientists, this convergence underscores the importance of demonstrating that a method is reliable and suitable for its intended use throughout its entire life cycle.

The following table summarizes the core principles and recent evolution of the key regulatory guidelines.

Table 1: Key Regulatory Guidelines for Analytical Method Validation

| Guideline | Core Focus & Recent Updates | Primary Scope | Key Concepts for Spectrophotometry |

|---|---|---|---|

| ICH Q2(R2) [11] [10] [12] | Harmonized criteria for validation; Revision 2 (adopted by ICH in November 2023) incorporates validation for non-linear and multivariate methods (e.g., spectral data). | Analytical procedures for drug substance/product release and stability testing. | Defines fundamental validation parameters. Now explicitly accommodates complex spectral analysis beyond simple linearity. |

| USP <1225> [9] [13] | Validation of compendial & non-compendial procedures; Under revision (as of Nov 2025) to align with ICH Q2(R2) and USP <1220> life cycle. | Pharmaceutical quality control testing per USP-NF standards. | Emphasizes "Fitness for Purpose" and controlling uncertainty of the "Reportable Result". |

| FDA Guidance [10] | Reflects ICH Q2(R2); Focuses on critical validation parameters to show method reliability for routine use. | Regulatory submissions to the FDA for drug approval. | Streamlines parameters, refocusing on Specificity/Selectivity, Range, and Accuracy/Precision. |

A critical development is the enhanced connection between these documents. The proposed revision of USP <1225> is intentionally designed to align with ICH Q2(R2) and integrate into the analytical procedure life cycle, creating a more unified global framework [9]. Furthermore, the FDA's updated guidance now explicitly allows for the validation of multivariate analytical procedures, which is directly relevant to advanced spectrophotometric applications like the creation of spectral libraries for identity testing [10].

Core Validation Parameters and Application to Spectrophotometry

The following table details the fundamental validation characteristics as defined by the guidelines, with specific application notes for spectrophotometric techniques such as UV-Vis and IR spectroscopy.

Table 2: Validation Parameters and Spectrophotometric Application

| Parameter | Traditional Definition (ICH Q2(R1)) | Application in Spectrophotometric Analysis | Enhanced Considerations (ICH Q2(R2)/USP) |

|---|---|---|---|

| Specificity/Selectivity [10] | Ability to assess analyte unequivocally in the presence of components. | For assay: Compare sample spectrum with blank & placebo. For impurities: Resolve & measure analyte peaks from interfering species. | Demonstrated via stressed/aged samples. Lack of specificity may be compensated by orthogonal procedures. |

| Accuracy [10] [14] | Closeness of test results to the true value. | Spike & recover known analyte concentrations into placebo/blank. Analyze in triplicate at 3 levels (e.g., 80%, 100%, 120%). | For multivariate models, accuracy is evaluated via metrics like Root Mean Square Error of Prediction (RMSEP). |

| Precision [9] [10] | Degree of scatter among a series of measurements. | Repeatability: 6 determinations at 100% of the test concentration. Intermediate Precision: Different days/analysts/instruments. | Precision studies are now linked to controlling uncertainty of the Reportable Result, not just a predefined number of replicate measurements. |

| Linearity & Range [10] | Proportionality of response to analyte concentration & the interval between upper/lower levels. | Prepare & analyze standard solutions across a range (e.g., 50-150% of target). Plot response vs. concentration, determine R², slope, y-intercept. | Now includes non-linear responses (e.g., S-shaped curves). Range must cover specification limits (see Table 3). |

| LOD/LOQ [14] | Lowest concentration that can be detected/quantified. | Signal-to-Noise (S/N) ratio (e.g., LOD: S/N=3, LOQ: S/N=10) or based on standard deviation of response & slope. | Primarily required for impurity tests. The quantitation limit should be established if measuring analyte near the lower range limit. |
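
For the approach based on the standard deviation of the response and the slope, ICH Q2 gives LOD = 3.3σ/S and LOQ = 10σ/S, where σ is the standard deviation of the response and S is the slope of the calibration curve. The sketch below applies those formulas; the blank readings and slope are hypothetical, and using replicate blank readings to estimate σ is one of several accepted options.

```python
from statistics import stdev

def lod_loq(sigma, slope):
    """LOD = 3.3*sigma/slope and LOQ = 10*sigma/slope (ICH Q2 formulas)."""
    return 3.3 * sigma / slope, 10.0 * sigma / slope

# Hypothetical replicate blank absorbance readings (AU)
blank_readings = [0.0012, 0.0008, 0.0015, 0.0010, 0.0011,
                  0.0009, 0.0013, 0.0010, 0.0012, 0.0010]
sigma = stdev(blank_readings)
calibration_slope = 0.0050  # AU per µg/mL, hypothetical value from a linearity study

lod, loq = lod_loq(sigma, calibration_slope)
print(f"sigma = {sigma:.5f} AU")
print(f"LOD = {lod:.3f} µg/mL, LOQ = {loq:.3f} µg/mL")
```

Estimates obtained this way are normally confirmed experimentally by analyzing samples prepared near the calculated limits.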

Defining the Reportable Range

The updated guidelines provide clearer definitions for the reportable range of an analytical procedure, which must encompass the specification limits. The following table outlines these ranges for common test types.

Table 3: Newly Defined Analytical Test Method Ranges per FDA/ICH Guidance [10]

| Use of Analytical Procedure | Low End of Reportable Range | High End of Reportable Range |

|---|---|---|

| Assay of a Product | 80% of declared content or 80% of lower specification | 120% of declared content or 120% of upper specification |

| Content Uniformity | 70% of declared content | 130% of declared content |

| Impurity (Quantitative) | Reporting threshold | 120% of the specification acceptance criterion |

| Dissolution (Immediate Release) | Q-45% of the lowest strength or Quantitation Limit (QL) | 130% of declared content of the highest strength |
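
The assay and content uniformity rows of Table 3 can be turned into a quick check that a validated range covers the required reportable range. This is an illustrative sketch only: the function names are hypothetical, and the impurity and dissolution rows are left out because they depend on product-specific thresholds.

```python
def assay_range(declared, lower_spec=None, upper_spec=None):
    """80% of declared content (or of the lower spec) to 120% of declared (or of the upper spec)."""
    low = 0.8 * (lower_spec if lower_spec is not None else declared)
    high = 1.2 * (upper_spec if upper_spec is not None else declared)
    return low, high

def content_uniformity_range(declared):
    """70% to 130% of declared content."""
    return 0.7 * declared, 1.3 * declared

def covers(validated, required):
    """True if the validated (low, high) interval contains the required interval."""
    return validated[0] <= required[0] and validated[1] >= required[1]

# Hypothetical method validated over 50-150 µg/mL with a 100 µg/mL declared content
validated = (50.0, 150.0)
print("assay range covered:", covers(validated, assay_range(100.0)))
print("content uniformity range covered:", covers(validated, content_uniformity_range(100.0)))
print("narrow 90-110 validation covers assay:", covers((90.0, 110.0), assay_range(100.0)))
```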

Experimental Protocols for Spectrophotometric Method Validation

Protocol 1: Validation of a UV-Vis Spectrophotometric Assay for Drug Substance

This protocol provides a detailed methodology for validating a simple assay method for an active pharmaceutical ingredient (API) using a UV-Vis spectrophotometer, aligning with Category I tests per USP <1225> [13].

1. Scope and Purpose

To validate a UV-Vis spectrophotometric method for the quantitative determination of [API Name] in bulk drug substance. The method is intended to be accurate, precise, specific, and linear over the range of 50-150% of the target concentration of 100 µg/mL.

2. Experimental Workflow

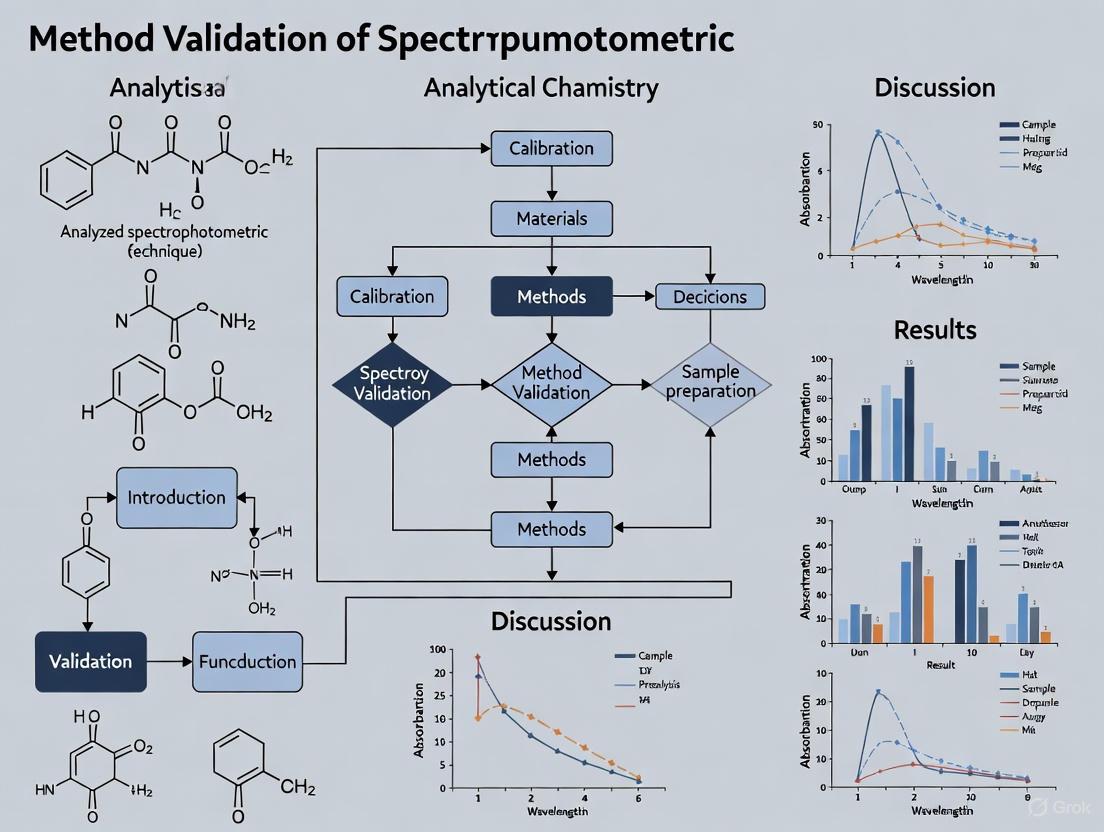

The following diagram illustrates the logical workflow for the method validation and transfer process.

3. Materials and Reagents

- API: [Chemical Name, Batch Number, Purity]

- Solvent: [e.g., 0.1N Hydrochloric Acid, HPLC Grade Water]

- Placebo/Excipients: [List all excipients expected in the final formulation]

- Equipment: UV-Vis Spectrophotometer (e.g., Agilent Cary 60), analytical balance, volumetric flasks, pipettes.

4. Procedure

- Standard Solution Preparation: Accurately weigh and dissolve reference standard API in solvent to obtain a stock solution of 1000 µg/mL. Dilute serially to prepare standard solutions at 50, 80, 100, 120, and 150 µg/mL.

- Sample Solution Preparation: Accurately weigh ~10 mg of test API into a 100 mL volumetric flask, dissolve, and dilute to volume with solvent to obtain a nominal concentration of 100 µg/mL.

- Specificity: Scan and record the spectra (e.g., 200-400 nm) of the solvent (blank), a placebo solution (if available), and the standard solution. Ensure no interference at the analytical wavelength (λ_max).

- Linearity and Range: Measure the absorbance of each standard solution (50-150 µg/mL) in triplicate. Plot mean absorbance vs. concentration and perform linear regression analysis.

- Accuracy (Recovery): Prepare placebo solutions (if applicable) spiked with API at 80%, 100%, and 120% of the target concentration (n=3 per level). Analyze and calculate the percentage recovery.

- Precision:

  - Repeatability: Analyze six independent sample preparations at 100% concentration.

  - Intermediate Precision: Perform the repeatability study on a different day or with a different instrument (if available).
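
The standard dilutions in the procedure above can be planned with the relation C1·V1 = C2·V2. The sketch below assumes each standard is diluted directly from the 1000 µg/mL stock into a 10 mL volumetric flask; the flask size and the direct (rather than serial) scheme are assumptions made for illustration.

```python
def aliquot_volume_ml(stock_conc, target_conc, final_volume_ml):
    """Volume of stock needed so that stock_conc * V1 = target_conc * final_volume."""
    if target_conc > stock_conc:
        raise ValueError("target concentration cannot exceed stock concentration")
    return target_conc * final_volume_ml / stock_conc

STOCK_UG_PER_ML = 1000.0   # stock concentration from the procedure
FLASK_ML = 10.0            # hypothetical final volume

for target in [50.0, 80.0, 100.0, 120.0, 150.0]:
    v = aliquot_volume_ml(STOCK_UG_PER_ML, target, FLASK_ML)
    print(f"{target:>5.0f} µg/mL: pipette {v:.2f} mL of stock, dilute to {FLASK_ML:.0f} mL")
```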

5. Acceptance Criteria

- Linearity: Coefficient of determination (R²) ≥ 0.998.

- Accuracy: Mean recovery between 98.0-102.0% for each level.

- Precision: Relative Standard Deviation (RSD) for repeatability and intermediate precision ≤ 2.0%.

Protocol 2: Validation of an FTIR Spectroscopic Method for Identity Testing

This protocol outlines the validation of a qualitative identity test using Fourier-Transform Infrared (FTIR) spectroscopy, corresponding to a Category IV test [10] [13].

1. Scope and Purpose

To validate an FTIR method for the identification of [API Name] by comparing the spectrum of a test sample to that of a reference standard.

2. Procedure

- Reference Standard Spectrum: Prepare a potassium bromide (KBr) pellet of the reference standard or use an ATR accessory. Collect the FTIR spectrum in the range of 4000-400 cm⁻¹ with a defined resolution (e.g., 4 cm⁻¹).

- Test Sample Spectrum: Prepare the test sample identically and collect its spectrum.

- Specificity: The test is considered specific if the spectrum of the test sample is identical in all critical absorption bands to that of the reference standard. To demonstrate discriminatory ability, also collect spectra of chemically similar compounds or common excipients to show they can be distinguished.

3. Enhanced Protocol for Multivariate/Model-Based Methods (per ICH Q2(R2))

For spectral library models, the validation approach expands [10]:

- Calibration Model: Use a well-characterized reference standard to establish a calibration spectrum library.

- Model Validation: Analyze test samples of the reference material, a representative sample, and one or more materials that differ from the analyte. This demonstrates the discriminative ability of the library model.

- Accuracy (for Qualitative Methods): Characterized using metrics like the misclassification rate or positive prediction rate.
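
The qualitative accuracy metrics can be computed from a simple tally of identification outcomes. In this sketch, "positive prediction rate" is interpreted as the positive predictive value, TP / (TP + FP); that interpretation, the function name, and the challenge-set results are all assumptions for illustration.

```python
def classification_metrics(results):
    """results: list of (is_analyte, identified_as_analyte) boolean pairs.

    Returns (misclassification_rate, positive_predictive_value).
    """
    tp = sum(1 for truth, pred in results if truth and pred)
    fp = sum(1 for truth, pred in results if not truth and pred)
    fn = sum(1 for truth, pred in results if truth and not pred)
    misclassification_rate = (fp + fn) / len(results)
    ppv = tp / (tp + fp) if (tp + fp) else float("nan")
    return misclassification_rate, ppv

# Hypothetical challenge set: 10 analyte samples, 10 chemically similar materials
results = (
    [(True, True)] * 10        # analyte samples, all matched to the library
    + [(False, False)] * 9     # different materials correctly rejected
    + [(False, True)] * 1      # one different material wrongly matched
)

rate, ppv = classification_metrics(results)
print(f"misclassification rate: {rate:.1%}")
print(f"positive predictive value: {ppv:.1%}")
```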

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table lists key materials and reagents critical for successfully executing the validation protocols for spectrophotometric methods.

Table 4: Essential Research Reagent Solutions for Spectrophotometric Validation

| Item | Function & Importance in Validation | Key Compliance Notes |

|---|---|---|

| USP/EP Reference Standards [15] | Highly purified and characterized substances used as benchmarks for identity, assay, and impurity testing. Essential for establishing method Accuracy and Specificity. | Must be obtained from authorized pharmacopeial organizations (USP, EDQM) to ensure reliability and traceability. |

| High-Purity Solvents | Used for sample and standard preparation. Impurities can cause spectral interference, affecting Specificity, baseline noise, and LOD/LOQ. | Use HPLC or spectroscopic grade. Verify absence of interfering UV/IR absorption. |

| Placebo/Excipient Mixtures | A blend of all inactive components of the formulation. Critical for demonstrating Specificity by proving no interference from excipients and for Accuracy via recovery studies. | Should be representative of the final drug product composition. |

| System Suitability Test (SST) Solutions [15] | A solution or mixture used to verify that the analytical system (spectrophotometer) is performing adequately at the time of the test. | Parameters (e.g., signal-to-noise, absorbance limits) should be established during method development and checked before validation runs. |

The Analytical Procedure Life Cycle: From Validation to Ongoing Verification

A modern understanding of method validation places it within a broader life cycle, as illustrated below. This holistic view ensures method performance is maintained over time.

The process begins with Analytical Procedure Development, which defines the Analytical Target Profile (ATP)—a summary of the required performance characteristics for the procedure [16]. This is followed by the formal Procedure Validation described in this document. Once validated, the method enters Routine Use, supported by a control strategy that includes System Suitability Tests (SSTs). Crucially, the life cycle now emphasizes Ongoing Procedure Performance Verification (as described in the new USP <1221>), which involves continuous monitoring to ensure the method remains in a state of control [9] [16]. All stages are supported by Knowledge Management, which involves documenting all data and decisions, forming the basis for sound science and regulatory confidence [9].

For drug development professionals, a deep and practical understanding of ICH Q2(R2), USP <1225>, and FDA expectations is non-negotiable. The guidelines are now more aligned than ever, promoting a science-based, life cycle approach to analytical procedures. The successful implementation of these principles for spectrophotometric techniques requires careful planning, execution, and documentation, as outlined in the provided application notes and protocols. By focusing on fitness for purpose, controlling the uncertainty of the reportable result, and committing to ongoing performance verification, researchers can ensure their analytical methods are not only compliant but also robust and reliable, thereby safeguarding public health and accelerating the delivery of new therapies.

In the realm of analytical chemistry, particularly for spectrophotometric techniques, the reliability of any developed method is paramount. Method validation provides documented evidence that a process consistently produces results meeting predetermined specifications and quality attributes. It is a critical component in research, pharmaceutical development, and quality control laboratories, ensuring data is accurate, precise, and reproducible. This document delineates the essential validation parameters—Specificity, Linearity, Accuracy, Precision, LOD, LOQ, and Robustness—framed within the context of spectrophotometric analysis. Adherence to these validated parameters, as guided by international standards like ICH Q2(R1), guarantees that spectrophotometric methods are fit for their intended purpose, from routine drug quantification to complex research applications [17] [18].

Core Validation Parameters: Definitions and Experimental Protocols

Specificity

Definition: Specificity is the ability of an analytical method to assess unequivocally the analyte in the presence of components that may be expected to be present, such as impurities, degradation products, and matrix components.

Experimental Protocol for Spectrophotometric Determination: A primary study to demonstrate specificity involves comparing the absorbance spectrum of the analyte in its pure form against samples containing the analyte spiked with potential interferents, such as excipients or known degradation products.

- Preparation of Solutions:

- Standard Solution: Prepare a solution of the pure analyte at a known concentration in a suitable solvent.

- Sample Solution with Interferents: Prepare a solution mimicking the final formulation, including all excipients or potential interferents, but without the analyte.

- Spiked Sample Solution: Prepare a solution containing the analyte at the same concentration as the standard, along with all the added interferents.

- Analysis: Scan and record the UV-Vis spectra (e.g., from 200-400 nm) of all three solutions using the same solvent as a blank.

- Evaluation: The method is considered specific if the spectrum of the standard analyte is identical in shape and λmax to the spectrum of the spiked sample solution. Furthermore, the solution containing only interferents should show no significant absorbance at the λmax used for quantification of the analyte. For instance, a study on liposomal hydroquinone confirmed specificity by demonstrating that the other liposomal components did not absorb at the analysis wavelength of 293 nm after appropriate dilution [17].
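The evaluation step above can be expressed as a simple numeric check. The following is a minimal sketch, not a prescribed procedure: the spectra are hypothetical values, and the 1 nm shift tolerance and 2% placebo limit are illustrative assumptions that a laboratory would replace with its own justified criteria.

```python
def lambda_max(spectrum):
    """Return the wavelength (nm) with the highest absorbance in a {nm: absorbance} dict."""
    return max(spectrum, key=spectrum.get)


def is_specific(standard, spiked, placebo, max_shift_nm=1.0, max_placebo_fraction=0.02):
    """Check that lambda-max is unchanged by the matrix and the placebo barely absorbs there.

    Both thresholds are assumed example limits, not regulatory values.
    """
    lam_std = lambda_max(standard)
    lam_spiked = lambda_max(spiked)
    shift_ok = abs(lam_std - lam_spiked) <= max_shift_nm
    placebo_fraction = abs(placebo[lam_std]) / standard[lam_std]
    return shift_ok and placebo_fraction <= max_placebo_fraction


# Hypothetical spectra (wavelength in nm -> absorbance), for illustration only.
standard = {283: 0.41, 288: 0.52, 293: 0.60, 298: 0.51, 303: 0.38}
spiked = {283: 0.42, 288: 0.53, 293: 0.61, 298: 0.52, 303: 0.39}
placebo = {283: 0.010, 288: 0.008, 293: 0.006, 298: 0.005, 303: 0.004}

print(lambda_max(standard), is_specific(standard, spiked, placebo))
```

A full comparison of spectral shape (for example, overlaying normalized spectra) would complement this check; the sketch only covers the λmax position and placebo absorbance.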

Linearity and Range

Definition: Linearity is the ability of a method to elicit test results that are directly proportional to the concentration of the analyte within a given range. The range is the interval between the upper and lower concentrations for which demonstrated linearity, accuracy, and precision are achieved.

Experimental Protocol for Calibration Curve Generation:

- Preparation of Stock Solution: Accurately weigh and dissolve the analyte to prepare a primary stock solution of high concentration.

- Preparation of Standard Solutions: Dilute the stock solution serially to prepare at least five to six standard solutions spanning the expected range. For example, a method for atorvastatin used concentrations of 20, 40, 60, 80, 100, and 120 µg/mL [18].

- Measurement: Measure the absorbance of each standard solution at the predetermined λmax.

- Data Analysis: Plot the absorbance (y-axis) against the corresponding concentration (x-axis). Perform linear regression analysis to obtain the slope, y-intercept, and coefficient of determination (R²). A high degree of linearity is typically indicated by an R² value ≥ 0.999, as demonstrated in both the atorvastatin (R² = 0.9996) and hydroquinone (R² = 0.9998) studies [17] [18].

Table 1: Exemplary Linearity Data from UV-Spectrophotometric Method Validation

| Analyte | Linear Range (µg/mL) | Regression Equation | Correlation Coefficient (R²) |

|---|---|---|---|

| Atorvastatin [18] | 20 - 120 | y = 0.01x + 0.0048 | 0.9996 |

| Hydroquinone [17] | 1 - 50 | Not Specified | 0.9998 |

| Urea (PDAB Method) [19] | Up to 100 | Not Specified | 0.9999 |
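The regression described in the linearity protocol can be sketched with ordinary least squares. The concentrations below follow the atorvastatin example levels, but the absorbance readings are hypothetical values chosen for illustration and are not taken from the cited study.

```python
def linear_fit(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x; returns (slope, intercept, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


concentrations = [20, 40, 60, 80, 100, 120]               # µg/mL
absorbances = [0.206, 0.403, 0.607, 0.802, 1.006, 1.204]  # hypothetical readings

slope, intercept, r_squared = linear_fit(concentrations, absorbances)
print(f"slope={slope:.5f}, intercept={intercept:.4f}, R2={r_squared:.5f}")
print("meets R2 >= 0.999:", r_squared >= 0.999)
```

Inspecting a residual plot alongside R² is generally advisable, since a high R² alone does not rule out curvature.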

Accuracy

Definition: Accuracy expresses the closeness of agreement between the measured value and a value accepted as a true or reference value. It is often reported as percent recovery.

Experimental Protocol via Recovery Study:

- Sample Preparation: Prepare a known quantity of the analyte (e.g., from a synthetic mixture or formulated product). Alternatively, spike a placebo sample with the analyte at three different concentration levels covering the range (e.g., 80%, 100%, and 120% of the target concentration).

- Analysis: Analyze each of these samples using the validated spectrophotometric method.

- Calculation: For each level, calculate the percentage recovery using the formula:

- % Recovery = (Measured Concentration / Theoretical Concentration) × 100

The mean recovery across all levels should ideally be between 98-102%. The hydroquinone study reported recoveries of 102 ± 0.8%, 99 ± 0.2%, and 98 ± 0.4% for the 80%, 100%, and 120% levels, respectively [17].
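The recovery calculation can be sketched as follows. The target concentration of 20 µg/mL and the triplicate results at each level are hypothetical, and the 98-102% window is the typical criterion quoted in the text rather than a universal limit.

```python
from statistics import mean


def percent_recovery(measured, theoretical):
    """% Recovery = (measured concentration / theoretical concentration) x 100."""
    return measured / theoretical * 100.0


def mean_recoveries(levels):
    """Mean % recovery per spike level from {label: (theoretical, [measured, ...])}."""
    return {
        label: mean(percent_recovery(m, theoretical) for m in measured)
        for label, (theoretical, measured) in levels.items()
    }


def within_limits(recoveries, low=98.0, high=102.0):
    """True if every mean recovery falls inside the acceptance window."""
    return all(low <= value <= high for value in recoveries.values())


# Hypothetical spiked-placebo results in µg/mL (assumed target: 20 µg/mL).
spiked_levels = {
    "80%": (16.0, [15.8, 16.1, 15.9]),
    "100%": (20.0, [19.9, 20.1, 20.0]),
    "120%": (24.0, [23.8, 24.2, 24.1]),
}

recoveries = mean_recoveries(spiked_levels)
for label, value in recoveries.items():
    print(f"{label}: {value:.2f}% recovery")
print("all levels within 98-102%:", within_limits(recoveries))
```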

Precision

Definition: Precision describes the closeness of agreement between a series of measurements obtained from multiple sampling of the same homogeneous sample under prescribed conditions. It is further subdivided into repeatability (intra-day precision) and intermediate precision (inter-day precision, often involving a different analyst or instrument on a different day).

Experimental Protocol:

- Repeatability (Intra-day): Prepare six independent samples of the analyte at 100% of the test concentration. Analyze all six samples on the same day, using the same instrument and analyst. Calculate the mean, standard deviation (SD), and relative standard deviation (%RSD).

- Intermediate Precision (Inter-day): Repeat the repeatability study on a different day (or with a different analyst). Calculate the SD and %RSD for this new set of results.

- Evaluation: The method is considered precise if the %RSD for both intra-day and inter-day studies is typically not more than 2%. The atorvastatin method validation reported excellent %RSD values of 0.2598 (intra-day) and 0.2987 (inter-day) [18].
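A minimal sketch of the %RSD calculation for the repeatability and intermediate precision sets is shown below. The six readings per set are hypothetical, and the sample standard deviation (n - 1 denominator) is assumed.

```python
from statistics import mean, stdev


def percent_rsd(values):
    """Relative standard deviation (%) using the sample standard deviation."""
    return stdev(values) / mean(values) * 100.0


# Hypothetical absorbance readings of six independent preparations at 100% concentration.
intra_day = [0.602, 0.604, 0.601, 0.603, 0.605, 0.602]
inter_day = [0.600, 0.606, 0.603, 0.599, 0.604, 0.607]

for label, data in (("intra-day", intra_day), ("inter-day", inter_day)):
    rsd = percent_rsd(data)
    print(f"{label}: %RSD = {rsd:.3f} (acceptable: {rsd <= 2.0})")
```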

Table 2: Summary of Precision and Accuracy Parameters from Validation Studies

| Analyte | Intra-day Precision (%RSD) | Inter-day Precision (%RSD) | Accuracy: Mean Recovery (%) | Accuracy: %RSD |

|---|---|---|---|---|

| Atorvastatin [18] | 0.2598 | 0.2987 | 99.65 | 0.043 |

| Hydroquinone [17] | < 2% | Not Specified | 98 - 102 | < 0.8 |

| Urea (PDAB Method) [19] | Not Specified | < 5% (Inter-lab) | 90 - 110 | Not Specified |

Limit of Detection (LOD) and Limit of Quantification (LOQ)

Definition:

- LOD: The lowest concentration of an analyte that can be detected, but not necessarily quantified, under the stated experimental conditions.

- LOQ: The lowest concentration of an analyte that can be quantified with acceptable accuracy and precision.

Experimental Protocol Based on Calibration Curve: This is a common and straightforward method for determining LOD and LOQ.

- Generate a Calibration Curve: As described in the linearity section.

- Calculation: Estimate the standard deviation of the response (σ) from the residual standard deviation of the regression or from the standard deviation of the y-intercept, and take the slope (S) from the calibration curve. Then LOD = 3.3σ/S and LOQ = 10σ/S.
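As a sketch of the calibration-curve approach, the snippet below applies the ICH expressions LOD = 3.3σ/S and LOQ = 10σ/S, taking σ as the residual standard deviation of the regression (one of the accepted estimates). The calibration data are hypothetical.

```python
import math


def lod_loq(conc, response):
    """Return (LOD, LOQ) from a linear calibration, with sigma = residual standard deviation."""
    n = len(conc)
    mean_x = sum(conc) / n
    mean_y = sum(response) / n
    sxx = sum((x - mean_x) ** 2 for x in conc)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(conc, response))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(conc, response))
    sigma = math.sqrt(ss_res / (n - 2))  # two fitted parameters, hence n - 2
    return 3.3 * sigma / slope, 10.0 * sigma / slope


# Hypothetical calibration data (µg/mL vs. absorbance).
conc = [20, 40, 60, 80, 100, 120]
absorbance = [0.206, 0.403, 0.607, 0.802, 1.006, 1.204]

lod, loq = lod_loq(conc, absorbance)
print(f"LOD = {lod:.2f} µg/mL, LOQ = {loq:.2f} µg/mL")
```

Because both limits share the same σ and S, the LOQ is always about three times the LOD; calculated values are usually confirmed by analyzing samples prepared near those concentrations.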

Robustness

Definition: Robustness is a measure of a method's capacity to remain unaffected by small, deliberate variations in method parameters (e.g., pH, wavelength, temperature), indicating its reliability during normal usage.

Experimental Protocol: A robustness study can be planned using an experimental design (e.g., a full or fractional factorial design) to efficiently test multiple factors.

- Identify Critical Parameters: Select factors that might influence the results, such as:

- Wavelength variation (± 1 nm from λmax)

- pH of the buffer (± 0.1 units)

- Temperature (± 2°C)

- Reaction time (± 5%)

- Different sources or batches of solvent

- Experimental Execution: Analyze a standard sample (e.g., at 100% concentration) under the nominal conditions and then under each varied condition.

- Evaluation: Monitor the impact on the results, such as changes in absorbance, measured concentration, or %RSD. A robust method will show minimal variation. The PDAB method for urea was noted for its robustness, exhibiting "minimal sensitivity to changes in critical factors" [19]. General best practices also recommend ensuring sample homogeneity and using compatible, non-absorbing buffers to enhance robustness [20].
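A two-level full factorial plan for a few of the factors listed above can be generated as in the following sketch. The nominal settings (283 nm, pH 7.0, 25 °C) are assumed values for illustration; the deltas are the example variations given in the protocol.

```python
from itertools import product

# Assumed nominal conditions (hypothetical) and the deliberate variations to test.
NOMINAL = {"wavelength_nm": 283.0, "ph": 7.0, "temperature_c": 25.0}
DELTAS = {"wavelength_nm": 1.0, "ph": 0.1, "temperature_c": 2.0}


def full_factorial(nominal, deltas):
    """Build every low/high combination of the factors (2**k runs for k factors)."""
    factors = list(nominal)
    runs = []
    for signs in product((-1, 1), repeat=len(factors)):
        run = {f: round(nominal[f] + s * deltas[f], 3) for f, s in zip(factors, signs)}
        runs.append(run)
    return runs


runs = full_factorial(NOMINAL, DELTAS)
for number, run in enumerate(runs, start=1):
    print(number, run)
```

With more than four or five factors the run count grows quickly, which is where a fractional factorial or Plackett-Burman design becomes the more practical choice.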

Visual Workflows and Relationships

Diagram 1: Method Validation Parameter Relationships

Diagram 2: Experimental Workflow for Accuracy & Precision

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents and Materials for Spectrophotometric Method Validation

| Reagent/Material | Function / Application | Key Considerations |

|---|---|---|

| High-Purity Analytical Standards [18] | Used to prepare stock and standard solutions for calibration curves and recovery studies. | Purity must be certified and known; essential for accurate linearity and accuracy determinations. |

| p-Dimethylaminobenzaldehyde (PDAB) [19] | Derivatizing reagent for quantifying urea; reacts to form a colored complex. | Solution stability is critical; optimized acidic conditions (H₂SO₄) are required for reproducibility. |

| UV-Transparent Quartz Cuvettes [20] [21] | Hold the sample solution in the spectrophotometer's light path. | Required for UV range measurements (e.g., nucleic acids, protein A280); must be clean and scratch-free. |

| Appropriate Solvent (e.g., Methanol, Ethanol) [17] [18] | Dissolves the analyte and is used for sample and blank preparation. | Must be transparent at the wavelength of analysis; should not contain absorbing impurities. |

| Buffers for pH Control [20] [19] | Maintains the pH of the solution, critical for reaction-based assays and robustness. | The buffer should not absorb at the wavelength of interest. Compatibility with the analyte is key. |


The rigorous validation of a spectrophotometric method is an indispensable process that underpins the generation of reliable and defensible analytical data. By systematically defining and evaluating the parameters of specificity, linearity, accuracy, precision, LOD, LOQ, and robustness, researchers and pharmaceutical scientists can ensure their methods are capable of producing results that are precise, accurate, and sensitive. The experimental protocols and acceptance criteria outlined herein, supported by contemporary research, provide a framework for establishing methods that are not only scientifically sound but also robust enough for transfer to quality control environments, thereby ensuring consistent product quality and bolstering the integrity of scientific research.

The reliability of analytical data in pharmaceutical development and quality control is paramount. Spectrophotometric methods, prized for their simplicity, specificity, and cost-effectiveness, are foundational techniques for the quantitative determination of active pharmaceutical ingredients (APIs) and other analytes [22] [23]. The journey of an analytical method from its initial conception to its steadfast application in a quality control laboratory follows a structured lifecycle. This lifecycle ensures the method is fit for its intended purpose, providing accurate, precise, and reproducible results throughout its use. This application note details the stages of this lifecycle—development, validation, and routine use—within the context of spectrophotometric techniques, providing structured protocols and data to guide researchers and drug development professionals.

The Analytical Method Lifecycle

The lifecycle of an analytical method is an iterative process designed to ensure continued fitness for purpose. It begins with strategic development, is confirmed through rigorous validation, and is maintained via controlled routine application and monitoring. The workflow below illustrates this interconnected process.

Stage 1: Method Development

Method development is the foundational stage where the analytical procedure is designed and optimized. The goal is to establish a protocol that is specific, robust, and suitable for the intended analyte.

Experimental Protocol: Initial Method Scouting

Objective: To select a suitable solvent and determine the wavelength of maximum absorption (λmax) for the analyte.

Materials:

- Analyte reference standard (e.g., Terbinafine HCl [22] or Levofloxacin [24])

- High-purity solvents (Water, Methanol, Acetonitrile, Ethanol) [25] [22] [24]

- Volumetric flasks (10 mL, 100 mL)

- Micropipettes

- UV-Visible Spectrophotometer (e.g., DeNovix DS-Series [20])

Procedure:

- Standard Stock Solution: Accurately weigh about 10 mg of the analyte reference standard. Transfer to a 100 mL volumetric flask, dissolve, and dilute to volume with the primary solvent (e.g., distilled water, or a solvent mixture like Water:Methanol:Acetonitrile 9:0.5:0.5 [24]) to obtain a stock solution of approximately 100 µg/mL.

- Working Solution: Pipette 0.5 mL of the standard stock solution into a 10 mL volumetric flask and dilute to mark with the same solvent to obtain a ~5 µg/mL solution [22].

- λmax Determination: Fill a cleaned quartz cuvette (1 cm pathlength) with the working solution. Using the spectrophotometer, scan the solution across the UV range (e.g., 200-400 nm) against a blank of the pure solvent [26] [23]. The wavelength corresponding to the highest absorbance peak is the λmax. An example is shown in the table below.

Table 1: Exemplar Method Development Parameters for Select APIs

| API | Recommended Solvent | Wavelength of Maximum Absorption (λmax) | Linear Range | Reference |

|---|---|---|---|---|

| Terbinafine Hydrochloride | Distilled Water | 283 nm | 5 - 30 µg/mL | [22] |

| Levofloxacin | Water:Methanol:Acetonitrile (9:0.5:0.5) | 292 nm | 1.0 - 12.0 µg/mL | [24] |

| Paracetamol | Methanol and Water | Not Specified | Applicable range defined | [23] |

| Hongjam Silkworm Extract | 0.5% Triton X-100 | Not Specified | Distinguishes 2.5% content difference | [25] |

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials and Reagents for Spectrophotometric Analysis

| Item | Function / Purpose | Exemplars & Technical Notes |

|---|---|---|

| UV-Vis Spectrophotometer | Measures the absorption of light by a sample. | Instruments with microvolume capabilities (e.g., DeNovix DS-Series) save sample [20]. |

| Cuvettes | Holds the sample solution in the light path. | Use UV-transparent quartz for UV range; handle by opaque sides to avoid smudges [20] [26]. |

| Reference Standard | The highly pure compound used to develop and calibrate the method. | Enables accurate quantification (e.g., Terbinafine HCl, Levofloxacin) [22] [24]. |

| High-Purity Solvents | Dissolves the analyte and serves as the blank matrix. | Water, methanol, acetonitrile; must be transparent at λmax and not react with analyte [20] [24]. |

| Volumetric Flasks & Pipettes | For precise preparation and dilution of standard and sample solutions. | Critical for achieving accurate concentrations and calibration curves [22]. |

| NIST-Traceable Standards | For instrument calibration and performance verification. | Holmium oxide filter for wavelength accuracy; neutral density filters for photometric accuracy [27]. |


Stage 2: Method Validation

Once developed, the method must be validated to demonstrate it is suitable for its intended use. Validation provides scientific evidence that the method is reliable, consistent, and accurate. The International Council for Harmonisation (ICH) guidelines define the key parameters to be assessed [22] [23].

Experimental Protocol: A Template for Validation

Objective: To determine the linearity, accuracy, precision, and sensitivity of the developed spectrophotometric method for an API.

Procedure:

- Linearity and Range:

- Prepare a series of standard solutions at a minimum of five concentrations across the specified range (e.g., 5, 10, 15, 20, 25, 30 µg/mL for Terbinafine HCl [22]).

- Measure the absorbance of each solution at the λmax.

- Plot absorbance versus concentration and perform linear regression analysis. A correlation coefficient (R²) of ≥ 0.999 is typically expected [22] [24].

- Accuracy (Recovery Study):

- Analyze a pre-analyzed sample solution (e.g., from a formulation) to know the base amount.

- Spike the sample with known amounts of the reference standard at three levels (typically 80%, 100%, and 120% of the target concentration) [22].

- Re-analyze the spiked samples and calculate the percentage recovery of the added standard. The mean recovery should ideally be between 98-102% [22].

- Precision:

- Repeatability (Intra-day): Analyze three different concentrations (e.g., 10, 15, 20 µg/mL) in triplicate, multiple times within the same day [22].

- Intermediate Precision (Inter-day): Analyze the same three concentrations over three different days, or by a different analyst [22].

- Calculate the % Relative Standard Deviation (%RSD) for each concentration set. An %RSD of < 2% is generally acceptable [22].

- Sensitivity: Limit of Detection (LOD) and Quantification (LOQ):

- Calculate LOD and LOQ from the standard deviation of the response (σ) and the slope of the calibration curve (S). LOD = 3.3σ/S, LOQ = 10σ/S [22].

Table 3: Summary of Validation Parameters and Acceptance Criteria

| Validation Parameter | Experimental Approach | Exemplar Results & Acceptance Criteria |

|---|---|---|

| Linearity | Absorbance measured at 5-6 concentration levels. | Terbinafine HCl: R² = 0.999 over 5-30 µg/mL [22]. Levofloxacin: R² = 0.9998 over 1-12 µg/mL [24]. |

| Accuracy | Recovery study at 80%, 100%, 120% levels. | Terbinafine HCl: %Recovery 98.54 - 99.98% [22]. Levofloxacin: %Recovery 99.00 - 100.07% [24]. |

| Precision (%RSD) | Multiple measurements of the same sample (n=6). | Terbinafine HCl: Intra-day and inter-day %RSD < 2% [22]. |

| Sensitivity | Calculated from calibration curve. | Terbinafine HCl: LOD = 0.42 µg, LOQ = 1.30 µg [22]. |

| Ruggedness | Analysis by different analysts or instruments. | Terbinafine HCl: %RSD < 2% between analysts [22]. |

Stage 3: Routine Use and Maintenance

The transition of a validated method to routine analysis requires strict adherence to the approved procedure, coupled with robust instrument care and ongoing performance checks to ensure data integrity over time.

Workflow for Routine Analysis and Monitoring

The following workflow outlines the critical steps for maintaining analytical control during the routine use of the method.

Protocol for Ensuring Instrumental Integrity

Objective: To maintain spectrophotometer performance through calibration and system suitability tests.

Procedure:

- Instrument Calibration:

- Warm-up: Turn on the instrument and allow it to warm up for at least 15-30 minutes to stabilize the light source and electronics [26] [27].

- Zero/Baseline Calibration: Use the pure solvent (blank) to set the zero-absorbance (100% transmittance) baseline. This corrects for any background signal from the solvent or cuvette [26].

- Periodic Checks: Perform weekly or monthly verification of wavelength accuracy using holmium oxide filters and photometric accuracy using NIST-traceable neutral density filters [27].

- System Suitability Test:

- Prior to analyzing a batch of samples, prepare and analyze a system suitability standard (a mid-point concentration from the calibration curve).

- The measured absorbance of this standard should fall within a pre-defined range (e.g., ±2% of the established value). This ensures the entire system (instrument, reagents, and protocol) is functioning correctly on that day [27].

- Sample Analysis:

- Follow the standard operating procedure (SOP) derived from the validated method for all sample preparations.

- Ensure samples are homogenous and free of bubbles before measurement [20].

- Use a fresh pipette tip for each sample and solution to prevent cross-contamination [26].

- Record all data, including sample identification, absorbance readings, and any observations, in a controlled laboratory notebook or data management system.
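The system suitability check described in this protocol reduces to a tolerance comparison that can be run before each batch. This is a minimal sketch: the established absorbance of 0.500 and the readings are hypothetical, and the ±2% window is the example limit mentioned above.

```python
def sst_passes(measured, established, tolerance_pct=2.0):
    """True if the suitability standard reads within +/- tolerance_pct of its established value."""
    deviation_pct = abs(measured - established) / established * 100.0
    return deviation_pct <= tolerance_pct


ESTABLISHED_ABSORBANCE = 0.500  # hypothetical value fixed during validation

for reading in (0.505, 0.515):
    status = "proceed" if sst_passes(reading, ESTABLISHED_ABSORBANCE) else "investigate"
    print(f"SST reading {reading:.3f}: {status}")
```

Logging each suitability result over time also supports trending, which helps detect gradual instrument drift before a failure occurs.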

In the pharmaceutical sciences, the analytical method is a critical tool for ensuring the identity, strength, quality, and purity of drug substances and products. The concept of "fitness for purpose" signifies that the validation level of an analytical method must be directly aligned with its intended application [23]. A method designed for routine quality control (QC) of a finished product, for instance, requires a different validation approach than one developed for stability-indicating analysis or pharmacokinetic studies.

This application note provides a structured framework for linking method objectives to a corresponding validation strategy, using UV-Spectrophotometry as a model technique. UV-Spectrophotometry remains a popular choice due to its simplicity, rapidity, and cost-effectiveness [22] [28]. We detail the development and validation of a specific UV method for Terbinafine Hydrochloride, presenting the experimental protocol, validation data, and a clear pathway for ensuring the method is fit for its intended purpose.

Method Objectives and Validation Parameters

The initial and most crucial step in any analytical lifecycle is defining the method's objective. This definition directly dictates which validation parameters need to be evaluated and to what stringency. The International Council for Harmonisation (ICH) guideline Q2(R1) provides the foundational basis for this decision-making process [22] [28] [29].

Table 1: Linking Method Objective to Validation Strategy

| Method Objective | Core Validation Parameters | Typical Acceptance Criteria |

|---|---|---|

| Release & Quality Control Testing | Specificity, Accuracy, Precision, Linearity | Accuracy: 98-102%; RSD: < 2% [22] |

| Stability-Indicating Methods | Specificity, Forced Degradation Studies | Must demonstrate that the analyte can be quantified without interference from its degradation products |

| Determination of Impurities | Specificity, LOD, LOQ | LOQ: Signal/Noise ≥ 10 [28] |

| Pharmacokinetic/ Bioanalysis | Specificity, LOD, LOQ, wider Range | High sensitivity for low concentration detection |

The following diagram illustrates the logical workflow for establishing fitness for purpose, from defining the objective to implementing the method.

Experimental Protocol: A Case Study of Terbinafine Hydrochloride

To illustrate the practical application of these principles, we detail the development and validation of a UV-spectrophotometric method for the analysis of Terbinafine Hydrochloride (TER-HCL) in a bulk substance and a tablet formulation [22].

The Scientist's Toolkit: Essential Materials and Reagents

Table 2: Key Research Reagent Solutions and Equipment

| Item | Specification / Function |

|---|---|

| Terbinafine HCl | Reference Standard (e.g., from Dr. Reddy's Lab) [22] |

| Distilled Water | Solvent for dissolution and dilution [22] |

| UV-Vis Spectrophotometer | e.g., Shimadzu 1700 with 1.0 cm quartz cells [22] [28] |

| Volumetric Flasks | Class A, for precise preparation of standard and sample solutions |

| Analytical Balance | For accurate weighing of the reference standard |


Detailed Experimental Workflow

The entire analytical procedure, from instrument preparation to sample analysis, is outlined in the workflow below.

Step-by-Step Procedure:

- Instrument Preparation: Turn on the UV-spectrophotometer and allow it to warm up for at least 30 minutes to ensure stable lamp and electronics. Use distilled water in the quartz cell to set the baseline or blank [22] [27].

- Standard Stock Solution: Accurately weigh and transfer 10 mg of TER-HCL reference standard into a 100 mL volumetric flask. Dissolve and dilute to volume with distilled water to obtain a final concentration of 100 µg/mL [22].

- Wavelength Determination (λmax): Pipette 0.5 mL of the standard stock solution into a 10 mL volumetric flask and dilute to mark with distilled water (5 µg/mL). Scan this solution against a blank from 200 nm to 400 nm. The maximum absorbance (λmax) for TER-HCL was found to be 283 nm [22].

- Calibration Curve: Pipette appropriate aliquots (0.5, 1.0, 1.5, 2.0, 2.5, 3.0 mL) of the 100 µg/mL stock solution into a series of 10 mL volumetric flasks. Dilute to volume with distilled water to obtain concentrations of 5, 10, 15, 20, 25, and 30 µg/mL, respectively. Measure the absorbance of each solution at 283 nm and plot a graph of absorbance versus concentration [22].

- Sample Preparation: Weigh and finely powder 20 tablets. Transfer an amount of powder equivalent to 10 mg of TER-HCL into a 100 mL volumetric flask. Add about 30 mL of distilled water, sonicate for 15 minutes to facilitate dissolution, then make up to volume with distilled water (nominally 100 µg/mL). Filter the solution and further dilute a suitable aliquot (e.g., 2.0 mL to 10 mL) to obtain a concentration within the linear range (e.g., ~20 µg/mL) [22].

- Analysis: Measure the absorbance of the prepared sample solution at 283 nm. Calculate the concentration of TER-HCL in the sample using the linear regression equation derived from the calibration curve [22].
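The dilution and back-calculation arithmetic in the procedure above can be sketched as follows. The slope and intercept are those of the regression equation reported for TER-HCL in Table 3 (Y = 0.0343X + 0.0294); the sample absorbance is a hypothetical reading.

```python
SLOPE = 0.0343      # absorbance per µg/mL, from the reported regression equation
INTERCEPT = 0.0294  # absorbance units


def diluted_concentration(stock_ug_per_ml, aliquot_ml, final_volume_ml):
    """C2 = C1 * V1 / V2 for a single dilution step."""
    return stock_ug_per_ml * aliquot_ml / final_volume_ml


def concentration_from_absorbance(absorbance):
    """Invert the calibration line: X = (Y - intercept) / slope."""
    return (absorbance - INTERCEPT) / SLOPE


# Calibration levels from the 100 µg/mL stock, each aliquot diluted to 10 mL.
aliquots_ml = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
levels = [diluted_concentration(100.0, v, 10.0) for v in aliquots_ml]
print("calibration levels (µg/mL):", levels)

sample_absorbance = 0.715  # hypothetical reading at 283 nm
print(f"sample concentration: {concentration_from_absorbance(sample_absorbance):.2f} µg/mL")
```

Multiplying the result by the overall dilution factor gives the concentration in the original sample solution, from which the content per tablet follows.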

Validation Data and Acceptance Criteria

The developed method for TER-HCL was rigorously validated as per ICH guidelines. The quantitative results for each validation parameter are summarized below, demonstrating that the method meets standard acceptance criteria for its purpose.

Table 3: Summary of Validation Parameters for the TER-HCL Method [22]

| Validation Parameter | Experimental Results | Acceptance Criteria |

|---|---|---|

| Linearity Range | 5 - 30 µg/mL | -- |

| Correlation Coefficient (r²) | 0.999 | r² ≥ 0.995 |

| Regression Equation | Y = 0.0343X + 0.0294 | -- |

| Accuracy (% Recovery) | 98.54% - 99.98% | 98 - 102% |

| Precision (% RSD) | | |

|   - Repeatability (n=6) | < 2% | RSD ≤ 2% |

|   - Intra-day (n=3) | < 2% | RSD ≤ 2% |

|   - Inter-day (n=3 over 3 days) | < 2% | RSD ≤ 2% |

| LOD / LOQ | 0.42 µg / 1.30 µg | -- |

| Ruggedness (Between Analysts) | % RSD < 2% | RSD ≤ 2% |

Discussion: Interpreting Validation Outcomes

The data presented in Table 3 confirms that the method is fit for its intended purpose as a QC assay for TER-HCL in tablets.

- Linearity and Range: The correlation coefficient of 0.999 over 5-30 µg/mL demonstrates an excellent linear relationship, which is essential for accurate quantification [22]. The range adequately covers the expected sample concentration (around 20 µg/mL after dilution).

- Accuracy and Precision: The recovery results of 98.54-99.98% indicate that the method is highly accurate, with minimal interference from the tablet excipients [22]. The % RSD for all precision measures being below 2% confirms the method's reliability and reproducibility, a critical requirement for routine testing [22] [28].

- Sensitivity: The determined LOD and LOQ values are sufficiently low relative to the assay concentration, confirming the method can easily detect and quantify the analyte at the levels of interest.

- Ruggedness: The low variability between different analysts indicates that the method is rugged and is a good candidate for transfer to other operators or laboratories [22].

This application note demonstrates a systematic strategy for establishing fitness for purpose in analytical methods. By first defining the method's objective (in this case, a QC assay for Terbinafine Hydrochloride tablets), a targeted validation strategy was designed and executed. The experimental data show that the developed UV-spectrophotometric method is simple, rapid, accurate, precise, and rugged. It is therefore well suited to its intended application in the routine analysis of bulk and formulated TER-HCL, supporting product quality and patient safety. This framework can be adapted to develop and validate analytical methods for a wide range of pharmaceutical compounds.

Implementing Key Validation Parameters in Spectrophotometric Analysis

In the field of pharmaceutical analysis, demonstrating the specificity of an analytical method is a fundamental requirement for method validation, proving its ability to accurately measure the analyte in the presence of other components that may be expected to be present in the sample matrix [30] [4]. For spectrophotometric techniques, this is particularly challenging when analyzing complex drug mixtures or formulations with overlapping spectral profiles and potential interferents such as degradation products or process-related impurities [30] [31]. This article explores advanced spectrophotometric techniques and provides detailed protocols for rigorously assessing and establishing method specificity, enabling researchers to ensure the reliability and accuracy of their analytical methods in pharmaceutical development and quality control.

Advanced Techniques for Resolving Spectral Interference

Mathematical Spectrophotometric Methods

Conventional ultraviolet-visible spectrophotometry, while simple and cost-effective, often lacks sufficient specificity for directly analyzing complex mixtures due to significant spectral overlap [4]. Advanced mathematical manipulation techniques enhance specificity by resolving these overlapping spectra without physical separation. The table below summarizes key techniques and their applications for assessing interference in complex mixtures.

Table 1: Advanced Spectrophotometric Techniques for Resolving Spectral Interferences

| Technique | Principle | Application Example | Key Advantage |

|---|---|---|---|

| Ratio Difference (RD) [31] | Measures the difference in peak amplitudes at two wavelengths in the ratio spectrum of a mixture. | Determination of Lidocaine in presence of its carcinogenic impurity, 2,6-dimethylaniline (DMA) [31]. | Resolves severely overlapping spectra; simple calculations. |

| Derivative Ratio Spectrum [31] | Applies derivative transformation to the ratio spectrum of a mixture divided by a divisor of one component. | Resolving overlapping peaks of Lidocaine and Oxytetracycline HCl [31]. | Enhances resolution of closely spaced or overlapping peaks. |

| Double Divisor-Ratio Spectra Derivative (DD-RS-DS) [30] | Uses the sum of spectra of two other components as a divisor before derivative processing. | Simultaneous assessment of Nebivolol and Valsartan in presence of Valsartan impurity (VAL-D) [30]. | Enables quantification of one analyte in the presence of two potential interferents. |

| Dual Wavelength in Ratio Spectrum (DWRS) [30] | Selects two wavelengths in the ratio spectrum where the interferent shows the same absorbance. | Analysis of Nebivolol and Valsartan using a divisor of the impurity VAL-D [30]. | Cancels out the contribution of the interfering component. |

| H-Point Derivative Ratio (HDR) [30] | Uses the amplitude values of the derivative ratio spectrum at two wavelengths where the interferent has equal absorbance. | Quantifying Nebivolol in laboratory-prepared mixtures with interferents [30]. | Allows determination of the analyte and can also quantify the interferent. |

| Constant Multiplication (CM) [31] | Identifies a constant region in the ratio spectrum of an extended component, which is then multiplied by its divisor to obtain its original spectrum. | Isolation and determination of the Lidocaine impurity DMA from a ternary mixture [31]. | Can isolate the spectrum of one component for individual quantification. |

Multivariate Calibration and Chemometric Techniques

For highly complex mixtures, multivariate calibration techniques such as Partial Least Squares (PLS) and Principal Component Regression (PCR) are powerful tools [31]. These methods utilize the entire spectral data rather than a few selected wavelengths, building a mathematical model to correlate spectral changes with analyte concentrations. They are exceptionally useful for analyzing multi-component systems where univariate techniques face limitations and are considered a key part of the modern "scientist's toolkit" for handling intricate interference challenges [31].
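The idea of using the whole spectrum can be illustrated with a minimal principal component regression sketch. It assumes NumPy only, uses synthetic Gaussian bands in place of real spectra, and the helper names (`band`, `fit_pcr`, `predict_pcr`) are hypothetical. It is an illustration of PCR under these assumptions, not the procedure used in the cited work, and PLS is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
wavelengths = np.linspace(200.0, 400.0, 101)  # nm, hypothetical grid


def band(center, width, height):
    """Synthetic Gaussian absorption band (absorbance per unit concentration)."""
    return height * np.exp(-0.5 * ((wavelengths - center) / width) ** 2)


# Hypothetical, overlapping pure-component spectra: row 0 = analyte, row 1 = interferent.
pure = np.vstack([band(240.0, 15.0, 0.05), band(255.0, 18.0, 0.04)])

# Calibration set: 12 mixtures with known concentrations plus small simulated noise.
C_cal = rng.uniform(1.0, 10.0, size=(12, 2))
X_cal = C_cal @ pure + rng.normal(0.0, 1e-4, size=(12, wavelengths.size))
y_cal = C_cal[:, 0]  # analyte concentration is the quantity to predict


def fit_pcr(X, y, n_components):
    """Regress y on the first n_components principal component scores of X."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    _, _, vt = np.linalg.svd(X - x_mean, full_matrices=False)
    loadings = vt[:n_components].T            # shape: (n_wavelengths, n_components)
    scores = (X - x_mean) @ loadings          # shape: (n_samples, n_components)
    coef, *_ = np.linalg.lstsq(scores, y - y_mean, rcond=None)
    return x_mean, y_mean, loadings, coef


def predict_pcr(model, X):
    x_mean, y_mean, loadings, coef = model
    return (X - x_mean) @ loadings @ coef + y_mean


model = fit_pcr(X_cal, y_cal, n_components=2)

# Predict the analyte in a new mixture (4 units analyte, 6 units interferent).
X_test = np.array([[4.0, 6.0]]) @ pure
predicted = float(predict_pcr(model, X_test)[0])
print(f"predicted analyte concentration: {predicted:.3f}")
```

Two components are retained here because the synthetic mixtures contain two absorbing species; with real data the number of components would normally be chosen by cross-validation.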

Experimental Protocols for Specificity Assessment

Protocol 1: Specificity Assessment Using the Ratio Difference Method

This protocol is adapted from a study determining Lidocaine HCl (LD) in the presence of its carcinogenic impurity, 2,6-dimethylaniline (DMA) [31].

- Objective: To establish the specificity of a method for quantifying Lidocaine in a mixture with its impurity, DMA.

- Materials and Reagents:

- Standard stock solutions: Lidocaine HCl (100 µg/mL) and DMA (100 µg/mL) in a suitable solvent like acetonitrile.

- Laboratory-prepared mixtures: Containing varying ratios of LD and DMA.

- Instrumentation: Double-beam UV-Vis spectrophotometer with 1 cm quartz cells.

- Procedure:

- Scan and store the zero-order absorption spectra (200-400 nm) of a series of standard LD solutions (e.g., 1.0–9.0 µg/mL), a standard DMA solution (e.g., 10 µg/mL) to be used as a divisor, and the laboratory-prepared mixtures.

- Divide the stored zero-order absorption spectra of both the standard LD solutions and the laboratory-prepared mixtures by the normalized spectrum of the DMA standard (the divisor).

- Store the quotients from the previous division step; these are the ratio spectra.

- For each standard LD ratio spectrum, measure the peak amplitudes at two carefully selected wavelengths (λ1 and λ2). In the cited study, 218 nm and 230 nm were used for LD [31].

- Calculate the difference in amplitudes (ΔP) between these two wavelengths for each standard: ΔP = Pλ1 - Pλ2.

- Construct a calibration curve by plotting the ΔP values against the corresponding concentrations of pure LD.

- Process the laboratory-prepared mixtures identically (division by the DMA divisor, amplitude measurement at λ1 and λ2, and calculation of ΔP). Use the calibration curve to determine the concentration of LD in each mixture.

- Specificity Evaluation:

- Calculate the % recovery of LD from the laboratory-prepared mixtures. Recovery values close to 100% demonstrate that the method is unaffected by the presence of the impurity DMA, thus proving specificity.

- Statistically compare the results (e.g., using a t-test) with those from a reference method, if available.
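The arithmetic behind Protocol 1 can be sketched numerically. Dividing a mixture spectrum by the DMA divisor turns the DMA contribution into a constant, which cancels when the amplitudes at the two wavelengths are subtracted. The sketch below assumes NumPy, uses synthetic Gaussian profiles rather than real LD or DMA spectra, and takes the 10 µg/mL DMA spectrum directly as the divisor; the function names are hypothetical.

```python
import numpy as np

wavelengths = np.arange(200.0, 401.0, 1.0)  # nm


def gaussian_band(center, width, height):
    return height * np.exp(-0.5 * ((wavelengths - center) / width) ** 2)


# Hypothetical absorptivity profiles (absorbance per µg/mL); NOT measured spectra.
a_ld = gaussian_band(215.0, 12.0, 0.060) + gaussian_band(262.0, 10.0, 0.004)
a_dma = gaussian_band(210.0, 15.0, 0.080) + gaussian_band(235.0, 14.0, 0.050)

divisor = 10.0 * a_dma  # spectrum of a 10 µg/mL DMA standard


def ratio_difference(spectrum, lam1=218.0, lam2=230.0):
    """Delta P = P(lam1) - P(lam2) measured on the ratio spectrum."""
    ratio = spectrum / divisor
    i1 = int(np.argmin(np.abs(wavelengths - lam1)))
    i2 = int(np.argmin(np.abs(wavelengths - lam2)))
    return ratio[i1] - ratio[i2]


# Calibration with pure LD standards (1.0 to 9.0 µg/mL).
std_conc = np.array([1.0, 3.0, 5.0, 7.0, 9.0])
std_dp = np.array([ratio_difference(c * a_ld) for c in std_conc])
slope, intercept = np.polyfit(std_conc, std_dp, 1)

# Laboratory-prepared mixtures: (LD, DMA) concentrations in µg/mL.
mixtures = [(2.0, 1.0), (4.0, 4.0), (8.0, 2.0)]
recoveries = []
for c_ld, c_dma in mixtures:
    mixture_spectrum = c_ld * a_ld + c_dma * a_dma
    found = (ratio_difference(mixture_spectrum) - intercept) / slope
    recoveries.append(100.0 * found / c_ld)

for (c_ld, c_dma), rec in zip(mixtures, recoveries):
    print(f"LD {c_ld} + DMA {c_dma} µg/mL -> recovery {rec:.2f}%")
```

Because the simulated spectra are noise-free and strictly additive, the recoveries come out at essentially 100% regardless of the DMA level. With measured spectra, any deviation from 100% reflects noise and non-ideal behaviour.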

Protocol 2: Specificity Assessment Using the Double Divisor-Ratio Spectra Derivative Method

This protocol is adapted from a study simultaneously assessing Nebivolol (NEB) and Valsartan (VAL) in the presence of a Valsartan impurity (VAL-D) [30].

- Objective: To determine the specificity of a method for quantifying one active pharmaceutical ingredient (API) in a mixture that also contains a second API and a known impurity.

- Materials and Reagents:

- Standard stock solutions: NEB (100 µg/mL), VAL (100 µg/mL), and VAL-D (100 µg/mL) in methanol.

- Laboratory-prepared mixtures: Containing NEB, VAL, and VAL-D in varying, known proportions.

- Instrumentation: UV-Vis spectrophotometer.

- Procedure for Assessing Nebivolol (NEB):

- Scan and store the zero-order spectra of NEB standard solutions (2.5–70 µg/mL) and the laboratory-prepared mixtures.

- Create a "double divisor" by adding the stored spectra of VAL (10 µg/mL) and VAL-D (10 µg/mL).

- Divide the stored spectra of the NEB standards and the mixtures by this double divisor.

- Obtain the first derivative of the resulting ratio spectra.

- Measure the first derivative amplitude at a wavelength specific to NEB (e.g., 297 nm).

- Construct a calibration curve by plotting the derivative amplitudes against the concentrations of pure NEB.

- Process the laboratory-prepared mixtures identically and use the calibration curve to determine the concentration of NEB.

- Specificity Evaluation:

- The method's specificity is confirmed by the ability to quantify NEB in the mixtures with high accuracy, demonstrating that the signal contribution from VAL and VAL-D has been effectively eliminated by the double divisor and derivative process.

- Report the recovery percentages for NEB from all mixtures, along with precision data (e.g., %RSD).

Diagram 1: Workflow for specificity assessment using mathematical spectrophotometry.

The Scientist's Toolkit: Key Reagent Solutions

The following table details essential reagents and materials commonly used in advanced spectrophotometric analysis for assessing specificity.

Table 2: Key Research Reagent Solutions for Spectrophotometric Specificity Assessment

| Reagent/Material | Function & Application | Example Use Case |

|---|---|---|

| Complexing Agents (e.g., Ferric Chloride) [4] | Form stable, colored complexes with analytes to enhance absorbance and sensitivity for compounds that do not absorb strongly. | Analysis of phenolic drugs like Paracetamol. |

| Oxidizing/Reducing Agents (e.g., Ceric Ammonium Sulfate) [4] | Modify the oxidation state of the analyte to create a product with different, measurable absorbance properties. | Determination of Ascorbic Acid (Vitamin C). |

| Diazotization Reagents (e.g., NaNO₂ + HCl) [4] | Convert primary aromatic amines in pharmaceuticals into diazonium salts, which form colored azo compounds for detection. | Analysis of sulfonamide antibiotics. |

| pH Indicators (e.g., Bromocresol Green) [4] | Change color based on solution pH, useful for analyzing acid-base equilibria of drugs and ensuring formulation pH. | Assay of weak acids in pharmaceutical formulations. |

| High-Purity Solvents (e.g., Methanol, Acetonitrile) [30] [31] | Dissolve samples and standards without introducing interfering absorbances in the spectral region of interest. | Used as a solvent in the analysis of Nebivolol/Valsartan and Lidocaine/Oxytetracycline. |

| Standard Reference Materials (SRMs) [32] | Calibrate and validate spectrophotometers to ensure wavelength and photometric accuracy, a foundational step for reliable specificity. | NIST provides SRMs for instrument characterization. |

Data Analysis and Interpretation

Validation Parameters for Specificity

After executing the experimental protocols, the data must be rigorously analyzed to conclusively demonstrate specificity. The following table outlines the key validation parameters and acceptance criteria.

Table 3: Key Parameters for Validating Specificity in Spectrophotometric Methods

| Validation Parameter | Assessment Method | Typical Acceptance Criteria |

|---|---|---|

| Accuracy (Recovery) | Analyze laboratory-prepared mixtures with known concentrations of the analyte and interferents. | Recovery of 98–102% for the analyte of interest. |

| Precision | Repeat the analysis of the specificity mixtures multiple times (e.g., n=3). | Relative Standard Deviation (RSD) ≤ 2.0%. |

| Linearity | Verify that the calibration curve for the analyte remains linear in the presence of interferents. | Correlation coefficient (r) ≥ 0.999. |

| Limit of Detection (LOD) / Quantification (LOQ) | Ensure the method can detect and quantify the analyte at low levels despite potential interference. | Signal-to-Noise ratio of 3:1 for LOD and 10:1 for LOQ. |
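A minimal sketch of how the recovery, precision, and linearity criteria in Table 3 might be checked programmatically is given below. It assumes NumPy; the replicate recoveries and calibration readings are hypothetical values invented for illustration, and the helper `percent_rsd` is not taken from any cited source.

```python
import numpy as np


def percent_rsd(values):
    """Relative standard deviation in percent, using the sample standard deviation."""
    values = np.asarray(values, dtype=float)
    return 100.0 * values.std(ddof=1) / values.mean()


# Hypothetical data: three replicate recoveries (%) and a five-point calibration.
replicate_recoveries = [99.4, 100.2, 100.9]
conc = [2.0, 4.0, 6.0, 8.0, 10.0]                 # µg/mL
absorbance = [0.101, 0.199, 0.302, 0.398, 0.501]

mean_recovery = float(np.mean(replicate_recoveries))
rsd = percent_rsd(replicate_recoveries)
r = float(np.corrcoef(conc, absorbance)[0, 1])    # Pearson correlation coefficient

# Acceptance criteria as listed in Table 3.
checks = {
    "recovery within 98-102%": 98.0 <= mean_recovery <= 102.0,
    "RSD <= 2.0%": rsd <= 2.0,
    "r >= 0.999": r >= 0.999,
}

for name, passed in checks.items():
    print(f"{name}: {'pass' if passed else 'fail'}")
```

The sample standard deviation (`ddof=1`) is used because replicate sets in validation work are small.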

Case Study: Quantitative Results

A study on Nebivolol (NEB), Valsartan (VAL), and its impurity (VAL-D) provides concrete examples of successful specificity assessment [30].

Table 4: Specificity Assessment Data for Nebivolol and Valsartan in Presence of an Impurity [30]

| Analyte | Technique Used | Concentration Taken (µg/mL) | Concentration Found (µg/mL) | Recovery (%) |

|---|---|---|---|---|

| NEB | DD-RS-DS | 5.00 | 4.95 | 99.00 |

| NEB | DD-RS-DS | 30.00 | 30.27 | 100.90 |

| VAL | DD-RS-DS | 20.00 | 19.80 | 99.00 |

| VAL | DD-RS-DS | 40.00 | 40.40 | 101.00 |

| VAL-D | DD-RS-DS | 20.00 | 19.82 | 99.10 |

| VAL-D | DD-RS-DS | 60.00 | 60.18 | 100.30 |

The high recovery percentages (all between 99% and 101%) for each component in the mixture, as determined by the Double Divisor-Ratio Spectra Derivative method, provide strong quantitative evidence that the method is specific and that the analyses are free from interference from the other compounds present.
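The recovery values in Table 4 follow from the simple ratio of concentration found to concentration taken. The short sketch below recomputes them from the tabulated values; the variable and function names are illustrative only.

```python
# (analyte, concentration taken in µg/mL, concentration found in µg/mL), from Table 4
rows = [
    ("NEB", 5.00, 4.95),
    ("NEB", 30.00, 30.27),
    ("VAL", 20.00, 19.80),
    ("VAL", 40.00, 40.40),
    ("VAL-D", 20.00, 19.82),
    ("VAL-D", 60.00, 60.18),
]


def recovery_percent(taken, found):
    """Percent recovery = 100 * found / taken."""
    return 100.0 * found / taken


recoveries = [round(recovery_percent(taken, found), 2) for _, taken, found in rows]

for (analyte, taken, found), rec in zip(rows, recoveries):
    print(f"{analyte:6s} taken {taken:6.2f}  found {found:6.2f}  recovery {rec:6.2f}%")

# Check against the 98-102% criterion from Table 3.
all_within_limits = all(98.0 <= rec <= 102.0 for rec in recoveries)
```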

In the realm of analytical chemistry, particularly in spectrophotometric method validation, establishing linearity and range constitutes a fundamental step in demonstrating that an analytical method provides results that are directly proportional to the concentration of the analyte in samples within a given range [33]. The calibration curve serves as the critical mathematical model that translates instrumental response—such as absorbance in ultraviolet-visible (UV-Vis) spectrophotometry—into meaningful quantitative data about analyte concentration [34] [35]. For researchers, scientists, and drug development professionals, a properly constructed and validated calibration curve is not merely a regulatory formality but a cornerstone of data integrity, without which analytical results remain questionable and unreliable.

This protocol outlines comprehensive procedures for developing, evaluating, and validating calibration curves specifically within the context of spectrophotometric techniques. The foundation of these procedures rests on the Beer-Lambert law, which states that the absorbance (A) of a solution is directly proportional to the concentration (c) of the absorbing species, as expressed by the equation A = εbc, where ε is the molar absorptivity and b is the path length [23]. By meticulously following the guidelines presented herein, laboratory personnel can generate robust calibration models that satisfy stringent method validation requirements under international standards such as ICH Q2(R1) and ISO/IEC 17025 [33] [36].

Theoretical Foundations

Principles of Spectrophotometric Quantification

In UV-Vis spectrophotometry, the quantitative relationship between analyte concentration and light absorption forms the theoretical basis for calibration curve development. When monochromatic light passes through a solution containing the analyte, the amount of light absorbed is measured as absorbance, which exhibits a linear relationship with concentration across a specified range [34] [23]. This relationship holds true provided that the analyte follows Beer-Lambert law behavior, which requires the use of monochromatic light, appropriate solvent systems, and concentrations that do not exhibit molecular interactions or instrumental saturation effects.

The fundamental equation governing this relationship is:

\[ A = \varepsilon b c \]

Where:

- A = Absorbance (unitless)

- ε = Molar absorptivity (L·mol⁻¹·cm⁻¹)

- b = Path length (cm)

- c = Concentration (mol·L⁻¹)

For calibration purposes, this is often simplified to the linear model:

\[ y = mx + b \]

Where y represents the instrumental response (absorbance), m represents the sensitivity (slope), x represents the analyte concentration, and b represents the y-intercept accounting for any systematic background response [37] [35]. Note that b in this linear model denotes the intercept and is not the path length b of the Beer-Lambert equation.
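As a minimal sketch of the linear model in practice, the example below fits a calibration line by ordinary least squares and back-calculates an unknown concentration from its absorbance. It assumes NumPy, and the standard concentrations and absorbance readings are hypothetical values chosen for illustration.

```python
import numpy as np

# Hypothetical calibration standards.
conc = np.array([2.0, 4.0, 6.0, 8.0, 10.0])                 # µg/mL
absorbance = np.array([0.105, 0.203, 0.298, 0.402, 0.497])

# Ordinary least-squares fit of absorbance = slope * conc + intercept.
slope, intercept = np.polyfit(conc, absorbance, 1)

# Coefficient of determination for the fitted line.
predicted = slope * conc + intercept
ss_res = np.sum((absorbance - predicted) ** 2)
ss_tot = np.sum((absorbance - absorbance.mean()) ** 2)
r_squared = 1.0 - ss_res / ss_tot


def concentration_from_absorbance(a):
    """Invert the calibration line to estimate concentration from absorbance."""
    return (a - intercept) / slope


unknown = concentration_from_absorbance(0.350)
print(f"slope={slope:.5f}, intercept={intercept:.4f}, R^2={r_squared:.5f}")
print(f"estimated concentration for A=0.350: {unknown:.2f} µg/mL")
```

The fit is unweighted, which is a reasonable starting point when the variance of the response is roughly constant across the range.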

Calibration Model Selection