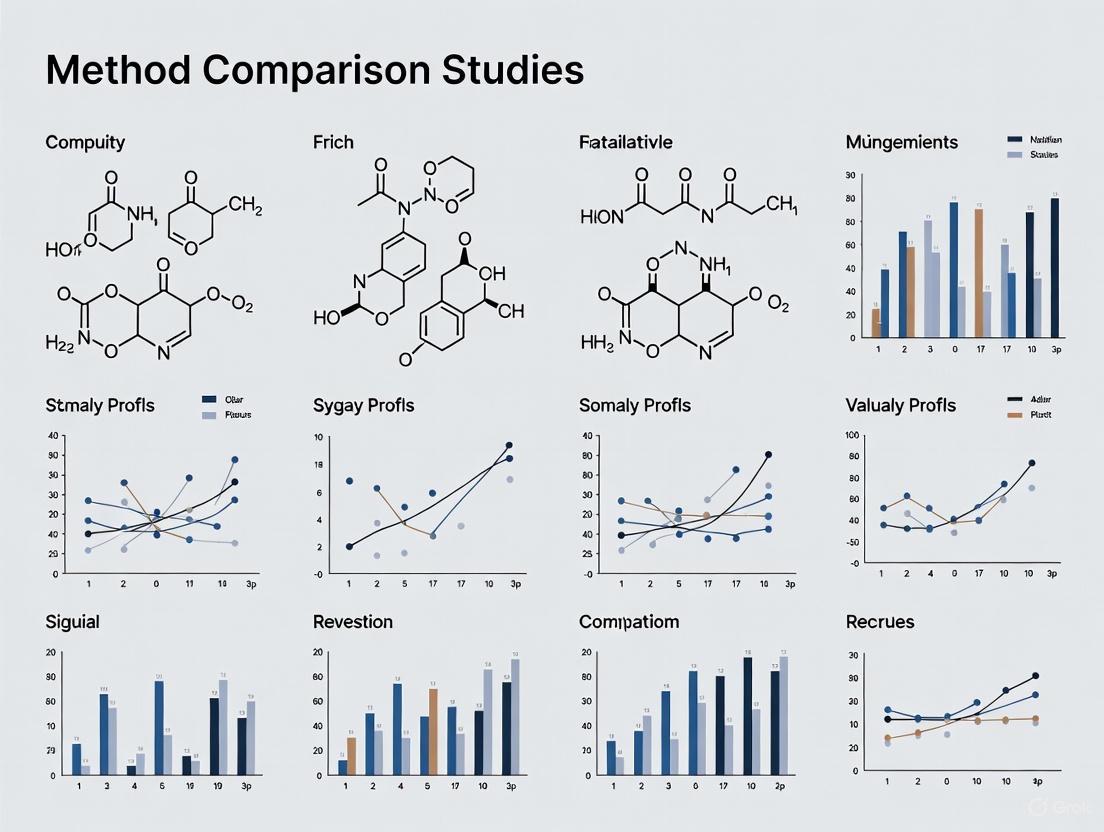

A Practical Guide to Minimizing Systematic Error in Method Comparison Studies for Robust Biomedical Research

Abstract

This article provides a comprehensive framework for researchers and drug development professionals to understand, identify, and minimize systematic error (bias) in method comparison studies. Covering foundational concepts to advanced troubleshooting, it details rigorous experimental designs for detecting constant and proportional bias, strategies for handling method failure, and robust statistical techniques for validation. By synthesizing current best practices, this guide aims to enhance the reliability and accuracy of analytical data, which is fundamental for valid scientific conclusions and sound clinical decision-making.

Understanding Systematic Error: Definitions, Sources, and Impact on Data Integrity

Troubleshooting Guides

Guide 1: Identifying and Diagnosing Systematic Error in Your Data

Problem: Your experimental results are consistently skewed away from the known true value or results from a standard method, even after repeating the experiment.

Solution: Follow this diagnostic pathway to confirm the presence and identify the source of systematic error.

Diagnostic Steps:

- Calibrate Your Instrument: Use a traceable standard reference material (SRM) with a known concentration. If your measurements consistently read higher or lower than the standard's value by a fixed amount, a constant systematic error (offset error) is likely present [1] [2]. If the difference changes proportionally with the value, you may have a proportional systematic error (scale factor error) [3] [1].

- Method Comparison Analysis: Analyze a minimum of 40 patient specimens covering the entire analytical range using both your test method and a validated comparative method (e.g., a reference method) [4]. The data analysis should involve more than just a correlation coefficient.

- Create a Difference Plot: Plot the differences between the test and comparative method (test - comparative) on the y-axis against the average of the two values or the comparative method value on the x-axis [4]. Visual inspection of this plot is a fundamental diagnostic tool.

- Interpret the Pattern:

- If the data points on the difference plot are scattered randomly around the zero line, systematic error is minimal.

- If all data points are consistently above or below the zero line, a constant systematic error is present [4] [5].

- If the data points show a trend (e.g., positive differences at low concentrations and negative differences at high concentrations), a proportional systematic error is present [4].
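As a numeric companion to visual inspection, the sketch below summarizes a difference plot drawn against the comparative-method value. The helper name `difference_plot_summary` and the use of a least-squares slope of the differences as a trend indicator are illustrative assumptions, not a formal test for bias; the plot itself should still be inspected.

```python
from statistics import mean


def difference_plot_summary(test, comparative):
    """Summarize differences (test - comparative) against the comparative value.

    Hypothetical helper for illustration; it supplements, not replaces, the plot.
    """
    if len(test) != len(comparative) or len(test) < 3:
        raise ValueError("need paired results for at least 3 specimens")
    diffs = [t - c for t, c in zip(test, comparative)]
    x_bar = mean(comparative)
    d_bar = mean(diffs)
    sxx = sum((x - x_bar) ** 2 for x in comparative)
    if sxx == 0:
        raise ValueError("comparative values must span a range")
    # Least-squares slope of the differences on the comparative value:
    # near 0 means no trend; a clearly non-zero slope suggests proportional error.
    sxd = sum((x - x_bar) * (d - d_bar) for x, d in zip(comparative, diffs))
    # Share of points above zero: values near 0 or 1 suggest a one-sided offset.
    share_above = sum(d > 0 for d in diffs) / len(diffs)
    return {
        "mean_difference": d_bar,
        "trend_slope": sxd / sxx,
        "share_above_zero": share_above,
    }


comparative = [50.0, 100.0, 150.0, 200.0, 250.0]
offset_method = [c + 5.0 for c in comparative]   # hypothetical constant error
scaled_method = [1.10 * c for c in comparative]  # hypothetical proportional error
print(difference_plot_summary(offset_method, comparative))
print(difference_plot_summary(scaled_method, comparative))
```

Note that a purely proportional error can also put every point on one side of zero, which is why the trend slope is reported alongside the mean difference.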

Guide 2: Resolving a High Systematic Error in a Method Comparison Study

Problem: A method comparison experiment has revealed a medically significant systematic error that makes your new method unacceptable for use.

Solution: Systematically investigate and correct the primary sources of bias.

Resolution Steps:

- Inspect and Calibrate Equipment: Recalibrate all instruments, including balances, pipettes, and volumetric flasks, against certified standards before the experiment and at regular intervals [6]. Check for instrument drift over time.

- Review Analytical Method: Scrutinize your method for inherent flaws, such as incomplete chemical reactions, incorrect sampling procedures, or unaccounted-for interferences from the sample matrix or reagents [6]. Perform a recovery experiment to check for specific interferences.

- Verify Reagents: Ensure all chemicals and reagents are pure, not decomposed, and correctly labeled [6]. Contaminated reagents are a common source of error.

- Standardize Analyst Technique: Implement detailed Standard Operating Procedures (SOPs) for both the method and instrument operation [6]. Provide training to eliminate analyst-specific errors, such as misreading menisci or improper instrument setup [7].

- Control the Environment: Document and control environmental factors like temperature, humidity, and electrical line voltage, as fluctuations can introduce systematic bias, especially in sensitive instrumental analyses [6] [7].

Frequently Asked Questions (FAQs)

Q1: Why can't we just use a larger sample size to eliminate systematic error, like we can with random error?

A: Systematic error (bias) cannot be reduced by increasing the sample size because it consistently pushes measurements in the same direction [5]. Every data point is skewed the same way, so averaging a larger number of them only gives a more precise, but still inaccurate, result. In contrast, random error causes variations both above and below the true value. These variations tend to cancel each other out when averaged over a large sample, bringing the average closer to the true value [3] [5].
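The small simulation below illustrates the point under assumed values: a hypothetical true value of 100, a constant bias of +3 and random noise with a standard deviation of 5. As the number of readings grows, the average settles on the biased value rather than the true one.

```python
import random
from math import fsum

TRUE_VALUE = 100.0  # hypothetical true concentration
BIAS = 3.0          # hypothetical constant systematic error
NOISE_SD = 5.0      # hypothetical random error (standard deviation)


def simulated_mean(n, seed=1):
    """Average of n simulated readings that carry both bias and random noise."""
    rng = random.Random(seed)
    readings = [TRUE_VALUE + BIAS + rng.gauss(0.0, NOISE_SD) for _ in range(n)]
    return fsum(readings) / n


for n in (10, 1_000, 100_000):
    # The scatter of the mean shrinks as n grows, but the mean converges
    # on TRUE_VALUE + BIAS, not on TRUE_VALUE.
    print(n, round(simulated_mean(n), 2))
```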

Q2: In a method comparison study, what is a more reliable statistic than the correlation coefficient (r) for assessing agreement?

A: While a high correlation coefficient (e.g., r > 0.99) indicates a strong linear relationship, it does not prove the methods agree. A test method could consistently show results 20% higher than the reference method and still have a perfect correlation. For assessing agreement, it is preferable to use linear regression analysis to calculate the slope and y-intercept [4]. The slope indicates a proportional error, and the y-intercept indicates a constant error. You can then use these values to calculate the systematic error at critical medical decision concentrations [4].
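A quick numeric check makes the point concrete. Assuming a hypothetical test method that reads exactly 20% higher than the reference, the correlation is perfect while the mean difference is large; `pearson_r` is written out by hand only to keep the sketch dependency-free.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient, written out to avoid extra dependencies."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


reference = [40.0, 80.0, 120.0, 160.0, 200.0, 240.0]
test = [1.20 * v for v in reference]  # hypothetical method reading 20% high

r = pearson_r(reference, test)
mean_diff = mean([t - c for t, c in zip(test, reference)])
print(f"r = {r:.4f}, mean difference = {mean_diff:.1f}")
```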

Q3: What is the single most effective action to minimize systematic error in my experiments?

A: There is no single solution, but the most robust strategy is triangulation—using multiple, independent techniques or instruments to measure the same thing [3] [1] [5]. If different methods and instruments all yield convergent results, you can be more confident that systematic error is minimal. This should be complemented by regular calibration of equipment against certified standards and the use of randomization in sampling and assignment to balance out unknown biases [3].

Q4: How can an experimenter's behavior introduce systematic error, and how can we prevent it?

A: Experimenters can unintentionally influence results through their spoken language, body language, or facial expressions, which can shape participant responses or performance (a form of response bias) [7]. To prevent this:

- Use masking (blinding) so neither the participant nor the experimenter knows which treatment group or condition is being tested [3].

- Provide experimenters with strict, written Standard Operating Procedures (SOPs) to ensure consistency [6] [7].

- Use pre-recorded instructions for all participants to guarantee identical delivery [7].

Data Presentation

Table 1: Characterizing Random and Systematic Error

This table summarizes the core differences between the two error types, which is fundamental for troubleshooting.

| Feature | Random Error | Systematic Error |
|---|---|---|
| Definition | Unpredictable fluctuations causing variation around the true value [3] [5] | Consistent, reproducible deviation from the true value [3] [7] |
| Effect on Data | Introduces variability or "noise"; affects precision [3] [5] | Introduces inaccuracy or "bias"; affects accuracy [3] [5] |
| Direction | Equally likely in both directions (high and low) [5] | Always in the same direction (consistently high or low) [7] |
| Reduced by | Taking repeated measurements, increasing sample size [3] [5] | Improving calibration, triangulation, blinding, randomization [3] [1] |
| Removed by Averaging? | Largely, since errors tend to cancel out over many measurements [3] [5] | No, averaging does not remove consistent bias [2] [5] |

Table 2: Essential Materials for a Method Comparison Study

This toolkit lists critical reagents and materials needed to conduct a robust comparison of methods experiment.

| Item | Function & Importance |
|---|---|
| Certified Reference Material (CRM) | A substance with a known, traceable quantity of analyte. Serves as the gold standard for calibrating instruments and assessing method accuracy [4] [6]. |
| Well-Characterized Patient Specimens | At least 40 specimens covering the entire analytical range of the method. They should represent the expected pathological spectrum to properly evaluate performance across all clinically relevant levels [4]. |
| Reference/Comparative Method | A method (preferably a recognized reference method) whose correctness is well-documented. Differences from this method are attributed to the test method's error [4]. |
| Calibrated Pipettes & Volumetric Flasks | Precisely calibrated glassware and pipettes are essential for accurate sample and reagent preparation. Uncalibrated tools are a primary source of systematic error [6]. |
| Statistical Software | Required for calculating linear regression statistics (slope, intercept) and creating difference plots, which are necessary for quantifying systematic error [4]. |

Experimental Protocol: The Comparison of Methods Experiment

Purpose: To estimate the inaccuracy or systematic error of a new test method by comparing it to a comparative method using real patient specimens [4].

Methodology:

Specimen Selection:

- Collect a minimum of 40 different patient specimens.

- Select specimens to cover the entire working range of the method.

- Ensure the specimens represent the spectrum of diseases and conditions expected in routine practice [4].

Experimental Procedure:

- Analyze each specimen using both the test method (new method under evaluation) and the comparative method (established method) [4].

- Analyze specimens in a single run or, preferably, over multiple days (minimum of 5 days is recommended) to account for day-to-day variability [4].

- Analyze each specimen by the test and comparative methods within a short time of each other (e.g., 2 hours) to ensure specimen stability, unless specific handling procedures (e.g., freezing) are validated [4].

- Ideally, perform duplicate measurements on different aliquots of the sample to help detect errors from sample mix-ups or transcription mistakes [4].

Data Analysis:

- Graphical Analysis: Create a difference plot (test result - comparative result vs. comparative result) to visually inspect for constant or proportional trends and identify outliers [4].

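- Statistical Calculation: Calculate linear regression statistics (slope b and y-intercept a) and use them to estimate the systematic error (SE) at each critical medical decision concentration (Xc): Yc = a + b × Xc, then SE = Yc - Xc [4].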

- Interpretation: The calculated systematic error (SE) should be compared to pre-defined, medically acceptable limits to judge the acceptability of the new method.
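The calculation can be sketched as follows, assuming hypothetical paired results with a built-in constant error of +4 units and a proportional error of +5%, hypothetical decision concentrations of 126 and 200, and a hypothetical allowable error of 12 units. Ordinary least squares is used here for simplicity; because `systematic_error_at` only needs the two coefficients, the slope and intercept could equally come from another regression model.

```python
from statistics import mean


def ols_fit(x, y):
    """Ordinary least-squares intercept and slope of y (test) on x (comparative)."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


def systematic_error_at(xc, intercept, slope):
    """SE at decision level Xc: Yc = a + b*Xc, then SE = Yc - Xc."""
    yc = intercept + slope * xc
    return yc - xc


comparative = [50.0, 100.0, 150.0, 200.0, 250.0, 300.0]
test = [4.0 + 1.05 * x for x in comparative]  # hypothetical test-method results
ALLOWABLE_ERROR = 12.0                         # hypothetical medically acceptable limit

a, b = ols_fit(comparative, test)
for xc in (126.0, 200.0):                      # hypothetical decision levels
    se = systematic_error_at(xc, a, b)
    verdict = "acceptable" if abs(se) <= ALLOWABLE_ERROR else "not acceptable"
    print(f"Xc={xc:g}: SE={se:.2f} ({verdict})")
```

With these assumed numbers the method passes at the lower decision level but not at the higher one, which is why SE is judged at each medical decision concentration rather than once overall.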

Systematic errors are consistent, reproducible inaccuracies that can compromise the validity of biomedical assay results. Unlike random errors, which vary unpredictably, systematic errors introduce bias in the same direction across measurements, potentially leading to false conclusions in method comparison studies and drug development research. This technical support guide identifies common sources of systematic error in biomedical testing and provides detailed troubleshooting methodologies to help researchers minimize these errors, thereby enhancing data quality and research outcomes.

Troubleshooting Guide: Identifying and Rectifying Systematic Errors

How do sample matrix effects cause systematic error in LC-MS/MS analyses?

Issue: Matrix effects represent a significant source of systematic error in liquid chromatography-tandem mass spectrometry (LC-MS/MS), particularly causing ion suppression or enhancement that compromises quantitative accuracy.

Background: Matrix effects occur when co-eluting components from biological samples alter the ionization efficiency of target analytes. In biomonitoring studies assessing exposure to environmental toxicants, these effects can lead to inaccurate measurements of target compounds if not properly characterized and controlled [8].

Primary Mechanisms:

- Electrospray Ionization (ESI) Vulnerability: ESI is particularly susceptible to ion suppression because matrix components can interfere with charge addition to analytes in the liquid phase or inhibit transfer of ions to the gas phase [8].

- Competition for Charge: Matrix components compete with target analytes for available charges in the liquid phase [8].

- Altered Droplet Properties: Interfering compounds increase viscosity and surface tension of ESI droplets, reducing analyte transfer efficiency to the gas phase [8].

Troubleshooting Protocol:

- Sample Preparation Assessment: Compare protein precipitation (PPT), liquid-liquid extraction (LLE), and solid-phase extraction (SPE) methods. Mixed-mode SPE sorbents combining reversed-phase and ion exchange mechanisms provide the cleanest extracts [9].

- Chromatographic Optimization: Manipulate mobile phase pH to alter the retention of basic compounds relative to phospholipids. Consider UPLC instead of HPLC, which has been reported to reduce matrix effects significantly [9].

- Ionization Technique Evaluation: Consider atmospheric pressure chemical ionization (APCI) as it is generally less susceptible to matrix effects compared to ESI [8].

- Matrix Effect Quantification: Use post-column infusion to monitor suppression/enhancement regions or prepare calibration standards in biological matrix to assess quantification accuracy [8].
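One way to put a number on the comparison between matrix-matched and solvent-based standards is to compare calibration slopes. The sketch below assumes the commonly used slope-ratio convention, matrix effect (%) = (slope in matrix / slope in solvent - 1) × 100, with hypothetical concentrations and responses; negative values indicate suppression and positive values enhancement.

```python
from statistics import mean


def slope(x, y):
    """Ordinary least-squares slope of y on x."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)


def matrix_effect_percent(conc, resp_solvent, resp_matrix):
    """Slope-ratio matrix effect: negative = suppression, positive = enhancement."""
    return (slope(conc, resp_matrix) / slope(conc, resp_solvent) - 1.0) * 100.0


conc = [1.0, 5.0, 10.0, 50.0, 100.0]  # hypothetical concentrations
solvent = [2.0 * c for c in conc]      # hypothetical responses, standards in neat solvent
matrix = [1.6 * c for c in conc]       # hypothetical responses, standards in extracted matrix
print(f"matrix effect = {matrix_effect_percent(conc, solvent, matrix):+.1f}%")
```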

What systematic errors arise from improper calibration practices?

Issue: Inaccurate calibration introduces systematic errors that propagate through all subsequent measurements, affecting method comparison studies.

Background: Calibration errors can occur from using improper standards, unstable reference materials, or incorrect calibration procedures. For example, in amino acid assay by ion-exchange chromatography, using different commercial standards led to systematic errors during sample calibration [10].

Troubleshooting Protocol:

- Reference Standard Verification: Use certified reference materials with documented purity. Verify any commercial standards against certified reference materials before use [10].

- Calibration Curve Validation: Establish multiple calibration points covering the entire analytical measurement range. Verify linearity through statistical analysis [11].

- Regular Recalibration: Implement a calibration schedule based on how heavily the instrument is used, environmental conditions, and required accuracy. Frequently used devices should be checked and recalibrated at regular intervals [11].

- Cross-Validation: Compare results with reference methods when possible. In method comparison studies, analyze at least 40 patient specimens covering the entire working range of the method [4].
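A simple practical check on linearity is to back-calculate each calibrator from the fitted line and look at its deviation from the nominal value. The sketch below assumes an unweighted straight-line fit and a hypothetical tolerance of 15%; it is a screening aid rather than a formal lack-of-fit test, and the response values are invented for illustration.

```python
from statistics import mean


def fit_line(x, y):
    """Unweighted least-squares intercept and slope."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


def flag_calibrators(nominal, response, tolerance_pct=15.0):
    """Return nominal levels whose back-calculated value deviates beyond tolerance."""
    intercept, slope = fit_line(nominal, response)
    flagged = []
    for n, r in zip(nominal, response):
        back_calc = (r - intercept) / slope
        deviation_pct = (back_calc - n) / n * 100.0
        if abs(deviation_pct) > tolerance_pct:
            flagged.append(n)
    return flagged


nominal = [1.0, 2.0, 5.0, 10.0, 20.0, 50.0]      # hypothetical calibrator levels
linear = [0.1 + 2.0 * n for n in nominal]        # hypothetical linear response
saturating = [2.0, 4.0, 10.0, 20.0, 40.0, 70.0]  # top calibrator falls off the line
print(flag_calibrators(nominal, linear))
print(flag_calibrators(nominal, saturating))
```

With the saturating set, the poorly fitting top calibrator drags the whole line, so several lower calibrators are flagged while the top one itself is not; this is one reason to inspect residuals rather than rely on a single summary statistic.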

How does sample preparation introduce systematic errors?

Issue: Sample preparation techniques can introduce systematic errors through analyte loss, contamination, or incomplete processing.

Background: Deproteinization of plasma for amino acid assays markedly increases the coefficient of variation in the determination of cystine, aspartic acid, and tryptophan. Hydrophobic amino acids are also lost during this process, particularly when the supernatant volume is small [10].

Troubleshooting Protocol:

- Deproteinization Optimization: For plasma amino acid assays, remove supernatant promptly after deproteinization. Delaying removal for 1 hour decreases tryptophan concentration [10].

- Internal Standard Selection: Use stable isotope-labeled internal standards that closely mimic analyte behavior. Note that correction for hydrophobic amino acid losses using internal standards may not be possible [10].

- Extraction Efficiency Determination: Calculate recovery percentages for each sample preparation method. Pure cation exchange SPE and mixed-mode SPE provide cleaner extracts with reduced matrix effects compared to protein precipitation [9].

- Process Standardization: Strictly control timing, temperature, and handling procedures across all samples to minimize variation.
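Recovery for a spiked sample is usually calculated as (result for spiked sample - result for unspiked sample) / amount added × 100. The sketch below applies that formula to two generic preparation methods; the method labels and all numbers are hypothetical and do not reflect reported performance.

```python
from statistics import mean


def percent_recovery(unspiked, spiked, added):
    """Recovery (%) of a known amount of analyte added to a sample."""
    if added <= 0:
        raise ValueError("added amount must be positive")
    return (spiked - unspiked) / added * 100.0


# Hypothetical replicate results: (unspiked, spiked, amount added), same units
results = {
    "method_A": [(12.0, 20.5, 10.0), (11.8, 20.1, 10.0), (12.2, 20.9, 10.0)],
    "method_B": [(12.1, 21.8, 10.0), (11.9, 21.6, 10.0), (12.0, 21.9, 10.0)],
}
for method, reps in results.items():
    recoveries = [percent_recovery(u, s, a) for u, s, a in reps]
    print(method, f"mean recovery = {mean(recoveries):.1f}%")
```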

What environmental and storage conditions cause systematic errors?

Issue: Improper sample storage and environmental control during analysis introduce systematic errors through analyte degradation or altered instrument performance.

Background: Systematic errors due to storage of plasma for amino acid assay include degradation of glutamine and asparagine at temperatures above -40°C. The concentration of cystine decreases considerably during storage of non-deproteinized plasma [10].

Troubleshooting Protocol:

- Temperature Control: Store deproteinized plasma at -40°C or lower to maintain amino acid concentrations for at least one year. For non-deproteinized plasma, neutralize samples before storage to minimize degradation [10].

- Environmental Monitoring: For multiparameter patient simulators, maintain operating temperature between 10°C and 40°C with humidity between 10% and 90% [11].

- Stability Testing: Conduct stability studies under various storage conditions. Do not rely on correcting results for storage-related changes after the fact; such correction is often impossible [10].

- Equipment Acclimation: Allow calibrators to stabilize at room temperature before use. Turn on multiparameter patient simulators 10 minutes before calibration [11].
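Stability results are often summarized as the percentage change from a baseline measured before storage. The sketch below uses hypothetical results for a labile analyte and a hypothetical acceptance limit of 10%; in a real study the limit should come from the method's predefined acceptance criteria.

```python
def percent_change(baseline, stored):
    """Change from the pre-storage baseline, in percent."""
    return (stored - baseline) / baseline * 100.0


def stability_check(baseline, stored_by_condition, limit_pct):
    """Map each storage condition to (percent change, within limit?)."""
    result = {}
    for cond, value in stored_by_condition.items():
        change = percent_change(baseline, value)
        result[cond] = (change, abs(change) <= limit_pct)
    return result


baseline = 600.0                          # hypothetical result before storage
stored = {"-20 C": 510.0, "-80 C": 594.0}  # hypothetical results after storage
for cond, (change, ok) in stability_check(baseline, stored, limit_pct=10.0).items():
    print(f"{cond}: {change:+.1f}% {'within limit' if ok else 'exceeds limit'}")
```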

How do instrument-related factors contribute to systematic errors?

Issue: Instrument characteristics and settings can introduce systematic errors through measurement limitations or inappropriate configuration.

Background: In biomedical testing, load cell measurement errors are common, especially in low-force measurements. The accuracy may be presented as a percentage of reading (relative accuracy) or percentage of full scale (fixed accuracy) [12].

Troubleshooting Protocol:

- Load Cell Specification: Verify load cell operating range matches experimental needs. For low-force measurements, ensure relative accuracy covers the expected measurement range [12].

- Bandwidth Configuration: Set appropriate system bandwidth based on event duration. For events lasting 0.2 seconds, ensure rise time is sufficiently fast to capture peak forces [12].

- Data Rate Optimization: Adjust sampling rate to capture critical events without creating excessively large files. Higher data rates do not necessarily yield additional information [12].

- Regular Performance Verification: Implement scheduled calibration checks for all instruments. For centrifuges, calibrate every six months using tachometers to verify RPM accuracy [11].
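The practical difference between the two accuracy specifications shows up at the low end of a load cell's range. The sketch assumes a hypothetical 1000 N load cell and two hypothetical specifications, 0.5% of reading and 0.1% of full scale, and compares the worst-case error each allows at a 10 N measurement.

```python
def error_percent_of_reading(reading, pct):
    """Worst-case error for a relative (percent-of-reading) specification."""
    return abs(reading) * pct / 100.0


def error_percent_of_full_scale(full_scale, pct):
    """Worst-case error for a fixed (percent-of-full-scale) specification."""
    return full_scale * pct / 100.0


FULL_SCALE_N = 1000.0  # hypothetical load cell capacity
reading_n = 10.0       # hypothetical low-force measurement

rel = error_percent_of_reading(reading_n, 0.5)
fixed = error_percent_of_full_scale(FULL_SCALE_N, 0.1)
print(f"percent of reading:    +/-{rel:.2f} N ({rel / reading_n:.1%} of the reading)")
print(f"percent of full scale: +/-{fixed:.2f} N ({fixed / reading_n:.1%} of the reading)")
```

Although 0.1% sounds tighter than 0.5%, the fixed specification allows a 1 N error here, which is 10% of the measured force.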

Systematic Error Comparison Tables

Table 1: Common Systematic Errors in Biomedical Testing and Their Characteristics

| Error Source | Impact on Results | Detection Method | Common Affected Techniques |
|---|---|---|---|
| Matrix Effects | Ion suppression/enhancement | Post-column infusion | LC-ESI-MS/MS |
| Improper Calibration | Constant or proportional bias | Method comparison | All quantitative techniques |
| Sample Preparation | Analyte loss/contamination | Recovery experiments | Sample extraction methods |
| Storage Conditions | Analyte degradation | Stability studies | Biobank samples, labile analytes |
| Instrument Configuration | Measurement inaccuracy | Reference materials | Mechanical testing, centrifugation |

Table 2: Systematic Error Management Strategies Across Experimental Phases

| Experimental Phase | Preventive Strategy | Corrective Action | Validation Approach |
|---|---|---|---|
| Pre-Analytical | Standardized SOPs | Sample re-preparation | Process validation |
| Calibration | Certified reference materials | Curve re-fitting | Accuracy verification |
| Analysis | Internal standards | Data normalization | Quality controls |
| Post-Analytical | Statistical review | Data transformation | Method comparison |

Experimental Workflows for Systematic Error Minimization

Sample Preparation and Analysis Workflow

Method Comparison Study Design

Research Reagent Solutions for Error Reduction

Table 3: Essential Research Reagents and Materials for Systematic Error Management

| Reagent/Material | Function | Application Example | Error Mitigated |
|---|---|---|---|
| Certified Reference Standards | Calibration and accuracy verification | Preparing calibration curves | Calibration bias |
| Stable Isotope-Labeled Internal Standards | Compensation for sample preparation losses | LC-MS/MS quantification | Matrix effects, recovery variations |
| Mixed-Mode SPE Sorbents | Comprehensive sample cleanup | Biological sample preparation | Phospholipid matrix effects |
| Quality Control Materials | Monitoring analytical performance | Process verification | Instrument drift, reagent degradation |
| Matrix-Matched Calibrators | Accounting for matrix effects | Quantitative bioanalysis | Ion suppression/enhancement |

Frequently Asked Questions

How can I determine if systematic error is affecting my assay results?

Systematic error should be suspected when consistent bias is observed across multiple measurements. Detection methods include: (1) analyzing certified reference materials with known concentrations; (2) performing method comparison studies with reference methods; (3) evaluating recovery of spiked standards; and (4) analyzing quality control samples over time. A minimum of 40 patient specimens should be tested in method comparison studies, selected to cover the entire working range and representing the spectrum of expected sample types [4].

What is the most effective approach to minimize matrix effects in LC-MS/MS?

A systematic, comprehensive strategy provides the most effective approach: (1) utilize mixed-mode solid-phase extraction (combining reversed-phase and ion exchange mechanisms) for cleaner extracts; (2) optimize mobile phase pH to separate analytes from phospholipids; (3) implement UPLC technology for improved resolution; and (4) consider atmospheric pressure chemical ionization (APCI), which is generally less susceptible to matrix effects, as an alternative to electrospray ionization. Protein precipitation alone is the least effective sample preparation technique and often results in significant matrix effects [9].

How often should calibration be performed to minimize systematic error?

Calibration frequency depends on the severity of instrument use, environmental conditions, and required accuracy. Frequently used devices should be checked and recalibrated regularly. Specific intervals vary by instrument; for example, centrifuges should be calibrated every six months and the calibration documented on a Maintenance Log [11]. The schedule should be based on stability data and quality control performance.

Can statistical methods correct for systematic errors after data collection?

While some statistical approaches can help identify and partially adjust for systematic errors, prevention during experimental design is far more effective. Empirical calibration using negative controls (outcomes not affected by treatment) and positive controls (outcomes with known effects) can calibrate p-values and confidence intervals in observational studies [13]. However, statistical correction cannot completely eliminate systematic errors, particularly when their sources are not fully understood.
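The idea behind empirical calibration can be sketched in a few lines. Negative-control estimates (log effect sizes whose true value should be zero) are used to estimate the mean and spread of systematic error, and this "empirical null" then replaces the theoretical null when computing a p-value. This is a simplified method-of-moments version written for illustration; published implementations fit the empirical null by maximum likelihood, and all numbers below are hypothetical.

```python
from math import erfc, sqrt
from statistics import mean, variance


def fit_empirical_null(nc_estimates, nc_std_errors):
    """Mean and SD of systematic error from negative controls (method of moments)."""
    mu = mean(nc_estimates)
    # Spread beyond what the controls' own sampling error would explain.
    excess = variance(nc_estimates) - mean([se ** 2 for se in nc_std_errors])
    return mu, sqrt(max(excess, 0.0))


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return erfc(abs(z) / sqrt(2.0))


def calibrated_p(estimate, std_error, mu, sigma):
    """p-value against the empirical null instead of a null of exactly zero."""
    return two_sided_p((estimate - mu) / sqrt(sigma ** 2 + std_error ** 2))


# Hypothetical negative-control log risk ratios and their standard errors
nc_log_rr = [0.1, 0.3, 0.2, 0.4, 0.0]
nc_se = [0.05] * 5
mu, sigma = fit_empirical_null(nc_log_rr, nc_se)

log_rr, se = 0.5, 0.1  # hypothetical estimate for the outcome of interest
print(f"traditional p = {two_sided_p(log_rr / se):.2g}")
print(f"calibrated p  = {calibrated_p(log_rr, se, mu, sigma):.2g}")
```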

What commonly overlooked sources of systematic error should I check?

Commonly overlooked sources include: (1) environmental conditions during testing, such as temperature variations affecting material properties; (2) sample storage conditions leading to analyte degradation; (3) instrument bandwidth and data rate settings inappropriate for measurement speed; and (4) load cell characteristics mismatched to force measurement requirements. For example, testing medical consumables at room temperature rather than physiological temperature (37°C) can drastically affect results for catheters, gloves, and tubing [12].

Troubleshooting Guides

Problem: My experimental results are consistently skewed away from the known true value.

Diagnosis: This is a classic symptom of systematic error, a fixed deviation inherent in each measurement due to flaws in the instrument, procedure, or study design [14] [15]. Unlike random error, it cannot be reduced by simply repeating measurements [16].

Solution: Execute the following troubleshooting workflow to identify and correct the error.

Detailed Corrective Actions:

- Step 1: Verify Instrument Calibration: Systematic error often stems from poorly calibrated instruments [17] [14]. Use standard weights or certified reference materials to check your equipment. If a bias is found, apply a correction factor to all future measurements and include the uncertainty of this correction in your overall uncertainty budget [14].

- Step 2: Control Environmental Errors: External factors like temperature fluctuations, humidity, or vibration can introduce systematic bias [17] [15]. Monitor the environment and implement controls to maintain stable conditions throughout the experiment.

- Step 3: Standardize Experimental Procedure: Procedural errors from inconsistent methods are a common source of bias [15]. Develop and document a Standard Operating Procedure (SOP). Train all personnel to ensure the procedure is applied uniformly, eliminating variations in how measurements are taken or data is recorded (transcriptional error) [15].

- Step 4: Implement Blinding Techniques: Observer bias occurs when the researcher's expectations influence measurements [17] [16]. Use blinding (or masking) so that personnel measuring outcomes are unaware of the sample's group assignment or the expected results [16].

- Step 5: Use Certified Reference Materials (CRMs): The most robust way to detect systematic error is to measure a CRM with a known property value [14]. A significant difference between your result and the certified value confirms a systematic error, allowing for quantification and correction.
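The comparison with a certified value can be sketched as below. It assumes one common approach: the bias is judged significant when its absolute value exceeds k times the combined standard uncertainty of the certified value and the mean of n replicates, with a coverage factor k = 2, and any correction is applied additively. The replicate values and certificate uncertainty are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def crm_bias_check(replicates, certified_value, u_certified, k=2.0):
    """Estimate bias against a CRM and the uncertainty any correction carries."""
    n = len(replicates)
    bias = mean(replicates) - certified_value
    # Combined standard uncertainty of the bias: certificate + mean of replicates.
    u_bias = sqrt(u_certified ** 2 + stdev(replicates) ** 2 / n)
    return {
        "bias": bias,
        "u_bias": u_bias,
        "significant": abs(bias) > k * u_bias,
        # Added to future results if a correction is applied; u_bias then
        # belongs in the uncertainty budget.
        "correction": -bias,
    }


replicates = [10.32, 10.28, 10.35, 10.30, 10.31]  # hypothetical CRM results
print(crm_bias_check(replicates, certified_value=10.00, u_certified=0.02))
```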

Guide 2: Mitigating Systematic Error in Clinical and Diagnostic Decisions

Problem: Diagnostic tests or clinical decisions are consistently inaccurate, leading to missed or delayed diagnoses.

Diagnosis: This indicates systematic error in a clinical context, often manifesting as cognitive bias in decision-making or information bias from flawed diagnostic systems [18] [19].

Solution: Implement strategies targeting cognitive processes and system-level checks.

Detailed Corrective Actions:

- Strategy: Deploy Clinical Decision Support Systems (CDSS): These technology-based systems provide alerts for potential issues like drug-drug interactions or reminders for necessary follow-up care [20] [21]. Evidence shows CDSS can reduce medication errors and prevent adverse drug events by providing a systematic check against human oversight [20].

- Strategy: Implement Cognitive Forcing: This involves teaching clinicians to recognize their own cognitive biases (e.g., anchoring, confirmation bias) and using metacognition (thinking about one's thinking) to challenge initial diagnoses [19]. Employing checklists for differential diagnosis is a practical cognitive forcing strategy.

- Strategy: Establish Diagnostic Audit Systems: Implement systems that provide feedback on diagnostic performance [18]. Trigger algorithms, for example, can automatically review records to identify patients with potential delayed diagnoses (e.g., those returning to the emergency department within days), allowing for review and correction of diagnostic processes [18].
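The trigger-algorithm idea can be sketched as a simple scan over emergency department visit dates. The record layout (patient ID and visit date pairs), the function name and the 10-day window are assumptions for illustration; real trigger tools run against clinical databases and may apply further criteria, and every flagged case still needs clinical review.

```python
from datetime import date, timedelta

RETURN_WINDOW = timedelta(days=10)  # hypothetical return-visit window


def flag_early_returns(visits, window=RETURN_WINDOW):
    """Flag consecutive ED visits by the same patient that fall within `window`.

    `visits` is an iterable of (patient_id, visit_date) pairs (hypothetical layout).
    """
    by_patient = {}
    for patient_id, visit_date in visits:
        by_patient.setdefault(patient_id, []).append(visit_date)
    flagged = []
    for patient_id, dates in by_patient.items():
        dates.sort()
        for first, second in zip(dates, dates[1:]):
            if second - first <= window:
                flagged.append((patient_id, first, second))
    return flagged


visits = [
    ("A", date(2024, 1, 1)), ("A", date(2024, 1, 8)),
    ("B", date(2024, 1, 1)), ("B", date(2024, 2, 1)),
]
for case in flag_early_returns(visits):
    print("review:", case)
```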

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between systematic error and random error?

| Aspect | Systematic Error (Bias) | Random Error |
|---|---|---|
| Cause | Flaw in instrument, method, or observer [14] [15] | Unpredictable, chance variations [17] [14] |
| Impact | Consistent offset from true value; affects accuracy [14] | Scatter in repeated measurements; affects precision [17] [14] |
| Reduction | Improved design, calibration, blinding [16] | More measurements or replication [15] |
| Quantification | Difficult to detect statistically; requires comparison to a standard [14] | Quantified by standard deviation or confidence intervals [14] |

Q2: How can I quantify the impact of a systematic error on my results?

Systematic error can be represented mathematically. For instance, in epidemiological studies, the observed risk ratio ($RR_{obs}$) can be expressed as:

$$RR_{obs} = RR_{true} \times Bias$$

where $Bias$ represents the systematic error. If $Bias = 1$, there is no error; if $Bias > 1$, the observed risk ratio overestimates the true value; and if $Bias < 1$, it underestimates it [16]. In engineering, the combined systematic error ($\Delta M$) of a measurement $M$ that is a function of several variables ($x, y, z, \ldots$) can be estimated by adding the individual systematic errors in quadrature:

$$\Delta M = \sqrt{\delta x^2 + \delta y^2 + \delta z^2}$$

where $\delta x$, $\delta y$ and $\delta z$ are each variable's systematic error expressed as its effect on $M$ [14]. Adding the absolute contributions linearly instead gives a worst-case (maximum) estimate.
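Both expressions can be evaluated directly. The sketch below assumes a hypothetical density measurement, rho = m / V, where each variable's systematic error is first converted into its effect on rho through the partial derivative, and a hypothetical observed risk ratio with an assumed bias factor.

```python
from math import sqrt


def combine_systematic(contributions):
    """Quadrature (combined) and linear (worst-case) sums of error contributions."""
    quadrature = sqrt(sum(c ** 2 for c in contributions))
    worst_case = sum(abs(c) for c in contributions)
    return quadrature, worst_case


# Hypothetical density measurement rho = m / V
m, V = 10.0, 5.00    # g, mL
dm, dV = 0.02, 0.01  # hypothetical systematic errors of balance and flask
contrib_m = dm / V           # |d(rho)/dm| * dm
contrib_V = m / V ** 2 * dV  # |d(rho)/dV| * dV
quad, worst = combine_systematic([contrib_m, contrib_V])
print(f"rho = {m / V:.3f} g/mL, combined = {quad:.4f}, worst case = {worst:.4f}")

# Hypothetical epidemiological example: RR_obs = RR_true * Bias
rr_obs, bias_factor = 1.8, 1.2
print(f"bias-adjusted RR = {rr_obs / bias_factor:.2f}")
```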

Q3: What are the real-world consequences of systematic error in drug development?

Systematic error in drug development can lead to incorrect conclusions about a drug's safety or efficacy, potentially resulting in the pursuit of ineffective compounds or the failure to identify toxic effects. This wastes immense resources. Conversely, using Model-Informed Drug Development (MIDD) approaches, which systematically integrate data to quantify benefit/risk, has been shown to yield significant savings, reducing cycle times by approximately 10 months and cutting costs by about $5 million per program by improving trial efficiency and decision-making [22].

Q4: What are the main types of systematic error (bias) in research on human subjects?

The three primary types are:

- Selection Bias: Occurs when there is a systematic difference between the characteristics of those selected for the study and those who are not [16].

- Information Bias: Arises from inaccurate measurement of exposure or outcome variables, which can include observer bias or recall bias [16].

- Confounding: Occurs when a third variable is related to both the exposure and the outcome, distorting their apparent relationship [16].

Q5: Can digital health technology effectively reduce systematic errors in healthcare?

The evidence suggests it can. Digital Health Technology (DHT), particularly Clinical Decision Support Systems (CDSS), has shown measurable benefits: a 2025 systematic review found that DHT interventions reduced adverse drug events (ADEs) by 37.12% and medication errors by 54.38% on average. These systems work by providing automated, systematic checks against human cognitive biases and procedural oversights, making them a cost-effective strategy for improving medication safety [21].

The Scientist's Toolkit: Research Reagent Solutions

| Tool / Reagent | Function / Explanation |
|---|---|
| Certified Reference Materials (CRMs) | A substance with one or more property values that are certified by a validated procedure, providing a traceable standard to detect and correct for systematic error in analytical measurements [14]. |
| Clinical Decision Support System (CDSS) | A health information technology system that provides clinicians with patient-specific assessments and recommendations to aid decision-making, systematically reducing diagnostic and medication errors [20] [21]. |
| Savitzky-Golay (S-G) Filter | A digital filter that smooths data by fitting a low-order polynomial to successive windows of points by least squares. It is integral to advanced algorithms (such as the Recovery method in digital image correlation, DIC) for mitigating undermatched systematic errors in deformation measurements [23]. |
| Cognitive Forcing Strategies | A set of cognitive tools designed to force a clinician to step back and consider alternative possibilities, thereby counteracting inherent cognitive biases like anchoring and confirmation bias [19]. |
| Trigger Algorithms | Automated audit systems that use predefined criteria (e.g., a patient returning to the ER within 10 days) to identify cases with a high probability of a diagnostic error for further review [18]. |
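To show what the Savitzky-Golay filter does, the sketch below derives its smoothing weights from first principles: a polynomial of order `polyorder` is fitted by least squares to each window of 2 × `half_window` + 1 points, and the fitted value at the window centre replaces the original point. It covers only the filter, not the DIC Recovery method, and leaves edge points unsmoothed for simplicity; SciPy's `scipy.signal.savgol_filter` provides a complete implementation.

```python
def _solve(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [list(map(float, row)) + [float(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def savgol_coefficients(half_window, polyorder):
    """Smoothing weights for a centred window of 2 * half_window + 1 points."""
    if polyorder >= 2 * half_window + 1:
        raise ValueError("polyorder must be smaller than the window length")
    offsets = range(-half_window, half_window + 1)
    size = polyorder + 1
    # Normal matrix A^T A, where A[i][k] = offset_i ** k is the fit's design matrix.
    ata = [[sum(i ** (r + c) for i in offsets) for c in range(size)] for r in range(size)]
    # The fitted centre value is the constant term; its weights are A (A^T A)^-1 e0.
    w = _solve(ata, [1.0] + [0.0] * polyorder)
    return [sum(w[k] * i ** k for k in range(size)) for i in offsets]


def savgol_smooth(y, half_window=2, polyorder=2):
    """Smooth interior points; the first and last half_window points are left as-is."""
    h = savgol_coefficients(half_window, polyorder)
    out = list(y)
    for j in range(half_window, len(y) - half_window):
        out[j] = sum(hk * y[j - half_window + k] for k, hk in enumerate(h))
    return out


noisy = [1.0, 2.1, 2.9, 4.2, 4.8, 6.1, 7.0, 7.9]  # hypothetical signal
print([round(v, 3) for v in savgol_smooth(noisy)])
```

For a 5-point window and a quadratic fit, the weights reduce to the classic (-3, 12, 17, 12, -3) / 35, and any quadratic signal passes through unchanged.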

Key Concepts FAQ

What is the difference between accuracy, precision, and bias in method-comparison studies?

In method-comparison studies, bias is the central term describing the systematic difference between a new method and an established one [24]. It is the mean overall difference in values obtained with the two different methods [24]. Accuracy, in contrast, is the degree to which an instrument measures the true value of a variable, typically assessed by comparison with a calibrated gold standard [24]. Precision refers to the degree to which the same method produces the same results on repeated measurements (repeatability) or how closely values cluster around the mean [24]. Precision is a necessary condition for assessing agreement between methods [24].
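The mean difference (bias) is commonly reported together with its 95% limits of agreement, bias ± 1.96 × SD of the differences, as popularized by Bland and Altman. A minimal sketch with hypothetical paired results:

```python
from statistics import mean, stdev


def bland_altman(new, established, z=1.96):
    """Return (bias, lower limit, upper limit) of agreement for paired results."""
    diffs = [a - b for a, b in zip(new, established)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return bias, bias - z * sd, bias + z * sd


new = [5.1, 6.3, 7.0, 8.4, 9.2]          # hypothetical new-method results
established = [5.0, 6.0, 6.8, 8.1, 9.0]  # hypothetical established-method results
bias, lower, upper = bland_altman(new, established)
print(f"bias = {bias:.3f}, 95% limits of agreement = {lower:.3f} to {upper:.3f}")
```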

How do constant and proportional bias differ from each other?

Constant and proportional bias are two distinct types of systematic error [25].

- Constant Bias (or Fixed Bias): One method gives values that are consistently higher (or lower) than the other by a constant amount, regardless of the measurement level [25]. For example, a scale that always reads 5 grams heavier than the true weight.

- Proportional Bias: One method gives values that are higher (or lower) than the other by an amount that is proportional to the level of the measured variable [25]. The absolute difference between methods increases as the magnitude of the measurement increases.

What is Total Error, and why is it important?

Total Error is a crucial concept for judging the overall acceptability of a method. It accounts for both the systematic error (bias) and the random error (imprecision) of the testing process [26]. The components of error are important for managing quality in the laboratory, as the total error can be calculated from these components [26]. A method is judged acceptable when the observed total error is smaller than a pre-defined allowable error for the test's medical application [26].
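The acceptability check above can be sketched in a few lines. This is a minimal illustration that assumes the common convention TE = |bias| + z·SD with z = 1.96; the multiplier, the function names, and the data are assumptions for illustration, and laboratories may use other multipliers (e.g., 2 or 1.65) depending on their quality goals. Bias comes from the paired comparison results and SD from the test method's imprecision (e.g., replicate measurements).

```python
import statistics


def estimate_bias(test_results, comparative_results):
    """Mean difference (test minus comparative) across paired specimens."""
    return statistics.fmean([t - c for t, c in zip(test_results, comparative_results)])


def total_error(bias, imprecision_sd, z=1.96):
    """Total error under the assumed convention TE = |bias| + z * SD."""
    return abs(bias) + z * imprecision_sd


def is_acceptable(bias, imprecision_sd, allowable_error, z=1.96):
    """Acceptable when the estimated total error is smaller than the allowable error."""
    return total_error(bias, imprecision_sd, z) < allowable_error


# Hypothetical data: paired patient results (mg/dL) and replicate results of one control.
bias = estimate_bias([101, 152, 203, 248], [100, 150, 200, 250])
sd = statistics.stdev([99, 100, 101, 100, 100])
print(f"bias = {bias:.2f}, TE = {total_error(bias, sd):.2f}, acceptable = {is_acceptable(bias, sd, 6.0)}")
```

With these made-up numbers the script prints `bias = 1.00, TE = 2.39, acceptable = True` against a hypothetical allowable error of 6 mg/dL.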

What statistical analysis should I use to detect constant and proportional bias?

The Pearson correlation coefficient (r) is ineffective for detecting systematic biases, as it only measures random error [25]. While difference plots (Bland-Altman plots) are popular, they do not distinguish between fixed and proportional bias [25]. Least products regression (a type of Model II regression) is a sensitive technique preferred for detecting and distinguishing between fixed and proportional bias because it accounts for random error in both measurement methods [25]. Ordinary least squares (Model I) regression is invalid for this purpose [25].
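A minimal sketch of the least products (geometric mean) regression point estimates, using the standard formulas slope = sign(r)·SD_y/SD_x and intercept = mean_y − slope·mean_x. The function name and data are hypothetical, and the confidence intervals needed to decide formally whether fixed or proportional bias is present are not computed here.

```python
import statistics


def least_products(x, y):
    """Least products (geometric mean) regression of y on x.

    Returns (intercept, slope). The slope treats both methods as subject
    to random error, unlike ordinary least squares.
    """
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    ratio = statistics.stdev(y) / statistics.stdev(x)
    slope = ratio if sxy >= 0 else -ratio  # sign follows the correlation
    intercept = my - slope * mx
    return intercept, slope


# Hypothetical paired results: established method (x) and new method (y).
x = [50, 100, 150, 200, 250]
y = [57, 108, 161, 210, 263]
a, b = least_products(x, y)
print(f"intercept = {a:.2f}, slope = {b:.3f}")
```

An intercept far from 0 points toward fixed bias and a slope far from 1 toward proportional bias; the interval estimates decide whether those departures are real.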

How can I minimize systematic errors in my experiments?

Systematic errors can be minimized through careful experimental design and procedure:

- Calibration: Perform the procedure on a known reference quantity and adjust the process until the known result is obtained [2].

- Apparatus Calibration: Correct instrumental errors by ensuring all equipment is properly calibrated [27].

- Control Determination: Run the experiment with a standard substance under identical conditions to help minimize errors [27].

- Blank Determination: Run the test without the sample to identify errors caused by reagent impurities [27].

- Standardization: Create a consistent, repeatable process for all experiments to prevent on-the-fly changes based on expectations [28].

Troubleshooting Guides

Issue 1: Detecting and Quantifying Bias in a New Glucose Meter

Problem: A new point-of-care blood glucose meter needs to be validated against the standard laboratory analyzer to determine if it can be used interchangeably.

Solution:

- Experimental Design:

  - Collect a sufficient number of patient specimens (perform an a priori sample size calculation) [24].

  - Ensure paired measurements are taken simultaneously (or as close as possible) with both methods to avoid time-based changes in the analyte [24].

  - The specimens should cover the entire physiological range of glucose values for which the meter will be used [24].

- Data Analysis:

  - Construct a Bland-Altman Plot: Plot the difference between the new meter and the lab method (y-axis) against the average of the two measurements (x-axis) [24] [26].

  - Calculate Bias and Limits of Agreement: The overall bias is the mean of all the differences. The limits of agreement are calculated as bias ± 1.96 standard deviations of the differences, defining the range where 95% of differences between the two methods are expected to fall [24].

  - Interpretation: Visually inspect the plot for patterns. A spread of points that is consistent across the average values suggests only constant bias. A pattern that fans out or forms a trend suggests proportional bias, indicating that a regression approach may be more appropriate [25].
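The bias and limits-of-agreement calculation described above can be sketched as follows. This is a minimal illustration with hypothetical glucose values; it returns the (average, difference) pairs so they can be handed to any plotting library, but it does not draw the plot itself.

```python
import statistics


def bland_altman(new, reference):
    """Bias and 95% limits of agreement for paired measurements.

    Differences are new minus reference; the limits are bias +/- 1.96 SD
    of the differences (sample SD).
    """
    diffs = [n - r for n, r in zip(new, reference)]
    means = [(n + r) / 2 for n, r in zip(new, reference)]
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    return {
        "bias": bias,
        "lower_loa": bias - 1.96 * sd,
        "upper_loa": bias + 1.96 * sd,
        "points": list(zip(means, diffs)),  # x = average, y = difference
    }


meter = [72, 95, 130, 182, 250, 310]  # hypothetical point-of-care results, mg/dL
lab = [70, 96, 127, 180, 245, 306]    # hypothetical laboratory analyzer results, mg/dL
result = bland_altman(meter, lab)
print(f"bias = {result['bias']:.2f} mg/dL, 95% LoA = "
      f"{result['lower_loa']:.2f} to {result['upper_loa']:.2f} mg/dL")
```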

Issue 2: Addressing High Background in a High-Throughput Screening Assay

Problem: A quantitative high-throughput screening (qHTS) for a new drug candidate shows systematic row, column, and edge effects, making it difficult to distinguish true signals from noise.

Solution: Apply normalization techniques to remove spatial systematic errors [29].

- Linear Normalization (LN):

  - Standardization: For each plate, transform the raw data using $x'_{i,j} = (x_{i,j} - \mu)/\sigma$, where $x_{i,j}$ is the raw value in well $(i, j)$, $\mu$ is the plate mean, and $\sigma$ is the plate standard deviation [29].

  - Background Subtraction: Create a background value for each well position by averaging its normalized value across all plates. Subtract this background surface from each plate [29].

- Non-Parametric Regression (LOESS):

  - Apply LOESS (Locally Weighted Scatterplot Smoothing) to the data to correct for local cluster effects. The optimal smoothing parameter (span) can be determined using criteria such as the Akaike Information Criterion (AIC) [29].

- Combined Approach (LNLO):

  - For the most effective removal of multiple types of systematic error, first apply Linear Normalization (LN), then apply LOESS smoothing (LO) to the result [29].
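The Linear Normalization steps above can be sketched with plain Python lists, assuming each plate is a list of rows of well values. This minimal version uses the population SD for σ (the source does not specify which SD; the sample SD would also be reasonable) and does not implement the LOESS step that completes LNLO.

```python
import statistics


def standardize_plate(plate):
    """Z-score every well on one plate: x' = (x - mu) / sigma."""
    values = [v for row in plate for v in row]
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # assumption: population SD of the plate
    return [[(v - mu) / sigma for v in row] for row in plate]


def linear_normalization(plates):
    """Standardize each plate, then subtract the per-well background surface.

    The background for well (i, j) is the mean of its standardized value
    across all plates.
    """
    z_plates = [standardize_plate(p) for p in plates]
    n_rows, n_cols = len(z_plates[0]), len(z_plates[0][0])
    background = [
        [statistics.fmean([p[i][j] for p in z_plates]) for j in range(n_cols)]
        for i in range(n_rows)
    ]
    return [
        [[p[i][j] - background[i][j] for j in range(n_cols)] for i in range(n_rows)]
        for p in z_plates
    ]


# Hypothetical 2 x 2 plates that share the same high corner well (an edge effect).
plates = [
    [[10.0, 2.0], [2.0, 2.0]],
    [[12.0, 4.0], [4.0, 4.0]],
]
print(linear_normalization(plates))
```

Because the corner effect is identical on both plates after standardization, the background surface absorbs it and the corrected values are zero; a real compound effect would appear as a departure from the shared background on one plate only.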

Issue 3: Unexplained Discrepancies in Method-Comparison Data

Problem: A method-comparison study shows poor agreement, but the standard correlation analysis shows a high correlation coefficient (r).

Solution: A high correlation does not indicate agreement, only that the methods are related [25]. Follow this diagnostic flowchart to identify potential causes.
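The gap between correlation and agreement is easy to demonstrate numerically. In this hypothetical example, the new method reads 20% high plus a 10-unit offset, so it is perfectly correlated with the established method yet disagrees with it at every level.

```python
import statistics


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


reference = [50, 100, 150, 200, 250, 300]
new = [10 + 1.2 * v for v in reference]  # hypothetical constant + proportional bias

r = pearson_r(reference, new)
mean_diff = statistics.fmean([n - ref for n, ref in zip(new, reference)])
print(f"r = {r:.4f}, mean difference = {mean_diff:.1f}")
```

This prints `r = 1.0000, mean difference = 45.0`: a perfect correlation alongside a large systematic difference.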

Essential Materials and Reagents

Table 1: Key Research Reagent Solutions for Method-Comparison Studies

| Item | Function/Brief Explanation |
|---|---|
| Reference Standard | A substance with a known, high-purity value used to calibrate the established method and ensure its accuracy [27]. |
| Control Materials | Stable materials with known concentrations (e.g., high, normal, low) analyzed alongside patient samples to monitor the precision and stability of both methods [27]. |
| Blank Reagent | The reagent or solvent without the analyte, used in a "blank determination" to identify and correct for signals caused by the reagent itself [27]. |
| Calibrators | A set of standards used to construct a calibration curve, which defines the relationship between the instrument's response and the analyte concentration [2]. |

Experimental Protocol: Conducting a Method-Comparison Study

Objective: To estimate the systematic error (bias) between a new method and an established comparative method and determine if the new method is acceptable for clinical use [26].

Step-by-Step Methodology:

- Define Medical Requirements: Identify the critical medical decision concentrations for the analyte. This determines the required analytical range and where error estimates are most important [26].

- Sample Selection and Collection: Collect a sufficient number of patient specimens (e.g., via a priori power calculation) that cover the entire analytical range of interest, including the medical decision levels [24].

- Paired Measurements: Analyze each specimen with both the new and the established method in a randomized order, ensuring measurements are made as simultaneously as possible to avoid biological variation [24].

- Data Collection and Integrity: Record all paired results. Immediately plot the data on a comparison graph (scatter plot) to visually identify any outliers or non-linearity while specimens are still available for re-testing if needed [26].

- Statistical Analysis:

  - For a Single Decision Level: If focused on one medical decision concentration, a Bland-Altman difference plot with calculation of bias and limits of agreement is often sufficient [26].

  - For Multiple Decision Levels: If assessing error across a wide range, use regression analysis. If the correlation coefficient (r) is ≥ 0.99, ordinary linear regression may be suitable. If r < 0.975, use more robust techniques like Deming or Passing-Bablok regression [26].

- Interpretation and Decision: Compare the estimated systematic error (bias) and total error to the predefined allowable error. The method is acceptable if the observed error is smaller than the allowable error [26].
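Deming regression, one of the techniques named in the statistical analysis step, can be sketched from its closed-form slope. This minimal version assumes the ratio of the test method's error variance to the comparative method's error variance is known; `error_ratio` is a hypothetical parameter name (1.0 means equal imprecision, which gives orthogonal regression), and confidence intervals are not computed.

```python
import statistics


def deming(x, y, error_ratio=1.0):
    """Deming regression of y (test method) on x (comparative method).

    error_ratio: assumed ratio of the error variance of y to that of x.
    Returns (intercept, slope).
    """
    n = len(x)
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((a - mx) ** 2 for a in x) / (n - 1)
    syy = sum((b - my) ** 2 for b in y) / (n - 1)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    d = error_ratio
    diff = syy - d * sxx
    slope = (diff + (diff ** 2 + 4 * d * sxy ** 2) ** 0.5) / (2 * sxy)
    intercept = my - slope * mx
    return intercept, slope


# Hypothetical comparison data with small random scatter in both methods.
comparative = [52, 98, 151, 205, 247, 301]
test = [55, 103, 158, 213, 258, 311]
a, b = deming(comparative, test)
print(f"intercept = {a:.2f}, slope = {b:.3f}")
```

As `error_ratio` grows large (comparative method treated as error-free), the slope approaches the ordinary least squares slope.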

In method comparison studies, a core challenge is distinguishing true methodological bias from spurious associations caused by confounding factors. Directed Acyclic Graphs (DAGs) provide a powerful framework for this task by visually representing causal assumptions and clarifying the underlying data-generating processes [30]. A DAG is a directed graph with no cycles, meaning you cannot start at a node, follow a sequence of directed edges, and return to the same node [31] [32]. Within the context of minimizing systematic error, DAGs allow researchers to move beyond mere statistical correlation and reason explicitly about the mechanisms through which errors might be introduced, transmitted, or confounded [30]. This structured approach is vital for identifying which variables must be measured and controlled to obtain an unbiased estimate of the true method difference, thereby addressing the "fundamental problem of causal inference" – that we can never simultaneously observe the same unit under both test and comparative methods [30]. By framing the problem causally, DAGs help ensure that the subsequent statistical analysis targets the correct estimand for the target population.

Core Concepts of Causal DAGs

The "What" and "Why" of Directed Acyclic Graphs

A Directed Acyclic Graph (DAG) is defined by two key characteristics [31] [32]:

- Directed Edges: Each edge (or arrow) has a direction, signifying a one-way relationship or dependency from one node (vertex) to another.

- Acyclic: The graph contains no cycles or closed loops; it is impossible to start at any node, follow the directed arrows, and return to the starting node.

In causal inference, DAGs are used to represent causal assumptions, where nodes represent variables, and directed arrows (X → Y) represent the causal effect of X on Y [30]. The acyclic property reflects the logical constraint that a variable cannot be a cause of itself, either directly or through a chain of other causes.

Key Terminology and Properties

To effectively use DAGs, understanding their fundamental properties is essential:

- Reachability/Paths: A node A is reachable from node B if a directed path exists from B to A. The set of all such relationships defines the reachability relation of the DAG [31] [32].

- Topological Ordering: A DAG can be linearly ordered such that for every directed edge (U → V), node U comes before node V in the ordering. This is crucial for understanding dependency and the sequence of events [31] [32].

- Transitive Closure & Reduction: The transitive closure is a graph with the most edges that has the same reachability relation. Conversely, the transitive reduction is the graph with the fewest edges that preserves the same reachability, simplifying the diagram to only essential relationships [32].
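These properties can be computed directly. A minimal sketch, assuming the DAG is stored as a dictionary mapping each node to the set of its children; it uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+), which raises `CycleError` when the graph is not acyclic. The node names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def topological_order(edges):
    """Topological ordering of a DAG given as {node: set_of_children}."""
    # TopologicalSorter expects {node: predecessors}, so invert the edges.
    preds = {node: set() for node in edges}
    for parent, children in edges.items():
        for child in children:
            preds.setdefault(child, set()).add(parent)
    return list(TopologicalSorter(preds).static_order())


def reachable(edges, start):
    """All nodes reachable from start by following directed edges."""
    seen, stack = set(), [start]
    while stack:
        for child in edges.get(stack.pop(), ()):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


def transitive_reduction(edges):
    """Drop every edge u -> v that is implied by a longer directed path."""
    reduced = {}
    for u, children in edges.items():
        reduced[u] = {
            v for v in children
            if not any(v in reachable(edges, w) for w in children if w != v)
        }
    return reduced


dag = {"A": {"B", "C"}, "B": {"C"}, "C": set()}
print(topological_order(dag), transitive_reduction(dag))
try:
    topological_order({"X": {"Y"}, "Y": {"X"}})
except CycleError:
    print("not a DAG: cycle detected")
```

In the example, the edge A → C is removed by the reduction because the path A → B → C already preserves that reachability.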

Foundational Graphical Structures

All complex causal diagrams are built from a few elementary structures that describe the basic relationships between variables. The table below summarizes these core structures.

Table 1: Elementary Structures in Causal Directed Acyclic Graphs

| Structure Name | Graphical Representation | Causal Interpretation | Role in Error Mechanisms |

|---|---|---|---|

| Chain (Cause → Mediator → Outcome) | A → M → Y | A affects M, which in turn affects Y. | Represents a mediating pathway; controlling for M can block the path of causal influence from A to Y. |

| Fork (Common Cause) | A ← C → Y | A common cause C affects both A and Y. | Represents confounding; failing to control for C creates a spurious, non-causal association between A and Y. |

| Immoralities / Colliders (Common Effect) | A → C ← Y | Both A and Y are causes of C. | Conditioning on the collider C (or its descendant) induces a spurious association between A and Y. |

These structures form the building blocks for identifying confounding, selection bias, and other sources of systematic error.

Building Your DAG: A Step-by-Step Guide

Constructing a DAG is an iterative process that requires deep subject-matter knowledge. The following steps provide a systematic guide.

- Define the Causal Question: Precisely specify the exposure (e.g., the new test method) and the outcome (e.g., the result from a reference method) [30]. The entire DAG is built to investigate the causal effect of this exposure on this outcome.

- Identify the Target Population: Clarify the population for whom the causal knowledge is meant to generalize, as this influences the relevant variables and relationships [30].

- List Relevant Variables: Enumerate all known or plausible variables related to the exposure and outcome. This includes predictors of the outcome, causes of the exposure, and common causes of both.

- Draw Causal Relationships: Based on subject-matter expertise, draw directed arrows from causes to their effects. This step encodes your explicit assumptions about the data-generating mechanism.

- Simplify the Diagram: Apply the rules of d-separation (see The d-separation Criterion below) to check for redundant paths and consider using the transitive reduction to present the most parsimonious graph that retains all necessary causal relationships [32].

- Validate and Revise: Review the DAG with other experts and revise it as needed. Where ambiguity exists, it is good practice to report multiple plausible DAGs and conduct analyses for each [30].

Using DAGs for Error Identification and Control

The d-separation Criterion

d-separation is a fundamental graphical rule for determining, from a DAG, whether a set of variables Z blocks all paths between two other variables, X and Y. A path is blocked by Z if:

- The path contains a chain (X → M → Y) or a fork (X ← C → Y) and the middle variable M or C is in Z, or

- The path contains a collider (X → C ← Y) and neither C nor any descendant of C is in Z.

If all paths between X and Y are blocked by Z, then X and Y are conditionally independent given Z. This rule is the graphical counterpart to statistical conditioning and is essential for identifying confounding and selection bias.
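d-separation can also be checked mechanically. A minimal sketch, assuming the DAG is stored as a dictionary mapping each node to its set of parents; instead of enumerating paths, it uses the equivalent ancestral moral graph test: keep only ancestors of X, Y, and Z, connect parents that share a child, drop arrow directions, delete Z, and check whether X can still reach Y.

```python
def ancestors(parents, nodes):
    """The given nodes plus all of their ancestors."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in parents.get(stack.pop(), ()):
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(parents, x, y, z):
    """True if x and y are d-separated given the set z."""
    keep = ancestors(parents, {x, y} | set(z))
    neighbors = {n: set() for n in keep}
    for child in keep:
        ps = [p for p in parents.get(child, ()) if p in keep]
        for p in ps:  # undirected version of each parent -> child edge
            neighbors[child].add(p)
            neighbors[p].add(child)
        for i, p in enumerate(ps):  # moralize: connect co-parents
            for q in ps[i + 1:]:
                neighbors[p].add(q)
                neighbors[q].add(p)
    blocked, seen, stack = set(z), {x}, [x]
    while stack:
        node = stack.pop()
        if node == y:
            return False
        for nb in neighbors[node] - blocked - seen:
            seen.add(nb)
            stack.append(nb)
    return True


chain = {"M": {"X"}, "Y": {"M"}}       # X -> M -> Y
collider = {"C": {"X", "Y"}}           # X -> C <- Y
print(d_separated(chain, "X", "Y", {"M"}), d_separated(collider, "X", "Y", {"C"}))
```

The example prints `True False`: conditioning on the middle of a chain blocks the path, while conditioning on a collider opens it.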

Identifying Confounding Bias

Confounding is a major source of systematic error in method comparisons. A confounder is a variable that is a common cause of both the exposure and the outcome. In a DAG, confounding is present if an unblocked non-causal "back-door path" exists between exposure and outcome [30].

Diagram 1: Identifying a Confounder

To block this back-door path and obtain an unbiased estimate of the causal effect of A on Y, you must condition on the confounder, "Specimen Age". The DAG makes this adjustment strategy explicit.
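A small simulation shows what the adjustment achieves. The data-generating process below (Specimen Age C → A, C → Y, A → Y with a true effect of 1.0) and all of its coefficients are hypothetical. Adjustment is done with the Frisch-Waugh-Lovell result: the coefficient on A from regressing Y on A and C equals the slope between the residuals of Y and of A after each is regressed on C.

```python
import random
import statistics


def ols_slope(x, y):
    """Ordinary least squares slope of y on x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residuals(x, y):
    """Residuals of y after a simple linear regression on x."""
    b = ols_slope(x, y)
    a = statistics.fmean(y) - b * statistics.fmean(x)
    return [yi - (a + b * xi) for xi, yi in zip(x, y)]


rng = random.Random(42)
n = 5000
specimen_age = [rng.gauss(0, 1) for _ in range(n)]                   # confounder C
exposure = [0.8 * c + rng.gauss(0, 1) for c in specimen_age]          # A
outcome = [1.0 * e + 1.5 * c + rng.gauss(0, 1)                         # Y, true effect 1.0
           for e, c in zip(exposure, specimen_age)]

naive = ols_slope(exposure, outcome)
adjusted = ols_slope(residuals(specimen_age, exposure), residuals(specimen_age, outcome))
print(f"unadjusted = {naive:.2f}, adjusted for specimen age = {adjusted:.2f}")
```

The unadjusted slope is inflated by the open back-door path (about 1.73 in expectation under these assumed coefficients), while the adjusted slope lands close to the true value of 1.0.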

Identifying Selection Bias (Collider Bias)

Selection bias, often arising from conditioning on a collider, is another pernicious source of systematic error.

Diagram 2: Inducing Selection Bias

In this DAG, "Study Inclusion" is a collider. While "True Analyte Concentration" and "Instrument Sensitivity" are independent in the full population, conditioning on "Study Inclusion" (e.g., by only analyzing specimens that produced a detectable signal) creates a spurious association between them, biasing the analysis.
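The collider effect can be reproduced in a short simulation. Here concentration and sensitivity are generated independently, and the inclusion rule (a specimen counts as "detectable" when the two standardized values sum to more than 1.0) is a hypothetical stand-in for a real detection threshold.

```python
import random


def corr(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(0)
n = 20000
concentration = [rng.gauss(0, 1) for _ in range(n)]  # true analyte concentration
sensitivity = [rng.gauss(0, 1) for _ in range(n)]    # instrument sensitivity, independent
included = [c + s > 1.0 for c, s in zip(concentration, sensitivity)]  # hypothetical rule

full_r = corr(concentration, sensitivity)
sel_c = [c for c, keep in zip(concentration, included) if keep]
sel_s = [s for s, keep in zip(sensitivity, included) if keep]
selected_r = corr(sel_c, sel_s)
print(f"all specimens r = {full_r:.2f}; included specimens only r = {selected_r:.2f}")
```

The correlation is near zero in the full population but clearly negative among included specimens: knowing that a detectable specimen had low concentration implies it must have had high sensitivity.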

Troubleshooting Guides and FAQs

FAQ 1: My DAG is Complex. How Do I Know What to Control For?

Question: My DAG has many variables and paths. I'm unsure which variables to include as covariates in my model to minimize confounding without introducing bias.

Answer: Use the back-door criterion. To estimate the causal effect of exposure A on outcome Y, a set of variables Z is sufficient to control for confounding if:

- Z blocks every back-door path (i.e., any path starting with an arrow into A) between A and Y.

- No variable in Z is a descendant of A (i.e., not affected by A).

Troubleshooting Steps:

- List all non-causal paths between your exposure (A) and outcome (Y).

- For each path, check if it is already blocked by a collider. If not, identify a variable on that path that is not a collider which you can condition on to block it.

- A minimal sufficient set is a set of variables that blocks all unblocked back-door paths without conditioning on colliders or their descendants, and from which no variable can be removed without leaving some back-door path open.

Diagram 3: Applying the Back-Door Criterion

In this DAG, the set Z = {C1, C2} is sufficient to control for confounding. It blocks the back-door paths A ← C1 → Y and A ← C2 → Y. Controlling for "Lab Temperature" is unnecessary as it is not a common cause.
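The back-door check can be automated for small graphs. A minimal sketch, assuming edges are stored as (parent, child) tuples; because Diagram 3 is not reproduced here, the example graph is an assumption in which "Lab Temperature" (`LabTemp`) affects only Y. The code enumerates back-door paths, applies the blocking rules from the d-separation section, and checks that Z contains no descendant of A.

```python
def descendants(edges, node):
    """All nodes reachable from node along directed edges."""
    found, stack = set(), [node]
    while stack:
        current = stack.pop()
        for p, c in edges:
            if p == current and c not in found:
                found.add(c)
                stack.append(c)
    return found


def backdoor_paths(edges, a, y):
    """Simple paths from a to y whose first edge points into a."""
    neighbors = {}
    for p, c in edges:
        neighbors.setdefault(p, set()).add(c)
        neighbors.setdefault(c, set()).add(p)
    paths = []

    def extend(path):
        if path[-1] == y:
            paths.append(path)
            return
        for nb in neighbors.get(path[-1], ()):
            if nb not in path:
                extend(path + [nb])

    for first in neighbors.get(a, ()):
        if (first, a) in edges:  # arrow into a
            extend([a, first])
    return paths


def is_blocked(edges, path, z):
    """Blocked if a non-collider is in z, or a collider has no member of z at or below it."""
    for prev, mid, nxt in zip(path, path[1:], path[2:]):
        if (prev, mid) in edges and (nxt, mid) in edges:  # collider
            if mid not in z and not (descendants(edges, mid) & z):
                return True
        elif mid in z:
            return True
    return False


def satisfies_backdoor(edges, a, y, z):
    if descendants(edges, a) & z:
        return False
    return all(is_blocked(edges, p, z) for p in backdoor_paths(edges, a, y))


E = {("A", "Y"), ("C1", "A"), ("C1", "Y"), ("C2", "A"), ("C2", "Y"), ("LabTemp", "Y")}
print(satisfies_backdoor(E, "A", "Y", {"C1", "C2"}), satisfies_backdoor(E, "A", "Y", {"C1"}))
```

Under this assumed graph the example prints `True False`: both confounders are needed, while adding `LabTemp` is unnecessary but does not violate the criterion.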

FAQ 2: Why Did My Bias Get Worse After Adjusting for a Variable?

Question: I adjusted for a variable I thought was a confounder, but the association between my exposure and outcome became stronger and more biased. What happened?

Answer: This is a classic symptom of having adjusted for a collider or a descendant of a collider. Conditioning on a collider opens a spurious path between its causes, which can create or amplify bias.

Troubleshooting Steps:

- Revisit your DAG and identify all colliders (variables with two or more arrows pointing into them).

- Check if you have conditioned (e.g., matched, stratified, or included as a covariate) on any of these colliders or variables caused by them (their descendants).

- If you find one, re-run your analysis without conditioning on that variable. Alternatively, use more advanced methods like inverse probability weighting to handle the selection without conditioning.

FAQ 3: How Do I Handle Unmeasured Confounding?

Question: My DAG suggests a crucial confounder exists, but I did not collect data on it. Is my causal inference doomed?

Answer: While an unmeasured confounder poses a serious threat to validity, your DAG still provides valuable insights and options.

Troubleshooting Steps:

- Sensitivity Analysis: Use the structure of your DAG to inform a quantitative sensitivity analysis. This analysis estimates how strong the unmeasured confounder would need to be to explain away the observed effect.

- Instrumental Variables (IV): Sometimes, a DAG can help identify a valid instrumental variable (Z), which is associated with A, affects Y only through its effect on A, and is not affected by the unmeasured confounder. IV methods can provide consistent effect estimates under these conditions.

- Proxy Controls: If you have a measured variable that is a proxy (an imperfect measure) of the unmeasured confounder, it may be possible to use it to partially control for confounding.
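The instrumental variable idea can be illustrated with the simple ratio (Wald) estimator, cov(Z, Y) / cov(Z, A), on simulated data. The process below is hypothetical: U is an unmeasured confounder of A and Y, Z affects A but has no other path to Y, and the true effect of A on Y is 2.0.

```python
import random
import statistics


def cov(x, y):
    """Sample covariance of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)


rng = random.Random(1)
n = 20000
u = [rng.gauss(0, 1) for _ in range(n)]  # unmeasured confounder
z = [rng.gauss(0, 1) for _ in range(n)]  # instrument, independent of u
a = [0.7 * zi + 1.0 * ui + rng.gauss(0, 1) for zi, ui in zip(z, u)]
y = [2.0 * ai + 1.5 * ui + rng.gauss(0, 1) for ai, ui in zip(a, u)]  # true effect 2.0

naive = cov(a, y) / cov(a, a)  # OLS slope, biased by the open path through u
iv = cov(z, y) / cov(z, a)     # ratio (Wald) estimator using the instrument
print(f"naive = {naive:.2f}, IV = {iv:.2f}")
```

The naive slope is pulled upward by the unmeasured confounder (about 2.6 in expectation here), while the IV estimate recovers a value close to 2.0.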

DAGs in Action: Experimental Protocol for a Method Comparison Study

The following protocol integrates DAGs into the design and analysis of a method comparison study, a key activity for minimizing systematic error in analytical research [4].

Protocol: Method Comparison with Causal Reasoning

Purpose: To estimate the systematic error (inaccuracy) between a new test method and a comparative method, using causal diagrams to guide the experimental design and statistical analysis, thereby minimizing confounding and other biases [4].

Pre-Experimental Steps:

- Define Causal Question: "What is the systematic error of the new method compared to the reference method for measuring analyte X in human serum?"

- Define Target Population: The general adult population with suspected disorders of X.

- Construct DAG: Before collecting data, assemble a team of experts to draft a DAG. Include variables such as: Test Method, Reference Method Result, Specimen Matrix, Hemolysis, Lipemia, Icterus, Patient Age, Disease Status, Laboratory Technician, and Batch Effect.

Experimental Execution [4]:

- Specimen Selection: A minimum of 40 patient specimens should be tested, selected to cover the entire working range of the method. The quality and range of specimens are more critical than a large number.

- Measurement: Analyze each specimen using both the test and comparative methods. Ideally, perform measurements in duplicate, with the duplicates analyzed in different runs or in a different order to identify sample mix-ups or transposition errors.

- Time Period: Conduct the experiment over a minimum of 5 days, and ideally up to 20 days, to capture and average out day-to-day systematic variations.

- Specimen Handling: Analyze specimens by both methods within two hours of each other to minimize differences due to specimen instability. Define and systematize specimen handling procedures (e.g., centrifugation, storage temperature) prior to the study.

Data Analysis Guided by DAG [4]:

- Graphical Inspection: Create a difference plot (test result minus reference result vs. reference result) or a scatterplot (test result vs. reference result). Visually inspect for outliers and patterns.

- Statistical Modeling: Based on the pre-specified DAG, identify the minimal sufficient set of covariates for adjustment. If the DAG indicates no confounding, a simple linear regression of the test result on the reference result may be appropriate.

- Estimate Systematic Error: For a wide analytical range, use linear regression ($Y = a + bX$) to estimate the slope ($b$) and intercept ($a$). Calculate the systematic error (SE) at a critical medical decision concentration ($X_c$) as $SE = (a + bX_c) - X_c$ [4].

- Sensitivity Analysis: Perform sensitivity analyses to assess the potential impact of any unmeasured confounders identified in the DAG.

Research Reagent Solutions

Table 2: Essential Materials for Method Comparison Studies

| Item | Function / Rationale |

|---|---|

| Calibrated Reference Material | Provides a traceable standard to ensure the correctness of the comparative method, serving as a benchmark for accuracy [4]. |

| Unadulterated Patient Specimens | The primary matrix for testing; carefully selected to cover the analytical range and represent the spectrum of expected disease states and interferences [4]. |

| Stable Control Materials | Used for quality control (QC) during the multi-day experiment to monitor and ensure the stability of both methods over time [33] [27]. |

| Interference Stock Solutions | (e.g., Hemolysate, Lipid Emulsions, Bilirubin) Used in separate recovery and interference experiments to characterize the specificity of the new method and identify potential sources of systematic error suggested by the DAG [4] [27]. |

| Appropriate Preservatives & Stabilizers | (e.g., Sodium Azide, Protease Inhibitors) Ensures specimen stability between analyses by the two methods, preventing pre-analytical error from being misattributed as methodological error [4]. |

Advanced Topics: DAGs for Complex Error Mechanisms

Time-Varying Confounding and Causal Pathways

In longitudinal studies, a variable may confound the exposure-outcome relationship at one time point but also lie on the causal pathway between a prior exposure and the outcome at a later time point. Standard regression adjustment for such time-varying confounders can block part of the causal effect of interest. DAGs are exceptionally useful for visualizing these complex scenarios, and methods like g-computation or structural nested models are needed for unbiased estimation.

Integrating DAGs with Other Error-Minimization Techniques

DAGs provide a theoretical framework that complements traditional, practical error-minimization techniques. For example:

- Blank Determination: In a DAG, this practice can be framed as an intervention to set the "Sample Analyte" to zero, allowing the isolation of the effect of "Reagent Impurities" on the "Measured Signal" [33] [27].

- Standard Addition: This method can be represented as a series of interventions at different dose levels, helping to isolate the effect of the "Sample Matrix" on the "Analytical Recovery" [33].

By formally representing these practices in a DAG, researchers can better understand their underlying causal logic and how they contribute to a comprehensive error-control strategy.

Designing Rigorous Method Comparison Experiments: A Step-by-Step Protocol

Frequently Asked Questions (FAQs)

1. What is the core difference between a reference method and a routine comparative method in terms of error attribution?

The core difference lies in the established "correctness" of the method and, consequently, how differences from a new test method are interpreted. A reference method is a high-quality method whose results are known to be correct through comparison with definitive methods or traceable reference materials. Any difference between the test method and a reference method is assigned as error in the test method. In contrast, a routine comparative method does not have this documented correctness. If a large, medically unacceptable difference is found between the test method and a routine method, further investigation is needed to identify which of the two methods is inaccurate [4].

2. Why is a large sample size (e.g., 40-200 specimens) recommended for a method comparison study?

The sample size serves different purposes. A minimum of 40 patient specimens is generally recommended to cover the entire working range of the method and provide a reasonable estimate of systematic error [4] [34]. However, larger sample sizes of 100 to 200 specimens are recommended to thoroughly investigate the methods' specificity, particularly to identify if individual patient samples show discrepancies due to interferences in the sample matrix. The quality and range of the specimens are often more important than the absolute number [4].

3. My data shows a high correlation coefficient (r = 0.99). Does this mean the two methods agree?

Not necessarily. A high correlation coefficient mainly indicates a strong linear relationship between the two sets of results but does not prove agreement [34]. Correlation can be high even when there are consistent, clinically significant differences between the methods. It is more informative to use statistical techniques like regression analysis (e.g., Passing-Bablok, Deming) or Bland-Altman plots, which are designed to reveal constant and proportional biases that the correlation coefficient overlooks [34].

4. What are the key strategies to minimize systematic error in a method comparison study?

Several strategies can be employed to minimize systematic error:

- Calibration: Regularly calibrate instruments against known, traceable standards [3] [35] [2].

- Methodology: Use a reference method for comparison if possible [4].

- Experimental Design: Analyze specimens over multiple days (at least 5 recommended) to minimize systematic errors from a single run [4].

- Sample Handling: Define and systematize specimen handling to prevent stability issues from causing differences [4].

- Triangulation: Use multiple techniques or instruments to measure the same analyte to cross-verify results [3].

5. When should I use ordinary linear regression versus more advanced methods like Passing-Bablok or Deming regression?

Ordinary linear regression assumes that the comparative (reference) method has no measurement error and is best suited when this assumption is largely true, or when the data range is wide (e.g., correlation coefficient >0.975) [36]. Deming regression relaxes this assumption by allowing random error in both methods, but it requires an estimate of the ratio of the two methods' error variances. Passing-Bablok regression is a robust, non-parametric method that does not require normal distribution of errors, is insensitive to outliers, and accounts for imprecision in both methods. It is particularly useful when the errors between the two methods are of a similar magnitude [34]. The choice depends on the known error characteristics of your comparative method.

Troubleshooting Guides

Problem 1: Discrepant Results Between New and Routine Method

Symptoms: You observe a consistent, significant difference between your new test method and the established routine method.

Resolution Steps:

- Verify Specimen Integrity: Confirm that samples were analyzed within a stable timeframe (e.g., within 2 hours for unstable analytes) and handled identically to avoid pre-analytical errors [4].

- Check Calibration: Re-calibrate both instruments using traceable calibrators to rule out simple offset or scale factor errors [35] [2].

- Run a Control Sample: Analyze a control sample with a known target value. This helps determine which of the two methods is deviating from the expected result [35].

- Investigate Specificity: Use recovery and interference experiments to check if the new method is affected by substances in the sample matrix that the routine method is not. A blank determination can help identify reagent impurities [4] [27].

- Use a Third Method: If the discrepancy persists, employ a definitive or reference method, if available, to act as an arbiter and identify which of the two methods is inaccurate [4] [27].

Problem 2: Detecting and Interpreting Constant vs. Proportional Error

Symptoms: The regression analysis from your method comparison shows a non-zero intercept and/or a slope that is not 1.

Resolution Steps:

- Perform Regression Analysis: Use an appropriate regression model (e.g., Passing-Bablok) to calculate the regression line equation (y = a + bx) [34].

- Interpret the Intercept (a): A confidence interval for the intercept that does not include zero suggests a constant systematic error. This is an offset that affects all measurements by the same absolute amount, regardless of concentration [34].

- Interpret the Slope (b): A confidence interval for the slope that does not include one suggests a proportional systematic error. This error increases or decreases in proportion to the analyte concentration [34].

- Assess Clinical Impact: Calculate the systematic error at medically important decision levels. For a decision level $X_c$, the error is $SE = (a + bX_c) - X_c$. Compare this error to your allowable total error (TEa) specifications to determine if it is clinically acceptable [4] [36].
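The regression step above can be sketched for Passing-Bablok point estimates: the slope is the median of all pairwise slopes, shifted by the number K of slopes below -1 (slopes of exactly -1 are excluded), and the intercept is the median of y − bx. This is a simplified illustration: pairs with identical x values are skipped rather than handled as in the full method, and the confidence intervals needed for the intercept and slope checks are not computed, so a validated implementation should be used for real decisions.

```python
import statistics


def passing_bablok(x, y):
    """Simplified Passing-Bablok point estimates (intercept, slope) of y on x."""
    slopes = []
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[j] - x[i], y[j] - y[i]
            if dx == 0:
                continue  # simplification: skip ties in x
            s = dy / dx
            if s != -1:  # slopes of exactly -1 are excluded
                slopes.append(s)
    slopes.sort()
    k = sum(1 for s in slopes if s < -1)  # shift for the median
    m = len(slopes)
    if m % 2:
        slope = slopes[(m + 1) // 2 + k - 1]
    else:
        slope = (slopes[m // 2 + k - 1] + slopes[m // 2 + k]) / 2
    intercept = statistics.median([yi - slope * xi for xi, yi in zip(x, y)])
    return intercept, slope


# Hypothetical comparison data: comparative method (x), test method (y).
x = [50, 100, 150, 200, 250, 300]
y = [54, 104, 156, 207, 260, 311]
a, b = passing_bablok(x, y)
print(f"y = {a:.2f} + {b:.3f}x")
```

Because both estimates are medians, a single gross outlier barely moves them, which is the robustness property noted earlier.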

Experimental Protocols

Detailed Protocol: Method Comparison Experiment

Purpose: To estimate the systematic error (inaccuracy) between a new test method and a comparative method using real patient samples [4].

Research Reagent Solutions & Materials

| Item | Function |

|---|---|

| Patient Samples | At least 40 different specimens, covering the entire analytical range and expected disease spectrum [4]. |

| Reference Material | A certified material with a known value, used for calibration and trueness checks [35]. |

| Control Samples | Stable materials with assigned target values, used to monitor the precision and trueness of each analytical run [35]. |

| Calibrators | Solutions used to adjust the response of an instrument to known standard values [35]. |

Procedure:

- Sample Selection: Collect a minimum of 40 patient specimens that span the full reportable range of the assay [4] [34].

- Experimental Schedule: Analyze samples over multiple days (at least 5, ideally up to 20) to incorporate routine sources of variation. When the study extends over 20 days, analyzing 2-5 patient specimens per day is sufficient [4].

- Measurement: Analyze each patient specimen using both the test method and the comparative method. Ideally, perform measurements in duplicate and in a randomized order to avoid systematic bias [4].

- Data Collection: Record all results for statistical analysis.

Data Analysis Workflow: The following diagram illustrates the logical process for analyzing method comparison data and making a decision on method acceptability.

Protocol: Calculating and Interpreting Systematic Error

Purpose: To quantify the systematic error at critical medical decision concentrations and determine its acceptability [4] [36].

Procedure:

- Perform Regression: Using your comparison data, calculate the slope (b) and y-intercept (a) of the regression line using an appropriate model (e.g., Passing-Bablok).

- Define Decision Levels: Identify one to three critical medical decision concentrations (( X_c )) for the analyte.

- Calculate Systematic Error: For each decision level ($X_c$), calculate the corresponding value from the test method ($Y_c$) and the systematic error (SE).

- $Y_c = a + b \times X_c$

- $SE = Y_c - X_c$
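To show what the regression step computes, here is a simplified Python sketch of the Passing-Bablok point estimates: the slope is a shifted median of all pairwise slopes, and the intercept is the median of $y_i - b x_i$. This is a teaching version under stated assumptions. It omits confidence intervals and the CUSUM linearity test, and it handles ties in $x$ crudely. For validation work, a vetted statistical package is preferable.

```python
import math
from statistics import median

def passing_bablok(x, y):
    """Simplified Passing-Bablok point estimates (intercept a, slope b); no CIs."""
    slopes = []
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[j] - x[i], y[j] - y[i]
            if dx == 0 and dy == 0:
                continue  # identical points carry no slope information
            if dx == 0:
                s = math.inf if dy > 0 else -math.inf  # crude tie handling
            else:
                s = dy / dx
            if s == -1:
                continue  # slopes of exactly -1 are excluded by the method
            slopes.append(s)
    slopes.sort()
    k = sum(1 for s in slopes if s < -1)  # offset that shifts the median
    m = len(slopes)
    if m % 2 == 1:
        b = slopes[(m + 1) // 2 + k - 1]
    else:
        b = 0.5 * (slopes[m // 2 + k - 1] + slopes[m // 2 + k])
    a = median(yi - b * xi for xi, yi in zip(x, y))
    return a, b

# Usage: the last point is a gross outlier, yet the fit stays on y = 1 + 2x
x = [1, 2, 3, 4, 5, 6, 7]
y = [3, 5, 7, 9, 11, 13, 100]
print(passing_bablok(x, y))  # (1.0, 2.0)
```

Because both estimates are medians, a single discrepant specimen has little influence, which is one reason this model is often preferred over ordinary least squares for method comparison.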

Example Calculation Table: The table below demonstrates how systematic error is calculated and evaluated against a performance goal.

| Medical Decision Level ($X_c$) | Calculated Test Method Value ($Y_c$) | Systematic Error (SE) | Allowable Error (TEa) | Is SE acceptable? |
|---|---|---|---|---|
| 100 mg/dL | $2.0 + (1.03 \times 100) = 105.0$ mg/dL | +5.0 mg/dL | ±6 mg/dL | Yes |
| 200 mg/dL | $2.0 + (1.03 \times 200) = 208.0$ mg/dL | +8.0 mg/dL | ±10 mg/dL | Yes |
| 300 mg/dL | $2.0 + (1.03 \times 300) = 311.0$ mg/dL | +11.0 mg/dL | ±12 mg/dL | Yes |

Example based on a regression line of Y = 2.0 + 1.03X [4].
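The calculation in the table can be reproduced with a few lines of Python. This sketch mirrors the table's simple rule of comparing $|SE|$ against TEa; the function names and the `(Xc, TEa)` input format are illustrative assumptions.

```python
def systematic_error_at(a, b, xc):
    """Return (Yc, SE) for one decision level using Yc = a + b * Xc."""
    yc = a + b * xc
    return yc, yc - xc

def evaluate_decision_levels(a, b, levels):
    """levels: list of (Xc, TEa) pairs, both in the analyte's units."""
    results = []
    for xc, tea in levels:
        yc, se = systematic_error_at(a, b, xc)
        results.append((xc, yc, se, abs(se) <= tea))  # same rule as the table
    return results

rows = evaluate_decision_levels(2.0, 1.03, [(100, 6), (200, 10), (300, 12)])
for xc, yc, se, ok in rows:
    print(f"Xc={xc} mg/dL  Yc={yc:.1f}  SE={se:+.1f}  acceptable={ok}")
```

With the example line $Y = 2.0 + 1.03X$, all three levels are judged acceptable, matching the table.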

A technical support center for robust method comparison studies

This resource provides troubleshooting guides and FAQs to help researchers, scientists, and drug development professionals effectively determine sample size and select samples for method comparison studies, with a specific focus on minimizing systematic error.

FAQs: Fundamentals of Sample Size

FAQ 1: Why is sample size critical in method comparison studies? An adequately sized sample is fundamental for two primary reasons [37]:

- To Detect the Effect of Interest: A sample must be large enough to detect the "effect" or difference of clinical importance (e.g., the systematic error between two methods). A sample that is too small can easily fail to detect a clinically important effect (Type II error). Conversely, an excessively large sample may be wasteful and can detect differences that are statistically significant but clinically unimportant [37] [38].

- To Represent the Target Population: The sample must be large enough to effectively represent the target patient population. Heterogeneous populations generally require larger sample sizes to ensure the results are accurate and generalizable [37].

FAQ 2: What are the four essential pieces of information I need to estimate sample size? Before consulting a statistician or software, you should have preliminary estimates for the following four parameters [37] [38] [39]:

- Significance Level (α): The probability of rejecting a true null hypothesis (Type I error), often set at 0.05. This is the risk you are willing to take of concluding a difference exists when it does not [38].

- Power (1-β): The probability of correctly rejecting a false null hypothesis. A common standard is 80% or 90%, meaning you have an 80% or 90% chance of detecting an effect if it truly exists [37] [39].

- Magnitude of Effect (Effect Size): The difference you are trying to detect. For method comparison, this is often the systematic error (bias) that is considered clinically significant. Smaller, more subtle biases require larger samples to detect [37].

- Variability: The standard deviation of your variable of interest. Greater variability in your data requires a larger sample size to distinguish the signal (the effect) from the noise (the variability) [37].

FAQ 3: What is a practical minimum sample size for a method comparison experiment? A minimum of 40 different patient specimens is a common recommendation [4]. The quality and range of these specimens are often more important than a very large number. Specimens should be carefully selected to cover the entire working range of the method [4]. Some scenarios, such as assessing method specificity with different measurement principles, may require 100 to 200 specimens to identify sample-specific interferences [4].

FAQs: Advanced Planning & Troubleshooting

FAQ 4: How do I select patient specimens to ensure they cover the clinical range?

- Strategy: Do not use random patient specimens received by the laboratory. Instead, purposively select specimens based on their known concentrations to ensure coverage from low to high medical decision levels [4].

- Justification: The quality of the experiment depends more on obtaining a wide range of results than on a large number of results. A wide range is crucial for reliably estimating the slope and intercept in regression analysis, which helps characterize the nature of systematic error (constant or proportional) [4] [26].
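One way to put purposive selection into practice is to divide the observed concentration range into bins and draw a few specimens from each. The sketch below is an assumption, not a method from the cited guidance: it uses equal-width bins and takes up to `per_bin` specimens spread across each bin. `select_spanning` is a hypothetical helper.

```python
def select_spanning(concentrations, n_bins=10, per_bin=4):
    """Pick specimens so that every part of the concentration range is represented."""
    lo, hi = min(concentrations), max(concentrations)
    width = (hi - lo) / n_bins
    bins = [[] for _ in range(n_bins)]
    for c in sorted(concentrations):
        # equal-width bin index; the maximum value falls into the last bin
        idx = min(int((c - lo) / width), n_bins - 1) if width > 0 else 0
        bins[idx].append(c)
    selected = []
    for members in bins:
        if not members:
            continue  # a gap in the range: consider sourcing spiked or stored samples
        step = max(len(members) // per_bin, 1)
        selected.extend(members[::step][:per_bin])  # spread picks across the bin
    return selected

picked = select_spanning(list(range(100)), n_bins=10, per_bin=4)
print(len(picked), min(picked), max(picked))
```

Empty bins flag parts of the range where routine specimens are scarce, which is exactly where a random draw would leave the regression poorly anchored.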

FAQ 5: My correlation coefficient (r) is low in regression analysis. What does this mean for my sample? A low correlation coefficient (e.g., below 0.975 or 0.99) primarily indicates that the range of your data is too narrow to provide reliable estimates of the slope and intercept using ordinary linear regression [26]. It does not, by itself, indicate the acceptability of method performance.

- Corrective Action: The solution is not to collect more samples at the same concentrations, but to expand the concentration range of your specimens. If expanding the range is impossible, consider using statistical techniques like Deming or Passing-Bablok regression, which are better suited for data with a narrow range or with errors in both methods [26].
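The check and the fallback described above can be sketched in Python. The 0.99 threshold is taken from the text; the Deming error-variance ratio `delta = 1.0` (equal imprecision in both methods) is an assumption you should replace with a ratio estimated from replicate data. The function names are illustrative.

```python
import math

def _moments(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return mx, my, sxx, syy, sxy

def pearson_r(x, y):
    _, _, sxx, syy, sxy = _moments(x, y)
    return sxy / math.sqrt(sxx * syy)

def range_is_adequate(x, y, threshold=0.99):
    """True if r suggests ordinary least squares estimates are reliable."""
    return pearson_r(x, y) >= threshold

def deming(x, y, delta=1.0):
    """Deming regression; delta = variance of y errors / variance of x errors."""
    mx, my, sxx, syy, sxy = _moments(x, y)
    diff = syy - delta * sxx
    b = (diff + math.sqrt(diff ** 2 + 4 * delta * sxy ** 2)) / (2 * sxy)
    a = my - b * mx
    return a, b

x = [50, 100, 150, 200, 250, 300]
y = [2.0 + 1.03 * v for v in x]
print(range_is_adequate(x, y), deming(x, y))
```

The sums are left unscaled because the $1/(n-1)$ factors cancel in both the correlation and the Deming slope.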

FAQ 6: How can I minimize the impact of systematic error from the very beginning?

- Calibration: Calibrate your equipment or entire procedure using a known reference quantity. This is the most reliable way to reduce systematic errors [2].

- Experimental Design: Analyze patient specimens over several different analytical runs (a minimum of 5 days is recommended) to minimize systematic errors that might occur in a single run [4].

- Normalization: In high-throughput studies (e.g., using 1536-well plates), systematic errors like row, column, or edge effects can be minimized post-hoc using normalization techniques that combine linear normalization and non-parametric regression (LOESS) [29].
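The normalization cited in [29] combines linear normalization with LOESS, which is too involved for a short example. As a simpler stand-in that illustrates the same idea of removing additive row and column effects from a plate, the sketch below uses Tukey's median polish. This is a different, named technique, not the method of [29]; `median_polish` and its iteration count are illustrative choices.

```python
from statistics import median

def median_polish(plate, n_iter=10):
    """Tukey median polish: strip additive row/column effects, return residuals."""
    resid = [list(row) for row in plate]
    n_rows, n_cols = len(resid), len(resid[0])
    for _ in range(n_iter):
        for r in range(n_rows):
            m = median(resid[r])  # row effect
            resid[r] = [v - m for v in resid[r]]
        for c in range(n_cols):
            m = median(resid[i][c] for i in range(n_rows))  # column effect
            for i in range(n_rows):
                resid[i][c] -= m
    return resid

# A 3x3 plate with pure row and column gradients plus one genuine signal at (1, 1)
plate = [[10 + 2 * r + 3 * c for c in range(3)] for r in range(3)]
plate[1][1] += 50
for row in median_polish(plate):
    print(row)
```

Because medians are used instead of means, a strong signal in one well does not get smeared into its row and column, so after polishing only that well stands out.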

Data Presentation: Key Parameters & Calculations

Table 1: Fundamental Parameters for Sample Size Estimation [37] [38] [39]

| Parameter | Symbol | Common Standard(s) | Role in Sample Size |
|---|---|---|---|
| Significance Level | α | 0.05 (5%) | A stricter level (e.g., 0.01) reduces Type I error risk but requires a larger sample. |
| Statistical Power | 1-β | 0.80 (80%) | Higher power (e.g., 0.90) increases the chance of detecting a true effect but requires a larger sample. |
| Effect Size | Δ | Minimal Clinically Important Difference | A smaller, harder-to-detect effect requires a larger sample. |
| Variability | σ | Standard Deviation from prior data | Greater variability requires a larger sample to distinguish the effect from background noise. |

Table 2: Common Sample Size Formulas for Different Study Types [38]

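| Study Type | Formula | Variable Explanations |
|---|---|---|
| Comparing Two Means | $n = \dfrac{2(Z_{\alpha/2} + Z_{1-\beta})^2 \sigma^2}{d^2}$ | $n$ = sample size per group; $\sigma$ = pooled standard deviation; $d$ = difference between means; $Z_{\alpha/2}$ = 1.96 for α = 0.05; $Z_{1-\beta}$ = 0.84 for 80% power. |
| Comparing Two Proportions | $n = \dfrac{(Z_{\alpha/2} + Z_{1-\beta})^2 \left[p_1(1-p_1) + p_2(1-p_2)\right]}{(p_1 - p_2)^2}$ | $n$ = sample size per group; $p_1$, $p_2$ = event proportions in each group; $\bar{p} = (p_1+p_2)/2$ is the average proportion, used in the pooled-variance variant of this formula. |
| Diagnostic Studies (Sensitivity/Specificity) | $n = \dfrac{Z_{\alpha/2}^2 \, P(1-P)}{D^2}$ | $P$ = expected sensitivity or specificity; $D$ = allowable error (the desired margin of error of the estimate). |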
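The three formulas in Table 2 translate directly into Python using the standard library's normal quantile function. This is a minimal sketch; the function names are illustrative, and results are rounded up to the next whole subject, as is conventional.

```python
import math
from statistics import NormalDist

def _z(p):
    """Standard normal quantile, e.g. _z(0.975) is about 1.96."""
    return NormalDist().inv_cdf(p)

def n_two_means(sigma, d, alpha=0.05, power=0.80):
    """Per-group n to detect a mean difference d given pooled SD sigma."""
    z = _z(1 - alpha / 2) + _z(power)
    return math.ceil(2 * z ** 2 * sigma ** 2 / d ** 2)

def n_two_proportions(p1, p2, alpha=0.05, power=0.80):
    """Per-group n to detect a difference between proportions p1 and p2."""
    z = _z(1 - alpha / 2) + _z(power)
    return math.ceil(z ** 2 * (p1 * (1 - p1) + p2 * (1 - p2)) / (p1 - p2) ** 2)

def n_diagnostic(p, d, alpha=0.05):
    """n to estimate sensitivity or specificity p within +/- d."""
    z = _z(1 - alpha / 2)
    return math.ceil(z ** 2 * p * (1 - p) / d ** 2)

print(n_two_means(sigma=10, d=5))   # 63 per group
print(n_two_proportions(0.5, 0.3))  # 91 per group
print(n_diagnostic(0.9, 0.05))      # 139
```

Using exact quantiles rather than the rounded 1.96 and 0.84 can shift the result by one subject in borderline cases, so report which values you used.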

Experimental Protocols

Protocol 1: Conducting a Basic Method Comparison Study

Purpose: To estimate the systematic error (bias) between a new test method and a comparative method [4].

- Specimen Selection: Select a minimum of 40 patient specimens to cover the entire analytical range of the method [4].

- Analysis: Analyze each specimen using both the test and comparative methods. Ideally, analyze specimens over multiple days (at least 5) to capture day-to-day variability [4].

- Immediate Data Review: Graph the data as it is collected using a comparison plot (test method vs. comparative method) or a difference plot (difference vs. average). Investigate any large discrepancies immediately while specimens are still available [4] [26].

- Statistical Analysis:

- For a wide analytical range, use linear regression to obtain the slope and intercept, which help identify proportional and constant systematic error, respectively [4] [40].

- For a narrow range, calculate the average difference (bias) and the standard deviation of the differences; paired t-test calculations provide both [26].
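The narrow-range calculation can be sketched as follows. This minimal Python example returns the average difference (bias), the standard deviation of the differences, and the paired t statistic; it does not compute a p-value, so compare the t statistic against a t table with $n - 1$ degrees of freedom. The function name is illustrative.

```python
import math
from statistics import mean, stdev

def paired_bias(test, comparative):
    """Bias, SD of differences, and paired t statistic (test minus comparative)."""
    diffs = [t - c for t, c in zip(test, comparative)]
    n = len(diffs)
    bias = mean(diffs)
    sd_diff = stdev(diffs)  # sample SD, n - 1 denominator
    t_stat = bias / (sd_diff / math.sqrt(n))
    return bias, sd_diff, t_stat

bias, sd_diff, t_stat = paired_bias([11, 22, 33, 44, 55], [10, 20, 30, 40, 50])
print(f"bias={bias:.2f}  SD of differences={sd_diff:.2f}  t={t_stat:.2f}")
```

A large t statistic indicates that the bias is statistically distinguishable from zero; whether it matters clinically still depends on comparing the bias with the allowable error.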

Protocol 2: A Workflow for Systematic Error Assessment and Sample Size

The following diagram illustrates a logical workflow for integrating systematic error assessment with sample size planning in method comparison studies.

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for Method Comparison

| Item | Function in Experiment |
|---|---|
| Certified Reference Material | A sample with a known, traceable analyte concentration. Serves as the highest standard for assessing accuracy and identifying systematic error (bias) [40]. |
| Quality Control (QC) Samples | Stable materials with known expected values used in every run (e.g., on Levey-Jennings charts) to monitor ongoing precision and accuracy, and to detect shifts indicative of systematic error [40]. |
| Patient Specimens | Real-world samples that represent the biological matrix and spectrum of diseases. They are essential for assessing method performance under actual clinical conditions [4]. |
| Calibrators | Materials used to adjust the analytical instrument's response to establish a correct relationship between the signal and the analyte concentration. Incorrect calibration is a common source of proportional bias [40]. |

FAQs on Core Concepts

Q1: What is the difference between reproducibility and replicability in the context of experimental science?

In metascientific literature, these terms have specific meanings. Reproducibility refers to taking the same data, performing the same analysis, and achieving the same result. Replicability involves collecting new data using the same methods, performing the same analysis, and achieving the same result. A third concept, robustness, is when a different analysis is performed on the same data and yields a similar result [41].

Q2: Why is the stability of patient specimens a critical factor in method comparison studies?

Specimen stability directly impacts the validity of your results. Specimens should generally be analyzed by both the test and comparative methods within two hours of each other to prevent degradation, unless the specific analyte is known to have shorter stability (e.g., ammonia, lactate). Differences observed between methods may be due to variables in specimen handling rather than actual systematic analytical errors. Stability can often be improved by adding preservatives, separating serum or plasma from cells, refrigeration, or freezing [4].

Q3: What is the recommended timeframe for conducting a comparison of methods experiment?

The experiment should be conducted over several different analytical runs on different days to minimize systematic errors that might occur in a single run. A minimum of 5 days is recommended. Extending the experiment over a longer period, such as 20 days, and analyzing only 2 to 5 patient specimens per day, can provide even more robust data that aligns with long-term replication studies [4].

Q4: How can I minimize systematic errors introduced by my equipment or apparatus?

The primary method is calibration. All instruments should be calibrated, and original measurements should be corrected accordingly. This process involves performing your experimental procedure on a known reference quantity. By adjusting your apparatus or calculations until the known result is achieved, you create a calibration curve to correct measurements of unknown quantities. Using equipment with linear responses simplifies this process [27] [2].
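The calibration-curve idea above can be sketched in Python for an instrument with a linear response. This is a minimal illustration under that linearity assumption: fit measured readings against known reference values by least squares, then invert the line to correct readings of unknowns. The function names are hypothetical.

```python
def fit_calibration(known, measured):
    """Least-squares calibration line: measured = intercept + slope * known."""
    n = len(known)
    mk, mm = sum(known) / n, sum(measured) / n
    sxx = sum((k - mk) ** 2 for k in known)
    sxy = sum((k - mk) * (m - mm) for k, m in zip(known, measured))
    slope = sxy / sxx
    intercept = mm - slope * mk
    return intercept, slope

def correct(reading, intercept, slope):
    """Invert the calibration line to estimate the true quantity."""
    return (reading - intercept) / slope

# Reference standards read 3 units high plus a 5% proportional error
known = [0, 50, 100, 200]
measured = [3 + 1.05 * k for k in known]
intercept, slope = fit_calibration(known, measured)
print(intercept, slope, correct(160.5, intercept, slope))
```

The fitted intercept captures the constant (offset) error and the slope captures the proportional (scale factor) error, so one correction removes both.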

Troubleshooting Common Experimental Issues

| Problem | Possible Cause | Solution |
|---|---|---|
| Large, inconsistent differences between methods on a few specimens | Sample mix-ups, transposition errors, or specific interferences in an individual sample matrix [4]. | Perform duplicate measurements on separate sample aliquots, analyzed in different runs or at least in a different order, or re-analyze discrepant results immediately while specimens are still available [4]. |
| Consistent over- or under-estimation across all measurements | Systematic error (bias), potentially from uncalibrated apparatus, reagent impurities, or flaws in the methodology itself [27] [42]. | Calibrate all apparatus and perform a blank determination to identify and correct for impurities from reagents or vessels [27] [33]. |
| Findings from an experiment cannot be repeated by others | A lack of replicability, potentially due to analytical flexibility, vague methodological descriptions, or publication bias [41]. | Ensure full transparency by sharing detailed protocols, analysis scripts, and raw data where possible. Pre-register experimental plans to mitigate bias [41]. |