A Strategic Framework for Designing Robust Method Comparison Studies in Assay Validation


Abstract

This article provides a comprehensive, step-by-step guide for researchers and drug development professionals on designing and executing method comparison studies, a critical component of assay validation. It covers foundational principles of validation versus verification, detailed methodological planning for accuracy and precision assessment, advanced troubleshooting for handling real-world data challenges like method failure, and final verification against regulatory standards. The content synthesizes current best practices and statistical methodologies to ensure reliable, defensible, and compliant analytical results in biomedical and clinical research.

Laying the Groundwork: Core Principles of Method Comparison and Validation

In regulated laboratory environments, the generation of reliable and defensible data is paramount. Two foundational processes that underpin data integrity are method validation and method verification. Although sometimes used interchangeably, they represent distinct activities with specific applications in the assay lifecycle. A clear understanding of the difference—where validation proves a method is fit-for-purpose through extensive testing, and verification confirms it works as expected in a user's specific laboratory—is critical for regulatory compliance and operational efficiency [1] [2].

This application note delineates the strategic roles of method validation and verification within regulated laboratories, providing a clear framework for their application. It further details the design and execution of a robust method comparison study, a critical component for assessing a new method's performance against an established one during verification or method transfer.

Core Definitions and Regulatory Context

Method Validation

Method validation is a comprehensive, documented process that establishes, through extensive laboratory studies, that the performance characteristics of a method meet the requirements for its intended analytical applications [3]. It is performed when a method is newly developed or when an existing method undergoes significant change [1].

The core objective is to demonstrate that the method is scientifically sound and capable of delivering accurate, precise, and reproducible data for a specific purpose, such as a new drug submission [1].

Method Verification

Method verification, in contrast, is the process of confirming that a previously validated method performs as expected in a particular laboratory. It demonstrates that the laboratory can competently execute the method under its own specific conditions, using its analysts, equipment, and reagents [1] [3] [2].

Verification is typically required when adopting a standardized or compendial method (e.g., from USP, EP, or AOAC) [3]. The goal is not to re-establish all performance characteristics, but to provide evidence that the validated method functions correctly in the new setting.

Regulatory Guidelines

The following table summarizes key regulatory guidelines that govern method validation and verification practices.

Table 1: Key Regulatory Guidelines for Method Validation and Verification

| Guideline | Issuing Body | Primary Focus | Key Parameters Addressed |

|---|---|---|---|

| ICH Q2(R1) | International Council for Harmonisation | Global standard for analytical procedure validation [4]. | Specificity, Linearity, Accuracy, Precision, Range, Detection Limit (LOD), Quantitation Limit (LOQ) [4]. |

| USP General Chapter <1225> | United States Pharmacopeia | Validation of compendial procedures; categorizes tests and required validation data [3] [4]. | Accuracy, Precision, Specificity, LOD, LOQ, Linearity, Range, Robustness [3]. |

| FDA Guidance on Analytical Procedures | U.S. Food and Drug Administration | Method validation for regulatory submissions; expands on ICH with a focus on robustness and life-cycle management [4]. | Analytical Accuracy, Precision, Robustness, Documentation. |

Strategic Application: A Decision Framework

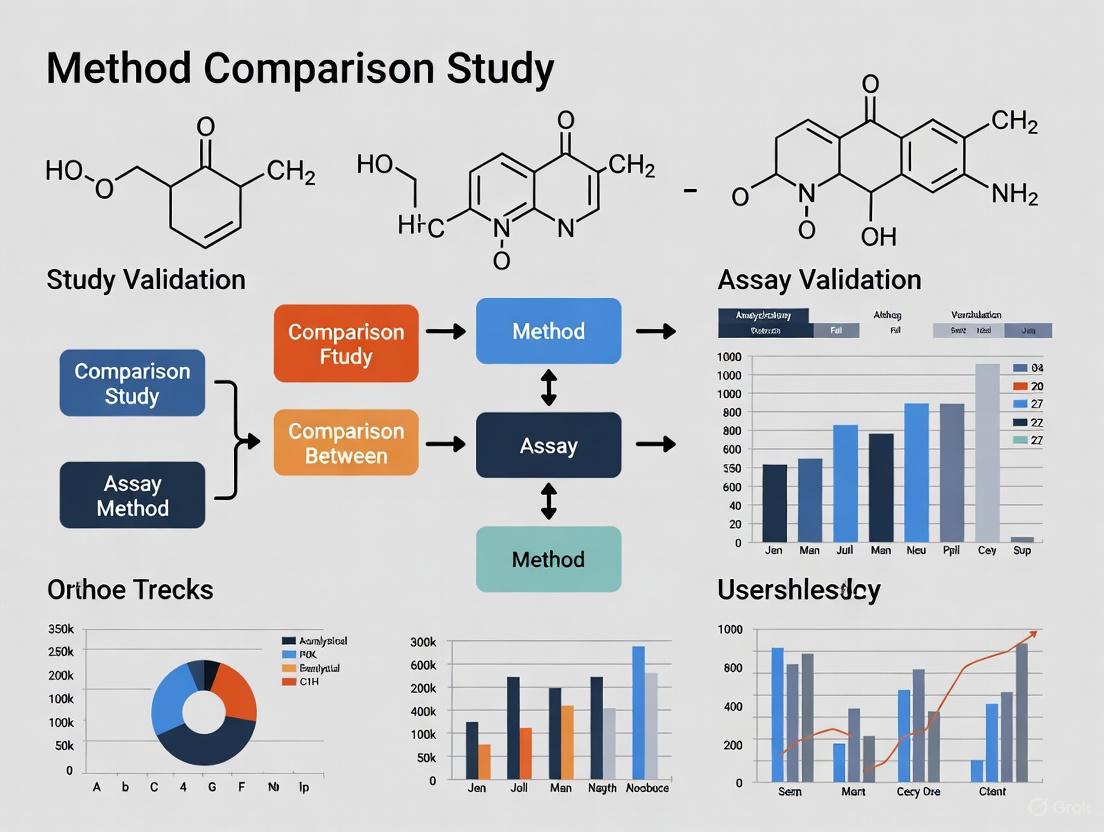

The choice between performing a full validation or a verification is strategic and depends on the method's origin and status. The following workflow diagram outlines the decision-making process for implementing a new analytical method in a regulated laboratory.

Experimental Protocols for Method Validation and Verification

Protocol for a Full Method Validation

A full validation requires a multi-parameter study to establish the method's performance characteristics as per ICH Q2(R1) and USP <1225> [3] [4]. The following table details the key experiments, their methodologies, and acceptance criteria.

Table 2: Protocol for Key Method Validation Experiments

| Validation Parameter | Experimental Methodology | Typical Acceptance Criteria |

|---|---|---|

| Accuracy | Analyze samples spiked with known quantities of the analyte (e.g., drug substance) across the specified range. Compare measured value to true value [3]. | Recovery within specified limits (e.g., 98-102%). RSD < 2% [5]. |

| Precision | 1. Repeatability: Multiple injections of a homogeneous sample by one analyst in one session. 2. Intermediate Precision: Multiple analyses of the same sample by different analysts, on different instruments, or on different days [3]. | RSD < 2% for repeatability; agreed limits for intermediate precision [5]. |

| Specificity | Demonstrate that the method can unequivocally assess the analyte in the presence of potential interferences like impurities, degradation products, or matrix components [3]. | No interference observed at the retention time of the analyte. Peak purity tests passed. |

| Linearity & Range | Prepare and analyze a series of standard solutions at a minimum of 5 concentration levels. Plot response vs. concentration and apply linear regression [3]. | Correlation coefficient (r) > 0.999. Residuals are randomly scattered. |

| Robustness | Introduce small, deliberate variations in method parameters (e.g., mobile phase pH ±0.1, column temperature ±2°C). Evaluate the impact on system suitability [3]. | All system suitability parameters remain within specified limits despite variations. |

| LOD & LOQ | Based on signal-to-noise ratio or standard deviation of the response and slope of the calibration curve [3]. | LOD: S/N ≈ 3:1. LOQ: S/N ≈ 10:1 with defined precision/accuracy. |
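
The accuracy and precision rows of Table 2 reduce to two simple calculations: percent recovery against the spiked (nominal) value and relative standard deviation (RSD) of replicates. The sketch below is illustrative only; the replicate values and the function names are hypothetical, and the limits are the example criteria quoted in the table rather than universal requirements.

```python
from statistics import mean, stdev

def percent_recovery(measured, nominal):
    """Mean recovery (%) of spiked replicates relative to the nominal value."""
    return mean(measured) / nominal * 100.0

def percent_rsd(values):
    """Relative standard deviation (%), using the sample SD (n - 1)."""
    return stdev(values) / mean(values) * 100.0

# Hypothetical replicate results for a sample spiked at 50.0 units.
replicates = [49.6, 50.3, 50.1, 49.8, 50.4, 49.9]
recovery = percent_recovery(replicates, nominal=50.0)
rsd = percent_rsd(replicates)

# Example limits from Table 2: recovery 98-102%, RSD < 2%.
passes = 98.0 <= recovery <= 102.0 and rsd < 2.0
print(f"recovery={recovery:.2f}%  RSD={rsd:.2f}%  pass={passes}")
```

In practice the acceptance limits would come from the validation protocol for the specific assay.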

Designing a Method Comparison Study

A method comparison study is a critical part of method verification or transfer. It estimates the systematic error (bias) between a new (test) method and an established (comparative) method using real patient or sample matrices [6] [7].

1. Study Design and Sample Selection:

- Sample Number: A minimum of 40, and preferably 100, patient specimens is recommended to cover the clinically meaningful range and identify matrix-related interferences [6] [7].

- Sample Analysis: Analyze samples over multiple days (at least 5) and multiple analytical runs to mimic real-world conditions. Ideally, perform duplicate measurements to minimize random variation and identify errors [6] [7].

- Sample Stability: Analyze specimens by both methods within a short timeframe (e.g., 2 hours) to prevent degradation from causing observed differences [6].

2. Data Analysis and Graphical Presentation:

- Visual Inspection: Graph the data during collection to identify discrepant results for immediate re-analysis.

- Difference Plot (Bland-Altman): Plot the difference between the test and comparative method results (y-axis) against the average of the two results (x-axis). This helps visualize bias across the concentration range [7].

- Scatter Plot: Plot test method results (y-axis) against comparative method results (x-axis). A line of equality (y=x) can be drawn to visualize deviations [6] [7].

- Statistical Analysis:

  - Avoid Inadequate Statistics: The correlation coefficient (r) and the t-test are not sufficient for method comparison. The correlation coefficient measures association rather than agreement, and a t-test only indicates whether the mean difference is statistically distinguishable from zero [7].

  - Regression Analysis: For data covering a wide range, use linear regression (e.g., Deming or Passing-Bablok) to estimate constant (y-intercept) and proportional (slope) systematic error. The systematic error at a critical decision concentration (Xc) is calculated as SE = (a + bXc) - Xc, where 'a' is the intercept and 'b' is the slope [6].

  - Bias Estimation: For a narrow concentration range, calculate the average difference (bias) between the two methods [6].
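
As an illustration of the regression step, the following sketch fits a Deming regression and evaluates SE = (a + bXc) - Xc at a decision concentration. It assumes equal error variances in the two methods (`delta = 1.0`), which is an assumption made here for simplicity and not something stated above; the data and function names are hypothetical.

```python
import math
from statistics import mean

def deming(x, y, delta=1.0):
    """Deming regression of y on x.

    delta is the assumed ratio of error variances (test / comparative);
    delta = 1.0 treats both methods as equally imprecise.
    Returns (intercept a, slope b).
    """
    n = len(x)
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x) / (n - 1)
    syy = sum((yi - my) ** 2 for yi in y) / (n - 1)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / (n - 1)
    term = syy - delta * sxx
    slope = (term + math.sqrt(term ** 2 + 4 * delta * sxy ** 2)) / (2 * sxy)
    return my - slope * mx, slope

def systematic_error(intercept, slope, xc):
    """SE = (a + b*Xc) - Xc at the decision concentration Xc."""
    return (intercept + slope * xc) - xc

# Hypothetical, noise-free data lying on y = 2 + 1.05x.
comparative = [50.0, 100.0, 150.0, 200.0, 250.0]
test_method = [2 + 1.05 * v for v in comparative]

a, b = deming(comparative, test_method)
se_at_100 = systematic_error(a, b, xc=100.0)
print(f"intercept={a:.3f} slope={b:.3f} SE(100)={se_at_100:.3f}")
```

With real data the intercept and slope would carry uncertainty, so confidence intervals (for example by bootstrap or jackknife) are normally reported alongside the point estimates.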

The Scientist's Toolkit: Essential Reagents and Materials

The following table lists key materials required for performing method validation and verification studies, particularly for chromatographic assays.

Table 3: Essential Research Reagent Solutions and Materials

| Item | Function / Application |

|---|---|

| Certified Reference Standard | Provides the known, high-purity analyte essential for preparing calibration standards to establish accuracy, linearity, and range. |

| Internal Standard (IS) | A compound added in a constant amount to samples and standards in chromatography to correct for variability in sample preparation and injection. |

| Matrix-Matched Quality Control (QC) Samples | Samples spiked with known analyte concentrations in the biological or sample matrix. Critical for assessing accuracy, precision, and recovery during validation/verification. |

| Appropriate Chromatographic Column | The stationary phase specified in the method. Its type (e.g., C18), dimensions, and particle size are critical for achieving the required separation, specificity, and robustness [5]. |

| HPLC/UHPLC-Grade Solvents and Reagents | High-purity mobile phase components (water, buffers, organic solvents) are essential to minimize baseline noise, ghost peaks, and ensure reproducible retention times. |

| System Suitability Test (SST) Solution | A reference preparation used to confirm that the chromatographic system is performing adequately at the time of the test (e.g., meets requirements for retention, resolution, tailing, and precision) [5]. |


In the field of clinical diagnostics and assay validation, the reliability of a measurement is paramount. Establishing the purpose of a method comparison study is a critical first step that frames the entire investigation, ensuring that the assessment of systematic error and bias is conducted with scientific rigor [8]. These studies are foundational to evidence-based medicine, providing a structured framework to evaluate whether a new or alternative method provides results that are comparable to an established reference [8]. A meticulously designed study purpose not only guides the experimental protocol and data analysis but also ensures the findings are transparent, objective, and repeatable, thereby supporting robust healthcare decision-making [8]. This document outlines detailed application notes and protocols for establishing the study purpose and conducting the subsequent analysis, framed within the broader context of designing a method comparison study for assay validation research.

Defining the Research Question and Objectives

Frameworks for Quantitative Clinical Research

The formulation of a precise and structured research question is the cornerstone of any successful method comparison study. A well-defined question guides every subsequent stage, from literature search and study design to data extraction and synthesis [8]. For quantitative studies focused on therapy, diagnosis, or prognosis, the PICO framework (Population, Intervention, Comparator, Outcome) is the most frequently used tool [8]. Its adaptability makes it suitable for a wide range of research questions in clinical accuracy studies.

Table 1: Adapting the PICO Framework for Method Comparison Studies

| Structure | Meaning | Application in Method Comparison Studies |

|---|---|---|

| P (Population/Patient) | The type of sample, matrix, or patient population under investigation. | Define the specific sample types (e.g., human serum, whole blood, tissue biopsies) and the relevant clinical population (e.g., healthy volunteers, patients with a specific disease staging). |

| I (Intervention/Index) | The new method or assay whose accuracy is being evaluated. | The novel diagnostic platform, a new reagent lot, or a modified assay protocol. |

| C (Comparator) | The reference standard or established method against which the new method is compared. | The gold-standard method, an FDA-approved assay, or the current standard of practice in the clinical laboratory. |

| O (Outcome) | The metrics used to quantify the agreement, error, and bias between the two methods. | Primary outcomes: Systematic error (bias), mean difference, correlation coefficients (e.g., Pearson's r). Secondary outcomes: Limits of agreement, total error, clinical decision point concordance. |

For more complex studies, the PICOTTS extension (Population, Intervention, Comparator, Outcome, Time, Type of Study, Setting) can be employed to provide additional granularity regarding the study duration, design, and environmental context [8].

Core Objectives in Assessing Systematic Error and Bias

The primary objective of a method comparison study is to quantitatively assess the agreement between two measurement procedures. This overarching goal can be broken down into several core analytical objectives:

- Quantify Systematic Error (Bias): Determine the constant and proportional differences between the new method and the reference method across the assay's measurable range [9].

- Evaluate Clinical Concordance: Assess the agreement at critical medical decision thresholds to ensure the new method does not alter patient classification [10].

- Identify Sources of Variation: Diagnose the components of variance, distinguishing systematic bias from random error to guide method improvement [9].

- Establish Acceptability: Judge whether the observed bias falls within pre-defined, clinically acceptable limits based on biological variation or clinical requirements.

Quantitative Data Analysis Methods and Protocols

Selecting the appropriate statistical methods is critical for a valid interpretation of method comparison data. The choice of method depends on the data type, distribution, and the specific research question [9]. The following table summarizes the core quantitative techniques used in assay validation.

Table 2: Quantitative Data Analysis Methods for Method Comparison Studies [9]

| Method Type | Primary Use Case | Key Outputs | Underlying Principle |

|---|---|---|---|

| Descriptive Analysis | Initial data exploration and summary. | Mean difference (Bias), Standard Deviation of differences, Correlation Coefficient (r). | Describes the central tendency and dispersion of the differences between paired measurements. |

| Bland-Altman Analysis | Visualizing and quantifying agreement between two quantitative methods. | Mean bias, Limits of Agreement (LoA = Bias ± 1.96*SD), plot of differences vs. averages. | Assesses the degree of agreement by analyzing the distribution of differences between paired measurements. |

| Passing-Bablok Regression | Method comparison where both methods have error; robust to outliers. | Intercept (constant bias), slope (proportional bias). | A non-parametric regression method. |

| Deming Regression | Method comparison where both methods have measurable error. | Intercept (constant bias), slope (proportional bias), confidence intervals for both. | A type of errors-in-variables regression that accounts for measurement error in both methods. |

| Equivalence Testing | To statistically prove that two methods are equivalent within a pre-specified margin. | Confidence intervals for the mean difference; conclusion of equivalence/non-inferiority. | Uses a reversal of the null hypothesis to test if the mean difference lies within a clinically acceptable range (the equivalence margin). |
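
To make the equivalence-testing row concrete, the sketch below checks whether a 90% confidence interval for the mean paired difference lies entirely inside a pre-specified margin, which corresponds to two one-sided tests at alpha = 0.05. It uses a normal quantile as a large-sample approximation (an assumption made to keep the example to the standard library); with small N a t quantile would be the usual choice. The data, margin, and function name are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev

def equivalence_ci(differences, margin, alpha=0.05):
    """Large-sample equivalence check on paired differences.

    Builds a (1 - 2*alpha) confidence interval for the mean difference
    using a normal quantile and reports whether it lies within
    (-margin, +margin).
    """
    n = len(differences)
    d_bar = mean(differences)
    se = stdev(differences) / math.sqrt(n)
    z = NormalDist().inv_cdf(1 - alpha)  # one-sided quantile
    lower, upper = d_bar - z * se, d_bar + z * se
    return lower, upper, (-margin < lower and upper < margin)

# Hypothetical paired differences (index - reference) for 40 samples.
diffs = [0.1, -0.2, 0.3, 0.0, -0.1, 0.2, -0.3, 0.1] * 5

lower, upper, equivalent = equivalence_ci(diffs, margin=0.5)
print(f"90% CI = ({lower:.3f}, {upper:.3f}); equivalent within ±0.5: {equivalent}")
```

The margin must be fixed before the data are examined, typically from clinical requirements or biological variation.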

Detailed Experimental Protocol: Bland-Altman Analysis

The Bland-Altman plot is a cornerstone technique for assessing systematic error and is widely used in clinical chemistry and laboratory medicine.

Protocol 1: Bland-Altman Analysis for Bias Assessment

Objective: To visualize and quantify the agreement between two clinical measurement methods and estimate the systematic error (bias).

Materials and Reagents:

- A set of N patient samples that span the clinical reporting range of the assay (typically N ≥ 40 is recommended).

- All reagents, calibrators, and controls for both the index and comparator methods.

- Standard laboratory equipment (pipettes, centrifuges, water baths, etc.).

- Statistical software capable of generating scatter plots and performing basic calculations (e.g., R, Python, GraphPad Prism, MedCalc).

Procedure:

- Sample Preparation and Measurement:

  - a. Select and aliquot patient samples to ensure they are homogeneous and sufficient for duplicate testing on both platforms.

  - b. Analyze each sample using both the index method (new) and the comparator method (reference) in a randomized sequence to avoid systematic drift.

  - c. Record the paired results (`Value_Index`, `Value_Reference`) for each sample.

- Data Calculation:

  - a. For each sample pair, calculate the average of the two measurements: `Average = (Value_Index + Value_Reference) / 2`.

  - b. For each sample pair, calculate the difference between the two measurements: `Difference = Value_Index - Value_Reference`.

- Statistical Analysis:

  - a. Calculate the mean of the differences. This is the estimated systematic bias: `Bias = Σ(Differences) / N`.

  - b. Calculate the standard deviation (SD) of the differences.

  - c. Calculate the 95% Limits of Agreement (LoA): `Upper LoA = Bias + 1.96 * SD` and `Lower LoA = Bias - 1.96 * SD`.

- Visualization (Plot Generation):

  - a. Create a scatter plot with the Average of the two methods on the X-axis and the Difference on the Y-axis.

  - b. Draw a solid horizontal line at the mean Bias.

  - c. Draw dashed horizontal lines at the Upper LoA and Lower LoA.

  - d. (Optional) Add a regression line of differences on averages to check for a relationship between bias and magnitude.

Interpretation:

- The Bias indicates the average systematic difference between the two methods. A positive bias means the new method consistently gives higher results than the reference.

- The Limits of Agreement define the range within which 95% of the differences between the two methods are expected to lie.

- The plot should be examined for any patterns, such as widening spread (heteroscedasticity) or a trend in the bias across the measurement range, which suggest more complex errors.
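
The calculation steps of Protocol 1 can be sketched in a few lines. This is a minimal illustration using only the standard library; the paired values and the function name are hypothetical, and plotting is left out so the example stays self-contained.

```python
from statistics import mean, stdev

def bland_altman(index_values, reference_values):
    """Return per-pair averages and differences, the bias, and 95% limits of agreement."""
    pairs = list(zip(index_values, reference_values))
    averages = [(i + r) / 2 for i, r in pairs]
    differences = [i - r for i, r in pairs]
    bias = mean(differences)
    sd = stdev(differences)  # sample SD of the differences
    return {
        "averages": averages,
        "differences": differences,
        "bias": bias,
        "sd": sd,
        "lower_loa": bias - 1.96 * sd,
        "upper_loa": bias + 1.96 * sd,
    }

# Hypothetical paired results (far fewer than the N >= 40 recommended above).
index_results = [10.2, 20.5, 30.1, 40.8, 50.4]
reference_results = [10.0, 20.0, 30.0, 40.0, 50.0]

ba = bland_altman(index_results, reference_results)
print(f"bias={ba['bias']:.3f}  LoA=({ba['lower_loa']:.3f}, {ba['upper_loa']:.3f})")
```

The `averages` and `differences` lists are the x and y coordinates for the plot; the bias and the two limits give the horizontal lines.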

The Scientist's Toolkit: Essential Research Reagents and Materials

A successful method comparison study relies on the consistent quality and appropriate selection of materials. The following table details key reagent solutions and their critical functions in the context of assay validation.

Table 3: Essential Research Reagent Solutions for Method Comparison Studies

| Item Category | Specific Examples | Function in the Experiment |

|---|---|---|

| Calibrators | Master calibrator sets, traceable reference standards. | To establish a calibration curve for both the index and reference methods, ensuring both instruments are standardized to the same scale before sample measurement. |

| Quality Control (QC) Materials | Commercial QC sera at multiple levels (low, normal, high), third-party controls. | To monitor the precision and stability of both measurement systems throughout the testing period, verifying that they are operating within pre-defined performance specifications. |

| Patient Sample Panels | Fresh/frozen human serum, plasma, whole blood, or tissue extracts. | To serve as the core test material for the comparison. The panel should be commutable (behave like fresh patient samples) and cover the analytical range from low to high pathological values. |

| Assay-Specific Reagents | Enzymes, antibodies, substrates, buffers, probes, dyes. | To perform the core analytical reaction of the assay. Consistent lot numbers for all reagents should be used throughout the study to minimize a source of variation. |

| Sample Processing Tools | Pipettes (manual/electronic), pipette tips, microcentrifuge tubes, plate readers. | To ensure accurate and precise volumetric handling, sample preparation, and signal detection, which are critical for obtaining reliable and reproducible paired results. |


Data Visualization and Color Contrast Standards

Effective data visualization is key to communicating the results of a method comparison study. Adhering to accessibility standards ensures that charts and graphs are interpretable by all audiences, including those with color vision deficiencies [11].

Adherence to WCAG Contrast Guidelines

The Web Content Accessibility Guidelines (WCAG) set a benchmark for color contrast. For standard text and data visualizations, a contrast ratio of at least 4.5:1 is required (Level AA), while a higher ratio of 7:1 is recommended for Level AAA compliance [11]. This is crucial for elements like axis labels, data point legends, and trend lines. Sufficient contrast is not just about accessibility; it also improves overall readability in various lighting conditions and on different display devices [10].

Application in Scientific Diagrams

When creating diagrams, such as flowcharts with Graphviz, explicit color choices must be made to ensure clarity.

- Foreground vs. Background: Avoid using the same or similar colors for foreground elements (arrows, symbols, lines) and the background. The specified palette (`#4285F4`, `#EA4335`, `#FBBC05`, `#34A853`, `#FFFFFF`, `#F1F3F4`, `#202124`, `#5F6368`) provides a range of high-contrast options [12].

- Node Text Contrast: For any shape (node) that contains text, the `fontcolor` must be explicitly set to contrast highly with the node's `fillcolor`. For example, use light-colored text (`#FFFFFF` or `#F1F3F4`) on dark fill colors (`#202124`, `#5F6368`, `#4285F4`) and dark-colored text (`#202124`) on light fill colors (`#FFFFFF`, `#F1F3F4`, `#FBBC05`).
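
A contrast ratio can be checked numerically before a color pair is used. The sketch below follows the WCAG 2.x definition of relative luminance and contrast ratio for sRGB hex colors; the function names are illustrative and not part of any library.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to its linear-light value."""
    c = channel_8bit / 255.0
    # 0.04045 is the sRGB breakpoint; for 8-bit inputs it gives the same
    # result as the 0.03928 value that appears in older WCAG text.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color):
    """Relative luminance of a '#RRGGBB' color."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)

def contrast_ratio(color_a, color_b):
    """Contrast ratio (lighter + 0.05) / (darker + 0.05); ranges from 1 to 21."""
    la, lb = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)

# Check a text/fill pair from the palette against the Level AA threshold.
ratio = contrast_ratio("#FFFFFF", "#202124")
print(f"{ratio:.2f}:1  meets AA (4.5:1): {ratio >= 4.5}")
```

Running each intended `fontcolor`/`fillcolor` pair through a check like this is safer than assuming a combination passes, since mid-tone fills can fall below 4.5:1 against both light and dark text.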

The following diagram illustrates a generic workflow for a method comparison study, implementing these contrast rules.

In the rigorous process of assay validation, the comparison of methods experiment is a critical step for assessing the systematic error, or inaccuracy, of a new test method relative to an established procedure [6]. The selection of an appropriate comparative method is arguably the most significant decision in designing this study, as it forms the basis for all subsequent interpretations about the test method's performance. An ill-considered choice can compromise the entire validation effort, leading to inaccurate conclusions and potential regulatory challenges. This document provides a structured framework for researchers and drug development professionals to understand the types of comparative methods, select the most suitable one for a given context, and implement a robust comparison protocol. The principles outlined here are designed to align with modern regulatory expectations, including the FDA's 2025 Biomarker Guidance, which emphasizes that while validation parameters are similar to drug assays, the technical approaches must be adapted for endogenous biomarkers [13].

Types of Comparative Methods

The term "comparative method" encompasses a spectrum of procedures, each with distinct implications for the confidence of your results. The fundamental distinction lies between a reference method and a routine method.

Reference Method

A reference method is a thoroughly validated technique whose results are known to be correct through comparison with an accurate "definitive method" and/or through traceability to standard reference materials [6]. When differences are observed between a test method and a reference method, the errors are confidently attributed to the test method. This provides the highest level of assurance in an accuracy claim.

Routine Comparative Method

A routine comparative method is an established procedure used in daily laboratory practice whose absolute correctness may not be fully documented [6]. When large, medically unacceptable differences are observed between a test method and a routine method, additional investigative experiments (e.g., recovery and interference studies) are required to determine which method is the source of the error.

Table 1: Characteristics of Comparative Method Types

| Method Type | Key Feature | Impact on Result Interpretation | Best Use Case |

|---|---|---|---|

| Reference Method | Results are traceable to a higher-order standard. | Differences are attributed to the test method. | Definitive accuracy studies and regulatory submissions. |

| Routine Method | Established in laboratory practice; relative accuracy. | Differences require investigation to identify the source of error. | Verifying consistency with a current laboratory standard. |

The following diagram illustrates the decision-making workflow for selecting the appropriate comparative method.

Experimental Protocol for Method Comparison

A robust experimental design is essential to generate reliable data for estimating systematic error. The following protocol outlines the key steps and considerations.

Specimen Selection and Handling

- Number of Specimens: A minimum of 40 different patient specimens is recommended [6]. The quality of specimens is more critical than quantity; they should cover the entire working range of the method and represent the spectrum of diseases expected in its routine application.

- Selection Strategy: Carefully select specimens based on observed concentrations to ensure a wide range of values. For methods where specificity is a concern (e.g., different chemical principles), 100-200 specimens may be needed to identify matrix-specific interferences [6].

- Stability and Handling: Analyze specimens by the test and comparative methods within two hours of each other, unless stability data supports a longer interval [6]. Define and systematize specimen handling (e.g., preservatives, centrifugation, storage) prior to the study to prevent handling-induced differences.

Analysis Protocol

- Replication: While single measurements by each method are common practice, performing duplicate measurements on different aliquots is advantageous. Duplicates help identify sample mix-ups, transposition errors, and confirm whether large differences are repeatable [6].

- Time Period: Conduct the study over a minimum of 5 days using multiple analytical runs to minimize bias from a single run. Extending the study over 20 days (with 2-5 specimens per day) aligns with long-term precision studies and incorporates more routine variation [6].

- Data Collection Order: Analyze specimens in a randomized order to avoid systematic bias related to run sequence.

Table 2: Key Research Reagents and Materials for Method Comparison Studies

| Material / Reagent | Function in the Experiment | Key Considerations |

|---|---|---|

| Patient Specimens | The core test material used for comparison across methods. | Must be stable, cover the analytical measurement range, and be clinically relevant. |

| Reference Method | Provides the benchmark for assessing the test method's accuracy. | Should be a high-quality method with documented traceability. |

| Quality Control (QC) Pools | Monitors the precision and stability of both methods during the study. | Should span low, medium, and high clinical decision levels. |

| Calibrators | Ensures both methods are properly calibrated according to manufacturer specifications. | Traceability of calibrators should be documented. |

Data Analysis and Interpretation

Graphical Analysis

The first step in data analysis is always visual inspection.

- Difference Plot: For methods expected to show 1:1 agreement, plot the difference (Test Method result - Comparative Method result) on the y-axis against the Comparative Method result on the x-axis [6]. This plot helps visualize systematic errors; points should scatter randomly around the zero line.

- Comparison Plot (Scatter Diagram): Plot the Test Method result (y-axis) against the Comparative Method result (x-axis) [6]. This is useful for visualizing the overall relationship and identifying the line of best fit, especially when 1:1 agreement is not expected.

Statistical Analysis

Statistical calculations provide numerical estimates of systematic error.

- Linear Regression: For data covering a wide analytical range, use linear regression (Y = a + bX) to estimate the slope (b, proportional error) and y-intercept (a, constant error) [6]. The systematic error (SE) at a critical medical decision concentration (Xc) is calculated as: SE = (a + bXc) - Xc.

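- Correlation Coefficient (r): Calculate 'r' primarily to assess whether the data range is wide enough for ordinary linear regression to give reliable slope and intercept estimates; an 'r' value ≥ 0.99 suggests that it is [6]. It should not be read as a measure of agreement between the methods.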

- Bias (for Narrow Range Data): For analytes with a narrow range (e.g., electrolytes), calculate the average difference (bias) between the two methods using a paired t-test [6].
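
The three calculations listed above can be sketched together: an ordinary least-squares fit with the systematic error at Xc, the correlation coefficient as a range check, and the mean paired difference with its t statistic. The data and function names are hypothetical, and the p-value lookup is omitted because the standard library has no t distribution.

```python
import math
from statistics import mean, stdev

def ols_summary(x, y, xc):
    """Ordinary least-squares fit Y = a + bX, Pearson r, and SE at Xc."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    r = sxy / math.sqrt(sxx * syy)
    return {"intercept": a, "slope": b, "r": r, "se_at_xc": (a + b * xc) - xc}

def paired_bias(x, y):
    """Mean difference (test - comparative) and the paired t statistic."""
    d = [yi - xi for xi, yi in zip(x, y)]
    bias = mean(d)
    t_stat = bias / (stdev(d) / math.sqrt(len(d)))
    return bias, t_stat

# Hypothetical paired results: comparative method (x) and test method (y).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [1.1, 2.0, 3.2, 4.1, 5.1]

fit = ols_summary(x, y, xc=4.0)
bias, t_stat = paired_bias(x, y)
range_ok = fit["r"] >= 0.99  # range check from the text, not an agreement measure
print(fit, bias, t_stat, range_ok)
```

If the range check fails, the narrow-range bias estimate, or a regression technique that allows for error in both methods, is usually the more defensible choice.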

The following workflow diagram summarizes the key steps in the analysis and interpretation phase.

Regulatory and Practical Considerations

The Context of Use (CoU) is a paramount concept emphasized by regulatory bodies and organizations like the European Bioanalysis Forum (EBF) [13]. The validation approach and the acceptability of the comparative method should be justified based on the intended use of the assay. For biomarker assays, the FDA's 2025 guidance maintains that while the validation parameters (accuracy, precision, etc.) are similar to those for drug assays, the technical approaches must be adapted to demonstrate suitability for measuring endogenous analytes [13]. It is critical to remember that this guidance does not require biomarker assays to technically follow the ICH M10 approach for bioanalytical method validation. Sponsors are encouraged to discuss their validation plans, including the choice of a comparative method, with the appropriate FDA review division early in development [13].

For researchers designing a method comparison study for assay validation, a deep understanding of key analytical performance parameters is fundamental. These parameters provide the statistical evidence required to demonstrate that an analytical procedure is reliable and fit for its intended purpose, a core requirement in drug development and regulatory submissions [14]. This document outlines the core concepts of bias, precision, Limit of Blank (LoB), Limit of Detection (LoD), Limit of Quantitation (LoQ), and linearity. It provides detailed experimental protocols for their determination, framed within the context of a method validation life cycle, which begins with defining an Analytical Target Profile (ATP) and employs a risk-based approach as emphasized in modern guidelines like ICH Q2(R2) and ICH Q14 [15].

The following workflow diagram illustrates the logical relationship and sequence for establishing these key performance parameters in an assay validation study.

Core Parameter Definitions and Statistical Formulas

Bias measures the systematic difference between a measurement value and an accepted reference or true value, indicating the accuracy of the method [14]. Precision describes the dispersion between independent measurement results obtained under specified conditions, typically divided into repeatability (within-run), intermediate precision (within-lab), and reproducibility (between labs) [15].

The limits of Blank, Detection, and Quantitation define the lower end of an assay's capabilities. The Limit of Blank (LoB) is the highest apparent analyte concentration expected to be found when replicates of a blank sample containing no analyte are tested [16]. The Limit of Detection (LoD) is the lowest analyte concentration that can be reliably distinguished from the LoB [16]. The Limit of Quantitation (LoQ) is the lowest concentration at which the analyte can be quantified with acceptable accuracy and precision, meeting predefined goals for bias and imprecision [16]. Finally, linearity is the ability of a method to elicit test results that are directly, or through a well-defined mathematical transformation, proportional to the concentration of the analyte within a given range [15].

Table 1: Summary of Key Performance Parameters

| Parameter | Definition | Sample Type | Typical Replicates (Verification) | Key Statistical Formula/Description |

|---|---|---|---|---|

| Bias | Systematic difference from a true value [14]. | Certified Reference Material (CRM) or sample vs. reference method. | 20 | \( \text{Bias} = \text{Mean}_{\text{measured}} - \text{True}_{\text{value}} \) |

| Precision | Dispersion between independent measurements [15]. | Quality Control (QC) samples at multiple levels. | 20 per level | \( \text{SD} = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n-1}} \); \( \text{CV} = \frac{\text{SD}}{\bar{x}} \times 100\% \) |

| LoB | Highest apparent concentration of a blank sample [16]. | Sample containing no analyte (blank). | 20 | \( \text{LoB} = \text{mean}_{\text{blank}} + 1.645\,\text{SD}_{\text{blank}} \) |

| LoD | Lowest concentration reliably distinguished from LoB [16]. | Low-concentration sample near expected LoD. | 20 | \( \text{LoD} = \text{LoB} + 1.645\,\text{SD}_{\text{low-concentration sample}} \) |

| LoQ | Lowest concentration quantified with acceptable accuracy and precision [16]. | Low-concentration sample at or above LoD. | 20 | \( \text{LoQ} \geq \text{LoD} \); defined by meeting pre-set bias and imprecision goals. |

| Linearity | Proportionality of response to analyte concentration [15]. | Minimum of 5 concentrations across claimed range. | 2-3 per concentration | Polynomial regression (e.g., 1st order): \( y = a + bx \) |

Detailed Experimental Protocols

Protocol for Determining Limit of Blank (LoB) and Limit of Detection (LoD)

The CLSI EP17 guideline provides a standard framework for determining LoB and LoD [16]. This protocol requires measuring replicates of both a blank sample and a low-concentration sample.

- Step 1: Prepare Samples. For LoB, use a blank sample confirmed to contain no analyte (e.g., a zero calibrator or appropriate matrix). For LoD, prepare a low-concentration sample that is expected to be slightly above the LoB [16].

- Step 2: Data Acquisition. Measure a minimum of 20 replicates each for the blank and the low-concentration sample. For a full establishment, 60 replicates are recommended to capture method variability; 20 is typical for verification [16].

- Step 3: Data Analysis. Calculate the mean and standard deviation (SD) for the blank measurements. Compute the LoB as \( \text{LoB} = \text{mean}_{\text{blank}} + 1.645\,\text{SD}_{\text{blank}} \). This is the concentration below which 95% of blank measurements are expected to fall, assuming a Gaussian distribution [16]. Next, calculate the mean and SD for the low-concentration sample. Compute the provisional LoD as \( \text{LoD} = \text{LoB} + 1.645\,\text{SD}_{\text{low-concentration sample}} \) [16].

- Step 4: Verification. Test a sample with a concentration at the provisional LoD. No more than 5% of the results (about 1 in 20) should fall below the LoB. If a higher proportion falls below, the LoD must be re-estimated using a sample with a higher concentration [16].
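The four steps above can be sketched in a few lines of Python. This is a minimal illustration of the parametric formulas quoted in Step 3, not a full EP17 implementation; the function names and the replicate values are hypothetical and were chosen only to make the arithmetic visible.

```python
from statistics import mean, stdev

Z_95 = 1.645  # one-sided 95% multiplier used in the LoB and LoD formulas


def limit_of_blank(blank_results):
    """LoB = mean_blank + 1.645 * SD_blank (assumes roughly Gaussian blanks)."""
    return mean(blank_results) + Z_95 * stdev(blank_results)


def limit_of_detection(lob, low_sample_results):
    """Provisional LoD = LoB + 1.645 * SD of the low-concentration sample."""
    return lob + Z_95 * stdev(low_sample_results)


def lod_is_verified(lob, results_at_lod, max_fraction_below=0.05):
    """Step 4 check: no more than 5% of results at the LoD may fall below the LoB."""
    below = sum(1 for r in results_at_lod if r < lob)
    return below / len(results_at_lod) <= max_fraction_below


# Hypothetical data: 20 replicates each, arbitrary concentration units.
blank = [0.0, 0.1, 0.2, 0.1, 0.0, 0.3, 0.1, 0.2, 0.0, 0.1,
         0.2, 0.1, 0.0, 0.1, 0.3, 0.2, 0.1, 0.0, 0.1, 0.2]
low = [0.6, 0.8, 0.7, 0.9, 0.5, 0.7, 0.8, 0.6, 0.7, 0.9,
       0.8, 0.6, 0.7, 0.5, 0.8, 0.7, 0.6, 0.9, 0.7, 0.8]

lob = limit_of_blank(blank)
lod = limit_of_detection(lob, low)
print(f"LoB = {lob:.3f}, provisional LoD = {lod:.3f}")
```

If `lod_is_verified` returns `False` for a sample prepared at the provisional LoD, the protocol calls for re-estimating the LoD with a higher-concentration sample.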

Protocol for Determining Limit of Quantitation (LoQ)

The LoQ is the point at which a method transitions from merely detecting an analyte to reliably quantifying it.

- Step 1: Prepare Samples. Prepare a series of samples at concentrations at or above the previously determined LoD.

- Step 2: Data Acquisition. Analyze multiple replicates (e.g., 20) for each concentration level.

- Step 3: Data Analysis. For each concentration, calculate the bias and imprecision (as %CV). The LoQ is the lowest concentration where the measured bias and %CV meet pre-defined acceptance criteria (e.g., ≤20% bias and ≤20% CV, or tighter limits based on the assay's intended use) [16]. The "functional sensitivity" of an assay, often defined as the concentration yielding a 20% CV, is closely related to the LoQ [16].
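As a rough sketch of Step 3, the search for the LoQ can be written as a scan over concentration levels from lowest to highest. The 20% limits below are the example criteria from the text; the function names and the replicate values are hypothetical, and only five replicates per level are shown for brevity.

```python
from statistics import mean, stdev


def percent_bias(results, nominal):
    """Relative bias of the mean result against the nominal concentration."""
    return (mean(results) - nominal) / nominal * 100.0


def percent_cv(results):
    """Coefficient of variation in percent."""
    return stdev(results) / mean(results) * 100.0


def estimate_loq(replicates_by_level, max_abs_bias=20.0, max_cv=20.0):
    """Return the lowest nominal concentration meeting both criteria, or None."""
    for nominal in sorted(replicates_by_level):
        results = replicates_by_level[nominal]
        bias_ok = abs(percent_bias(results, nominal)) <= max_abs_bias
        cv_ok = percent_cv(results) <= max_cv
        if bias_ok and cv_ok:
            return nominal
    return None


# Hypothetical replicates keyed by nominal concentration (arbitrary units).
levels = {
    1.0: [0.6, 1.5, 0.9, 1.4, 0.7],   # low bias but very imprecise
    2.0: [1.9, 2.2, 2.1, 1.8, 2.0],
    5.0: [5.1, 4.9, 5.0, 5.2, 4.8],
}
print("Estimated LoQ:", estimate_loq(levels))
```

Tighter limits can be passed through `max_abs_bias` and `max_cv` when the intended use of the assay demands them.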

Protocol for Verifying Linearity and Range

The linearity of a method and its corresponding reportable range are verified using a polynomial regression method, as described in CLSI EP06 [14].

- Step 1: Prepare Samples. Create a minimum of 5 concentrations that span the entire claimed analytical measurement range (AMR), from the lowest to the highest reportable value. These can be prepared by serial dilution or using certified linearity materials.

- Step 2: Data Acquisition. Analyze each concentration in duplicate or triplicate, randomizing the order of analysis to avoid systematic drift.

- Step 3: Data Analysis. Perform regression analysis on the mean measured value versus the expected concentration. First, fit a first-order (linear) model: \( y = a + bx \). Then, fit a second-order model: \( y = a + bx + cx^2 \).

- Step 4: Interpretation. The method is considered linear if the second-order coefficient ('c') is not statistically significantly different from zero. If it is significant, the relationship may be curvilinear, and the range may need to be constrained, or mathematical transformation may be required before quantification [14]. The verified range is the interval over which acceptable linearity, accuracy, and precision are confirmed.
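The significance check in Step 4 can be illustrated with a small pure-Python least-squares fit. This is a sketch under stated assumptions, not the EP06 procedure itself: it tests only the second-order coefficient with a two-sided t-test at the 5% level, uses a hard-coded table of critical values (so it works for 4 to 13 concentration levels), and the helper names and data are hypothetical.

```python
from math import sqrt

# Two-sided 5% critical values of Student's t, keyed by degrees of freedom.
T_CRIT_05 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
             6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def solve(matrix, rhs):
    """Solve a small linear system by Gauss-Jordan elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def quadratic_term_test(x, y):
    """Fit y = a + b*x + c*x^2 and return (c, t statistic for c, significant?)."""
    design = [[1.0, xi, xi * xi] for xi in x]
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(3)]
           for i in range(3)]
    xty = [sum(row[i] * yi for row, yi in zip(design, y)) for i in range(3)]
    a, b, c = solve(xtx, xty)
    residual_ss = sum((yi - (a + b * xi + c * xi * xi)) ** 2
                      for xi, yi in zip(x, y))
    df = len(x) - 3
    # Var(c) = residual variance * last diagonal element of inverse(X'X).
    inv_last_column = solve(xtx, [0.0, 0.0, 1.0])
    se_c = sqrt(residual_ss / df * inv_last_column[2])
    t_stat = c / se_c
    return c, t_stat, abs(t_stat) > T_CRIT_05[df]


# Hypothetical mean results at five relative concentration levels.
expected = [1.0, 2.0, 3.0, 4.0, 5.0]
linear_like = [1.02, 1.97, 3.03, 3.98, 5.01]
curved = [1.01, 1.94, 2.81, 3.52, 4.11]

for label, measured in (("linear-like", linear_like), ("curved", curved)):
    c, t_stat, significant = quadratic_term_test(expected, measured)
    print(f"{label}: c = {c:.4f}, t = {t_stat:.2f}, significant = {significant}")
```

A statistically significant `c` does not by itself show that the curvature matters in practice; the size of the deviation from the linear fit should still be judged against the allowable error for the assay.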

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 2: Key Research Reagent Solutions for Method Validation Studies

| Item | Function and Importance in Validation |

|---|---|

| Certified Reference Materials (CRMs) | Provide a traceable standard with a defined value and uncertainty; essential for the unambiguous determination of method bias and for establishing accuracy [14]. |

| Matrix-Matched Blank Samples | A sample (e.g., serum, buffer) identical to the test matrix but devoid of the analyte; critical for conducting LoB studies and for assessing specificity and potential matrix effects [16]. |

| Quality Control (QC) Materials | Stable materials with known concentrations (low, mid, high); used throughout the validation and during routine use to monitor method precision (repeatability and intermediate precision) and long-term stability [14]. |

| Linearity/Calibration Verification Material | A set of samples with defined concentrations spanning the entire claimed range; used to verify the analytical measurement range (AMR) and the linearity of the method [14]. |

| Stable Analyte Stocks | High-purity, stable preparations of the analyte for spiking experiments; used in recovery studies to assess accuracy and in the preparation of LoD/LoQ and linearity samples [16]. |

A rigorous method comparison study is built upon the precise determination of bias, precision, LoB, LoD, LoQ, and linearity. The experimental protocols outlined herein, grounded in CLSI and ICH guidelines, provide a roadmap for generating the high-quality data necessary to prove an analytical method is fit for its purpose. By integrating these performance parameters within a phase-appropriate, lifecycle approach and starting with a clear Analytical Target Profile, researchers can efficiently design robust assay validation studies that meet the stringent demands of modern drug development [17] [15].

Blueprint for Execution: Designing and Running Your Comparison Study

In the context of assay validation research, a method comparison study is fundamental for demonstrating that a new or modified analytical method produces results comparable to a known reference method. These studies are critical in pharmaceutical development, clinical diagnostics, and biomedical research, where measurement accuracy and reliability directly impact data integrity and decision-making. The design of these studies requires careful consideration of three interdependent components: sample size (the number of independent biological samples or subjects to include), range (the concentration interval of the analyte that the method can quantitatively measure), and replication strategy (the approach for repeated measurements to estimate precision). Proper experimental design ensures that studies yield scientifically valid, reproducible, and reliable data capable of detecting clinically or practically significant differences between methods.

The fundamental objective is to strike a balance between scientific rigor and practical feasibility. An underpowered study with insufficient sample size may fail to detect important differences between methods, while an excessively large study wastes resources and potentially exposes more subjects than necessary to experimental procedures. Similarly, an inadequate replication strategy can lead to misleading precision estimates, and an insufficient analytical range may limit the clinical utility of the method. This protocol provides detailed guidance on these three critical elements to ensure robust method comparison studies.

Determining Appropriate Sample Size

Fundamental Principles and Requirements

Sample size calculation provides an objective basis for determining the number of samples or subjects needed to achieve the study's primary objective. In method comparison studies, this typically involves detecting a specified difference between methods (effect size) with adequate statistical power. In a review of published agreement studies, every included study reported its sample size, but only about 33% gave any justification for it, and not all of those used a statistical sample size formula [18]. This is a significant gap in methodological rigor that researchers should address.

The sample size for a method comparison study depends primarily on the statistical parameter used to assess agreement and the required precision of that estimate. Studies measuring continuous endpoints (e.g., concentration values) had a median sample size of 50 (IQR: 25 to 100), while those focusing on categorical endpoints (e.g., binary outcomes) required larger median sample sizes of 119 (IQR: 50 to 271) [18]. This difference reflects the generally greater information content in continuous measurements compared to categorical data.

Table 1: Observed Sample Sizes in Agreement Studies by Statistical Method

| Statistical Method | Endpoint Type | Median Sample Size | Interquartile Range (IQR) |

|---|---|---|---|

| Bland-Altman Limits of Agreement | Continuous | 65 | 35 - 124 |

| Intraclass Correlation Coefficient (ICC) | Continuous | 42 | 27 - 65 |

| Kappa Coefficients | Categorical | 71 | 50 - 233 |

| Significance Tests (e.g., paired t-test) | Continuous | 62 | 28 - 108 |

| Correlation Coefficients | Continuous | 50 | 30 - 89 |

Practical Steps for Sample Size Calculation

Before calculating sample size, researchers must define several key parameters:

Define the Primary Statistical Analysis: The choice of statistical method for assessing agreement directly influences sample size requirements. Bland-Altman Limits of Agreement is the most common approach for continuous variables, while Kappa statistics are preferred for categorical variables [18]. Each method has different sample size requirements, as shown in Table 1.

Determine the Effect Size of Practical Significance: The effect size represents the minimum difference between methods that would be considered clinically or practically important. This is often the most challenging parameter to specify. When possible, base this value on biological variation, clinical decision points, or regulatory requirements. If specific information is unavailable, Cohen's convention of small (0.2), medium (0.5), or large (0.8) standardized effect sizes can provide initial guidance, though these arbitrary values require careful judgment regarding their appropriateness for the specific research context [19].

Specify Statistical Power and Confidence Level: Conventional values are 80% or 90% for power (the probability of detecting a true effect) and 95% for the confidence level (equivalently, a two-sided significance level of 0.05). For example, comparing the means of two groups at an effect size of 0.5 (medium) with 80% power requires a total of 128 participants (64 per group) [19].

Use Specialized Software for Calculations: Sample size calculation need not be done manually. Several specialized software tools can assist, including:

- PASS (Power Analysis and Sample Size)

- G*Power (a free statistical power analysis tool)

- PS Power and Sample Size Calculation

- R packages (e.g., pwr, BlandAltmanLeh)

Engage in Team Discussion: Research team members should discuss the calculated sample size to ensure it is appropriate for the research question, available data records, research timeline, and budgetary constraints [19].
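For readers who want to see where the figure of 64 per group comes from, the sketch below reproduces it with the Python standard library. It is an approximation, not the exact noncentral-t calculation that dedicated tools perform: it uses the normal-approximation formula for a two-sided, two-sample comparison plus a commonly used small-sample correction term, and the function name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, power=0.80, alpha=0.05):
    """Approximate per-group n for a two-sided, two-sample comparison of means.

    effect_size is the standardized difference (Cohen's d).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    n_normal = 2 * (z_alpha + z_beta) ** 2 / effect_size ** 2
    # Add z_alpha^2 / 4 to compensate for using z instead of t quantiles.
    return ceil(n_normal + z_alpha ** 2 / 4)


for d in (0.2, 0.5, 0.8):
    n = n_per_group(d)
    print(f"d = {d}: {n} per group, {2 * n} in total")
```

Because the required sample size scales with the inverse square of the effect size, halving the difference considered important roughly quadruples the number of samples, which is why the effect size deserves the most scrutiny during planning.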

Sample Size for Descriptive Studies

For studies focused on estimating population parameters (e.g., mean concentration or prevalence) rather than comparing methods, sample size calculation follows a different approach. The required sample size depends on:

- Confidence level (typically 95%)

- Margin of error (the maximum acceptable difference between the sample estimate and the true population value)

- Expected standard deviation or proportion (based on prior knowledge or pilot studies)

For example, estimating a prevalence of 15% with a margin of error of ±5% and 95% confidence requires approximately 196 samples [19].
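The prevalence example follows from the standard normal-approximation formula for a single proportion, n = z² · p(1 − p) / e². A minimal sketch, with a hypothetical function name:

```python
from math import ceil
from statistics import NormalDist


def n_for_proportion(p, margin, confidence=0.95):
    """Sample size to estimate a proportion p within +/- margin."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return ceil(z ** 2 * p * (1 - p) / margin ** 2)


print(n_for_proportion(0.15, 0.05))  # the 15% prevalence, +/-5% example
```

When no prior estimate of the proportion exists, using p = 0.5 gives the most conservative (largest) sample size for a given margin of error.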

Establishing the Analytical Range

Defining the Measurable Interval

The analytical range (also called the reportable range) represents the interval between the lowest and highest concentrations of an analyte that the method can measure with acceptable accuracy and precision. Properly defining this range is essential for ensuring the method will be clinically useful across the concentrations encountered in practice.

The range should encompass all medically important decision levels for the test. For example, cholesterol has medical decision levels at 200 mg/dL and 240 mg/dL according to NCEP recommendations, while glucose is typically interpreted at multiple decision levels (50 mg/dL for hypoglycemia, 120 mg/dL for fasting samples, 160 mg/dL for glucose tolerance tests, and higher levels for monitoring diabetic patients) [20].

Determining Limits of Quantification

The limits of quantification define the boundaries of the analytical range and include:

- Lower Limit of Quantification (LLOQ): The lowest concentration of an analyte that can be quantitatively measured with acceptable precision and accuracy

- Upper Limit of Quantification (ULOQ): The highest concentration of an analyte that can be quantitatively measured with acceptable precision and accuracy

To establish these limits, prepare samples at progressively decreasing (for LLOQ) or increasing (for ULOQ) concentrations and analyze multiple replicates (typically n ≥ 5) at each concentration. The LLOQ is the lowest concentration where both precision (CV ≤ 20%) and accuracy (mean value within ±20% of theoretical concentration) meet acceptance criteria. Similar criteria apply for ULOQ [21].

Table 2: Key Parameters for Establishing Analytical Range

| Parameter | Definition | Experimental Approach | Acceptance Criteria |

|---|---|---|---|

| Lower Limit of Quantification (LLOQ) | Lowest concentration measurable with acceptable accuracy and precision | Analyze replicates (n≥5) of decreasing concentrations | CV ≤ 20%, Accuracy within ±20% |

| Upper Limit of Quantification (ULOQ) | Highest concentration measurable with acceptable accuracy and precision | Analyze replicates (n≥5) of increasing concentrations | CV ≤ 20%, Accuracy within ±20% |

| Medical Decision Levels | Concentrations where clinical interpretation changes | Literature review, clinical guidelines | Should fall within analytical range |

| Linearity | Ability to obtain results proportional to analyte concentration | Analyze serial dilutions of high-concentration sample | R² ≥ 0.95, deviations < 5% |

Designing Replication Strategies

Purpose of Replication Experiments

Replication experiments are performed to estimate the imprecision or random error of an analytical method. All measurement methods are subject to some random variation, where repeated measurements of the same sample yield slightly different results. The purpose of replication is to quantify this variation under normal operating conditions, which is essential for understanding the inherent variability of the method and determining whether it meets performance requirements [20].

Factors Influencing Replication Design

Several critical factors must be considered when designing replication experiments:

Time Period: The duration of the experiment significantly impacts the interpretation of results.

- Within-run: Analyses performed consecutively in a single analytical run; represents the best-case scenario for method performance.

- Within-day: Analyses performed in multiple runs within the same day; captures additional sources of variation from run-to-run changes.

- Between-day: Analyses performed over multiple days (typically 20+ days); provides the most realistic estimate of total imprecision encountered in routine practice [20].

Sample Matrix: The materials present in a sample constitute its matrix (e.g., serum, urine, whole blood). Use test samples with a matrix as close as possible to the actual patient specimens. Available options include:

- Standard solutions (simpler matrix, may provide optimistic estimates)

- Control solutions (commercially available, matrix similar to patient samples)

- Patient pools (fresh patient samples, best representation of real conditions) [20]

Number of Concentrations: Test at least 2-3 different concentrations that represent medically important decision levels. For example, for cholesterol, include concentrations at 200 mg/dL and 240 mg/dL; for glucose, include levels at 50 mg/dL, 120 mg/dL, and potentially higher concentrations for diabetic monitoring [20].

Number of Replicates: A minimum of 20 replicates per concentration is generally recommended for reliable estimates of imprecision. While more replicates provide better estimates, practical considerations often limit the number to 20 [20].

Recommended Replication Protocol

The following replication strategy is recommended for comprehensive precision estimation:

Short-term Imprecision:

- Select at least 2 different control materials representing low and high medical decision concentrations.

- Analyze 20 replicates of each material within a single run or within one day.

- Calculate mean, standard deviation (SD), and coefficient of variation (CV) for each material.

- Acceptance criterion: CV < 0.25 × TEa (where TEa is the allowable total error) [20].

Long-term Imprecision:

- Analyze 1 sample of each control material on 20 different days.

- Calculate mean, SD, and CV for each material across all days.

- Acceptance criterion: CV < 0.33 × TEa [20].
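The two acceptance criteria can be checked with a short helper. The sketch below assumes TEa is expressed as a percentage so that it is directly comparable with the CV; the 10% TEa, the function names, and the control results are all hypothetical.

```python
from statistics import mean, stdev

SHORT_TERM_FRACTION = 0.25  # CV limit as a fraction of TEa, short-term
LONG_TERM_FRACTION = 0.33   # CV limit as a fraction of TEa, long-term


def percent_cv(results):
    """Coefficient of variation in percent."""
    return stdev(results) / mean(results) * 100.0


def meets_precision_goal(results, tea_percent, fraction):
    """True when the observed CV is below fraction * TEa."""
    return percent_cv(results) < fraction * tea_percent


TEA_PERCENT = 10.0  # hypothetical allowable total error

# Hypothetical control results: 20 within-run replicates, 20 daily results.
within_run = [198.0, 202.0] * 10
between_day = [195.0, 205.0, 200.0, 203.0, 197.0] * 4

print("Short-term CV %:", round(percent_cv(within_run), 2),
      "pass:", meets_precision_goal(within_run, TEA_PERCENT, SHORT_TERM_FRACTION))
print("Long-term CV %:", round(percent_cv(between_day), 2),
      "pass:", meets_precision_goal(between_day, TEA_PERCENT, LONG_TERM_FRACTION))
```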

Diagram 1: Replication Experiment Workflow. This diagram illustrates the sequential steps for designing and executing replication studies to estimate method imprecision.

Data Analysis and Interpretation

For replication experiments, calculate the following statistical parameters for each concentration level:

- Mean (x̄): Average of all measurements

- Standard Deviation (s): Measure of dispersion around the mean

- Coefficient of Variation (CV): Relative standard deviation (CV = [s/x̄] × 100)

For duplicate measurements of patient specimens, calculate the standard deviation from the differences (d) between duplicates using the formula: s = √(Σd²/2n) [20]
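The duplicate-based formula translates directly into code. In this sketch, `pairs` is a hypothetical list of (first result, second result) tuples, one per patient specimen, and n is the number of pairs.

```python
from math import sqrt


def sd_from_duplicates(pairs):
    """Standard deviation estimated from duplicates: sqrt(sum(d^2) / (2n))."""
    n = len(pairs)
    sum_sq_diff = sum((first - second) ** 2 for first, second in pairs)
    return sqrt(sum_sq_diff / (2 * n))


# Hypothetical duplicate results for four patient specimens.
pairs = [(101.0, 103.0), (250.0, 247.0), (55.0, 56.0), (180.0, 178.0)]
print(f"SD from duplicates = {sd_from_duplicates(pairs):.3f}")
```

Because each specimen contributes only its own difference, this estimate is not inflated by the concentration differences between specimens, which is what makes it usable with patient samples spanning a range of values.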

It is useful to visualize the replication data using histograms to display the distribution of results and better understand the magnitude of random variation expected for individual measurements.

Integrated Experimental Design

Comprehensive Workflow for Method Comparison Studies

A robust method comparison study integrates sample size determination, range establishment, and replication strategies into a cohesive experimental design. The following workflow provides a structured approach:

Diagram 2: Integrated Experimental Design Workflow. This diagram shows the sequential relationship between key components in designing a method comparison study.

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Research Reagents and Materials for Method Validation Studies

| Item Category | Specific Examples | Function in Method Validation |

|---|---|---|

| Reference Materials | Certified Reference Materials (CRMs), Standard solutions | Provide known analyte concentrations for calibration and trueness assessment |

| Quality Control Materials | Commercial control sera, Patient pools, Spiked samples | Monitor method performance over time and estimate precision |

| Clinical Samples | Patient serum/plasma, Urine, CSF, Tissue homogenates | Assess method performance with real-world matrices |

| Calibrators | Standard curves with known concentrations | Establish relationship between signal response and analyte concentration |

| Matrix Blank | Analyte-free matrix (e.g., charcoal-stripped serum) | Assess specificity and detect potential interference |

| Stability Materials | Aliquots stored under various conditions | Evaluate sample stability under different storage conditions |

Proper experimental design for method comparison studies requires careful integration of sample size determination, analytical range establishment, and replication strategies. The protocols outlined in this document provide a structured approach to ensure studies are adequately powered, cover clinically relevant concentrations, and generate reliable precision estimates. By following these guidelines, researchers can produce method validation data that meets scientific and regulatory standards, ultimately supporting the development of robust analytical methods for drug development and clinical practice.

Remember that while these protocols provide general guidance, specific applications may require modifications based on the particular methodology, analyte characteristics, and intended use of the assay. Always document any deviations from standard protocols and provide rationale for design decisions.

Within the framework of a method comparison study for assay validation, the selection and handling of specimens are foundational activities that directly determine the validity and reliability of the study's conclusions. Proper practices ensure that the estimated bias between the test and comparative method accurately reflects analytical performance, rather than being confounded by pre-analytical variables. This protocol outlines detailed procedures for selecting and handling specimens to ensure stability and cover the clinically relevant range, thereby supporting the overall thesis that a well-designed method comparison is critical for robust assay validation.

Core Principles of Specimen Selection

The objective of specimen selection is to obtain a set of patient samples that will challenge the methods across the full spectrum of conditions encountered in routine practice and enable a realistic estimation of systematic error [6]. The following principles are critical:

- Covering the Clinical Range: Specimens should be carefully selected to cover the entire working range of the method, including medically important decision concentrations [7] [6]. This is more critical than the sheer number of specimens, as a wide range of test results allows for a comprehensive assessment of method comparability.

- Representing Pathological Diversity: The specimen pool should represent the spectrum of diseases and conditions expected during the routine application of the method [6]. This helps identify potential interferences specific to certain patient populations.

- Utilizing Fresh Patient Specimens: The use of fresh patient specimens is preferred over stored or spiked samples, as they best represent the matrix and potential interferents encountered in real-world testing [7].

Specimen Handling and Stability Protocols

Maintaining specimen integrity from collection through analysis is paramount. Differences observed between methods should be attributable to analytical systematic error, not specimen degradation.

Stability and Timing

- Stability Definition: Specimens should generally be analyzed by both the test and comparative method within two hours of each other to minimize the effects of analyte deterioration [7] [6]. For tests with known shorter stability (e.g., ammonia, lactate), this window must be significantly shortened.

- Extended Studies: For comparison studies extended over multiple days, specimen handling must be carefully defined and systematized. Stability can be improved for some tests by adding preservatives, separating serum or plasma from cells, refrigeration, or freezing [6]. The chosen stabilization method must be validated and applied consistently.

Sample Handling Workflow

The following diagram illustrates the critical path for specimen handling in a method comparison study.

Experimental Design and Protocol

A robust experimental design minimizes the impact of random variation and ensures that systematic error is accurately estimated.

Specimen Volume and Replication

- Sample Size: A minimum of 40 different patient specimens should be tested, with larger numbers (100-200) being preferable to identify unexpected errors due to interferences or sample matrix effects, especially when the new method uses a different principle of measurement [7] [6].

- Replicate Measurements: While single measurements by each method are common practice, performing duplicate measurements is highly advantageous. Duplicates should be two different aliquots analyzed in different runs or at least in a randomized order—not simple back-to-back replicates. This provides a check for sample mix-ups, transposition errors, and other mistakes, and helps confirm whether large observed differences are real or artifactual [6].

Study Duration and Sample Analysis

- Time Period: The experiment should be conducted over several different analytical runs on different days to minimize the impact of systematic errors occurring in a single run. A minimum of 5 days is recommended, but extending the study over a longer period (e.g., 20 days) while analyzing fewer specimens per day is preferable [6].

- Sample Sequence: The sample analysis sequence should be randomized to avoid carry-over effects and other sequence-related biases [7].
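One way to put the multi-day, randomized design into practice is to generate the run plan programmatically before the study starts. The sketch below is an illustration only: the specimen identifiers, the function name, and the fixed seed are hypothetical, and it distributes 40 specimens evenly over 5 days with a random analysis order within each day.

```python
import random


def build_run_plan(specimen_ids, n_days=5, seed=2024):
    """Randomly assign specimens to days; list order is the analysis order."""
    rng = random.Random(seed)  # fixed seed so the plan can be reproduced
    shuffled = list(specimen_ids)
    rng.shuffle(shuffled)
    # Deal specimens out to days in turn so daily workloads differ by at most one.
    return {day + 1: shuffled[day::n_days] for day in range(n_days)}


specimens = [f"S{i:03d}" for i in range(1, 41)]  # hypothetical specimen IDs
plan = build_run_plan(specimens)
for day, ids in plan.items():
    print(f"Day {day}: {', '.join(ids)}")
```

Calling the function with `n_days=20` spreads the same specimens over the longer period with fewer per day. If duplicates are measured, a second plan generated with a different seed gives an independent order for the second aliquots.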

The table below summarizes the key quantitative parameters for designing the specimen selection and handling protocol.

Table 1: Specimen Selection and Handling Protocol Specifications

| Parameter | Minimum Recommendation | Enhanced Recommendation | Comments |

|---|---|---|---|

| Number of Specimens | 40 | 100 - 200 | Larger numbers help assess method specificity and identify matrix effects [7] [6]. |

| Clinical Range Coverage | Cover medically important decision points | Even distribution across the entire reportable range | Carefully select specimens based on observed concentrations [6]. |

| Analysis Stability Window | Within 2 hours | As short as possible for labile analytes | Applies to the time between analysis by the test and comparative method [7] [6]. |

| Study Duration | 5 days | 20 days | Mimics real-world conditions and incorporates more routine variation [6]. |

| Replicate Measurements | Single measurement | Duplicate measurements | Duplicates are from different aliquots, analyzed in different runs/order [6]. |

| Sample State | Fresh patient specimens | - | Avoids changes associated with storage; use spiked samples only for supplementation [7]. |

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key materials and reagents essential for executing the specimen handling protocols in a method comparison study.

Table 2: Essential Materials for Specimen Handling and Stability

| Item | Function & Application |

|---|---|

| Validated Sample Collection Tubes | Ensures sample integrity from the moment of collection. Tubes must be compatible with both the test and comparative methods (e.g., correct anticoagulant, no interfering substances). |

| Aliquoting Tubes/Vials | For dividing the primary sample into portions for analysis by the two methods and for any repeat testing. Must be made of materials that do not leach or adsorb the analyte. |

| Stable Reference Materials/Controls | Used to verify the calibration and ongoing performance of both the test and comparative methods throughout the study period. |

| Documented Preservatives | Chemical additives (e.g., sodium azide, protease inhibitors) used to extend analyte stability for specific tests, following validated protocols. |

| Temperature-Monitored Storage | Refrigerators (2-8°C) and freezers (-20°C or -70°C) with continuous temperature logging to ensure specimen stability when immediate analysis is not possible. |

In the context of a method comparison study for assay validation, time and run-to-run variation are critical components of the data collection protocol. Incorporating these factors is essential for robust method evaluation, as it ensures that the assessment of a candidate method's performance (e.g., its bias relative to a comparative method) reflects the typical variability encountered in routine laboratory practice. A well-designed protocol that accounts for these sources of variation increases the reliability and generalizability of the study's conclusions, ultimately supporting confident decision-making in drug development and clinical research.

Protocol for Data Collection

Core Design Considerations

Table 1: Protocol for Integrating Time and Run-to-Run Variation

| Protocol Component | Detailed Methodology | Rationale & Key Parameters |

|---|---|---|

| Time Period | Conduct the study over a minimum of 5 days, and ideally extend it to 20 days or longer [6]. Perform analyses in several separate analytical runs on different days [6]. | This design minimizes the impact of systematic errors that could occur in a single run and captures long-term sources of variation, providing a more realistic estimate of method performance [6]. |

| Run-to-Run Variation | Incorporate a minimum of 5 to 8 independent analytical runs conducted over the specified time period. Within each run, analyze a unique set of patient specimens [22]. | Using multiple runs captures the random variation inherent in the analytical process itself, from factors like reagent re-constitution, calibration, and operator differences. |

| Sample Replication | For each unique patient sample within a run, perform duplicate measurements. Ideally, these should be from different sample cups analyzed in a different order, not immediate back-to-back replicates [6]. | Duplicates provide a check for measurement validity, help identify sample mix-ups or transposition errors, and allow for the assessment of within-run repeatability [6]. |

| Specimen Selection & Stability | Select a minimum of 40 different patient specimens to cover the entire working range of the method [6]. Analyze specimens by the test and comparative methods within two hours of each other, unless specimen stability requires a shorter window [6]. | A wide concentration range is more important than a large number of specimens for reliable statistical estimation. Simultaneous (or near-simultaneous) analysis ensures observed differences are due to analytical error, not real physiological changes [6] [22]. |

Experimental Workflow

The following diagram illustrates the logical workflow for a data collection protocol that incorporates time and run-to-run variation.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Reagent Solutions and Materials for Method Comparison Studies

| Item | Function in the Protocol |
|---|---|
| Candidate Method Reagents | The new test reagents (e.g., specific reagent lots) whose performance is being evaluated against a comparative method. Their stability over the study duration is critical [23]. |
| Comparative Method Reagents | The reagents used by the established, reference, or currently in-use method. These serve as the benchmark for comparison [6] [24]. |
| Characterized Patient Specimens | A panel of 40 or more unique patient samples that span the analytical measurement range and represent the expected pathological conditions. These are the core "test subjects" for the method comparison [6] [22]. |
| Quality Control Materials | Materials with known target values analyzed at the beginning and/or end of each run to verify that both the candidate and comparative methods are performing within acceptable parameters before study data is accepted [6]. |
| Data Management System (e.g., Validation Manager) | Specialized software used to plan the study, record instrument and reagent lot information, import results, automatically manage data pairs, perform statistical calculations, and generate reports [23]. |

In assay validation research, method comparison studies are fundamental for determining whether a new measurement method (test method) can satisfactorily replace or be used interchangeably with an existing procedure (comparative method). These studies are critical in pharmaceutical development, clinical chemistry, and biomarker research, where accurate and reliable measurements directly impact research conclusions and therapeutic decisions. The core question addressed is whether two methods provide equivalent results without affecting experimental outcomes or the scientific validity of data [7] [6].

Proper statistical analysis in method comparison moves beyond simple correlation to quantify agreement and identify specific systematic errors. While correlation analysis examines whether two variables are related, it cannot determine whether the methods agree [25]. As demonstrated in a glucose measurement example, two methods can have a perfect correlation (r = 1.00) yet exhibit substantial, clinically unacceptable differences [7]. Similarly, t-tests often fail to detect clinically meaningful differences, especially with small sample sizes [7]. Consequently, specialized statistical approaches are required, primarily including Bland-Altman analysis, Deming regression, and Passing-Bablok regression, each with specific applications and underlying assumptions [26] [25] [27].
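The gap between correlation and agreement can be illustrated with a minimal sketch. The values below are invented for illustration (they are not the glucose data from the cited example), and the assumed test method carries a 25% proportional error plus a constant offset of 0.5 units.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient computed from first principles."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    syy = sum((b - y_bar) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical results from the comparative method (illustrative values only).
comparative = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
# Assumed test method: 25% proportional error plus a constant offset of 0.5.
test = [1.25 * value + 0.5 for value in comparative]

r = pearson_r(comparative, test)
differences = [t - c for t, c in zip(test, comparative)]
mean_difference = sum(differences) / len(differences)

print(f"Pearson r = {r:.2f}")
print(f"Mean difference (test - comparative) = {mean_difference:.2f}")
print(f"Differences range from {min(differences):.2f} to {max(differences):.2f}")
```

Because the test results are an exact linear function of the comparative results, the correlation is perfect even though every pair disagrees and the disagreement grows with concentration.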

The following table summarizes the key statistical methods used in method comparison studies, their primary applications, and fundamental assumptions.

Table 1: Statistical Methods for Method Comparison Studies

| Method | Primary Use | Key Assumptions | Data Requirements | Outputs |
|---|---|---|---|---|
| Bland-Altman Analysis [28] [25] | Assess agreement between two methods; identify systematic bias and outliers | Differences are normally distributed; no specific assumption about measurement error distribution | Paired measurements from each subject | Mean difference (bias); limits of agreement (mean ± 1.96 SD) |
| Simple Linear Regression [6] | Model relationship between methods when reference method has negligible error | Only Y variable measured with error; homoscedastic residuals | Paired measurements | Slope (proportional difference); intercept (constant difference) |
| Deming Regression [26] [29] | Estimate systematic error when both methods have measurement errors | Errors for both variables normally distributed; error ratio known or estimable | Paired measurements; error ratio or replicates for estimation | Slope with CI; intercept with CI; accounts for errors in both variables |
| Passing-Bablok Regression [30] [27] | Non-parametric method for estimating systematic error | Linear relationship; variables highly correlated; no distributional assumptions | Paired measurements covering clinical range | Robust slope and intercept with CIs; resistant to outliers |

Detailed Experimental Protocol for Method Comparison

Study Design and Sample Planning

A properly designed method comparison study requires careful experimental planning to ensure results are reliable and scientifically valid.

Sample Size Determination: A minimum of 40 patient specimens is recommended, with 100 specimens preferable to identify unexpected errors from interferences or sample matrix effects [7] [6]. For Passing-Bablok regression, sample sizes of 40-90 specimens are suggested, with at least 30 being the absolute minimum [27]. Larger sample sizes improve the precision of agreement estimates and enhance the ability to detect proportional differences between methods.

Sample Selection and Handling: Specimens should be selected to cover the entire clinically meaningful measurement range rather than simply representing random samples [7] [6]. This ensures the comparison is relevant across all values encountered in practice. Specimens should be analyzed within their stability period, preferably by both methods within 2 hours of each other, unless stability data support longer intervals [6]. Sample handling should be standardized to avoid introducing additional variability.

Measurement Protocol: The experiment should be conducted over multiple days (minimum of 5 days) to capture typical routine variability [7] [6]. When possible, duplicate measurements should be performed by both methods to minimize random variation effects and help identify sample mix-ups or transcription errors [6]. The analysis sequence should be randomized to avoid carry-over effects or systematic timing biases.
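One way to plan such a schedule is sketched below. The function name `build_run_schedule`, the specimen labels, and the even split of 40 specimens over 5 days are illustrative assumptions, not part of the cited protocols; a real study would also need to respect specimen stability and availability.

```python
import random

def build_run_schedule(specimen_ids, n_days=5, seed=0):
    """Spread specimens evenly over n_days and randomize the order within each day."""
    rng = random.Random(seed)          # fixed seed so the plan is reproducible
    ids = list(specimen_ids)
    rng.shuffle(ids)                   # random allocation of specimens to days
    schedule = {day: ids[day - 1::n_days] for day in range(1, n_days + 1)}
    for day_ids in schedule.values():
        rng.shuffle(day_ids)           # random analysis sequence within the day
    return schedule

# Hypothetical specimen labels S01..S40.
specimens = [f"S{i:02d}" for i in range(1, 41)]
schedule = build_run_schedule(specimens, n_days=5, seed=42)

for day, day_ids in schedule.items():
    print(f"Day {day}: {', '.join(day_ids)}")
```

Fixing the seed keeps the plan auditable: the same allocation can be regenerated later and compared with what was actually run.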

Data Collection Procedures

Measurement Process: Analyze each sample with both the test and comparative methods under identical conditions where possible. For reagent stability assessment, follow manufacturer specifications and validate stability under actual usage conditions [31]. Include appropriate controls to monitor assay performance throughout the study.

Data Recording and Management: Record all measurements systematically with appropriate metadata. Perform initial graphical analysis during data collection to identify discrepant results while specimens are still available for reanalysis [6].

Statistical Analysis Procedures

Bland-Altman Analysis

The Bland-Altman plot (also known as the Tukey mean-difference plot) provides a visual assessment of agreement between two measurement methods [28] [25].

Calculation Protocol:

- Calculate the mean of each pair of measurements: Mean = (Method A + Method B)/2

- Calculate the difference between each pair: Difference = Method A - Method B

- Plot the means (x-axis) against the differences (y-axis) in a scatter plot

- Calculate the mean difference (bias): d̄ = Σ(Method A - Method B)/n

- Calculate the standard deviation (SD) of the differences

- Compute Limits of Agreement: d̄ ± 1.96 × SD [25]

Interpretation Guidelines:

- The mean difference represents the systematic bias between methods

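- The limits of agreement define the range within which approximately 95% of differences between methods are expected to fall, provided the differences are roughly normally distributed

- Points outside the limits of agreement are candidate outliers that warrant investigation; about 5% of differences are expected to fall outside the limits by chance alone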

- The clinical relevance of agreement is determined by comparing the bias and limits of agreement to predefined acceptable differences based on biological variation or clinical requirements [25]

Figure 1: Bland-Altman Analysis Workflow - This diagram illustrates the step-by-step process for creating and interpreting a Bland-Altman plot to assess agreement between two measurement methods.
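The calculation steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the function names, the example data, and the acceptance limit of ±1.0 are assumptions made for demonstration, and the plotting step is omitted.

```python
import statistics

def bland_altman(method_a, method_b):
    """Return the per-pair means and differences, the bias, and the limits of agreement."""
    if len(method_a) != len(method_b):
        raise ValueError("Both methods need the same number of paired results")
    means = [(a + b) / 2 for a, b in zip(method_a, method_b)]
    differences = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.mean(differences)
    sd = statistics.stdev(differences)   # sample SD (n - 1 denominator)
    return {
        "means": means,                  # x-axis of the Bland-Altman plot
        "differences": differences,      # y-axis of the Bland-Altman plot
        "bias": bias,
        "sd": sd,
        "lower_loa": bias - 1.96 * sd,
        "upper_loa": bias + 1.96 * sd,
    }

def within_acceptance(result, max_allowed_difference):
    """True if both limits of agreement lie inside +/- max_allowed_difference."""
    return (result["lower_loa"] >= -max_allowed_difference
            and result["upper_loa"] <= max_allowed_difference)

# Hypothetical paired results (illustrative values only).
method_a = [10.2, 15.1, 20.3, 25.0, 30.4, 35.2, 40.1, 45.3]
method_b = [10.0, 15.4, 19.8, 25.3, 29.9, 35.6, 39.5, 45.0]

result = bland_altman(method_a, method_b)
print(f"Bias = {result['bias']:.3f}")
print(f"Limits of agreement = {result['lower_loa']:.3f} to {result['upper_loa']:.3f}")
print("Within assumed limit of 1.0:", within_acceptance(result, 1.0))
```

The acceptance check mirrors the last interpretation guideline: the limits of agreement are compared with a predefined acceptable difference, which must come from biological variation or clinical requirements rather than from the data themselves.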

Deming Regression

Deming regression is used when both measurement methods contain random errors, unlike simple linear regression which assumes only the Y variable has measurement error [26] [29].

Calculation Protocol:

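- Determine the error ratio (λ): λ = (SD of method X error / SD of method Y error)²

- Calculate the slope (B): Slope = [(λ × Syy - Sxx) + √((λ × Syy - Sxx)² + 4 × λ × Sxy²)] / (2 × λ × Sxy), where Sxx = Σ(x - x̄)², Syy = Σ(y - ȳ)², and Sxy = Σ(x - x̄)(y - ȳ)

- Calculate the intercept (A): Intercept = ȳ - Slope × x̄

- Compute confidence intervals for slope and intercept using jackknife or bootstrap methods [29]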

Interpretation Guidelines:

- Slope ≠1 indicates proportional bias between methods

- Intercept ≠0 indicates constant bias between methods

- If the 95% confidence interval for the slope excludes 1, or the interval for the intercept excludes 0, the corresponding deviation is statistically significant

- Weighted Deming regression should be used when measurement errors are proportional to the analyte concentration [29]
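A minimal sketch of unweighted Deming regression follows, assuming the error ratio convention given above (λ = variance of method X error divided by variance of method Y error) and the standard closed-form slope for that convention. The helper that estimates error variance from duplicates uses the usual Σd²/(2n) formula; both function names and the example data are illustrative, and confidence intervals are not computed here.

```python
import math

def error_variance_from_duplicates(rep1, rep2):
    """Estimate a method's random-error variance from duplicate results: sum(d^2) / (2n)."""
    n = len(rep1)
    return sum((a - b) ** 2 for a, b in zip(rep1, rep2)) / (2 * n)

def deming_regression(x, y, lam=1.0):
    """Unweighted Deming fit; lam = Var(x error) / Var(y error)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    if sxy == 0:
        raise ValueError("x and y are uncorrelated; the slope is undefined")
    u = lam * syy - sxx
    slope = (u + math.sqrt(u * u + 4.0 * lam * sxy * sxy)) / (2.0 * lam * sxy)
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical paired results (illustrative values only).
x = [1.1, 2.0, 2.9, 4.2, 5.0, 6.1]
y = [1.3, 2.1, 3.2, 4.1, 5.3, 6.0]

slope, intercept = deming_regression(x, y, lam=1.0)  # lam = 1 assumes equal error variances
print(f"Slope = {slope:.3f}, Intercept = {intercept:.3f}")
```

When duplicate measurements are available for both methods, λ could be estimated as `error_variance_from_duplicates(x1, x2) / error_variance_from_duplicates(y1, y2)` instead of being assumed equal to 1.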

Passing-Bablok Regression

Passing-Bablok regression is a non-parametric method that does not assume normal distribution of errors and is robust against outliers [30] [27].

Calculation Protocol:

- Calculate all possible pairwise slopes: Sij = (Yj - Yi) / (Xj - Xi) for i < j

- Exclude undefined slopes (0/0, from identical data pairs) and slopes exactly equal to -1

- Determine the correction factor (K): count the number of slopes less than -1

- Calculate the slope as the median of the sorted slopes, shifted upward by K positions: B = shifted median(Sij)

- Calculate the intercept: A = median(Yi - B × Xi)

- Compute confidence intervals using bootstrap or approximate methods [27]

Interpretation Guidelines:

- Use the Cusum test for linearity to verify the linear relationship assumption

- Slope ≠1 indicates proportional differences between methods

- Intercept ≠0 indicates constant differences between methods

- Residual analysis helps identify outliers and nonlinearity

- The method requires highly correlated variables with a linear relationship [27]
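The point estimates can be sketched as follows. This is a simplified illustration of the shifted-median procedure: identical pairs (0/0) and slopes equal to -1 are skipped, vertical pairs are treated as infinite slopes, and the offset K is the count of slopes below -1. Confidence intervals and the Cusum linearity test are not included, and the example data are invented.

```python
import math
import statistics
from itertools import combinations

def passing_bablok(x, y):
    """Return (slope, intercept) using the shifted median of pairwise slopes."""
    slopes = []
    for (xi, yi), (xj, yj) in combinations(zip(x, y), 2):
        dx, dy = xj - xi, yj - yi
        if dx == 0 and dy == 0:
            continue                      # 0/0: identical points, slope undefined
        if dx == 0:
            slopes.append(math.copysign(math.inf, dy))  # vertical pair
            continue
        s = dy / dx
        if s == -1:
            continue                      # slopes of exactly -1 are excluded
        slopes.append(s)
    slopes.sort()
    n = len(slopes)
    if n == 0:
        raise ValueError("No usable pairwise slopes")
    k = sum(1 for s in slopes if s < -1)  # offset for the shifted median
    # 0-based positions of the shifted median (one value if n is odd, two if even)
    lo = (n + 1) // 2 + k - 1 if n % 2 else n // 2 + k - 1
    hi = lo if n % 2 else lo + 1
    if hi >= n:
        raise ValueError("Too many slopes below -1; methods must be positively correlated")
    slope = (slopes[lo] + slopes[hi]) / 2
    intercept = statistics.median([yi - slope * xi for xi, yi in zip(x, y)])
    return slope, intercept

# Hypothetical paired results (illustrative values only).
x = [1.0, 2.1, 3.0, 4.2, 5.1, 6.0, 7.3]
y = [1.2, 2.0, 3.3, 4.0, 5.4, 6.1, 7.0]

slope, intercept = passing_bablok(x, y)
print(f"Slope = {slope:.3f}, Intercept = {intercept:.3f}")
```

The pairwise approach needs n(n - 1)/2 slopes, which is inexpensive for the 40 to 100 specimens typical of these studies.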

Method Selection Framework

Selecting the appropriate statistical method depends on the error structure of the measurement methods and the data characteristics. The following decision framework guides method selection.

Figure 2: Method Selection Decision Framework - This flowchart provides a systematic approach for selecting the most appropriate statistical method based on measurement error characteristics and data properties.

Integration of Multiple Approaches

Comprehensive method comparison should integrate multiple statistical approaches rather than relying on a single method:

- Begin with graphical analysis (scatter plots and difference plots) to identify outliers, nonlinear relationships, and heteroscedasticity [7] [6]

- Use Bland-Altman analysis to assess overall agreement and identify systematic biases [28] [25]

- Apply Deming or Passing-Bablok regression to quantify constant and proportional errors, depending on error structure and distributional assumptions [26] [27]

- Supplement with residual analysis to verify model assumptions and identify patterns not captured by primary analyses [27]

Research Reagent Solutions and Materials

Successful method comparison studies require appropriate reagents, materials, and analytical tools. The following table details essential components for method validation studies.

Table 2: Essential Research Reagents and Materials for Method Comparison Studies

| Category | Specific Items | Function in Method Comparison | Quality Requirements |
|---|---|---|---|
| Reference Materials | Certified reference materials (CRMs); Calibrators with traceable values | Establish measurement traceability; Verify accuracy of methods | Value assignment with stated uncertainty; Traceability to reference methods |
| Quality Controls | Commercial quality control materials; Pooled patient samples | Monitor assay performance stability throughout study | Cover clinically relevant concentrations; Commutable with patient samples |
| Clinical Samples | Fresh patient specimens; Archived samples with known stability | Provide authentic matrix for method comparison | Cover entire measuring interval; Represent intended patient population |
| Reagents | Method-specific reagents; Diluents; Buffers | Perform measurements according to manufacturer protocols | Lot-to-lot consistency documented; Stored according to specifications |
| Analytical Software | Statistical packages (R, NCSS, MedCalc, StatsDirect); Spreadsheet applications | Perform statistical analyses and create visualizations | Validated algorithms; Appropriate for planned statistical methods |

Reagent Stability and Compatibility

- Reagent Stability: Determine stability under storage and assay conditions following manufacturer specifications. Test stability after multiple freeze-thaw cycles if applicable [31]

- DMSO Compatibility: For assays involving compound testing, validate compatibility with DMSO concentrations spanning expected final concentrations (typically 0-10%) [31]

- Sample Stability: Establish stability under storage conditions and between measurements (typically within 2 hours for most analytes) [6]