Analytical Chemistry: The Enabling Science Powering Modern Drug Development and Biomedical Research

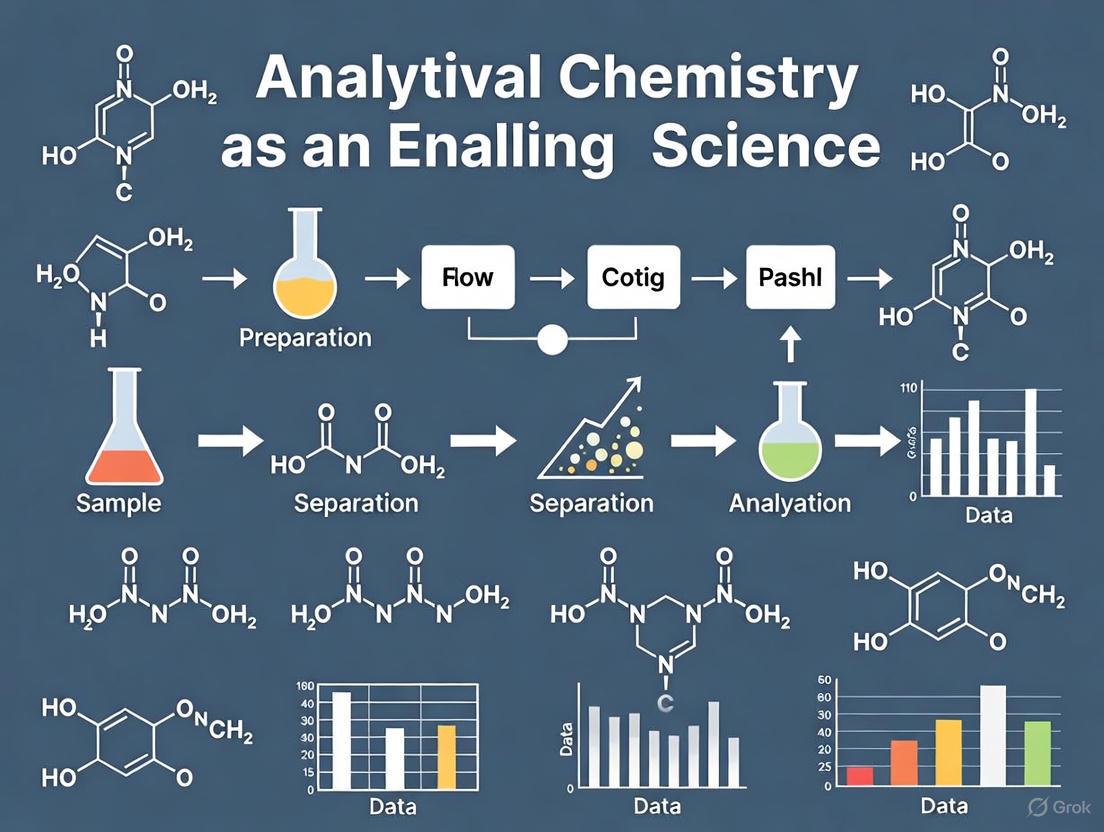



Abstract

This article explores the indispensable role of analytical chemistry as a foundational enabler across the biomedical and pharmaceutical sciences. It provides researchers and drug development professionals with a comprehensive overview, from core principles and advanced methodologies to practical troubleshooting and rigorous validation frameworks. By synthesizing foundational knowledge with current trends like automation, AI, and sustainability, the content offers actionable insights for developing robust, efficient, and compliant analytical methods that accelerate translational research and ensure product quality and safety.

The Silent Workhorse: Core Principles and Future Trends Defining Analytical Chemistry

Analytical chemistry is the enabling science of measurement and characterization, providing the tools and methodologies to determine the composition, structure, and quantity of matter. The discipline is defined by its systematic approach to obtaining chemical information, and it plays a critical role in advancing research across pharmaceuticals, environmental science, materials science, and forensics. It is not merely a set of techniques but a science in its own right, governed by metrological principles and a rigorous framework for ensuring that data are reliable, accurate, and fit for purpose. Within the broader context of scientific research, analytical chemistry transforms uncharacterized materials into quantified, well-understood entities that can form the basis of scientific discovery and product development [1] [2]. For drug development professionals, this means being able to identify active ingredients, quantify potency, detect impurities, and understand degradation pathways, which ensures both the safety and efficacy of pharmaceutical products.

The core of this discipline lies in the science of measurement—the quantification of attributes of an object or event, which allows for meaningful comparison with other objects or events [2]. This process of comparison against a standard reference is foundational, and its proper execution is what allows analytical chemistry to serve as a cornerstone of trade, technology, and quantitative research.

Philosophical and Historical Foundations of Measurement

The science of measurement, or metrology, is built upon a rich philosophical history that seeks to understand the nature of quantities and the process of quantification. Modern philosophical discussions about measurement span several complementary perspectives, including mathematical theories that map empirical relations to numbers, realist views that see measurement as the estimation of mind-independent properties, and model-based accounts that view it as the assignment of values to parameters in a theoretical model [3].

A pivotal historical development was the challenge to the strict Aristotelian dichotomy between quantities (which admit equality but not degrees) and qualities (which admit degrees but not equality). During the 13th and 14th centuries, scholars like Duns Scotus and Nicole Oresme developed theories of qualitative intensity, using geometrical figures to represent changes in the intensity of qualities like velocity. This work established that a subset of qualities was amenable to quantitative treatment, paving the way for the formulation of quantitative scientific laws during the 16th and 17th centuries [3]. This historical context underscores that analytical chemistry is not limited to mere counting but involves the sophisticated quantification of properties that were once considered purely qualitative.

The Evolution of Measurement Systems

The measurement of any property can be characterized by four attributes: type, magnitude, unit, and uncertainty. The type, or level of measurement (e.g., ratio, interval, ordinal), is a taxonomy for the methodological character of a comparison. The magnitude is the numerical value itself, while the unit provides the mathematical weighting factor, derived as a ratio to a standardized property. Finally, the uncertainty represents the random and systematic errors of the measurement procedure and indicates the level of confidence in the measurement [2].
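To make these attributes concrete, the minimal sketch below records a result as a magnitude, a unit, and a standard uncertainty. It assumes that uncertainty contributions from random and systematic effects are independent and are combined in quadrature (root sum of squares), as in the GUM approach. The class name `MeasurementResult` and the numbers are illustrative assumptions and do not come from the source.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasurementResult:
    """Hypothetical container for one measurement: magnitude, unit, and uncertainty."""
    magnitude: float
    unit: str
    standard_uncertainty: float
    level: str = "ratio"  # level of measurement, e.g. ratio, interval, ordinal

    def __str__(self) -> str:
        # Report the uncertainty to two significant figures.
        return f"{self.magnitude:g} ± {self.standard_uncertainty:.2g} {self.unit}"


def combined_uncertainty(*components: float) -> float:
    """Root-sum-of-squares combination of independent standard uncertainties."""
    return math.sqrt(sum(u * u for u in components))


# Illustrative contributions: random (repeatability) and systematic (calibration) effects.
u_random = 0.3
u_systematic = 0.4
result = MeasurementResult(12.5, "mg/L", combined_uncertainty(u_random, u_systematic))
print(result)
```

With these inputs, the two components combine to a standard uncertainty of 0.5 mg/L.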

Today, measurements most commonly use the International System of Units (SI), which defines seven base units in terms of invariable natural phenomena and physical constants, a shift from historical reliance on standard artifacts subject to deterioration. This system ensures global consistency and reliability in measurements, a prerequisite for international research and commerce [2].

Core Principles of Analytical Measurement

The practice of analytical chemistry is governed by a set of core principles that ensure the quality and reliability of the generated data. These principles form the basis of method validation, a required process for demonstrating that an analytical procedure is suitable for its intended use [4].

Table 1: Key Validation Parameters in Analytical Chemistry

| Parameter | Definition | Typical Evaluation Method |
|---|---|---|
| Accuracy | Closeness of the analytical result to the true value. | Comparison with a reference method or certified reference material. |
| Precision | Degree of agreement among individual results. | Analysis of replicate samples; calculation of Relative Standard Deviation (RSD). |
| Specificity | Ability to distinguish the analyte from other components. | Analysis of samples with and without the analyte to check for interference. |
| Linearity | Ability to produce results proportional to analyte concentration. | Analysis of a series of standards; calculation of correlation coefficient (r). |
| Range | The interval between upper and lower concentrations where linearity, accuracy, and precision are acceptable. | Established from linearity studies. |
| Limit of Detection (LOD) | Lowest concentration of the analyte that can be detected. | Based on standard deviation of response; often 3 × standard deviation. |
| Limit of Quantitation (LOQ) | Lowest concentration that can be quantified with acceptable accuracy and precision. | Based on standard deviation of response; often 10 × standard deviation. |

These validation parameters are not isolated concepts but are interconnected, forming a coherent framework for assessing method performance. The process of validation can be prospective (before routine use), concurrent (during routine use, often during transfer between labs), or retrospective (after a method has been in use) [4]. The following workflow outlines the typical steps in a method validation protocol, from planning to conclusion.

The Modern Analytical Toolkit: Techniques and Characterization

The discipline of characterization and analysis employs a wide array of techniques to identify, isolate, or quantify chemicals or materials, or to characterize their physical properties [5]. These techniques form the scientist's essential toolkit for tackling complex analytical problems.

Table 2: Essential Research Reagents and Materials in Analytical Chemistry

| Item / Technique | Primary Function | Key Application Example |
|---|---|---|
| Mass Spectrometry (MS) | Identifies and quantifies compounds by measuring their mass-to-charge ratio. | Biomarker discovery, metabolomics, drug metabolite identification [1] [6]. |
| High-Performance Liquid Chromatography (HPLC) | Separates components in a mixture for purification or quantification. | Pharmaceutical quality control, separating complex biological samples [1]. |
| Tandem Mass Spectrometry (MS/MS) | Provides structural information by fragmenting ions and analyzing the product ions. | Detailed structural elucidation of unknown compounds and proteomics [1]. |
| Gas Chromatography (GC) | Separates volatile compounds without decomposition. | Environmental monitoring of pollutants, forensic analysis [1]. |
| Spectroscopy (e.g., IR, NMR) | Probes molecular structure by measuring interaction with electromagnetic radiation. | Determining functional groups and molecular structure; NMR is a key topic in modern research [5] [7]. |
| Ionic Liquids | Serves as green solvents with reduced environmental impact. | Used in extractions and chromatography to reduce solvent consumption [1]. |
| Certified Reference Materials | Provides a standardized reference with known properties to calibrate equipment and validate methods. | Essential for establishing accuracy and traceability in measurements [4]. |

The modern laboratory often combines these techniques into hyphenated systems, such as GC-MS or LC-MS/MS, which couple a powerful separation technique with a sensitive detection method. This combination provides a robust platform for analyzing complex mixtures, such as those encountered in drug development and bioanalysis [1] [7]. Furthermore, the field is increasingly focused on green analytical chemistry, which promotes the use of environmentally friendly procedures, miniaturized processes, and energy-efficient instruments to reduce the environmental impact of analytical activities [1].

Data Presentation and Communication of Results

The final, critical step in the analytical process is the effective presentation of data. Well-presented data communicates findings clearly, attracts and sustains reader interest, and efficiently presents complex information. Data can be presented in textual, tabular, or graphical forms, with the choice depending on the specific information to be emphasized [8].

- Textual Presentation: Ideal for explaining findings, outlining trends, and providing context. It is most appropriate when conveying one or two numbers, as it integrates them into the narrative without occupying excessive space [8].

- Tabular Presentation: Best suited for presenting individual values and for representing both quantitative and qualitative information when all data points require equal attention. Tables allow for precise representation of numbers and can accommodate information with different units [9] [8].

- Graphical Presentation: A highly effective visual tool for displaying data at a glance, facilitating comparison, and revealing trends and relationships. Common graphical tools for quantitative data include [10] [9] [8]:

  - Histograms: Display the frequency distribution of quantitative data using contiguous bars.

  - Frequency Polygons: Show trends by joining the midpoints of histogram bars with straight lines.

  - Line Diagrams: Commonly used to display trends in an event over time.

  - Scatter Diagrams: Visualize the correlation between two quantitative variables.

For comparative analysis, a frequency polygon is particularly useful, as it allows for multiple distributions to be overlaid on the same diagram for direct visual comparison [10]. The choice of presentation method is crucial, as inappropriately presented data fails to convey information effectively to readers, reviewers, and fellow scientists [8].
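A histogram and its frequency polygon can be related without any plotting library. The sketch below bins replicate results into contiguous classes, then lists the class midpoints and counts that a frequency polygon would join with straight lines. The bin width, starting point, and data are arbitrary choices made for illustration.

```python
def histogram(values, start, width, n_bins):
    """Count values falling in contiguous bins [start + i*width, start + (i+1)*width)."""
    counts = [0] * n_bins
    for v in values:
        i = int((v - start) // width)
        if 0 <= i < n_bins:
            counts[i] += 1
    return counts


def frequency_polygon(counts, start, width):
    """Vertices (class midpoint, count) that a frequency polygon joins with straight lines."""
    return [(start + (i + 0.5) * width, c) for i, c in enumerate(counts)]


# Illustrative replicate assay results (% label claim).
results = [98.7, 99.1, 99.4, 99.8, 100.0, 100.2, 100.3, 100.9, 101.4, 99.6]
counts = histogram(results, start=98.0, width=1.0, n_bins=4)
for midpoint, count in frequency_polygon(counts, start=98.0, width=1.0):
    print(f"{midpoint:6.1f} {'#' * count}")
```

To compare two distributions as the text suggests, you would compute the polygon vertices for each data set using the same bins and overlay them.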

The field of analytical chemistry is dynamic, continuously evolving to meet global demands and integrate technological innovations. Several key trends are shaping its future beyond 2025 [1]:

- Artificial Intelligence and Automation: AI and machine learning are transforming the field by enhancing data analysis, automating complex processes, and optimizing method development, enabling analysts to address increasingly complex analytical challenges.

- Miniaturization and Portability: The need for on-site testing in environmental monitoring, food safety, and forensic science is driving the demand for portable and miniaturized devices, such as portable gas chromatographs for real-time air quality monitoring.

- Advanced Instrumentation: Developments in areas like multidimensional chromatography, which offers greater separation power, and the integration of mass spectrometry into multi-omics approaches, are providing deeper insights into complex biological systems.

- Quantum Technologies: Although still in early stages, quantum sensors show great potential for unprecedented sensitivity, enabling extremely precise measurements in environmental monitoring and biomedical applications.

These trends highlight the trajectory of analytical chemistry as a discipline moving towards greater speed, sensitivity, integration, and intelligence. The market reflects this growth, with the global analytical instrumentation market, estimated at $55.29 billion in 2025, projected to grow at a compound annual growth rate (CAGR) of 6.86% to reach $77.04 billion by 2030 [1].
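The quoted growth figures can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes a five-year horizon (2025 to 2030) and recovers the stated rate from the two market sizes quoted above.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values separated by `years`."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years`."""
    return start_value * (1.0 + rate) ** years


# Analytical instrumentation market figures quoted in the text (USD billion).
rate = cagr(55.29, 77.04, years=5)
print(f"Implied CAGR: {rate:.2%}")  # about 6.86%
print(f"2030 projection at 6.86%: {project(55.29, 0.0686, 5):.2f}")
```

Running the calculation in both directions confirms that the 2025 value, the 2030 value, and the 6.86% rate agree with one another to within rounding.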

In conclusion, analytical chemistry, as the science of measurement and characterization, is a fundamental enabling discipline. It provides the critical data and insights that drive research and development across the scientific spectrum. From its deep philosophical foundations to its rigorous methodological principles and its embrace of transformative technologies, the discipline remains central to solving complex problems and advancing human knowledge, particularly in mission-critical fields like drug development. Its role in ensuring the quality, safety, and efficacy of pharmaceutical products is indispensable, solidifying its position as a cornerstone of modern science.

Analytical chemistry serves as a fundamental enabling science across numerous research and industrial fields, providing the critical tools and methodologies for precise measurement, characterization, and validation. In pharmaceutical development, environmental monitoring, and materials science, robust analytical processes ensure the reliability, safety, and efficacy of products and conclusions [1]. This technical guide delineates the comprehensive analytical workflow from initial problem definition through final reporting, providing researchers and drug development professionals with a structured framework for implementing rigorous analytical practices. The systematic approach outlined herein underscores the indispensable role of analytical chemistry in generating valid, reproducible scientific data that drives innovation and decision-making.

The Analytical Workflow: A Step-by-Step Guide

The analytical process represents a systematic sequence of stages that transforms a research question into reliable, interpretable data. Each stage builds upon the previous one, creating a robust framework for scientific inquiry.

Step 1: Problem Definition and Objective Setting

The analytical process begins with precise problem definition, establishing clear, measurable objectives that guide all subsequent activities. This foundational stage requires researchers to:

- Define the analyte and matrix: Precisely identify the target substance(s) for measurement and the sample medium in which they reside [11].

- Establish required parameters: Determine critical measurement requirements including specificity, sensitivity (detection and quantification limits), accuracy, precision, and the applicable concentration range [11].

- Specify intended use: Define the analytical method's purpose, as this determines validation requirements—whether for research use, quality control, or regulatory submission [11].

- Identify constraints: Recognize practical limitations including time, cost, equipment availability, and regulatory considerations that will influence method selection.

Well-defined objectives at this initial stage prevent costly misdirection and establish clear criteria for method evaluation throughout the analytical process.
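Step 1 can be made concrete by recording the objectives as a structured specification that later steps can check against. The sketch below is a hypothetical example. The field names, the example analyte, and the consistency rule are all assumptions chosen for illustration, not a prescribed format. The rule states that the required LOQ must not exceed the lower end of the working range.

```python
from dataclasses import dataclass, field


@dataclass
class AnalyticalRequirements:
    """Hypothetical record of Step 1 objectives for an analytical method."""
    analyte: str
    matrix: str
    intended_use: str      # e.g. "research", "quality control", "regulatory submission"
    range_low: float       # lowest concentration to be reported
    range_high: float      # highest concentration to be reported
    required_loq: float    # maximum acceptable limit of quantitation
    unit: str = "ng/mL"
    constraints: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return internal inconsistencies to resolve before method selection."""
        issues = []
        if self.range_low >= self.range_high:
            issues.append("range_low must be below range_high")
        if self.required_loq > self.range_low:
            issues.append("required LOQ exceeds the lower end of the working range")
        return issues


spec = AnalyticalRequirements(
    analyte="compound X", matrix="human plasma", intended_use="research",
    range_low=1.0, range_high=500.0, required_loq=2.0,
    constraints=["LC-MS/MS only", "results within 48 h"],
)
print(spec.problems())
```

In this example, the check reports that an LOQ of 2.0 ng/mL cannot support reporting results down to 1.0 ng/mL.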

Step 2: Method Selection and Literature Review

With objectives established, researchers must identify the most appropriate analytical technique(s) through comprehensive literature review and evaluation of existing methodologies:

- Conduct literature review: Identify established methods for similar analytes or matrices, leveraging scientific databases and internal organizational knowledge [11].

- Evaluate technique suitability: Assess potential methods (e.g., HPLC, LC-MS, GC-MS, spectroscopy, electrophoresis) against defined objectives, considering factors like detection limits, selectivity, throughput, and compatibility with the sample matrix [12].

- Consider resource requirements: Evaluate instrumentation needs, reagent availability, operator expertise, and cost implications for potential methods.

- Assess sustainability: Incorporate green chemistry principles by considering methods that reduce solvent consumption, energy use, and waste generation, such as supercritical fluid chromatography or microextraction techniques [1].

This systematic evaluation ensures selection of the most fit-for-purpose analytical approach while potentially avoiding unnecessary method development from scratch.
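The suitability evaluation in Step 2 amounts to filtering candidate techniques against the objectives set in Step 1. The sketch below assumes each candidate has been summarized by three figures: an expected LOQ, a throughput estimate, and a relative cost. The candidate names and numbers are hypothetical placeholders, not performance data for real instruments.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical summary of a candidate technique from the literature review."""
    name: str
    expected_loq: float    # same unit as the requirement
    samples_per_day: int
    relative_cost: float   # arbitrary scale, lower is cheaper


def screen(candidates, required_loq, min_throughput):
    """Keep candidates that meet the sensitivity and throughput targets, cheapest first."""
    suitable = [
        c for c in candidates
        if c.expected_loq <= required_loq and c.samples_per_day >= min_throughput
    ]
    return sorted(suitable, key=lambda c: c.relative_cost)


candidates = [
    Candidate("Method A", expected_loq=5.0, samples_per_day=200, relative_cost=1.0),
    Candidate("Method B", expected_loq=0.5, samples_per_day=150, relative_cost=3.0),
    Candidate("Method C", expected_loq=0.2, samples_per_day=40, relative_cost=4.0),
    Candidate("Method D", expected_loq=0.8, samples_per_day=120, relative_cost=2.0),
]
for c in screen(candidates, required_loq=1.0, min_throughput=100):
    print(c.name)
```

Some factors listed above, such as selectivity, matrix compatibility, operator expertise, and sustainability, are harder to reduce to a number. They would still need expert judgment alongside a filter like this one.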

Step 3: Method Development and Optimization

Method development transforms a selected analytical approach into a robust, reliable procedure tailored to specific research needs:

- Define method plan: Develop a comprehensive protocol outlining methodology, instrumentation, experimental design, reference standards, and reagents [11].

- Optimize critical parameters: Systematically adjust and refine key variables that influence analytical performance [11]:

  - Separation conditions: Mobile phase composition, column chemistry, temperature, and flow rate for chromatographic methods

  - Detection settings: Wavelength selection, ionization parameters, and detector voltage

  - Sample preparation: Extraction efficiency, cleanup procedures, and derivatization

- Establish system suitability: Define criteria that verify the analytical system's proper function before analysis, including precision, resolution, and peak symmetry requirements [11].

- Document optimization: Thoroughly record all parameter modifications and their effects on method performance to establish a knowledge base for future troubleshooting.

This optimization process typically follows an iterative approach, refining parameters based on experimental results until the method demonstrates adequate performance characteristics.
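System suitability criteria such as those in Step 3 are computed from a test injection. The sketch below uses two widely used chromatographic expressions:

- Resolution from baseline peak widths: Rs = 2(tR2 - tR1)/(w1 + w2).
- USP tailing factor: T = W0.05/(2f), where W0.05 is the peak width at 5% of peak height and f is the distance from the leading edge to the peak maximum at that height.

The limits used here are assumed example values (Rs ≥ 2.0, T ≤ 2.0, %RSD ≤ 2.0 for replicate peak areas). The actual criteria belong in the method plan.

```python
import statistics


def resolution(t1: float, t2: float, w1: float, w2: float) -> float:
    """Resolution between two peaks from retention times and baseline widths (same time unit)."""
    return 2.0 * (t2 - t1) / (w1 + w2)


def tailing_factor(w_005: float, f: float) -> float:
    """USP tailing factor from the width at 5% height and the front distance f."""
    return w_005 / (2.0 * f)


def percent_rsd(values) -> float:
    """Relative standard deviation (sample SD / mean) in percent."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)


def system_suitable(rs, tailing, rsd, min_rs=2.0, max_tailing=2.0, max_rsd=2.0) -> bool:
    """Assumed example limits; replace with the criteria defined in the method plan."""
    return rs >= min_rs and tailing <= max_tailing and rsd <= max_rsd


# Illustrative test-injection values (retention data in minutes, areas in counts).
rs = resolution(t1=4.20, t2=5.00, w1=0.30, w2=0.34)
tf = tailing_factor(w_005=0.20, f=0.08)
rsd = percent_rsd([1502.1, 1498.7, 1505.3, 1500.9, 1499.4, 1503.0])
print(f"Rs = {rs:.2f}, T = {tf:.2f}, %RSD = {rsd:.2f}, pass = {system_suitable(rs, tf, rsd)}")
```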

Step 4: Method Validation

Method validation provides documented evidence that the analytical procedure is suitable for its intended purpose, establishing reliability and reproducibility:

- Accuracy: Demonstrate closeness of agreement between the accepted reference value and the value found, typically assessed through recovery studies of spiked samples [11].

- Precision: Establish degree of agreement among individual test results when the procedure is applied repeatedly to multiple samplings, including repeatability (intra-assay) and intermediate precision (inter-assay) [11].

- Specificity: Confirm ability to assess the analyte unequivocally in the presence of other components, including impurities, degradants, or matrix components [11].

- Linearity and Range: Demonstrate the analytical procedure's ability to elicit results directly proportional to analyte concentration within a specified range [11].

- Limit of Detection (LOD) and Quantification (LOQ): Establish the lowest amount of analyte that can be detected (LOD) and the lowest amount that can be quantified with acceptable accuracy and precision (LOQ) [11].

- Robustness: Evaluate method capacity to remain unaffected by small, deliberate variations in method parameters, indicating reliability during normal usage [11].

- Ruggedness: Assess reproducibility of results when the method is performed under different conditions, such as different laboratories, analysts, or instruments [11].

Table 1: Key Analytical Method Validation Parameters and Acceptance Criteria

| Validation Parameter | Definition | Typical Acceptance Criteria |
|---|---|---|
| Accuracy | Agreement between test result and true value | Recovery: 98-102% for APIs |
| Precision | Agreement among repeated measurements | RSD ≤ 2% for assay methods |
| Specificity | Ability to measure analyte accurately in presence of interferences | No interference from blank |
| Linearity | Proportionality of response to analyte concentration | R² ≥ 0.998 |
| Range | Interval between upper and lower concentration levels | Within linearity limits |
| LOD | Lowest detectable analyte concentration | Signal-to-noise ≥ 3:1 |
| LOQ | Lowest quantifiable analyte concentration | Signal-to-noise ≥ 10:1 |
| Robustness | Resistance to deliberate parameter variations | System suitability criteria met |

Validation should follow established regulatory guidelines (ICH, FDA, EMA) and be phase-appropriate—more extensive for methods supporting regulatory submissions versus research use [11].
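The acceptance criteria in the table above can be encoded as a simple checklist and applied to summary results. The sketch below uses the table's typical values for an API assay: recovery 98-102%, RSD ≤ 2%, R² ≥ 0.998, and S/N ≥ 10:1 at the LOQ. The dictionary keys and function name are hypothetical. Real limits should come from the validation protocol.

```python
# Typical acceptance criteria from the table above (API assay); adjust per protocol.
CRITERIA = {
    "recovery_pct": lambda v: 98.0 <= v <= 102.0,
    "rsd_pct": lambda v: v <= 2.0,
    "r_squared": lambda v: v >= 0.998,
    "loq_signal_to_noise": lambda v: v >= 10.0,
}


def evaluate(results: dict) -> dict:
    """Return True/False per parameter; parameters with no result are reported as None."""
    return {
        name: (check(results[name]) if name in results else None)
        for name, check in CRITERIA.items()
    }


summary = {"recovery_pct": 99.4, "rsd_pct": 2.6, "r_squared": 0.9993}
for name, outcome in evaluate(summary).items():
    status = {True: "pass", False: "FAIL", None: "not evaluated"}[outcome]
    print(f"{name:22s} {status}")
```

Reporting missing parameters as "not evaluated" rather than as failures makes gaps in the validation data visible.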

Step 5: Sample Analysis and Data Collection

The validated method enters routine use for sample analysis, requiring strict adherence to established protocols:

- Sample preparation: Execute consistent, documented procedures for sample handling, extraction, purification, and derivatization to minimize variability [13].

- Instrumental analysis: Perform analyses using qualified instruments following standardized operating procedures, incorporating appropriate system suitability tests [11].

- Quality controls: Include method blanks, calibration standards, replicate samples, and reference materials to monitor analytical performance throughout the batch [13].

- Documentation: Maintain comprehensive records of all analytical activities, including sample tracking, instrument logs, raw data files, and any deviations from established procedures.

Consistent execution during this phase ensures generation of reliable, defensible data that accurately represents sample composition.
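To monitor performance throughout the batch, the quality controls listed above must be placed within the injection sequence. The sketch below builds one such sequence. Its layout is an assumed example rather than a rule from the source: a method blank, then a calibration set, then a QC sample after every `qc_every` unknowns and at the end of the batch. The function and parameter names are hypothetical.

```python
def build_sequence(samples, n_calibrators=5, qc_every=10):
    """Hypothetical batch layout: blank, calibrators, then unknowns bracketed by QC samples."""
    sequence = ["BLANK"]
    sequence += [f"CAL{i}" for i in range(1, n_calibrators + 1)]
    sequence.append("QC")  # QC before the first unknown
    for i, sample in enumerate(samples, start=1):
        sequence.append(sample)
        if i % qc_every == 0:
            sequence.append("QC")  # periodic QC within the run
    if sequence[-1] != "QC":
        sequence.append("QC")  # always close the batch with a QC sample
    return sequence


batch = build_sequence([f"S{i:02d}" for i in range(1, 8)], n_calibrators=3, qc_every=3)
print(batch)
```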

Step 6: Data Processing and Quality Assurance

Raw analytical data requires systematic processing and rigorous quality assessment to transform instrument output into meaningful results:

- Data cleaning: Identify and address anomalies, including checking for duplications, removing outliers based on statistical criteria, and addressing missing data through appropriate imputation methods or threshold-based exclusion [13].

- Statistical analysis: Apply appropriate statistical methods based on data distribution and measurement type, beginning with descriptive statistics and progressing to inferential analyses as needed [13].

- Quality assurance verification: Confirm data meets pre-established quality criteria, including evaluation of calibration curve performance, control sample recovery, and precision metrics [13].

- Data transformation: Convert raw instrument responses to meaningful concentrations or values using established mathematical models, calibration curves, or response factors.

This systematic approach to data evaluation ensures identification of potential issues before final interpretation, maintaining data integrity throughout the analytical process.
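The sketch below illustrates two of the operations above: statistical outlier screening, and conversion of responses to concentrations. The outlier criterion is a swapped-in choice, since the source does not name one. It is the modified z-score of Iglewicz and Hoaglin, which uses the median absolute deviation (MAD) and flags values with |M| > 3.5. The calibration slope and intercept are assumed to come from a previously validated linear curve. All numbers are illustrative.

```python
import statistics


def modified_z_scores(values):
    """Modified z-scores: 0.6745 * (x - median) / MAD (median absolute deviation)."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0 for _ in values]  # no spread: nothing can be flagged this way
    return [0.6745 * (v - med) / mad for v in values]


def screen_outliers(values, threshold=3.5):
    """Split values into (kept, flagged) using the modified z-score threshold."""
    scores = modified_z_scores(values)
    kept = [v for v, z in zip(values, scores) if abs(z) <= threshold]
    flagged = [v for v, z in zip(values, scores) if abs(z) > threshold]
    return kept, flagged


def to_concentration(response, slope, intercept):
    """Invert a linear calibration y = slope * x + intercept."""
    return (response - intercept) / slope


responses = [152.1, 150.8, 151.5, 149.9, 188.0, 151.2]
kept, flagged = screen_outliers(responses)
conc = to_concentration(statistics.mean(kept), slope=10.0, intercept=1.0)
print(f"flagged: {flagged}, mean concentration: {conc:.2f}")
```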

Step 7: Data Interpretation and Reporting

The final analytical stage transforms processed data into actionable information through contextual interpretation and clear communication:

- Contextualize findings: Interpret results in relation to original research questions, hypotheses, and existing scientific literature [14].

- Differentiate findings: Clearly distinguish statistically significant results from non-significant findings, and account for multiplicity, since performing many comparisons increases the likelihood of chance associations [13].

- Integrate quantitative and qualitative data: Combine numerical results with contextual observations to provide comprehensive understanding, using qualitative data to explain quantitative trends [14].

- Create comprehensive reports: Structure reports to include background, methods, results, discussion, and conclusions, tailoring content and detail to the intended audience [15].

- Visualize data effectively: Employ appropriate tables, graphs, and figures to enhance understanding while maintaining data integrity [16].
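Multiplicity, mentioned above, can be handled in several ways, and the source does not specify one. A common choice is the Holm-Bonferroni step-down procedure, which controls the family-wise error rate when several hypotheses are tested together. A minimal sketch with illustrative p-values:

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Reject/retain decision for each p-value (in original order) using Holm's method."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    reject = [False] * m
    for rank, i in enumerate(order):
        # Compare the k-th smallest p-value with alpha / (m - k + 1).
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return reject


# Illustrative p-values from comparing four analytes between two groups.
p = [0.010, 0.040, 0.003, 0.030]
print(holm_bonferroni(p))
```

Without adjustment, all four comparisons fall below 0.05. After the Holm adjustment, only the first and third remain significant.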

Table 2: Market Context for Analytical Chemistry (2025-2030 Projections)

| Market Segment | 2025 Market Size (USD Billion) | Projected 2030 Market Size (USD Billion) | CAGR | Primary Growth Drivers |
|---|---|---|---|---|
| Analytical Instrumentation | $55.29 | $77.04 | 6.86% | Rising pharmaceutical R&D, regulatory requirements, AI integration |
| Pharmaceutical Analytical Testing | $9.74 | $14.58 | 8.41% | Increasing clinical trials, CRO concentration in North America |
| Gas Sensors | - | $5.34 | 8.9% | Stringent safety regulations, portable detector demand |

Effective reporting not only presents data but tells a compelling scientific story that facilitates informed decision-making by stakeholders.

Emerging Trends and Future Directions

The analytical chemistry landscape continues to evolve, driven by technological innovations and changing global demands:

- Artificial Intelligence and Automation: AI and machine learning are transforming analytical chemistry by enhancing data analysis, automating complex processes, and identifying patterns in large datasets that human analysts might miss [1].

- Miniaturization and Portability: Increasing demand for on-site testing in environmental monitoring, food safety, and forensic science is driving development of portable, miniaturized devices such as portable gas chromatographs for real-time air quality monitoring [1].

- Advanced Characterization Techniques: Emerging methods including molecular dynamics simulations enable researchers to model cellular-scale systems, providing atomistic insights into complex biophysical processes [17].

- Sustainable Analytical Practices: Green analytical chemistry continues to gain prominence, focusing on environmentally friendly procedures, reduced solvent consumption, and energy-efficient instruments [1].

These trends highlight the dynamic nature of analytical chemistry and its continuing evolution to address complex analytical challenges across scientific disciplines.

The Scientist's Toolkit: Essential Research Reagent Solutions

Modern analytical laboratories rely on specialized reagents, materials, and instrumentation to perform sophisticated analyses across diverse applications.

Table 3: Essential Analytical Instrumentation and Reagents

| Tool/Category | Specific Examples | Primary Functions and Applications |
|---|---|---|
| Separation Techniques | HPLC/UHPLC, GC, CE, IC | Separate complex mixtures into individual components for identification and quantification |
| Detection Systems | MS, MS/MS, UV-Vis, NMR, FLD | Detect and characterize separated analytes with high sensitivity and specificity |
| Sample Preparation | SPE cartridges, filtration units, derivatization reagents | Extract, clean up, and concentrate analytes while removing matrix interferences |
| Binding Assays | ELISA, Biolayer Interferometry, SPR | Measure biomolecular interactions, binding affinity, and kinetics |
| Characterization Reagents | Proteolytic enzymes, glycosidases, reduction/alkylation kits | Determine post-translational modifications, protein structure, and glycan profiles |
| Quality Control | Reference standards, system suitability mixtures, QC samples | Verify method performance, instrument calibration, and data quality |

Method Validation Framework

The method validation process follows a structured pathway to establish method reliability, with iterative refinement as needed.

The analytical process represents a systematic, iterative framework that transforms research questions into reliable, actionable data. From initial problem definition through final reporting, each stage builds upon the previous to ensure scientific rigor and methodological soundness. As an enabling science, analytical chemistry continues to evolve through technological innovations—including artificial intelligence, miniaturization, and sustainable practices—that expand its capabilities and applications across diverse scientific domains. By adhering to this structured approach and maintaining awareness of emerging trends, researchers and drug development professionals can leverage analytical chemistry as a powerful tool for generating valid, reproducible data that drives scientific advancement and informed decision-making.

Analytical chemistry serves as a fundamental enabling science in pharmaceutical research and development, providing the critical framework for ensuring drug safety, efficacy, and quality. This discipline supplies the tools and methodologies for identifying, quantifying, and characterizing chemical substances throughout the drug development lifecycle [18] [19]. Without robust analytical methods, even the most promising therapeutic molecules remain theoretical constructs, unvalidated and unfit for human use [18].

The implementation of Quality by Design (QbD) principles in modern pharmaceutical development relies heavily on analytical chemistry to define and control Critical Quality Attributes (CQAs), including purity, potency, and stability [18]. This systematic approach builds quality into the manufacturing process from the start, ensuring consistent product performance. Within this framework, specific performance parameters—accuracy, precision, specificity, and limits of detection and quantification—form the foundation of reliable analytical data, enabling researchers to make informed decisions from early discovery through clinical trials and commercial production.

Core Performance Parameters for Data Quality

Accuracy and Precision: Foundations of Reliability

Accuracy refers to the closeness of agreement between a measured value and its corresponding true value or accepted reference value. It measures trueness and is typically expressed as percent recovery. In pharmaceutical analysis, accuracy determinations are performed using certified reference materials or through spike recovery experiments across the method's range [19].

Precision describes the closeness of agreement between a series of measurements obtained from multiple sampling of the same homogeneous sample under prescribed conditions. Precision has three hierarchical levels:

- Repeatability: Precision under the same operating conditions over a short interval (intra-assay)

- Intermediate Precision: Variation within laboratories (different days, analysts, equipment)

- Reproducibility: Precision between laboratories (collaborative studies)

Precision is expressed statistically as standard deviation, variance, or coefficient of variation (%RSD) [18].

Table 1: Comparison of Accuracy and Precision Parameters

| Parameter | Definition | Typical Expression | Key Evaluation Method |
|---|---|---|---|
| Accuracy | Closeness to true value | Percent recovery | Reference materials, spike recovery |
| Precision | Agreement between measurements | Standard deviation, %RSD | Repeated measurements |
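Repeatability and intermediate precision can both be estimated from a single nested design. The sketch below assumes replicate results obtained on several days, with the same number of replicates per day. It uses one-way analysis-of-variance components, as in ISO 5725-style evaluations. The within-day mean square estimates the repeatability variance. The between-day component is then added to it to give intermediate precision. The data are illustrative.

```python
import math
import statistics


def precision_components(groups):
    """Repeatability and intermediate-precision %RSD from equal-sized groups (e.g. days)."""
    k = len(groups)
    n = len(groups[0])
    if any(len(g) != n for g in groups):
        raise ValueError("this sketch assumes the same number of replicates per group")
    group_means = [statistics.mean(g) for g in groups]
    grand_mean = statistics.mean(group_means)

    # One-way ANOVA mean squares.
    ms_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g) / (k * (n - 1))
    ms_between = n * sum((m - grand_mean) ** 2 for m in group_means) / (k - 1)

    s_r2 = ms_within                                     # repeatability variance
    s_between2 = max(0.0, (ms_between - ms_within) / n)  # between-day variance, floored at 0
    s_ip = math.sqrt(s_r2 + s_between2)                  # intermediate precision SD

    return {
        "repeatability_rsd": 100 * math.sqrt(s_r2) / grand_mean,
        "intermediate_rsd": 100 * s_ip / grand_mean,
    }


# Illustrative assay results (% label claim): three days, three replicates each.
days = [
    [99.8, 100.1, 99.9],
    [100.6, 100.9, 100.4],
    [99.5, 99.7, 99.2],
]
print(precision_components(days))
```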

Specificity and Selectivity: Establishing Identity

Specificity is the ability to assess unequivocally the analyte in the presence of components that may be expected to be present, such as impurities, degradation products, and matrix components. In chromatographic methods, specificity demonstrates that the peak response is attributable only to the analyte of interest [19].

For bioanalytical methods, specificity requires demonstration that the method can differentiate and quantify the analyte in the presence of endogenous matrix components, metabolites, and concomitant medications. This is typically established by analyzing blank matrix samples from at least six different sources and comparing responses with those from samples spiked with the analyte [18].
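This kind of specificity evaluation reduces to comparing responses in the blank matrices with the response at the lowest quantified level. The sketch below assumes an acceptance rule of the form used in bioanalytical guidance: interference in each blank source must not exceed 20% of the mean analyte response at the lower limit of quantification (LLOQ). Treat the 20% figure, the six-source minimum, and the peak-area values as assumptions, to be replaced by the applicable guideline and real data.

```python
import statistics


def interference_check(blank_responses, lloq_responses, max_fraction=0.20, min_sources=6):
    """Flag blank matrix sources whose response exceeds max_fraction of the mean LLOQ response."""
    if len(blank_responses) < min_sources:
        raise ValueError(f"need blank matrix from at least {min_sources} sources")
    limit = max_fraction * statistics.mean(lloq_responses)
    failing = {src: r for src, r in blank_responses.items() if r > limit}
    return limit, failing


# Illustrative peak areas at the analyte retention time for six blank plasma lots.
blanks = {"lot1": 12.0, "lot2": 8.5, "lot3": 0.0, "lot4": 31.0, "lot5": 14.2, "lot6": 9.9}
lloq = [140.0, 152.0, 148.0]
limit, failing = interference_check(blanks, lloq)
print(f"limit = {limit:.1f}, failing sources = {failing}")
```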

Limits of Detection and Quantification: Establishing Sensitivity

The Limit of Detection (LOD) is the lowest concentration of an analyte that can be detected, but not necessarily quantified, under stated experimental conditions. The Limit of Quantification (LOQ) is the lowest concentration that can be quantitatively determined with acceptable precision and accuracy [19].

Table 2: LOD and LOQ Determination Methods

| Method | Description | Calculation | Application Context |
|---|---|---|---|
| Signal-to-Noise Ratio | Visual or mathematical comparison of analyte signal with baseline noise | LOD: S/N ≥ 3:1; LOQ: S/N ≥ 10:1 | Chromatographic methods |
| Standard Deviation of Response | Based on the SD of blank responses or of the calibration curve | LOD = 3.3σ/S; LOQ = 10σ/S (σ = SD of the response, S = calibration slope) | Spectroscopic and separation methods |
| Calibration Curve | Using the slope and the SD of the regression residuals | LOD = 3.3 × SD(residuals)/slope; LOQ = 10 × SD(residuals)/slope | Linear regression approaches |
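The calibration-curve approach can be sketched in Python with NumPy, assuming an unweighted least-squares line and using the residual standard deviation as σ (ICH Q2 also allows the standard deviation of the y-intercept). The concentrations and responses below are hypothetical:

```python
import numpy as np

def lod_loq_from_calibration(conc, response):
    """Return (LOD, LOQ, slope, sigma) using LOD = 3.3*sigma/S and LOQ = 10*sigma/S."""
    conc = np.asarray(conc, dtype=float)
    response = np.asarray(response, dtype=float)
    slope, intercept = np.polyfit(conc, response, 1)   # unweighted linear fit
    residuals = response - (slope * conc + intercept)
    # n - 2 degrees of freedom because two parameters (slope, intercept) were fitted
    sigma = np.sqrt(np.sum(residuals ** 2) / (len(conc) - 2))
    return 3.3 * sigma / slope, 10.0 * sigma / slope, slope, sigma

# Hypothetical low-level calibration (concentration in μg/mL, peak area in arbitrary units)
conc = [1, 2, 3, 4, 5, 6]
area = [10.2, 19.8, 30.3, 39.7, 50.1, 60.0]
lod, loq, slope, sigma = lod_loq_from_calibration(conc, area)
print(f"slope = {slope:.3f}, sigma = {sigma:.3f}, LOD = {lod:.3f} μg/mL, LOQ = {loq:.3f} μg/mL")
```

Limits estimated this way are normally confirmed experimentally by analyzing samples prepared at or near the calculated concentrations.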

Techniques such as UPLC and LC-MS/MS have dramatically enhanced sensitivity, allowing detection and quantification of increasingly lower analyte concentrations, which is particularly crucial for trace analysis and metabolite identification [18].

Experimental Protocols and Methodologies

Protocol for Accuracy Determination via Spike Recovery

Principle: This experiment determines method accuracy by measuring the recovery of known amounts of analyte spiked into a blank matrix or sample solution.

Materials:

- Certified reference standard of target analyte

- Appropriate solvent system matching mobile phase

- Blank matrix (e.g., placebo formulation, biological fluid, synthetic mixture)

- Analytical instrument with validated method conditions

Procedure:

- Prepare a stock solution of the reference standard at a concentration approximately 100 times the expected LOQ

- Prepare blank matrix samples from at least six different sources

- Spike the blank matrices with the analyte at three concentration levels (low, medium, high) across the calibration range

- For each level, prepare a minimum of three replicates

- Analyze all samples using the validated analytical method

- Calculate percent recovery for each sample using the formula:

Recovery (%) = (Measured Concentration / Spiked Concentration) × 100

- Calculate mean recovery and relative standard deviation across all replicates

Acceptance Criteria: Mean recovery should be within 98-102% for drug substance assays, 95-105% for drug product assays, and 85-115% for biological matrices, with precision (RSD) not exceeding 2%, 3%, and 15% respectively [18].
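The recovery calculation and acceptance check can be sketched in Python. The acceptance limits are taken from the criteria above; the spiked and measured concentrations are hypothetical, and for brevity the sketch pools all replicates rather than tracking individual matrix sources:

```python
import statistics

# (mean recovery window in %, maximum %RSD), as stated in the acceptance criteria
ACCEPTANCE = {
    "drug_substance": ((98.0, 102.0), 2.0),
    "drug_product": ((95.0, 105.0), 3.0),
    "biological_matrix": ((85.0, 115.0), 15.0),
}

def recovery_pct(measured, spiked):
    """Recovery (%) = measured concentration / spiked concentration x 100."""
    return measured / spiked * 100.0

def evaluate_recovery(results, sample_type):
    """results: list of (measured, spiked) pairs in the same concentration units."""
    recoveries = [recovery_pct(m, s) for m, s in results]
    mean_rec = statistics.mean(recoveries)
    rsd = 100.0 * statistics.stdev(recoveries) / mean_rec
    (low, high), max_rsd = ACCEPTANCE[sample_type]
    passed = low <= mean_rec <= high and rsd <= max_rsd
    return mean_rec, rsd, passed

# Hypothetical drug-product data: three levels (80, 100, 120 μg/mL), three replicates each
results = [
    (79.2, 80), (80.6, 80), (79.8, 80),
    (99.5, 100), (101.2, 100), (100.4, 100),
    (118.9, 120), (121.0, 120), (119.6, 120),
]
mean_rec, rsd, passed = evaluate_recovery(results, "drug_product")
print(f"mean recovery = {mean_rec:.1f}%, RSD = {rsd:.2f}%, pass = {passed}")
```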

Protocol for LOD and LOQ Determination via Signal-to-Noise

Principle: This method determines detection and quantification limits based on the ratio of analyte response to background noise, particularly applicable to chromatographic and spectroscopic techniques.

Materials:

- Reference standard solution at known concentration near expected LOQ

- Appropriate blank solution (mobile phase or matrix)

- Instrument with data acquisition software capable of noise measurement

Procedure:

- Prepare and inject the blank solution a minimum of six times

- Measure the baseline noise (N) in the blank chromatograms over a window around the analyte retention time, typically spanning 5-20 times the peak width at half height

- Prepare and inject a standard solution at a concentration that produces a peak response approximately 3-10 times the baseline noise

- Measure the peak height (H) from baseline to peak maximum

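- Calculate the signal-to-noise ratio: S/N = H/N (pharmacopoeial definitions such as USP <621> and Ph. Eur. 2.2.46 use S/N = 2H/h, where h is the peak-to-peak range of the baseline noise)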

- For LOD: Prepare serial dilutions until S/N ≈ 3:1

- For LOQ: Prepare serial dilutions until S/N ≈ 10:1 with precision (RSD ≤ 20%) and accuracy (80-120%)

- Confirm LOD and LOQ with a minimum of six replicates at the determined concentrations

Acceptance Criteria: The LOD concentration should yield S/N ≥ 3:1, while LOQ should yield S/N ≥ 10:1 with accuracy of 80-120% and precision RSD ≤ 20% for the six replicate measurements [19].
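The signal-to-noise calculation can be sketched in Python with NumPy. Here N is taken as the peak-to-peak range of a blank baseline segment, which is one common convention and an assumption of this sketch; the optional `factor` argument switches to the pharmacopoeial 2H/h form. All signal values are hypothetical:

```python
import numpy as np

def peak_to_peak_noise(blank_segment):
    """Noise as the range (max - min) of a blank baseline segment."""
    blank_segment = np.asarray(blank_segment, dtype=float)
    return float(blank_segment.max() - blank_segment.min())

def signal_to_noise(peak_apex, baseline_level, blank_segment, factor=1.0):
    """S/N = factor * H / N, where H is the peak height above the baseline.
    factor=1.0 matches S/N = H/N; factor=2.0 gives the 2H/h form."""
    height = peak_apex - baseline_level
    return factor * height / peak_to_peak_noise(blank_segment)

# Hypothetical detector readings (mAU) from a blank injection near the analyte retention time
blank = [0.10, -0.12, 0.05, 0.15, -0.10, 0.00, -0.15, 0.08]
sn = signal_to_noise(peak_apex=3.3, baseline_level=0.0, blank_segment=blank)
meets_lod = sn >= 3.0
meets_loq = sn >= 10.0
print(f"S/N = {sn:.1f}; LOD criterion met: {meets_lod}; LOQ criterion met: {meets_loq}")
```

An S/N that passes here would still need the six-replicate accuracy and precision check described above before the concentration is accepted as the LOQ.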

Workflow Visualization

Analytical Method Development and Validation Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Essential Research Reagents and Materials for Analytical Quality Assessment

| Reagent/Material | Function | Application Example |
|---|---|---|
| Certified Reference Standards | Provide a substance of known purity for calibration and accuracy determination | Quantification of Active Pharmaceutical Ingredients (APIs) [18] |
| Chromatographic Columns | Separate complex mixtures; different selectivities support specificity | UPLC columns for resolution of drug metabolites [19] |
| Mass Spectrometry-Grade Solvents | High-purity solvents that minimize background interference | LC-MS mobile phase preparation [18] |
| Stable Isotope-Labeled Internal Standards | Correct for matrix effects and recovery variations in mass spectrometry | Quantitative bioanalysis of drugs in plasma [19] |
| Quality Control Materials | Monitor method performance over time; assess precision | Commercially available QC samples for method validation [18] |

Advanced Applications in Pharmaceutical Development

Modern analytical techniques have revolutionized pharmaceutical quality assessment. High-performance liquid chromatography (HPLC) and ultra-high-performance liquid chromatography (UHPLC) offer high resolution and reproducibility in quantifying active pharmaceutical ingredients (APIs) and their metabolites [19]. These techniques are fundamental for determining parameters like accuracy and precision in complex matrices.

The integration of mass spectrometry (MS) with chromatographic systems adds a high degree of specificity, because mass spectra provide structural information on each separated component. As noted in recent pharmaceutical developments, "UPLC and LC-MS were used to determine the concentration of olanzapine and its metabolites in blood, plasma, and serum" [19]. This approach demonstrates the critical role of specificity in distinguishing parent compounds from metabolites in biological systems.

Emerging technologies continue to push sensitivity boundaries. Techniques like Raman spectroscopy show promise in early cancer detection through analysis of in vivo samples, highlighting the importance of low LOD/LOQ values in diagnostic applications [19]. Similarly, advancements in point-of-care testing (POCT) and lab-on-a-chip (LOC) platforms rely on rigorously validated analytical parameters to ensure reliability in decentralized healthcare settings [19].

Analytical chemistry is undergoing a transformative evolution, emerging as a critical enabling science that accelerates research and development across pharmaceutical, environmental, and materials fields. This transformation is driven by the convergence of three powerful trends: artificial intelligence (AI), miniaturization, and sustainable practices. These interconnected domains are reshaping traditional laboratory workflows, enhancing efficiency, reducing environmental impact, and unlocking new capabilities for scientific discovery.

The integration of AI into analytical chemistry provides sophisticated data-driven insights and predictive capabilities that were previously impractical. Miniaturization technologies are shrinking experimental scale, enabling high-throughput analysis while dramatically reducing resource consumption. Concurrently, the principles of green and sustainable chemistry are being systematically embedded into analytical methodologies, aligning scientific progress with environmental stewardship. Together, these advancements are positioning analytical chemistry as a pivotal discipline that enables breakthroughs across the scientific spectrum, from drug discovery to environmental monitoring.

Artificial Intelligence in Analytical Chemistry

Machine Learning and Deep Learning Applications

Modern AI in chemistry primarily consists of neural networks that encode information as numerical values determined by inputs from other artificial neurons. These systems learn from training data through processes referred to as machine learning (ML) and deep learning (DL), with the latter featuring multiple "deep" layers of neurons that pass information to subsequent layers [20]. The amount and quality of training data strongly influence AI performance, with effectiveness typically increasing logarithmically with data volume—from 1,000 data points providing basic functionality to 100,000 enabling robust performance [20].

In analytical chemistry, AI applications span multiple domains:

Property and Structure Prediction: Graph neural networks (GNNs) have demonstrated particular effectiveness for predicting molecular properties from structures. These networks represent molecules as mathematical graphs where edges connect nodes, analogous to chemical bonds connecting atoms [20]. GNNs excel in supervised learning tasks where models are trained with chemical structures and their associated properties, enabling prediction of properties for new structures based on learned patterns.
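The graph representation and a single message-passing step can be sketched in Python with NumPy. The molecule (the heavy atoms of ethanol), the one-hot atom features and the weight matrix are illustrative assumptions; in a trained GNN the weights are learned from labeled data, and several such layers are stacked before the readout feeds a property predictor:

```python
import numpy as np

# Heavy-atom graph of ethanol, C-C-O (hydrogens omitted for brevity)
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]

adjacency = np.zeros((len(atoms), len(atoms)))
for i, j in bonds:                      # one undirected edge per chemical bond
    adjacency[i, j] = adjacency[j, i] = 1.0

# Node features: one-hot element encoding [is_C, is_O]
features = np.array([[1.0, 0.0] if a == "C" else [0.0, 1.0] for a in atoms])

# Hypothetical, untrained weight matrix (learned in a real model)
weights = np.array([[0.5, 0.2],
                    [1.0, -0.3]])

def message_passing_step(adj, h, w):
    """Each atom aggregates its own and its neighbours' features, (A + I) h,
    then applies a linear transform and a ReLU non-linearity."""
    aggregated = (adj + np.eye(len(adj))) @ h
    return np.maximum(aggregated @ w, 0.0)

node_embeddings = message_passing_step(adjacency, features, weights)
molecule_embedding = node_embeddings.sum(axis=0)   # sum readout: one vector per molecule
print(node_embeddings)
print(molecule_embedding)
```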

Molecular Simulation: Machine learning potentials (MLPs) represent a significant advancement in molecular simulation, effectively replacing computationally demanding density functional theory (DFT) calculations while maintaining comparable accuracy [20]. MLPs trained on DFT data can perform simulations that are "way faster" than conventional approaches, potentially reducing the substantial computational resources traditionally required for these calculations.

Reaction Prediction: Recent innovations in reaction prediction incorporate fundamental physical principles to enhance accuracy. The FlowER (Flow matching for Electron Redistribution) system developed at MIT uses a bond-electron matrix to represent electrons in reactions, explicitly tracking all electrons to ensure conservation of mass and energy [21]. This approach grounds AI predictions in physical reality, addressing a significant limitation of earlier models that sometimes generated chemically impossible reactions.

Table 1: Types of Artificial Intelligence in Chemistry

| AI Type | Key Features | Chemistry Applications | Performance Considerations |
|---|---|---|---|
| Graph Neural Networks (GNNs) | Represents molecules as mathematical graphs of connected nodes | Property prediction, structure-function relationships | Requires thousands of labeled data points for training; suitable for large datasets |
| Large Language Models (LLMs) | Transformer architecture, generative capabilities | Reaction prediction, synthesis planning | May violate physical constraints; requires careful validation |
| Machine Learning Potentials (MLPs) | Trained on quantum chemical data | Molecular dynamics simulations | "Way faster" than DFT; limited transferability between chemical systems |
| Generative Models | Creates new information similar to training data | Molecular design, reaction discovery | Effective for exploring new chemical spaces; may require fine-tuning |

Practical Implementation and Validation

Successful implementation of AI tools requires careful consideration of their limitations and appropriate validation strategies. General-purpose LLMs like ChatGPT may function as "glorified Google searches" with "more-efficient summarization" capabilities but often struggle with structural and equation-based chemical problems [20]. Their reproducibility challenge—producing different outputs for identical inputs—makes them unsuitable for applications requiring consistent results.

Benchmarking against established standards provides critical validation for AI tools. Resources like SciBench (containing university-level questions), Tox21 (for toxicity predictions), and MatBench (for material property predictions) enable objective comparison of AI performance [20]. For AI tools claiming to enhance molecule discovery, experimental validation remains essential to confirm real-world utility.

The FlowER system demonstrates how incorporating chemical knowledge addresses key limitations of previous approaches. By using a bond-electron matrix with nonzero values representing bonds or lone electron pairs and zeros representing their absence, the system conserves both atoms and electrons during reaction prediction [21]. This physically-grounded approach matches or outperforms existing methods in identifying standard mechanistic pathways while ensuring chemical validity.
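The conservation idea can be illustrated with a bond-electron matrix in the classic Dugundji-Ugi convention, which is assumed here and may differ in detail from FlowER's internal encoding: off-diagonal entries give bond orders, diagonal entries give non-bonding valence electrons, so the sum of all entries equals the total number of valence electrons. A minimal Python sketch for heterolytic cleavage of HCl, with a deliberately invalid prediction for contrast:

```python
import numpy as np

# Atom order is fixed, so atoms are conserved by construction: [H, Cl]
# Reactant H-Cl: bond order 1 between H and Cl; 6 non-bonding electrons on Cl
reactant = np.array([[0, 1],
                     [1, 6]])

# Product H+ + Cl-: bond broken, both bonding electrons now non-bonding on Cl
product = np.array([[0, 0],
                    [0, 8]])

# A chemically impossible "prediction" that loses two electrons
bad_product = np.array([[0, 0],
                        [0, 6]])

def valence_electron_count(be):
    """Each bond order appears twice (i,j and j,i), contributing 2 electrons
    per bond; diagonal entries add the non-bonding electrons."""
    return int(be.sum())

def conserves_electrons(before, after):
    """Valid only if the reaction matrix R = after - before sums to zero."""
    return int((after - before).sum()) == 0

print(valence_electron_count(reactant))   # H (1) + Cl (7) valence electrons
print(conserves_electrons(reactant, product))
print(conserves_electrons(reactant, bad_product))
```

A constraint check of this kind rejects the invalid product outright, which is the sense in which a physically grounded representation prevents chemically impossible predictions.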

Miniaturization Technologies and Methodologies

Miniaturized Sample Preparation and Analysis

Miniaturization of manual sample preparation methods represents a cornerstone of modern analytical chemistry, offering significant advantages in efficiency, safety, cost, and data quality. By scaling down sample volumes and optimizing processes, miniaturization addresses critical challenges in traditional analytical workflows [22].

Microextraction techniques exemplify this trend, with methods including:

- Solid-Phase Microextraction (SPME): Uses a single fiber for extraction, integrating extraction and concentration into a single step

- Liquid-Phase Microextraction (LPME): Employs microliter quantities of solvents

- Dispersive Liquid-Liquid Microextraction (DLLME): Reduces solvent consumption by up to 90% while maintaining analytical performance

- Microextraction in Packed Syringe (MEPS): Coupled with HPLC-UV, enables highly sensitive determination of compounds such as bisphenol A in water samples at picogram levels [23]

These techniques dramatically simplify workflows by reducing intermediate steps and consumables. Where traditional methods like liquid-liquid extraction (LLE) or solid-phase extraction (SPE) might require 30-60 minutes per sample and consume tens of milliliters of solvents, miniaturized approaches can process batches of 12-48 samples in 5-10 minutes with minimal solvent use [22]. This efficiency enhancement allows analysts to process more samples daily, significantly increasing throughput.

Table 2: Impact of Miniaturization on Analytical Parameters

| Parameter | Traditional Methods | Miniaturized Methods | Improvement |
|---|---|---|---|
| Sample Volume | 10-50 mL | 1-100 μL | 100-1000x reduction |
| Solvent Consumption | 10-50 mL per sample | <100 μL per sample | Up to 99% reduction |
| Processing Time | 30-60 minutes per sample | 5-10 minutes per batch | 6-12x faster |
| Cost per Sample | £5-£20 | £1-£3 | 60-85% reduction |
| Waste Generation | High (grams of glass, solvent waste) | Minimal (mg waste) | Up to 90% reduction |

Ultrahigh-Throughput Experimentation

Miniaturization enables ultrahigh-throughput experimentation, particularly in drug discovery, where it accelerates the evaluation of chemical reactions and compound synthesis. Recent advances demonstrate the miniaturization of popular medicinal chemistry reactions—including reductive amination, N-alkylation, N-Boc deprotection, and Suzuki coupling—for utilization in 1.2 μL reaction droplets [24].

Pushing miniaturization to the limits of chemoanalytical and bioanalytical detection accelerates drug discovery by maximizing the experimental data collected per milligram of material consumed. Reaction methods adapted to run in high-boiling solvents at room temperature, which limits evaporation from microliter droplets, enable the diversification of precious starting materials such as the complex natural product staurosporine [24].

The experimental workflow for reaction miniaturization involves:

- Droplet Generation: Creating nanoliter to microliter scale reaction vessels

- Solvent Selection: Utilizing high-boiling solvents compatible with room-temperature reactions

- Parallel Processing: Simultaneously executing hundreds to thousands of reactions

- Automated Analysis: Integrating directly with analytical instrumentation like LC-MS

This approach transforms traditional chemical synthesis, enabling rapid exploration of structure-activity relationships and expanding accessible chemical space with minimal material consumption.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Miniaturized Analytical Chemistry

| Reagent/Equipment | Function | Application Example |
|---|---|---|
| DLLME Vials | Miniaturized container for dispersive liquid-liquid microextraction | Sample preparation for chromatographic analysis |
| SmartSPE Cartridges | Solid-phase extraction with reduced solvent consumption | Environmental sample cleanup and concentration |
| SPME Fibers | Solid-phase microextraction with integrated concentration | VOC analysis in environmental and biological samples |
| MEPS Packed Syringes | Microextraction in packed syringe for small sample volumes | Bisphenol A determination in water samples [23] |
| PAL3 Consumables | Automated sample preparation components | High-throughput laboratory automation |
| Zivak Multitasker Kits | Automated sample preparation for clinical diagnostics | Forensic toxicology and clinical sample processing |
| CE-IVD Reagents | In vitro diagnostic reagents for clinical testing | Patient sample analysis in diagnostic laboratories |

Sustainable Practices in Analytical Chemistry

Green Analytical Chemistry Principles and Framework

Green Analytical Chemistry (GAC) represents a transformative approach that embeds the 12 principles of green chemistry into analytical methodologies, emphasizing sustainability while maintaining high standards of accuracy and precision [25]. These principles provide a comprehensive strategy for designing environmentally benign analytical techniques:

- Waste Prevention: Designing analytical processes that avoid generating waste

- Atom Economy: Maximizing incorporation of starting materials into final products

- Less Hazardous Chemical Syntheses: Minimizing toxicity in reagents and solvents

- Designing Safer Chemicals: Protecting both analysts and the environment

- Safer Solvents and Auxiliaries: Using non-toxic, biodegradable alternatives

- Energy Efficiency: Developing techniques that operate under milder conditions

- Renewable Feedstocks: Replacing finite resources with bio-based alternatives

- Reducing Derivatives: Minimizing temporary chemical modifications

- Catalysis: Using catalytic reagents over stoichiometric ones

- Design for Degradation: Ensuring chemicals decompose into harmless products

- Real-time Analysis: Monitoring processes to prevent hazardous by-products

- Inherently Safer Chemistry: Minimizing risk of accidents [25]

Life Cycle Assessment (LCA) has emerged as a critical tool for evaluating the environmental impact of analytical methods across their entire lifespan—from raw material extraction to waste disposal [25]. LCA provides a systemic perspective that captures often-overlooked environmental burdens, such as energy demands during instrument manufacturing or agricultural impacts of bio-based solvent production, enabling informed decisions about method selection and optimization.

Green Method Transfer in Liquid Chromatography

A significant focus of sustainable analytical chemistry involves transferring classical HPLC and UHPLC methods into greener alternatives. This process typically centers on substituting organic solvent components in mobile phases with more environmentally benign options while maintaining analytical performance [26].

The method transfer process involves:

- Solvent Selection: Evaluating greenness properties and chromatographic suitability of alternative solvents

- Method Optimization: Adjusting parameters to maintain separation efficiency with new solvents

- Validation: Confirming method performance meets analytical requirements

Green solvent alternatives include water, supercritical carbon dioxide, ionic liquids, and bio-based solvents, which replace volatile organic compounds (VOCs) and reduce toxicity [25]. The transfer to greener chromatographic methods aligns with the broader objectives of sustainable development while maintaining the precision and accuracy required for analytical applications.

Quantitative Benefits of Sustainable Practices

The implementation of green analytical chemistry principles yields measurable benefits across multiple dimensions:

Environmental Impact: Miniaturization techniques reduce solvent consumption by up to 90% compared to conventional approaches [22]. Even a modest scale-down, such as moving from 20 mL to 10 mL vials for headspace VOC analysis, reduces solvent, surrogate, and calibration standard usage by 50%, while eliminating up to half a tonne of borosilicate glass waste annually per instrument [22].

Economic Savings: Miniaturization offers substantial cost reductions, with traditional sample preparation costing £5-£20 per sample compared to £1-£3 for miniaturized methods [22]. Laboratories processing 10,000 samples annually could save £45,000-£95,000 by adopting microextraction techniques, creating a compelling business case for capital investment in automated systems.
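The savings arithmetic can be checked with a short Python sketch using the per-sample costs quoted above. Pairing the extremes of the two ranges bounds the annual saving for 10,000 samples at £20,000-£190,000; the quoted £45,000-£95,000 corresponds to £4.50-£9.50 saved per sample, which sits inside that envelope. Capital and maintenance costs of automated systems are not included:

```python
def annual_saving(n_samples, cost_traditional, cost_miniaturised):
    """Annual saving (GBP) from switching n_samples to a cheaper method."""
    return n_samples * (cost_traditional - cost_miniaturised)

N_SAMPLES = 10_000
TRADITIONAL = (5.0, 20.0)   # GBP per sample, range quoted in the text
MINIATURISED = (1.0, 3.0)   # GBP per sample, range quoted in the text

# Most pessimistic pairing: cheapest traditional vs most expensive miniaturised
lowest = annual_saving(N_SAMPLES, TRADITIONAL[0], MINIATURISED[1])
# Most optimistic pairing: most expensive traditional vs cheapest miniaturised
highest = annual_saving(N_SAMPLES, TRADITIONAL[1], MINIATURISED[0])
# Per-sample saving implied by the quoted £45,000-£95,000 range
implied_per_sample = (45_000 / N_SAMPLES, 95_000 / N_SAMPLES)

print(f"bounds: £{lowest:,.0f} to £{highest:,.0f}")
print(f"quoted range implies £{implied_per_sample[0]:.2f}-£{implied_per_sample[1]:.2f} per sample")
```

Substituting a laboratory's own per-sample costs and sample volume gives a first estimate for the business case.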

Safety Enhancement: Reduced chemical volumes minimize analyst exposure to hazardous substances. While conventional LLE might require 10-50 mL of chloroform, microextraction alternatives use less than 100 μL, drastically lowering exposure potential [22]. Miniaturized workflows often employ closed systems, further reducing direct contact with hazardous materials.

Data Quality Improvement: Miniaturized methods enhance analytical precision by reducing variability from multiple manual steps. Techniques like SPME and DLLME achieve enrichment factors of 100-1000, improving detection limits for trace analytes—a critical advantage in environmental monitoring and clinical diagnostics [22].
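Enrichment can be quantified with the definitions commonly used for DLLME: the enrichment factor EF = C_extract / C_sample, and the extraction recovery ER (%) = EF × (V_extract / V_sample) × 100. A minimal Python sketch with hypothetical volumes and concentrations; dividing an instrumental LOD by EF gives only an idealized estimate of the method LOD, since matrix effects and blank contributions are ignored:

```python
def enrichment_factor(c_extract, c_sample):
    """EF = analyte concentration in the final extract / concentration in the original sample."""
    return c_extract / c_sample

def extraction_recovery_pct(ef, v_extract, v_sample):
    """ER (%) = EF * (V_extract / V_sample) * 100; volumes in the same units."""
    return ef * v_extract / v_sample * 100.0

# Hypothetical DLLME run: 10 mL aqueous sample, 20 μL sedimented extract
v_sample_ul, v_extract_ul = 10_000.0, 20.0
c_sample = 2.0        # ng/mL in the original sample
c_extract = 800.0     # ng/mL measured in the extract

ef = enrichment_factor(c_extract, c_sample)
er = extraction_recovery_pct(ef, v_extract_ul, v_sample_ul)
max_ef = v_sample_ul / v_extract_ul           # EF ceiling at 100 % recovery
instrument_lod = 0.5                          # ng/mL, hypothetical
estimated_method_lod = instrument_lod / ef    # idealized: ignores matrix effects

print(f"EF = {ef:.0f} (max {max_ef:.0f}), ER = {er:.0f}%, "
      f"estimated method LOD = {estimated_method_lod:.5f} ng/mL")
```

The volume ratio sets the ceiling on EF, which is why enrichment factors in the 100-1000 range require extract volumes hundreds of times smaller than the sample.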

Integrated Workflows and Future Perspectives

Converging Technologies in Analytical Chemistry

The most significant advancements emerge from the integration of AI, miniaturization, and sustainability practices into unified workflows. These converging technologies create synergistic effects that transcend their individual capabilities:

AI-Optimized Miniaturization: Machine learning algorithms guide the design of miniaturized experiments, optimizing conditions for minimal resource use while maximizing information content. AI tools can predict optimal solvent systems, reaction conditions, and analytical parameters for microscale experiments.

Intelligent Sustainability: AI-driven life cycle assessment tools evaluate the environmental impact of analytical methods, suggesting modifications to improve greenness metrics while maintaining performance. These systems can automatically identify opportunities for solvent replacement or energy reduction.

Closed-Loop Automation: Integrated systems combine miniaturized experimentation with AI-guided decision making, creating self-optimizing analytical platforms. These systems continuously refine methods based on experimental outcomes, progressively enhancing efficiency and sustainability.
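The closed-loop idea can be sketched in Python. The "instrument" below is a hypothetical smooth response surface for chromatographic resolution as a function of column temperature and percentage organic modifier, and the optimizer is a simple compass (pattern) search chosen for brevity; real self-optimizing platforms often use more sample-efficient strategies such as Bayesian optimization:

```python
def simulated_resolution(temp_c, organic_pct):
    """Hypothetical response surface standing in for a real experiment.
    Its optimum (40 °C, 35 % organic, Rs = 3.0) is an arbitrary illustrative choice."""
    return 3.0 - 0.001 * (temp_c - 40) ** 2 - 0.002 * (organic_pct - 35) ** 2

def closed_loop_optimise(run_experiment, start, step, min_step=0.5, max_runs=200):
    """Compass search: try +/- step along each parameter, keep any improvement,
    halve the step when no move helps, stop when the step or run budget is exhausted."""
    best = tuple(start)
    best_score = run_experiment(*best)
    runs = 1
    while step > min_step and runs < max_runs:
        improved = False
        for dim in range(len(best)):
            for sign in (1, -1):
                candidate = list(best)
                candidate[dim] += sign * step
                score = run_experiment(*candidate)   # "run" the next experiment
                runs += 1
                if score > best_score:               # decide based on the outcome
                    best, best_score, improved = tuple(candidate), score, True
        if not improved:
            step /= 2
    return best, best_score, runs

best, score, runs = closed_loop_optimise(simulated_resolution, start=(25, 50), step=8)
print(f"best conditions: {best[0]:.1f} °C, {best[1]:.1f} % organic; Rs = {score:.3f} after {runs} runs")
```

Replacing `simulated_resolution` with a call that submits a real run and returns a measured figure of merit would turn the same loop into a (very basic) self-optimizing workflow.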

The integration of these technologies positions analytical chemistry as a key enabling science that accelerates discovery while reducing environmental impact. This convergence is particularly impactful in pharmaceutical development, where accelerated reaction screening and analysis directly translate to reduced time-to-market for new therapeutics.

Emerging Trends and Future Directions

The future landscape of analytical chemistry will be shaped by several emerging trends:

Explainable AI in Chemistry: As AI systems become more sophisticated, developing interpretable models that provide chemical insights beyond predictions will be essential. Understanding the rationale behind AI recommendations builds trust and facilitates scientific discovery.

Nanoscale Synthesis and Analysis: Miniaturization will continue advancing toward nanoscale reactions and analysis, further reducing material requirements while enabling unprecedented experimental density.

Sustainable AI: Addressing the substantial energy consumption of large AI models through efficient algorithms and specialized hardware will be necessary to align AI advancements with sustainability goals.

Democratization of Tools: User-friendly interfaces and automated platforms will make advanced AI and miniaturization technologies accessible to non-specialists, broadening their impact across scientific disciplines.

Regulatory Integration: Development of standardized frameworks for validating and implementing AI-guided, miniaturized methods in regulated environments like pharmaceutical quality control.

These advancements will further solidify analytical chemistry's role as an enabling science that not only supports but actively drives innovation across research domains. By providing more information with less material, reducing environmental impact, and accelerating discovery cycles, the integrated application of AI, miniaturization, and sustainable practices represents the future of analytical science.

From Bench to Bedside: Essential Techniques and Their Real-World Applications in Biomedicine

Analytical chemistry serves as a critical enabling science in pharmaceutical research and development, providing the foundational tools to ensure drug safety and efficacy. Among these tools, chromatographic techniques stand as pillars for the separation, identification, and quantification of drug components and their impurities. The International Council for Harmonisation (ICH) guidelines mandate that pharmaceutical manufacturers provide validated, stability-indicating methods to prove the identity, potency, and purity of drug substances and products [27]. Chromatography comprehensively addresses these requirements by separating complex mixtures into individual components, allowing for precise characterization.

The journey of a drug molecule from discovery to market requires rigorous analytical oversight to monitor stability and detect degradants that could compromise patient safety. Well-documented cases in pharmaceutical history, such as the teratogenic effects of one thalidomide enantiomer, underscore the critical importance of separating and analyzing individual components within drug substances [28]. This technical guide explores the application of Liquid Chromatography (LC), Gas Chromatography (GC), and High-Performance Liquid Chromatography (HPLC) in assessing drug purity and stability, providing scientists with a comprehensive resource for method selection and implementation within a rigorous analytical framework.

High-Performance Liquid Chromatography (HPLC): The Workhorse of Pharmaceutical Analysis

HPLC has largely replaced gas chromatography and many spectroscopic methods in routine pharmaceutical analysis over the past decades [29] [30]. Its dominance stems from its versatility, specificity, and applicability to a wide range of compounds, including those that are non-volatile, thermally labile, or high in molecular mass.

Core Principles and Pharmaceutical Applications

HPLC operates by forcing a pressurized liquid mobile phase containing the sample mixture through a column packed with a solid stationary phase. Components separate based on their different interaction strengths with the stationary phase, eluting at characteristic retention times [31]. This process is exceptionally adaptable; by modifying the mobile phase composition, pH, temperature, and stationary phase chemistry, analysts can achieve separations for diverse compound types.

Key applications of HPLC in pharmaceutical analysis include [32] [29] [30]:

- Assay and Purity Testing: Quantifying the active pharmaceutical ingredient (API) and related substances in bulk drugs and final formulations.

- Stability and Forced Degradation Studies: Identifying and quantifying degradation products formed under stress conditions (e.g., heat, light, acid, base, oxidation).

- Bioanalysis: Measuring drug and metabolite concentrations in biological fluids (plasma, serum, urine) to support pharmacokinetic and therapeutic drug monitoring.

- Dissolution Testing: Assessing drug release from pharmaceutical formulations.

- Chiral Separations: Resolving enantiomers using chiral stationary phases or chiral additives, which is crucial as enantiomers can exhibit different pharmacological or toxicological effects.

HPLC Methodologies and Stability-Indicating Assays

A stability-indicating method is a validated analytical procedure that can reliably detect and quantify changes in the API concentration over time and discriminate the API from its degradation products [27]. HPLC is the dominant technique for this purpose. Method development involves screening columns of different selectivity and mobile phases at different pH values to achieve optimal separation of the API from all potential impurities and degradants [28].
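Separation during method development is usually judged with a few standard chromatographic figures of merit: the retention factor k = (t_R - t_0)/t_0, the selectivity α = k₂/k₁, and the resolution R_s = 2(t_R2 - t_R1)/(w_b1 + w_b2) from baseline peak widths. A minimal Python sketch for a hypothetical API/degradant pair; R_s ≥ 1.5 is conventionally taken as baseline separation for similar-sized Gaussian peaks, and the 2.0 target used here is an assumed, more conservative internal criterion:

```python
def retention_factor(t_r, t_0):
    """k = (tR - t0) / t0, with t0 the column dead time."""
    return (t_r - t_0) / t_0

def selectivity(k1, k2):
    """alpha = k2 / k1 for the later-eluting (k2) and earlier-eluting (k1) peak."""
    return k2 / k1

def resolution(t_r1, t_r2, w_b1, w_b2):
    """Rs = 2 (tR2 - tR1) / (wb1 + wb2), using peak widths at baseline."""
    return 2.0 * (t_r2 - t_r1) / (w_b1 + w_b2)

# Hypothetical retention data (minutes)
t0 = 1.0
api_tr, api_wb = 6.2, 0.25
degradant_tr, degradant_wb = 6.8, 0.27

k_api = retention_factor(api_tr, t0)
k_deg = retention_factor(degradant_tr, t0)
alpha = selectivity(k_api, k_deg)
rs = resolution(api_tr, degradant_tr, api_wb, degradant_wb)
meets_target = rs >= 2.0   # assumed internal target

print(f"k(API) = {k_api:.2f}, k(degradant) = {k_deg:.2f}, alpha = {alpha:.3f}, "
      f"Rs = {rs:.2f}, target met: {meets_target}")
```

During column and mobile-phase screening, the same calculation would be repeated for every critical pair in the forced-degradation samples, with the lowest R_s governing the decision.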

Table 1: Typical HPLC Conditions for Stability-Indicating Methods of Various Drug Substances

| Drug Substance | Elution Mode | Mobile Phase Composition | Reference |
|---|---|---|---|
| Ezetimibe | Gradient | Ammonium acetate buffer (pH 7.0) and acetonitrile | [27] |
| Sacubitril and Valsartan | Isocratic | Trifluoroacetic acid in water-methanol | [27] |
| Atorvastatin and Amlodipine | Isocratic | Acetonitrile-NaH₂PO₄ buffer (pH 4.5) | [27] |
| Vancomycin Hydrochloride | Isocratic | Citrate buffer (pH 4)-acetonitrile-methanol | [27] |
| Flibanserin | Isocratic | Ammonium acetate buffer (pH 3) and acetonitrile | [27] |

Forced degradation studies are a critical component of validating a stability-indicating method. Samples of the drug substance and product are subjected to harsh conditions (acid, base, peroxide, heat, light) to generate potential degradants. The HPLC method must then be able to resolve the main API peak from these degradation products, demonstrating its specificity and ability to monitor product stability throughout its shelf life [28] [27].

Figure 1: HPLC Analysis Workflow for Drug Purity

Advanced HPLC Techniques: Hyphenated Systems and Chiral Separations

The connection of HPLC to specific and sensitive detector systems vastly expands its capabilities. Hyphenated systems like HPLC-DAD (Diode Array Detector), LC-MS (Mass Spectrometry), and LC-NMR (Nuclear Magnetic Resonance) are now fundamental in modern laboratories [29] [30]. While a conventional UV/VIS detector is versatile, a DAD records a complete UV spectrum at every data point across the chromatographic peak, which is crucial for peak purity assessment [28]. LC-MS provides structural information and is highly specific and sensitive for identifying and quantifying impurities and degradants [33] [27].

Chiral separations represent another critical application. Since enantiomers can have vastly different biological activities—as seen with thalidomide—their separation is a pharmaceutical imperative [28] [30]. This is typically achieved using a chiral stationary phase (CSP), which incorporates a chiral selector (e.g., proteins, cyclodextrins, derivatized polysaccharides) that interacts differentially with each enantiomer, enabling their resolution [30].

Gas Chromatography (GC) in Pharmaceutical Analysis

GC is a powerful technique for separating volatile and semi-volatile compounds. Its application, while more specialized than HPLC, remains vital for specific analyses within the pharmaceutical industry.

Principle and Pharmaceutical Applications

GC separates analytes based on their partitioning between a gaseous mobile phase and a liquid stationary phase coated on a column wall or packing material. The sample is vaporized and carried by an inert gas (e.g., helium, nitrogen) through the column, with components separating based on their volatility and interaction with the stationary phase [34] [27].

Table 2: Key Applications of Gas Chromatography in Pharmaceutical Analysis

| Application Area | Primary Function | Example Analytes |
|---|---|---|
| Residual Solvent Analysis | Quantification of organic solvents from manufacturing | Methanol, Ethyl Acetate, Dichloromethane [34] |
| Impurity Profiling | Identification and quantification of process impurities and degradants | Reaction by-products, volatile degradation products [34] |
| Drug Formulation Analysis | Assessment of composition and stability | Excipients, additives, API in some cases [34] |
| Pharmacokinetic Studies | Analysis of drug concentrations in biological samples | Volatile drugs and their metabolites in blood, urine [34] |
| Forensic Analysis | Identification and confirmation of drugs of abuse | Cocaine, amphetamines, cannabinoids (via GC-MS) [34] |

Experimental Protocol: Residual Solvent Analysis by GC

Residual solvent testing is a classic GC application to ensure compliance with regulatory limits [34] [27].

Sample Preparation: The pharmaceutical sample (e.g., bulk drug substance) is accurately weighed and dissolved in a suitable high-purity solvent, such as dimethyl sulfoxide (DMSO) or water. The solution is often prepared in a headspace vial.

Instrumentation and Conditions:

- Instrument: Gas Chromatograph equipped with a Headspace Sampler (HS-GC) and a Flame Ionization Detector (FID) or Mass Spectrometer (GC-MS).

- Column: Fused-silica capillary column with a stationary phase such as 6% cyanopropylphenyl/94% dimethylpolysiloxane (the G43 phase used in USP <467>) or (5%-phenyl)-methylpolysiloxane.

- Carrier Gas: Helium, nitrogen, or hydrogen at a constant flow rate.

- Temperature Program: The oven temperature is ramped from a low initial hold (e.g., 40°C) to a high final temperature (e.g., 240°C) at a defined rate to separate all volatile components.

- Detection: FID is commonly used for quantification; MS detection provides confirmatory identification of unknown peaks.

Analysis: The sealed vial is heated and equilibrated in the headspace sampler so that the volatile solvents partition into the gas phase. An aliquot of the headspace gas is then automatically injected into the GC system. Solvents are identified by comparing their retention times with those of certified reference standards and quantified by comparing their peak areas with those of the standards.
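The peak-area comparison can be sketched as a single-point external-standard calculation that reports the result in ppm (µg of solvent per g of sample) and checks it against a limit. This is illustrative, not a validated procedure. It assumes the standard and sample are prepared in the same diluent volume and vial type, that the response is linear through zero, and that the matrix does not alter headspace partitioning; in practice, method validation must confirm these assumptions. The limits shown are the ICH Q3C values for the solvents in Table 2. The function names and the run data are hypothetical.

```python
# ICH Q3C limits (ppm) for the example solvents in Table 2
# (methanol and dichloromethane are Class 2; ethyl acetate is Class 3).
ICH_Q3C_LIMITS_PPM = {
    "methanol": 3000.0,
    "dichloromethane": 600.0,
    "ethyl acetate": 5000.0,
}


def residual_solvent_ppm(
    area_sample: float,
    area_std: float,
    std_conc_ug_per_ml: float,
    diluent_volume_ml: float,
    sample_mass_g: float,
) -> float:
    """Single-point external-standard result in ppm (ug solvent per g sample).

    Assumes standard and sample share the same diluent volume and vial type,
    a linear response through zero, and no matrix effect on headspace partitioning.
    """
    if area_std <= 0 or sample_mass_g <= 0:
        raise ValueError("standard area and sample mass must be positive")
    solvent_ug = (area_sample / area_std) * std_conc_ug_per_ml * diluent_volume_ml
    return solvent_ug / sample_mass_g


def within_limit(solvent: str, ppm: float) -> bool:
    """True if the result does not exceed the ICH Q3C limit for that solvent."""
    return ppm <= ICH_Q3C_LIMITS_PPM[solvent]


# Hypothetical run: 0.1 g drug substance in 5 mL diluent; 30 ug/mL methanol standard
ppm = residual_solvent_ppm(1200.0, 1000.0, 30.0, 5.0, 0.1)
print(round(ppm), within_limit("methanol", ppm))  # 1800 True
```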

The Critical Assessment of Peak Purity

A fundamental challenge in chromatographic analysis is confirming that an observed peak corresponds to a single compound and is not the result of two or more co-eluting substances. Peak purity assessment is therefore essential for accurate quantification and identification.

Peak Purity Assessment Using Photodiode Array Detection (PDA)

PDA-based assessment is the most common approach for evaluating spectral peak purity [28] [35]. It answers the question: "Is this chromatographic peak composed of compounds having a single spectroscopic signature?" [28]

Theoretical Basis: The method treats a UV spectrum as a vector in n-dimensional space, where n is the number of data points (wavelengths) in the spectrum. Spectral similarity is measured as the angle (θ) between the vector of the spectrum at the peak apex and the vectors of spectra from other parts of the peak (e.g., upslope and tail). This spectral contrast angle is 0° when two spectra have the same shape, regardless of their absolute intensity. The software combines these angles into a purity angle; a purity angle less than the purity threshold (derived from spectral noise) suggests spectral homogeneity [28] [35].
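The geometric idea can be written as cos θ = (a·b)/(‖a‖‖b‖) for two spectra a and b sampled at the same wavelengths. The minimal sketch below computes only this raw angle. Commercial PDA software applies its own scaling and noise handling on top of it, so this should not be read as any vendor's algorithm. The absorbance values are hypothetical.

```python
import math
from typing import Sequence


def spectral_contrast_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle (degrees) between two spectra treated as n-dimensional vectors.

    0 degrees means identical spectral shape; intensity scaling does not matter.
    """
    if len(a) != len(b):
        raise ValueError("spectra must have the same number of wavelengths")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("spectra must not be all zeros")
    # Clamp to guard against rounding slightly outside [-1, 1]
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_theta))


apex = [0.2, 0.8, 1.0, 0.6, 0.1]     # hypothetical absorbances at 5 wavelengths
upslope = [0.5 * x for x in apex]    # same shape, half the intensity
tail = [0.2, 0.8, 1.0, 0.9, 0.4]     # shape distorted at longer wavelengths
print(round(spectral_contrast_angle(apex, upslope), 3))  # 0.0
print(round(spectral_contrast_angle(apex, tail), 1))
```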

Workflow:

- Baseline Correction: Spectra are baseline-corrected by subtracting interpolated baseline spectra.

- Spectral Comparison: Multiple spectra across the chromatographic peak are compared to the apex spectrum.

- Algorithmic Calculation: The software calculates a purity angle (weighted average of all spectral contrast angles) and a purity threshold (based on noise).

- Assessment: The peak is considered spectrally pure if the purity angle is less than the purity threshold [35].
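The workflow steps above can be strung together in a short sketch. Several choices here are illustrative assumptions and do not reproduce any vendor's implementation: linear interpolation of the baseline spectrum in time, weighting each spectrum by its total absorbance when averaging angles, and passing the purity threshold in as a number rather than deriving it from noise. All function names and data are hypothetical.

```python
import math
from typing import List, Sequence


def contrast_angle_deg(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def baseline_correct(spectrum: Sequence[float], t: float,
                     t_start: float, base_start: Sequence[float],
                     t_end: float, base_end: Sequence[float]) -> List[float]:
    """Subtract a baseline spectrum interpolated linearly in time between
    baseline spectra recorded before (t_start) and after (t_end) the peak."""
    frac = (t - t_start) / (t_end - t_start)
    return [s - ((1.0 - frac) * b0 + frac * b1)
            for s, b0, b1 in zip(spectrum, base_start, base_end)]


def purity_angle(spectra: List[Sequence[float]], apex_index: int) -> float:
    """Weighted mean contrast angle of all spectra versus the apex spectrum.

    Weighting by total absorbance is an illustrative assumption.
    """
    apex = spectra[apex_index]
    weighted_sum, weight_total = 0.0, 0.0
    for i, spec in enumerate(spectra):
        if i == apex_index:
            continue
        weight = sum(abs(x) for x in spec)
        weighted_sum += weight * contrast_angle_deg(spec, apex)
        weight_total += weight
    return weighted_sum / weight_total


def is_spectrally_pure(spectra: List[Sequence[float]], apex_index: int,
                       purity_threshold_deg: float) -> bool:
    # Threshold is an input here; deriving it from noise is software-specific.
    return purity_angle(spectra, apex_index) < purity_threshold_deg


# Hypothetical baseline-corrected spectra: upslope, apex, tail
pure_peak = [[0.1, 0.4, 0.5], [0.2, 0.8, 1.0], [0.1, 0.4, 0.5]]
impure_peak = [[0.1, 0.4, 0.5], [0.2, 0.8, 1.0], [0.1, 0.4, 0.9]]
print(is_spectrally_pure(pure_peak, 1, purity_threshold_deg=1.0))    # True
print(is_spectrally_pure(impure_peak, 1, purity_threshold_deg=1.0))  # False
```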

Limitations and Complementary Techniques

PDA-based purity assessment has limitations. It cannot detect co-eluting impurities whose UV spectra are identical or highly similar to that of the main compound, or impurities with very weak UV response [28] [35]. In these situations, a peak can pass the purity test even though it contains a co-eluting impurity (a false negative).

To increase confidence, scientists employ complementary techniques:

- Mass Spectrometry (MS): MS-based peak purity assessment (PPA) is highly effective. It involves demonstrating that the same precursor ions, product ions, and/or adducts attributed to the parent compound are present across the entire peak. Any significant change in the mass spectrum across the peak indicates potential co-elution [35].

- Orthogonal Chromatography: Analyzing the sample with a second chromatographic method of substantially different selectivity (e.g., different column chemistry or separation mechanism) can confirm the results of the primary method.

- Two-Dimensional Liquid Chromatography (2D-LC): This advanced technique provides a powerful solution by subjecting the effluent from a first column to a second, orthogonal separation, greatly enhancing resolving power and the ability to detect co-elutions [28] [35].
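The MS-based check listed above can be sketched as two tests across the scans of a single peak. First, the intensity ratio between two ions attributed to the parent should stay roughly constant. Second, no unexplained ion should rise above a small fraction of the base peak. The data format (a dict of centroided m/z to intensity), the tolerances, the 5% threshold, and the function names are all hypothetical. Acceptance criteria for ratio spread are set by each laboratory, because real data never give exactly zero spread.

```python
from typing import Dict, List, Sequence

Scan = Dict[float, float]  # centroided spectrum: m/z -> intensity (hypothetical format)


def intensity_at(scan: Scan, mz: float, tol: float) -> float:
    """Summed intensity of centroids within +/- tol (Da) of the target m/z."""
    return sum(i for m, i in scan.items() if abs(m - mz) <= tol)


def ion_ratio_spread(scans: List[Scan], ion_a: float, ion_b: float,
                     tol: float = 0.01) -> float:
    """Range of the ion_a/ion_b intensity ratio across the peak, as % of its mean."""
    ratios = []
    for scan in scans:
        b = intensity_at(scan, ion_b, tol)
        if b > 0:
            ratios.append(intensity_at(scan, ion_a, tol) / b)
    if not ratios:
        raise ValueError("reference ion not found in any scan")
    mean = sum(ratios) / len(ratios)
    return 100.0 * (max(ratios) - min(ratios)) / mean


def unexpected_ions(scans: List[Scan], expected: Sequence[float],
                    rel_threshold: float = 0.05, tol: float = 0.01) -> List[float]:
    """m/z values not attributed to the parent that exceed rel_threshold of the base peak."""
    flagged = set()
    for scan in scans:
        base = max(scan.values())
        for mz, inten in scan.items():
            known = any(abs(mz - e) <= tol for e in expected)
            if not known and inten >= rel_threshold * base:
                flagged.add(mz)
    return sorted(flagged)


# Hypothetical scans across one peak: upslope, apex, tail
scans = [
    {301.14: 50.0, 283.13: 20.0},
    {301.14: 500.0, 283.13: 200.0},
    {301.14: 100.0, 283.13: 40.0, 315.12: 30.0},
]
print(ion_ratio_spread(scans, 301.14, 283.13))            # 0.0
print(unexpected_ions(scans, expected=[301.14, 283.13]))  # [315.12]
```

Here the parent ions keep a constant ratio, but an extra ion appears on the tail, which is the kind of change that would prompt a closer look for a co-eluting species.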

Figure 2: Multi-Technique Strategy for Peak Purity Assessment

The Scientist's Toolkit: Essential Reagents and Materials

Successful chromatographic analysis relies on a suite of high-quality reagents and materials. The following table details key components of the chromatographer's toolkit.

Table 3: Essential Research Reagent Solutions and Materials for Chromatographic Analysis

| Item | Function/Description | Application Notes |
|---|---|---|
| HPLC Grade Solvents (Acetonitrile, Methanol, Water) | High-purity mobile phase components that minimize baseline noise and ghost peaks. | Essential for high-sensitivity detection. |
| Buffer Salts (e.g., Ammonium acetate, Potassium phosphate) | Modify mobile phase pH and ionic strength to control ionization and retention of analytes. | Must be HPLC grade; volatile buffers (e.g., ammonium acetate) are preferred for LC-MS. |
| Stationary Phases (C18, C8, Phenyl, HILIC, Chiral) | The heart of the separation; interact with analytes to cause differential migration. | Selection is critical and depends on analyte properties (polarity, pKa, size). |
| Derivatization Reagents | Chemically modify analytes to enhance volatility (for GC) or detectability (e.g., fluorescence). | Used for compounds lacking a chromophore or for improved GC behavior. |
| Internal Standards (e.g., deuterated analogs) | Added in a known quantity to correct for variability in sample preparation and injection. | Improve quantitative accuracy and precision. |
| Certified Reference Standards | Highly pure, well-characterized substances used for instrument calibration and method validation. | Critical for ensuring the accuracy and regulatory defensibility of analytical results [36]. |
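The correction an internal standard provides comes from calibrating on the analyte-to-internal-standard area ratio instead of the raw analyte area. If the injection volume drifts, both areas change together, but their ratio stays the same. The sketch below fits an ordinary least-squares line of area ratio against concentration and back-calculates an unknown. It assumes a fixed amount of internal standard in every sample and a linear, unweighted calibration. The calibration data are hypothetical.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical calibration: analyte concentration (ng/mL), fixed IS amount per sample
conc = [1.0, 2.0, 5.0, 10.0, 20.0]
analyte_area = [500.0, 1000.0, 2500.0, 5000.0, 10000.0]
is_area = [10000.0] * 5
ratios = [a / i for a, i in zip(analyte_area, is_area)]
slope, intercept = fit_line(conc, ratios)


def quantify(analyte: float, internal_std: float) -> float:
    """Back-calculate concentration from the analyte/IS area ratio."""
    return (analyte / internal_std - intercept) / slope


# Unknown injected with ~10% less volume: both areas drop, the ratio does not
print(round(quantify(3600.0, 9000.0), 3))  # 8.0
```

Without the internal standard, the same 3600 area would read as 7.2 ng/mL against this calibration, which shows the bias the ratio removes.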

Chromatographic techniques, including liquid chromatography (LC, of which HPLC is the most widely used form) and GC, are indispensable enabling technologies in the pharmaceutical sciences. They provide the specific, sensitive, and robust analytical data required to ensure the identity, purity, potency, and stability of drug products from discovery through manufacturing and quality control. As the industry advances, so do chromatographic methods, with trends pointing towards increased automation, more sophisticated hyphenated systems such as LC-MS and LC-NMR, and new stationary phases and software tools for data analysis. The rigorous application of these techniques, guided by regulatory standards and scientific best practices, remains fundamental to the mission of delivering safe and effective medicines to patients.

Mass spectrometry (MS) stands at the forefront of analytical chemistry, offering exceptional sensitivity and specificity for the identification and quantification of chemical compounds. As a cornerstone enabling technology, MS supports research across domains ranging from pharmaceutical development to environmental monitoring. Its capacity to elucidate molecular structures and detect trace-level analytes in complex matrices makes it indispensable for modern scientific inquiry. This section examines the fundamental principles, advanced methodologies, and practical applications that make mass spectrometry a critical enabler of scientific progress, particularly in fields requiring rigorous structural characterization and ultra-sensitive quantification.

The evolution of mass spectrometry has been marked by continuous innovation in ionization techniques, mass analyzer design, and data processing. These advances have steadily improved detection limits, resolution, and analytical throughput. In contemporary research environments, MS platforms are central tools that generate critical data for decision-making in drug development, diagnostic medicine, forensic analysis, and environmental protection. This versatility lets researchers address fundamental scientific questions and solve practical analytical problems that were previously intractable with conventional approaches.

Fundamentals of Mass Spectrometry for Structural Elucidation

Core Components and Principles

Structural elucidation by mass spectrometry relies on three steps: generating gas-phase ions from sample molecules, separating these ions by their mass-to-charge ratio (m/z), and detecting them to produce a mass spectrum. Interpreting this spectrum yields information about molecular weight, elemental composition, and structural features, the last mainly through analysis of fragmentation patterns.
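Because the analyzer measures m/z rather than mass, the observed value depends on how the molecule was ionized. For protonated and deprotonated ions, m/z = (M ± z·mp)/z, where mp ≈ 1.007276 Da is the proton mass. The short sketch below computes these values. The neutral mass is hypothetical, and other adducts (e.g., sodium) would need their own ion masses.

```python
PROTON_MASS_DA = 1.007276  # mass of a proton, Da


def mz_protonated(neutral_mass: float, z: int = 1) -> float:
    """m/z of [M+zH]z+ for a neutral monoisotopic mass M."""
    if z < 1:
        raise ValueError("charge must be a positive integer")
    return (neutral_mass + z * PROTON_MASS_DA) / z


def mz_deprotonated(neutral_mass: float, z: int = 1) -> float:
    """m/z (absolute value) of [M-zH]z-."""
    if z < 1:
        raise ValueError("charge must be a positive integer")
    return (neutral_mass - z * PROTON_MASS_DA) / z


M = 1000.0  # hypothetical neutral monoisotopic mass, Da
for z in (1, 2, 3):
    print(z, round(mz_protonated(M, z), 4))
print(round(mz_deprotonated(M), 4))
```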

The specificity of structural information derives from controlled fragmentation processes that break molecular ions into characteristic fragment ions. The pattern of these fragments serves as a molecular fingerprint, revealing details about functional groups and molecular connectivity and, in favorable cases, stereochemistry, although stereoisomers often give very similar spectra. Successful structure elucidation requires understanding the gas-phase ion chemistry that governs these fragmentation pathways and the relationship between molecular structure and fragmentation behavior.
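One routine step in reading a fragmentation pattern is to explain the mass differences between the precursor and its fragments as neutral losses (e.g., water from a hydroxyl group, CO2 from a carboxylic acid). The sketch below matches those differences against a small table of monoisotopic neutral-loss masses. It assumes singly charged precursor and fragment ions and a fixed tolerance in Da. The precursor and fragment values are hypothetical, and the four-entry loss table is only a sample.

```python
from typing import Dict, List

# Monoisotopic masses (Da) of some common neutral losses
NEUTRAL_LOSSES = {
    "H2O": 18.010565,
    "NH3": 17.026549,
    "CO": 27.994915,
    "CO2": 43.989829,
}


def match_neutral_losses(precursor_mz: float, fragment_mzs: List[float],
                         tol_da: float = 0.005) -> Dict[float, List[str]]:
    """Map each fragment m/z to the neutral losses that explain it.

    Assumes singly charged ions, so the m/z difference equals the mass lost.
    """
    matches: Dict[float, List[str]] = {}
    for frag in fragment_mzs:
        loss = precursor_mz - frag
        names = [name for name, mass in NEUTRAL_LOSSES.items()
                 if abs(loss - mass) <= tol_da]
        if names:
            matches[frag] = names
    return matches


# Hypothetical product-ion spectrum of a precursor at m/z 301.1410
print(match_neutral_losses(301.1410, [283.1304, 257.1512, 200.0000]))
# {283.1304: ['H2O'], 257.1512: ['CO2']}
```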

Essential Ionization Techniques

The selection of an appropriate ionization method is critical for successful structural analysis, as it determines the types of molecules that can be analyzed and the quality of structural information obtained.

Electrospray Ionization (ESI): This soft ionization technique produces intact gas-phase ions, typically protonated or deprotonated molecules ([M+H]+, [M-H]-) and adducts, by generating a fine spray of charged droplets from a liquid sample under the influence of a high electric field. Large molecules such as proteins usually acquire multiple charges, which brings them into the m/z range of common analyzers. Recent enhancements, particularly nano-electrospray ionization (nano-ESI), use extremely fine capillary emitters to produce highly charged droplets from very small sample volumes, significantly enhancing sensitivity while minimizing background noise [37]. ESI is exceptionally well suited to polar molecules, biomolecules, and macromolecules that are susceptible to thermal degradation.
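Because ESI spreads a large molecule over several charge states, its mass is usually recovered from the envelope of [M+zH]z+ peaks. For two adjacent peaks, m1 (charge z) and m2 (charge z+1, so m2 < m1), the algebra gives z = (m2 − mp)/(m1 − m2) and M = z·(m1 − mp). The sketch below applies these relations to a single pair of peaks generated from a hypothetical 10,000 Da protein. Real deconvolution software fits the whole envelope rather than one pair.

```python
PROTON_MASS_DA = 1.007276  # mass of a proton, Da


def charge_from_adjacent_peaks(mz_charge_z: float, mz_charge_z_plus_1: float) -> int:
    """Charge z of the higher-m/z peak in a pair of adjacent [M+zH]z+ peaks.

    From m1 = (M + z*p)/z and m2 = (M + (z+1)*p)/(z+1): z = (m2 - p) / (m1 - m2).
    """
    m1, m2 = mz_charge_z, mz_charge_z_plus_1
    if not m1 > m2 > PROTON_MASS_DA:
        raise ValueError("expected m1 > m2 for adjacent charge states")
    return round((m2 - PROTON_MASS_DA) / (m1 - m2))


def neutral_mass(mz: float, z: int) -> float:
    """M = z * (m/z - proton mass) for an [M+zH]z+ ion."""
    return z * (mz - PROTON_MASS_DA)


M_true = 10000.0  # hypothetical protein mass, Da
m1 = (M_true + 10 * PROTON_MASS_DA) / 10  # 10+ ion
m2 = (M_true + 11 * PROTON_MASS_DA) / 11  # 11+ ion
z = charge_from_adjacent_peaks(m1, m2)
print(z, round(neutral_mass(m1, z), 3))  # 10 10000.0
```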

Matrix-Assisted Laser Desorption/Ionization (MALDI): This technique incorporates the analyte within a light-absorbing matrix material that facilitates desorption and ionization when irradiated with a laser pulse. Continuous innovations in MALDI have focused on improving spatial resolution and quantification capabilities through the development of novel matrix materials with improved ultraviolet absorption properties, leading to better ionization efficiency and reduced matrix-related noise [37]. MALDI imaging extensions enable researchers to visualize the spatial distribution of metabolites, proteins, and lipids within tissue sections.

Ambient Ionization Techniques: Methods including desorption electrospray ionization (DESI) and direct analysis in real time (DART) allow samples to be analyzed directly with little or no preparation. DESI sprays charged solvent droplets onto a sample surface to desorb and ionize analytes for immediate analysis, while DART uses a stream of excited (metastable) atoms or molecules, typically helium or nitrogen, to ionize samples in the open air at atmospheric pressure [37]. These techniques have greatly expanded MS applications to include rapid, on-site analysis in field investigations and quality control environments.

Mass Analyzer Technologies

The mass analyzer is the core component that separates ions according to their m/z values. Different analyzer technologies offer complementary capabilities for structural elucidation.

Table 1: Performance Characteristics of Mass Analyzer Technologies

| Analyzer Type | Mass Resolution | Mass Accuracy | Key Strengths | Structural Elucidation Applications |
|---|---|---|---|---|
| Quadrupole | Unit (1,000-2,000) | Moderate (100-500 ppm) | Robustness, cost-effectiveness, tandem MS capability | Quantitative analysis, targeted proteomics, environmental monitoring |
| Time-of-Flight (TOF) | High (20,000-60,000) | High (1-5 ppm) | Rapid analysis, high mass accuracy, theoretically unlimited m/z range | Peptide mass fingerprinting, polymer analysis, complex mixture analysis |
| Ion Trap | Unit (1,000-4,000) | Moderate (100-500 ppm) | Multi-stage mass spectrometry (MSⁿ), compact design | Peptide sequencing, structural characterization of organic compounds |
| Orbitrap | Very High (>100,000) | Very High (1-3 ppm) | Exceptional resolution, high mass accuracy, stability | Detailed molecular characterization, proteomics, metabolomics |
| FT-ICR | Ultra-High (>1,000,000) | Ultra-High (<1 ppm) | Unparalleled resolution and mass accuracy | Complex mixture analysis, petroleum, natural products |
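The two headline columns of the table correspond to simple calculations. Mass accuracy is the error (measured − theoretical)/theoretical × 10⁶ in ppm. The resolving power needed to tell two nearby ions apart is roughly R = m/Δm. This is a minimal sketch: it uses the lower of the two m/z values as m and ignores that vendors define resolution differently (e.g., full width at half maximum versus a 10% valley). The m/z values are hypothetical.

```python
def mass_error_ppm(measured_mz: float, theoretical_mz: float) -> float:
    """Mass accuracy expressed as parts-per-million error."""
    return (measured_mz - theoretical_mz) / theoretical_mz * 1e6


def required_resolving_power(mz_1: float, mz_2: float) -> float:
    """Approximate R = m / delta-m needed to distinguish two ions of similar m/z."""
    delta = abs(mz_2 - mz_1)
    if delta == 0:
        raise ValueError("ions have identical m/z")
    return min(mz_1, mz_2) / delta


print(round(mass_error_ppm(195.0880, 195.0877), 2))        # 1.54
print(round(required_resolving_power(500.000, 500.010)))   # 50000
```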

Recent advancements in mass analyzer technology have significantly enhanced capabilities for structural elucidation. Orbitrap technology uses an electrostatic field to trap ions that orbit around, and oscillate along, a central spindle electrode; the frequency of the axial oscillation is inversely proportional to the square root of m/z, which enables highly accurate mass measurements [37]. Fourier Transform Ion Cyclotron Resonance (FT-ICR) MS achieves exceptional mass resolution and accuracy by trapping ions in a strong magnetic field and measuring their cyclotron frequency, which is inversely proportional to m/z [37]. Multi-reflecting time-of-flight (MR-TOF) technology extends the ion flight path through multiple reflection stages within a compact footprint, improving mass resolution and accuracy without increasing instrument size [37].
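The FT-ICR principle can be illustrated with the ideal cyclotron equation f = zeB/(2πm). Frequency falls as m/z rises, and inverting the measured frequency gives m/z. The sketch below evaluates this relation for a hypothetical m/z 500 ion in a 7 T magnet. It computes only the unperturbed cyclotron frequency. Real instruments observe a frequency shifted by the trapping field and rely on calibration equations, so treat this as an idealization.

```python
import math

ELEMENTARY_CHARGE_C = 1.602176634e-19  # exact SI value
DALTON_KG = 1.66053906660e-27          # CODATA 2018 value


def cyclotron_frequency_hz(mz: float, field_tesla: float) -> float:
    """Unperturbed cyclotron frequency f = zeB / (2*pi*m) for an ion of given m/z."""
    mass_per_charge_kg = mz * DALTON_KG  # m/z in Da per elementary charge
    return ELEMENTARY_CHARGE_C * field_tesla / (2 * math.pi * mass_per_charge_kg)


def mz_from_frequency(freq_hz: float, field_tesla: float) -> float:
    """Invert f = zeB / (2*pi*m) to recover m/z."""
    return ELEMENTARY_CHARGE_C * field_tesla / (2 * math.pi * freq_hz * DALTON_KG)


f = cyclotron_frequency_hz(500.0, 7.0)  # m/z 500 in a 7 T magnet
print(round(f / 1000, 1), "kHz")        # 215.0 kHz
print(round(mz_from_frequency(f, 7.0), 6))
```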

Advanced Approaches for Trace-Level Quantification

Sensitivity Enhancement Strategies