ANOVA for Method Comparison: A Statistical Framework for Biomedical Research and Drug Development


Abstract

This article provides a comprehensive guide to using Analysis of Variance (ANOVA) for comparing methods in biomedical and pharmaceutical research. Tailored for researchers, scientists, and drug development professionals, it covers foundational concepts, practical application steps, troubleshooting for common pitfalls, and advanced validation techniques. Readers will learn to design robust comparison studies, select the correct ANOVA model, interpret results for regulatory compliance, and apply multivariate extensions like MANOVA for complex datasets, thereby enhancing the reliability and impact of their scientific research.

Understanding ANOVA: The Statistical Foundation for Comparing Methods

What is ANOVA? Defining the Core Concept and Its Importance in Research

Analysis of Variance, universally known as ANOVA, is a foundational statistical method used to determine if there are statistically significant differences between the means of three or more independent groups [1] [2]. Developed by the renowned statistician Ronald Fisher in the 1920s, it revolutionized the comparison of multiple groups at once, overcoming the limitations and error rates associated with performing multiple t-tests [1] [3].

At its core, ANOVA analyzes the variance within a dataset to make inferences about group means [1] [4]. It works by comparing two sources of variance:

- Variance Between Groups: Measures the variation between the means of the different groups, indicating how far the group means lie from one another.

- Variance Within Groups: Measures the variation within each individual group, serving as a baseline for natural, random error [5] [6].

The comparison is formalized using an F-test. The F-statistic is the ratio of the variance between groups to the variance within groups (F = MSBetween / MSWithin) [3] [5]. When all group means are equal, the two variance estimates are similar and the F-ratio is expected to be close to 1. An F-ratio well above 1, large enough to exceed the critical value of the F-distribution for the given degrees of freedom, provides evidence that the group means are not all equal [1] [7].

Core Concepts of ANOVA

The Logic of Variance Analysis

The power of ANOVA lies in its ability to use variance to test for differences in means. Instead of looking at means directly, it assesses whether the variability of group means around the overall grand mean is larger than the variability of individual observations around their respective group means [4]. This makes it an omnibus test, which can indicate that a difference exists but cannot specify exactly which groups differ [2] [6].

Key Terminology and the ANOVA Table

To systematically organize an ANOVA, results are presented in a standard table [3] [8]:

Table 1: Standard ANOVA Table Structure

| Source of Variation | Sum of Squares (SS) | Degrees of Freedom (df) | Mean Square (MS) | F-Value |

|---|---|---|---|---|

| Between Groups | SSB = Σnⱼ(Ȳⱼ - Ȳ)² | df1 = k - 1 | MSB = SSB / (k-1) | F = MSB / MSE |

| Within Groups (Error) | SSE = ΣΣ(Y - Ȳⱼ)² | df2 = N - k | MSE = SSE / (N-k) | |

| Total | SST = SSB + SSE | df3 = N - 1 | | |

Where:

- Ȳⱼ is the mean of group j

- Ȳ is the overall grand mean

- nⱼ is the sample size of group j

- k is the number of groups

- N is the total number of observations across all groups [3] [8]
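The formulas in Table 1 can be sketched in a few lines of plain Python. This is a minimal illustration, not a replacement for statistical software: the function name `one_way_anova_table` and the measurements are hypothetical, chosen only so the arithmetic is easy to follow by hand.

```python
from statistics import mean

def one_way_anova_table(groups):
    """Compute the Table 1 quantities for a list of groups (each a list of numbers)."""
    k = len(groups)                                  # number of groups
    n_total = sum(len(g) for g in groups)            # N, total observations
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]

    # SSB: squared deviation of each group mean from the grand mean, weighted by n_j
    ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # SSE: squared deviation of each observation from its own group mean
    sse = sum((y - m) ** 2 for g, m in zip(groups, group_means) for y in g)

    df_between, df_within = k - 1, n_total - k
    msb, mse = ssb / df_between, sse / df_within
    return {
        "SSB": ssb, "SSE": sse, "SST": ssb + sse,
        "df_between": df_between, "df_within": df_within,
        "MSB": msb, "MSE": mse, "F": msb / mse,
    }

# Hypothetical measurements from three methods (illustrative values only)
groups = [[4, 5, 6], [6, 7, 8], [8, 9, 10]]
table = one_way_anova_table(groups)
for name, value in table.items():
    print(f"{name}: {value}")
```

For these toy values the group means are 5, 7, and 9 and the grand mean is 7, so SSB = 24, SSE = 6, and F = 12 / 1 = 12.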

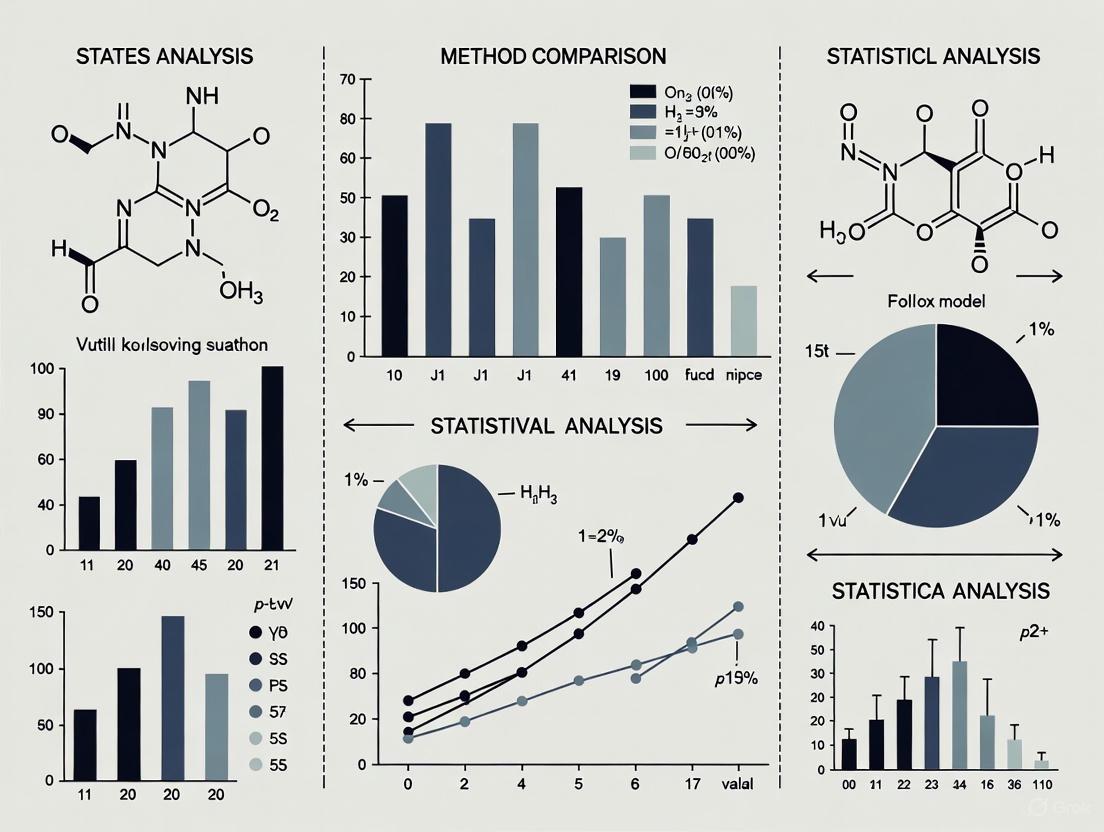

The following diagram illustrates the logical workflow and decision process for conducting a one-way ANOVA.

Key Types of ANOVA and Their Applications

ANOVA is not a single test but a family of methods. Choosing the right type depends on the research design and the number of independent variables [2] [6].

Table 2: Types of ANOVA Tests

| Type of ANOVA | Independent Variables | Purpose & Key Feature | Research Example |

|---|---|---|---|

| One-Way ANOVA [6] [7] | One | Tests for differences between the means of three or more groups based on one factor. | Comparing the average yield of a crop using three different fertilizers [3]. |

| Two-Way ANOVA [6] [9] | Two | Assesses the effect of two independent variables and their interaction effect on the dependent variable. | Analyzing plant growth based on both fertilizer type and watering frequency to see if the effect of fertilizer depends on watering [3] [7]. |

| Factorial ANOVA [2] | More than two | Evaluates the effects of multiple independent variables and their complex interactions. | Studying the combined impact of age, income, and education level on consumer spending [2]. |

| Repeated Measures ANOVA [9] | One or more (within-subjects) | Used when the same subjects are measured multiple times under different conditions. | Tracking patient stress levels before, during, and after a clinical intervention [9]. |

Methodological Comparison: ANOVA vs. Multiple T-Tests

A fundamental reason for ANOVA's importance is its control over Type I errors (false positives). Conducting multiple pairwise t-tests on three or more groups inflates the overall chance of error [4].

Table 3: Alpha (α) Inflation with Multiple T-Tests (α=0.05 per test)

| Number of Groups | Number of Pairwise Comparisons | Overall Significance Level |

|---|---|---|

| 2 | 1 | 0.05 |

| 3 | 3 | ~0.14 |

| 4 | 6 | ~0.26 |

| 5 | 10 | ~0.40 |

| 6 | 15 | ~0.54 |

As shown in Table 3, while each individual t-test might have a 5% error rate, the cumulative probability of making at least one Type I error across all comparisons rises dramatically to 26% for four groups and 54% for six groups [4]. ANOVA avoids this by testing all groups simultaneously with a single, omnibus test.
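The figures in Table 3 can be reproduced with a short calculation. The sketch below assumes the pairwise tests are independent, which is a simplification (pairwise t-tests on shared groups are correlated), so the results are approximations of the true familywise rate. The function name `familywise_error` is illustrative.

```python
from math import comb

def familywise_error(n_groups, alpha=0.05):
    """Return (number of pairwise comparisons, approximate familywise error rate)."""
    n_tests = comb(n_groups, 2)               # all unordered pairs of groups
    return n_tests, 1 - (1 - alpha) ** n_tests

for n_groups in range(2, 7):
    n_tests, fwer = familywise_error(n_groups)
    print(f"{n_groups} groups: {n_tests} comparisons, familywise error ~ {fwer:.2f}")
```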

Experimental Protocols and Data Presentation

Detailed Methodology for a One-Way ANOVA

The following steps outline a standard protocol for conducting a one-way ANOVA, adaptable to various research contexts [5] [8].

Formulate Hypotheses

Verify Assumptions

- Independence: Observations must be independent of each other [6] [9].

- Normality: The dependent variable should be approximately normally distributed within each group. This can be checked with tests like Shapiro-Wilk or Q-Q plots [5] [6].

- Homogeneity of Variances: The variances in each group should be approximately equal. This can be tested using Levene's test or Bartlett's test [5] [6].
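The normality and equal-variance checks above might look like the following in Python with SciPy. The data are simulated and the group labels are hypothetical; in practice the arrays would hold the measured values for each group. Independence cannot be tested this way and has to be ensured by the study design.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
# Simulated scores for three hypothetical groups (not real study data)
groups = {
    "A": rng.normal(75, 8, size=20),
    "B": rng.normal(80, 8, size=20),
    "C": rng.normal(78, 8, size=20),
}

# Normality within each group: a small p-value suggests a departure from normality
normality_p = {name: stats.shapiro(values).pvalue for name, values in groups.items()}

# Homogeneity of variances: a small p-value suggests unequal variances
levene_p = stats.levene(*groups.values()).pvalue      # SciPy's default centers on the median
bartlett_p = stats.bartlett(*groups.values()).pvalue  # more sensitive to non-normality

for name, p in normality_p.items():
    print(f"Shapiro-Wilk, group {name}: p = {p:.3f}")
print(f"Levene: p = {levene_p:.3f}")
print(f"Bartlett: p = {bartlett_p:.3f}")
```

With small groups these tests have limited power, so pairing them with Q-Q plots and box plots is a reasonable precaution.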

Calculate the ANOVA Statistics

- Calculate Sums of Squares (SS): Compute SSBetween, SSWithin, and SSTotal using the formulas in Table 1 [8].

- Calculate Degrees of Freedom (df): dfBetween = k - 1, dfWithin = N - k, dfTotal = N - 1 [3].

- Calculate Mean Squares (MS): MSBetween = SSBetween / dfBetween, MSWithin = SSWithin / dfWithin [3] [5].

- Compute the F-statistic: F = MSBetween / MSWithin [3] [5].

Interpret the Results and Draw Conclusions

Conduct Post-Hoc Analysis (if needed)

Example: Pharmaceutical Drug Efficacy Study

Consider a hypothetical clinical trial where a pharmaceutical company tests the effectiveness of three different drug formulations (A, B, and C) on a standardized health improvement score [3].

Table 4: Example Dataset and Summary Statistics

| Group | Sample Size (n) | Mean Health Improvement Score | Standard Deviation |

|---|---|---|---|

| Drug A | 20 | 75 | 8 |

| Drug B | 20 | 80 | 7 |

| Drug C | 20 | 78 | 9 |

After performing the calculations (SSBetween, SSWithin, MSB, MSW, etc.), the results would be summarized in an ANOVA table. Because the groups are equal in size, the grand mean is (75 + 80 + 78) / 3 ≈ 77.67, so SSBetween = 20 × [(75 - 77.67)² + (80 - 77.67)² + (78 - 77.67)²] ≈ 253.33, and SSWithin = 19 × (8² + 7² + 9²) = 3686.

Table 5: ANOVA Table for Drug Efficacy Study

| Source of Variation | Sum of Squares | Degrees of Freedom | Mean Squares | F-Value | p-Value |

|---|---|---|---|---|---|

| Between Groups | 253.33 | 2 | 126.67 | 1.96 | 0.15 |

| Within Groups | 3686.00 | 57 | 64.67 | | |

| Total | 3939.33 | 59 | | | |

Interpretation: With a p-value of about 0.15 (greater than α=0.05), we fail to reject the null hypothesis. These data do not provide sufficient evidence of a difference in the average health improvement scores among the three drug formulations. Had the p-value fallen below α, a post-hoc test would subsequently be conducted to determine which specific drugs (e.g., A vs. B, A vs. C, B vs. C) show different effects.

While ANOVA itself is a computational procedure, its proper application in experimental research relies on several key components.

Table 6: Key Research Reagent Solutions for ANOVA-Based Studies

| Item | Function in Research |

|---|---|

| Statistical Software (R, SPSS, Python) | Essential for performing complex ANOVA calculations, checking assumptions, running post-hoc tests, and creating diagnostic plots [2] [9]. |

| Assumption Testing Tools | Statistical tests like Levene's Test (for homogeneity of variance) and Shapiro-Wilk Test (for normality) are critical tools for validating the ANOVA model before interpretation [5] [6]. |

| Post-Hoc Test Procedures | Methods like Tukey's HSD and Bonferroni correction are applied after a significant ANOVA to control for Type I errors while making multiple comparisons [3] [6]. |

| Data Visualization Tools | Box plots and residual plots are used to visually assess distributions, check for outliers, and verify model assumptions, complementing numerical tests [5] [9]. |

Importance and Applications in Research

ANOVA's versatility makes it indispensable across numerous fields, from medicine and agriculture to marketing and industrial research [3] [10].

- Informed Decision-Making: Businesses use ANOVA to inform decisions on product development, marketing strategies, and resource allocation by identifying which variables have the most significant impact on outcomes [2] [10]. For instance, it can determine if geographical region significantly affects sales performance [2].

- Experimental Research: In scientific fields like pharmacology and agriculture, ANOVA is fundamental for determining the effectiveness of different treatments, interventions, or conditions [3]. It allows researchers to go beyond simple comparisons and understand interaction effects between multiple factors [6].

- Quality Control and Optimization: Industries use ANOVA to assess whether variations in manufacturing processes lead to differences in product quality, or to optimize production parameters for cost-effectiveness and efficiency [3] [10].

ANOVA remains a cornerstone of modern statistical analysis. Its core concept—using variance to make inferences about means—provides a robust and efficient framework for comparing multiple groups. By controlling for Type I error inflation and extending to complex, multi-factor designs, ANOVA empowers researchers, scientists, and professionals to draw reliable, data-driven conclusions from their experiments. Its continued relevance is secured by its adaptability, forming the basis for advanced models and remaining an essential tool in the quest for scientific discovery and informed decision-making.

In the realm of statistical analysis for scientific research, selecting the appropriate tool for comparing group means is a fundamental decision that directly impacts the validity and interpretability of experimental results. While the t-test is a well-known and robust method for comparing two groups, its inappropriate application to studies involving three or more groups introduces substantial statistical risks. This guide objectively examines the technical limitations of using multiple t-tests in multi-group comparisons and establishes Analysis of Variance (ANOVA) as the essential, statistically sound alternative. Framed within the context of method comparison and pharmaceutical research, this article details the theoretical foundation, practical application, and experimental protocols for ANOVA, providing researchers and drug development professionals with the data and methodologies necessary to ensure rigorous, reliable data analysis.

The Fundamental Problem: Multiple T-Tests and Inflated Error

The Logic of Hypothesis Testing and Type I Error

In statistical hypothesis testing, the significance level (α) represents the maximum acceptable probability of committing a Type I error—falsely rejecting a true null hypothesis (i.e., finding a difference where none exists). A common α level is 0.05 (5%), meaning a 5% risk of a false positive for a single test [11].

The Compounding Error of Multiple Comparisons

The critical pitfall of using multiple t-tests for multi-group comparisons is the compounding of Type I error. When multiple independent t-tests are performed, the error rates accumulate across the tests, dramatically increasing the overall chance of a false discovery [12].

For example, comparing 3 groups (A, B, and C) requires three t-tests (A vs. B, A vs. C, B vs. C). The overall error rate (αfamilywise) is calculated as: αfamilywise = 1 - (1 - αper-test)^m, where m is the number of tests. With α=0.05 and m=3, αfamilywise = 1 - (0.95)^3 ≈ 0.143. This means a ~14% chance of at least one Type I error, not the intended 5% [13] [12]. The formula treats the tests as independent; pairwise t-tests share data, so the figure is an approximation, but the inflation itself is real. With more groups, this risk becomes unacceptably high, rendering findings unreliable.

Table 1: Inflation of Familywise Type I Error with Multiple T-Tests

| Number of Groups | Number of T-Tests Required | Familywise Type I Error Rate (α=0.05) |

|---|---|---|

| 2 | 1 | 5.0% |

| 3 | 3 | 14.3% |

| 4 | 6 | 26.5% |

| 5 | 10 | 40.1% |

ANOVA as the Statistically Sound Alternative

Core Principle and F-Statistic

Analysis of Variance (ANOVA) overcomes this problem by providing an omnibus test—a single, simultaneous comparison of all group means. It partitions the total variability observed in the data into two components [14] [13]:

- Variance Between Groups: Variability due to the different experimental treatments or factors.

- Variance Within Groups: Variability due to random chance or individual differences (experimental error).

The test statistic for ANOVA is the F-statistic, which is the ratio of these two variances: F = (Variance Between Groups) / (Variance Within Groups) [14] [13]. A significantly large F-statistic (typically associated with a p-value < 0.05) indicates that the differences between the group means are substantially larger than the random variation expected within the groups. This leads to rejecting the null hypothesis (H₀: all population means are equal) in favor of the alternative (H₁: at least one population mean is different) [14] [15].

Key Advantages for Multi-Group Comparison

- Controls Familywise Error Rate: A single ANOVA test maintains the prescribed α level (e.g., 0.05), regardless of the number of groups being compared, preventing the error inflation seen with multiple t-tests [12].

- Global Test of Significance: It efficiently answers the initial question: "Is there any significant difference among these groups?" This prevents unnecessary digging into individual group differences if no overall effect exists [16].

- Foundation for Detailed Analysis: A significant ANOVA result justifies further investigation using post-hoc tests (e.g., Tukey's HSD, Bonferroni) to identify which specific groups differ, while controlling for the increased error from these multiple pairwise comparisons [15].
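As a small illustration of how a post-hoc correction keeps pairwise comparisons in check, the Bonferroni approach divides α by the number of comparisons. The sketch below uses made-up p-values and a hypothetical helper name, `bonferroni_decisions`; it shows only the decision rule, not how the pairwise p-values are obtained.

```python
def bonferroni_decisions(p_values, alpha=0.05):
    """Flag each pairwise comparison as significant at the Bonferroni-adjusted level."""
    threshold = alpha / len(p_values)        # per-comparison significance level
    return {pair: p < threshold for pair, p in p_values.items()}, threshold

# Hypothetical unadjusted p-values from three pairwise comparisons
p_values = {"A vs B": 0.030, "A vs C": 0.004, "B vs C": 0.200}
decisions, threshold = bonferroni_decisions(p_values)

print(f"adjusted threshold: {threshold:.4f}")
for pair, significant in decisions.items():
    print(pair, "significant" if significant else "not significant")
```

With three comparisons the threshold becomes 0.05 / 3 ≈ 0.0167, so only the hypothetical A vs C comparison remains significant, even though A vs B would have passed an unadjusted 0.05 cutoff.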

Comparative Analysis: T-Test vs. ANOVA

The following table provides a structured, side-by-side comparison of the two statistical methods, highlighting their distinct purposes, structures, and outputs.

Table 2: Objective Comparison between T-Test and ANOVA

| Feature | T-Test | ANOVA (One-Way) |

|---|---|---|

| Purpose & Scope | Compares means between two groups only [15] [16] | Compares means across three or more groups simultaneously [15] [16] |

| Underlying Hypothesis | H₀: μ₁ = μ₂ (The two group means are equal) [15] | H₀: μ₁ = μ₂ = μ₃ = ... (All group means are equal) [15] [12] |

| Test Statistic | t-statistic [15] | F-statistic (Ratio of between-group to within-group variance) [14] [15] |

| Experimental Design | Simple comparison: Control vs. Treatment, or two independent conditions. | Multi-level factor: Multiple dosages, formulations, or treatment regimens. |

| Post-Hoc Analysis | Not required. With only two groups, a significant result already identifies the pair that differs. | Required after a significant F-test to identify which specific group pairs differ (e.g., Tukey's HSD) [15] |

| Key Assumptions | Normality, Independence, Homogeneity of Variance [15] [16] | Normality, Independence, Homogeneity of Variance (can be checked with Levene's Test) [15] |

Experimental Protocols and Applications in Pharmaceutical Research

ANOVA is not a single method but a family of techniques tailored to different experimental designs. Its application is ubiquitous in pharmaceutical research for ensuring drug efficacy, safety, and quality [14] [13].

Protocol 1: One-Way ANOVA for Drug Efficacy Testing

This is used to compare the effect of a single factor with multiple levels (e.g., different drug dosages) on a continuous outcome (e.g., reduction in blood pressure) [14].

Experimental Workflow:

- Design: Randomly assign subjects to three or more independent groups (e.g., Placebo, Drug Dose 50mg, Drug Dose 100mg).

- Intervention: Administer the respective treatment to each group over the study period.

- Measurement: Record the primary efficacy outcome variable (e.g., mean blood pressure reduction) for each subject.

- Analysis: Perform a One-Way ANOVA to test for any significant difference in mean reduction across the groups.

- Post-hoc: If ANOVA is significant (p < 0.05), conduct a post-hoc test (e.g., Tukey's HSD) to compare all possible pairs of groups (Placebo vs. 50mg, Placebo vs. 100mg, 50mg vs. 100mg).
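The analysis and post-hoc steps of this workflow can be sketched with SciPy as follows. The blood-pressure reductions are simulated, and the group means and spread are assumptions made purely for illustration. `scipy.stats.tukey_hsd` is assumed to be available, which requires a reasonably recent SciPy release.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
# Simulated blood-pressure reductions (mmHg) for three hypothetical arms
placebo = rng.normal(2.0, 4.0, size=30)
dose_50 = rng.normal(6.0, 4.0, size=30)
dose_100 = rng.normal(10.0, 4.0, size=30)

# Omnibus test: is there any difference among the three group means?
f_stat, p_value = stats.f_oneway(placebo, dose_50, dose_100)
print(f"F = {f_stat:.2f}, p = {p_value:.4g}")

# Post-hoc comparisons only if the omnibus test is significant
if p_value < 0.05:
    posthoc = stats.tukey_hsd(placebo, dose_50, dose_100)
    print(posthoc)  # pairwise mean differences, p-values, confidence intervals
```

Gating the pairwise comparisons on the omnibus result mirrors the protocol above; Tukey's HSD controls the familywise error rate across all three comparisons on its own.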

Statistical Model: The model for a One-Way ANOVA is represented as:

Y_ij = μ + τ_i + ε_ij, where Y_ij is the response of the j-th subject in the i-th group, μ is the overall mean, τ_i is the effect of the i-th treatment, and ε_ij is the random error term [14].

Protocol 2: Two-Way ANOVA for Analyzing Interaction Effects

Two-Way ANOVA extends the analysis to two independent factors, allowing researchers to test for interaction effects—where the effect of one factor depends on the level of another factor [14].

- Example: Investigating a new drug's effect on cholesterol levels, considering both Drug Dosage (Low, High) and Patient Age Group (Young, Elderly) as factors.

Experimental Workflow:

- Design: Assign subjects to groups that represent all combinations of the two factors (e.g., Young/Low Dose, Young/High Dose, Elderly/Low Dose, Elderly/High Dose).

- Measurement: Record the final cholesterol level for each subject.

- Analysis: Perform a Two-Way ANOVA. The model will test three hypotheses:

  - Main effect of Dosage: Is there a difference between Low and High dose across all age groups?

  - Main effect of Age Group: Is there a difference between Young and Elderly across all dosages?

  - Interaction effect (Dosage * Age Group): Does the effect of dosage depend on the patient's age group (or vice versa)?

Statistical Model:

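Y_ijk = μ + α_i + β_j + (αβ)_ij + ε_ijk, where Y_ijk is the response of the k-th subject at level i of the first factor and level j of the second, μ is the overall mean, α_i is the effect of the i-th level of the first factor, β_j is the effect of the j-th level of the second factor, (αβ)_ij is the interaction effect between them, and ε_ijk is the random error term [14].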
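For a balanced design (the same number of subjects in every cell), the three hypotheses can be tested by partitioning the sums of squares directly. The sketch below assumes a 2 × 2 layout with simulated cholesterol values; the cell means, the noise level, and the function name `two_way_anova_balanced` are illustrative assumptions. Unbalanced designs need a regression-based approach instead.

```python
import numpy as np
from scipy import stats

def two_way_anova_balanced(data):
    """Two-way ANOVA for data of shape (a, b, n): a x b cells with n replicates each."""
    a, b, n = data.shape
    grand = data.mean()
    mean_a = data.mean(axis=(1, 2))     # marginal means of the first factor
    mean_b = data.mean(axis=(0, 2))     # marginal means of the second factor
    cell = data.mean(axis=2)            # cell means

    ss = {
        "A": b * n * np.sum((mean_a - grand) ** 2),
        "B": a * n * np.sum((mean_b - grand) ** 2),
        "AxB": n * np.sum((cell - mean_a[:, None] - mean_b[None, :] + grand) ** 2),
    }
    ss_error = np.sum((data - cell[:, :, None]) ** 2)
    df = {"A": a - 1, "B": b - 1, "AxB": (a - 1) * (b - 1)}
    df_error = a * b * (n - 1)
    ms_error = ss_error / df_error

    results = {}
    for term in ss:
        f_value = (ss[term] / df[term]) / ms_error
        results[term] = (f_value, stats.f.sf(f_value, df[term], df_error))
    return results, ss, ss_error

rng = np.random.default_rng(1)
# Hypothetical cell means: rows = dosage (Low, High), columns = age (Young, Elderly)
cell_means = np.array([[200.0, 205.0], [185.0, 200.0]])
data = cell_means[:, :, None] + rng.normal(0, 10, size=(2, 2, 10))

results, ss, ss_error = two_way_anova_balanced(data)
for term, (f_value, p) in results.items():
    print(f"{term}: F = {f_value:.2f}, p = {p:.4f}")
```

Here "A" corresponds to dosage, "B" to age group, and "AxB" to their interaction; the four sums of squares add up to the total sum of squares.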

The following diagram illustrates the logical decision process for selecting the appropriate statistical test based on the experimental design, incorporating the key concepts of ANOVA.

The following table details key solutions and resources essential for implementing ANOVA in a research or drug development setting.

Table 3: Key Research Reagent Solutions for ANOVA-Based Experiments

| Item / Solution | Function in Experimental Analysis |

|---|---|

| Statistical Software (R, SAS, Python) | Provides the computational engine to perform complex ANOVA calculations, generate accurate F- and p-values, and run necessary assumption checks and post-hoc tests [14]. |

| Data Visualization Tools | Enables the creation of plots (e.g., box plots, interaction plots) to visually assess data distribution, group differences, and potential interaction effects between factors before and after formal statistical testing. |

| Normality Test Algorithms | Statistical routines (e.g., Shapiro-Wilk test) used to validate the key ANOVA assumption that the dependent variable is approximately normally distributed within each group. |

| Homogeneity of Variance Tests | Procedures (e.g., Levene's Test) that check the critical ANOVA assumption that the variances across the compared groups are equal, ensuring the validity of the F-test result [15]. |

| Post-Hoc Test Suite | A collection of follow-up tests (e.g., Tukey's HSD, Bonferroni) used after a significant ANOVA result to perform all pairwise comparisons between groups while controlling the familywise error rate [15]. |

The choice between a t-test and ANOVA is not a matter of preference but of rigorous statistical principle. Using multiple t-tests for multi-group comparisons is a fundamentally flawed approach that leads to an unacceptably high risk of false discoveries, jeopardizing the integrity of research conclusions. ANOVA provides a scientifically sound framework that controls error rates and offers a robust omnibus test for detecting any significant differences among three or more groups. For researchers and drug development professionals committed to data integrity and methodological rigor, mastering the application of ANOVA and its variants is not just beneficial—it is essential for generating reliable, defensible, and impactful scientific evidence.

Analysis of Variance (ANOVA) is a fundamental statistical method developed by Ronald Fisher in the early 20th century that allows researchers to compare means across three or more groups by analyzing different sources of variation [1] [6]. In pharmaceutical research and method comparison studies, understanding the sources of variability is crucial for validating analytical methods, ensuring manufacturing consistency, and interpreting clinical trial results accurately. The core principle of ANOVA involves partitioning total observed variance into systematic between-group components and random within-group components, providing a powerful framework for determining whether observed differences in data reflect true treatment effects or merely random fluctuations [17] [18].

Variance decomposition enables drug development professionals to distinguish between meaningful experimental effects and natural variability, which is particularly important when assessing drug efficacy, batch consistency, or analytical method performance [19]. By quantifying how much variability arises from different sources, researchers can make informed decisions about product quality, process stability, and experimental findings. This article will explore both the theoretical foundations and practical applications of variance partitioning through ANOVA, with specific examples relevant to pharmaceutical and scientific research contexts.

Theoretical Foundations: Between-Group vs. Within-Group Variance

Defining the Variance Components

In ANOVA, total variance is partitioned into two primary components: between-group variance and within-group variance [17]. Between-group variance (also called treatment variance, and measured by the between-group sum of squares, SSB) quantifies how much the group means differ from each other and from the overall grand mean [20]. This component represents the systematic variation that potentially results from experimental treatments or group classifications. In pharmaceutical contexts, this might reflect differences between drug formulations, manufacturing batches, or analytical methods. The between-group variation is calculated as the sum of squared differences between each group's mean and the overall grand mean, weighted by sample size: SSB = Σnⱼ(X̄ⱼ - X̄..)², where nⱼ is the sample size of group j, X̄ⱼ is the mean of group j, and X̄.. is the overall mean [17].

Within-group variance (also called error variance, and measured by the within-group sum of squares, SSW) captures the variability of individual observations within each group around their respective group means [21]. This component represents random, unexplained variation that occurs even under identical experimental conditions. In drug development, this might encompass biological variability between subjects, measurement error in analytical instruments, or environmental fluctuations. The within-group variation is calculated as the sum of squared differences between each observation and its group mean across all groups: SSW = ΣΣ(Xij - X̄ⱼ)², where Xij represents the i-th observation in group j [17]. Together the two components add up to the total sum of squares, SST = SSB + SSW. The relationship between these components can be visualized as follows:

The F-Statistic: Ratio of Variances

The core test statistic in ANOVA is the F-ratio, which compares between-group variance to within-group variance [17] [18]. This ratio follows an F-distribution under the null hypothesis that all group means are equal. The F-statistic is calculated as F = MSB/MSW, where MSB (Mean Square Between) is SSB divided by its degrees of freedom (k-1, where k is the number of groups), and MSW (Mean Square Within) is SSW divided by its degrees of freedom (N-k, where N is the total sample size) [17]. A significantly large F-value indicates that the between-group variation substantially exceeds what would be expected from random within-group variation alone, providing evidence that not all group means are equal [18].
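To make the role of within-group variability concrete, the sketch below computes F and its p-value from summary statistics for two scenarios that share the same group means but differ in spread. The means, standard deviations, and group size are hypothetical, and the helper `f_from_summary` assumes equal group sizes and a common standard deviation.

```python
from scipy import stats

def f_from_summary(means, sd, n):
    """F-statistic and p-value for k equal-sized groups with a common SD."""
    k = len(means)
    grand = sum(means) / k                                    # valid because group sizes are equal
    msb = n * sum((m - grand) ** 2 for m in means) / (k - 1)  # SSB / (k - 1)
    msw = sd ** 2                                             # pooled within-group variance
    f_value = msb / msw
    p_value = stats.f.sf(f_value, k - 1, k * (n - 1))         # upper tail, df2 = N - k
    return f_value, p_value

means = [10.0, 12.0, 14.0]   # hypothetical group means, identical in both scenarios
for sd in (2.0, 8.0):
    f_value, p_value = f_from_summary(means, sd, n=10)
    print(f"SD = {sd}: F = {f_value:.3f}, p = {p_value:.4f}")
```

With a within-group SD of 2 the F-ratio is 10, well beyond what chance would produce; with an SD of 8 the same means give F = 0.625, which is entirely compatible with the null hypothesis.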

When the between-group variation is large compared to the within-group variation, the F-statistic increases, making it more likely to reject the null hypothesis [17]. As shown in the conceptual diagram below, the same between-group difference can yield different conclusions depending on the amount of within-group variability:

Experimental Protocols for Variance Component Analysis

One-Way ANOVA Design and Execution

The one-way ANOVA protocol provides the fundamental framework for partitioning variance when comparing multiple groups under a single experimental factor [6]. This design is particularly useful in pharmaceutical research for comparing drug formulations, manufacturing processes, or analytical methods. The experimental workflow involves several key stages, from study design through interpretation, as illustrated below:

For valid ANOVA results, three key assumptions must be verified: normality (residuals should be approximately normally distributed), homogeneity of variance (groups should have similar variances), and independence (observations must be independent of each other) [6]. Violations of independence are particularly serious and can invalidate results, while ANOVA is generally robust to minor violations of normality and homogeneity, especially with equal sample sizes [6]. Pharmaceutical researchers should use diagnostic plots and statistical tests (e.g., Levene's test for homogeneity, Shapiro-Wilk test for normality) to verify these assumptions before interpreting ANOVA results.

Random and Mixed Effects Models for Stability Studies

In drug development, variance components analysis extends basic ANOVA to quantify different sources of random variability, which is particularly important in stability studies and quality control [19]. Unlike fixed-effects models where levels are predetermined, random-effects models treat factor levels as random samples from larger populations, allowing generalization beyond the specific levels studied [1]. For example, in a stability study examining drug shelf life, batches might be treated as random factors if they represent a larger population of manufacturing batches.

The mixed-effects model incorporates both fixed and random factors and is commonly used in pharmaceutical research [1]. The variance components output from such analyses provides estimates of the contribution of each random factor to total variability. For example, Minitab's variance components analysis for a stability study might show that 72.91% of total variance comes from batch-to-batch differences, while only 27.06% comes from random error, indicating that batch variability is the dominant source of variation [19]. The interpretation of variance components includes examining the standard error of each variance estimate, Z-values, and associated p-values to determine if each variance component is significantly greater than zero [19].
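The idea of attributing a share of total variance to each source can be sketched for the simplest case, a balanced one-way random-effects layout with batch as the only random factor. This uses the classical method-of-moments (ANOVA) estimator, which is an assumption of this example and not necessarily the estimation method behind the Minitab output cited above; the assay values and the name `variance_components` are hypothetical.

```python
import numpy as np

def variance_components(data):
    """Method-of-moments estimates for a balanced one-way random-effects layout.

    data: array-like of shape (n_batches, n_replicates).
    """
    data = np.asarray(data, dtype=float)
    b, n = data.shape
    batch_means = data.mean(axis=1)
    msb = n * np.sum((batch_means - data.mean()) ** 2) / (b - 1)      # between batches
    msw = np.sum((data - batch_means[:, None]) ** 2) / (b * (n - 1))  # within batches
    var_batch = max((msb - msw) / n, 0.0)   # negative estimates are truncated at zero
    var_error = msw
    total = var_batch + var_error
    return {
        "batch": var_batch,
        "error": var_error,
        "batch_pct": 100 * var_batch / total,
        "error_pct": 100 * var_error / total,
    }

# Hypothetical % API assay values: three batches, two replicates each
assay = [[99.0, 101.0], [103.0, 105.0], [95.0, 97.0]]
components = variance_components(assay)
for name, value in components.items():
    print(f"{name}: {value:.2f}")
```

For these toy values MSB = 32 and MSW = 2, so the batch component is (32 - 2) / 2 = 15 and the error component is 2, which puts roughly 88% of the total variance on batch-to-batch differences.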

Comparative Experimental Data and Analysis

Drug Stability Study: Variance Components Analysis

In pharmaceutical stability testing, variance components analysis helps quantify different sources of variability to determine product shelf life and assess manufacturing consistency. The following table summarizes results from a simulated stability study analyzing the percentage of active pharmaceutical ingredient (API) over time across multiple batches:

Table 1: Variance Components Analysis for Drug Stability Study

| Variance Source | Variance Component | % of Total Variance | Standard Error | Z-Value | P-Value |

|---|---|---|---|---|---|

| Batch | 0.527 | 72.91% | 0.304 | 1.736 | 0.041 |

| Month × Batch | 0.0002 | 0.02% | 0.0001 | 1.224 | 0.110 |

| Error (Within) | 0.196 | 27.06% | 0.037 | 5.326 | <0.001 |

| Total | 0.723 | 100% | - | - | - |

Data adapted from Minitab variance components interpretation guide [19].

The results show that batch-to-batch differences account for most of the variability (72.91%) in the stability data, while the time × batch interaction contributes minimally (0.02%). This pattern suggests that manufacturing consistency across batches is the primary factor influencing drug stability, rather than degradation patterns over time varying between batches. The p-value for batch variance (p=0.041) is below 0.05, which suggests that batch differences reflect systematic variation rather than random noise, although Z-tests of variance components are only approximate when the number of batches is small. Such findings would typically prompt investigations into manufacturing process control and potentially justify establishing more stringent batch release specifications.

Method Comparison: Bond Strength Testing

ANOVA is frequently used to compare analytical methods or testing procedures in pharmaceutical research. The following example compares bond strength measurements across three different resin cement types used in dental drug delivery systems:

Table 2: One-Way ANOVA for Resin Bond Strength Comparison

| Resin Type | Sample Size | Mean Bond Strength (MPa) | Standard Deviation | Grouping* |

|---|---|---|---|---|

| A | 15 | 28.3 | 2.1 | a |

| B | 15 | 31.7 | 2.3 | b |

| C | 15 | 35.2 | 2.0 | c |

*Note: Groups that do not share a letter differ significantly (p < 0.05) according to Tukey's HSD post-hoc test. Data structure adapted from clinical ANOVA example [18].

The corresponding ANOVA table for this comparison shows a statistically significant difference between resin types:

Table 3: ANOVA Table for Bond Strength Data

| Variance Source | Sum of Squares | Degrees of Freedom | Mean Square | F-Value | P-Value |

|---|---|---|---|---|---|

| Between Groups | 357.1 | 2 | 178.55 | 39.1 | <0.001 |

| Within Groups | 191.8 | 42 | 4.57 | - | - |

| Total | 548.9 | 44 | - | - | - |

Table adapted from clinical research ANOVA example [18]; entries follow from the summary statistics in Table 2, whose means are rounded, so the values are approximate.

The significant F-value (F=39.1, p<0.001) indicates that differences between resin types exceed what would be expected by random variation alone. Here SSB = 15 × [(28.3 - 31.73)² + (31.7 - 31.73)² + (35.2 - 31.73)²] ≈ 357.1 and SSW = 14 × (2.1² + 2.3² + 2.0²) = 191.8. Post-hoc testing with Tukey's HSD would reveal that all three resins differ significantly from each other, with Resin C showing the highest bond strength. This method comparison provides objective data for selecting materials in drug delivery system design: Resin C is the statistically superior option, and the final choice should weigh that result against the practical implications for product performance.

Essential Research Reagent Solutions

Successful variance partitioning in pharmaceutical research requires appropriate experimental materials and statistical tools. The following table outlines key resources for implementing ANOVA-based method comparisons:

Table 4: Essential Research Reagents and Tools for Variance Analysis

| Reagent/Tool | Function | Application Example |

|---|---|---|

| Statistical Software (Minitab, R, SPSS, SAS) | Variance components estimation and ANOVA calculation | Calculating F-statistics, p-values, and variance component percentages [19] |

| Levene's Test Protocol | Verification of homogeneity of variance assumption | Testing equal variance assumption before ANOVA interpretation [6] |

| Shapiro-Wilk Normality Test | Assessment of normal distribution assumption | Validating normality assumption for residual values [6] |

| Tukey's HSD Procedure | Post-hoc multiple comparisons after significant ANOVA | Identifying which specific group means differ significantly [18] |

| Bonferroni Correction | Adjustment for multiple comparisons | Controlling Type I error rate when conducting multiple hypothesis tests [18] |

| Random/Mixed Effects Models | Analysis with random factors | Partitioning variance in stability studies with randomly selected batches [19] |

These tools enable researchers to implement proper variance partitioning methodologies, validate statistical assumptions, and draw appropriate conclusions from experimental data. Pharmaceutical researchers should select tools based on their specific experimental design, with commercial software like Minitab offering specialized variance components analysis for stability studies [19], while open-source options like R provide flexibility for complex experimental designs.

Variance partitioning through ANOVA provides a powerful framework for method comparison and decision-making in pharmaceutical research and drug development. By systematically distinguishing between-group and within-group variability, researchers can identify significant treatment effects while accounting for random variation. The experimental data and protocols presented demonstrate how variance components analysis quantifies different sources of variability, enabling evidence-based decisions about product quality, manufacturing consistency, and analytical method performance.

For researchers implementing these techniques, attention to experimental design and statistical assumptions is crucial. Ensuring adequate sample sizes, verifying normality and homogeneity of variance, and selecting appropriate post-hoc tests all contribute to valid and interpretable results. When properly applied, variance partitioning becomes an indispensable tool for advancing pharmaceutical science through rigorous, data-driven methodology comparisons.

Understanding the core terminology of experimental design is fundamental to conducting valid research, particularly when using statistical methods like Analysis of Variance (ANOVA) for comparing different methods or treatments. This guide provides a clear comparison of these essential concepts, framed within the context of ANOVA research for scientific and drug development applications.

Core Concepts: Variables, Factors, and Levels

At the heart of any experiment is the investigation of a cause-and-effect relationship. The key terminology helps to precisely define this investigation [22].

- Independent Variable: This is the variable that the experimenter intentionally manipulates or controls to observe its effect. It is the presumed cause in the cause-and-effect relationship [22] [23]. In the context of ANOVA, an independent variable is often called a factor [24] [25].

- Dependent Variable: This is the variable that is measured or observed as the outcome of the experiment. It is the presumed effect, and its value depends on the changes made to the independent variable [22] [23].

- Levels: These represent the different variations or categories of a single factor (independent variable) [25] [26]. For example, a factor "Dosage" might have three levels: "0 mg," "50 mg," and "100 mg" [27].

- Factors: In ANOVA, an independent variable is referred to as a factor. An experiment can have one factor (One-way ANOVA) or multiple factors (e.g., Factorial ANOVA) [25] [26].

The table below provides a comparative summary of these core terms.

Table 1: Comparison of Key Terminology in Experimental Design

| Term | Definition | Role in the Experiment | Example in a Drug Study |

|---|---|---|---|

| Independent Variable [22] | The variable that is manipulated or controlled by the researcher. | The presumed cause; what is changed to see if it has an effect. | The dosage of a new drug administered to patients [27] [22]. |

| Dependent Variable [22] | The variable that is measured as the outcome. | The presumed effect; what changes in response to the independent variable. | The measured blood sugar level of the patients after the trial period [24]. |

| Factor [25] | Another term for an independent variable in the context of ANOVA. | Defines a categorical variable whose effect on the dependent variable is being studied. | "Drug Dosage" is one factor. "Patient Gender" could be a second factor [25]. |

| Levels [25] | The different values or categories that a factor can take. | Specifies the distinct groups within a factor for comparison. | For the "Drug Dosage" factor, levels could be "0 mg," "50 mg," and "100 mg" [27]. |

Application in ANOVA Research Designs

ANOVA uses this terminology to partition the total variability in data, determining if the differences between group means (defined by factor levels) are statistically significant [1]. The design is named based on the number of factors used.

Types of ANOVA and Their Terminology

Table 2: Comparison of ANOVA Types Based on Experimental Design

| ANOVA Type | Number of Factors | Typical Design Notation | Example Research Question |

|---|---|---|---|

| One-Way ANOVA [26] | One | Single factor with k levels (e.g., 3 levels). | Does the type of fertilizer (Factor with 3 levels: Brand A, B, C) affect plant growth? [24] |

| Factorial ANOVA (e.g., Two-Way) [25] [26] | Two or more | Number of levels in each factor (e.g., 3x2 design). | Do both drug dosage (3 levels) and patient gender (2 levels) influence recovery rate? [25] |

Experimental Design and Workflow

A typical workflow for a factorial ANOVA study, such as a drug efficacy trial, involves several key stages from defining the research question to interpreting the results. The following diagram visualizes this process and the role of the key terminology within it.

Experimental Protocols and Data Presentation

To illustrate these concepts with concrete data, consider a hypothetical experiment comparing the effectiveness of two new drugs (Drug A and Drug B) against a Placebo, while also accounting for patient gender.

Detailed Methodology

- Research Question: Do the type of drug and patient gender have a significant effect on post-treatment blood pressure?

- Independent Variables (Factors):

  - Factor 1: Drug Type, with three levels: Placebo, Drug A, Drug B.

  - Factor 2: Patient Gender, with two levels: Male, Female [25].

- Dependent Variable: Reduction in systolic blood pressure (mm Hg) for each participant after a 4-week treatment period; the ANOVA compares the group means of this reduction.

- Experimental Design: 3x2 Factorial ANOVA, Between-Subjects Design [27] [25].

- Subjects: 120 participants (60 Male, 60 Female) with mild hypertension.

- Assignment: Within each gender group, participants are randomly assigned to one of the three drug-level groups (20 participants per cell).

- Procedure: After screening and baseline measurements, subjects undergo a 4-week double-blind treatment. Systolic blood pressure is measured again at the end of the period under standardized conditions.

- Control: A placebo group is included to control for the placebo effect. Other variables like diet and time of measurement are kept constant where possible [23].
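
The assignment step in this methodology (random allocation within each gender group, 20 participants per cell) can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the function name `assign_within_strata`, the participant IDs, and the seed are all hypothetical, and a real trial would use a validated randomization system.

```python
import random
from collections import Counter


def assign_within_strata(participants, treatments, seed=0):
    """Randomly assign participants to treatments separately within each stratum.

    participants: list of (participant_id, stratum) tuples.
    Returns a dict mapping participant_id -> treatment.
    Assumes each stratum size is a multiple of the number of treatments.
    """
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    by_stratum = {}
    for pid, stratum in participants:
        by_stratum.setdefault(stratum, []).append(pid)

    allocation = {}
    for ids in by_stratum.values():
        per_arm = len(ids) // len(treatments)
        # Build a balanced list of treatment labels, then shuffle it
        labels = [t for t in treatments for _ in range(per_arm)]
        rng.shuffle(labels)
        for pid, label in zip(ids, labels):
            allocation[pid] = label
    return allocation


# Hypothetical participant IDs: 60 male, 60 female
participants = [(f"M{i:02d}", "Male") for i in range(60)]
participants += [(f"F{i:02d}", "Female") for i in range(60)]
treatments = ["Placebo", "Drug A", "Drug B"]

allocation = assign_within_strata(participants, treatments, seed=42)
strata = dict(participants)
cell_counts = Counter((strata[pid], arm) for pid, arm in allocation.items())
for cell, n in sorted(cell_counts.items()):
    print(cell, n)
```

Shuffling a balanced list of labels, rather than drawing a treatment independently for each participant, guarantees equal cell sizes, which keeps the 3x2 design balanced.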

Summarized Quantitative Data

The simulated results of such an experiment, showing the mean reduction in blood pressure for each group, might be structured as follows.

Table 3: Simulated Data Table - Mean Blood Pressure Reduction (mm Hg) by Drug and Gender

| Drug Type | Male | Female | Row Mean |

|---|---|---|---|

| Placebo | 3.2 | 2.8 | 3.0 |

| Drug A | 7.5 | 12.1 | 9.8 |

| Drug B | 11.8 | 9.4 | 10.6 |

| Column Mean | 7.5 | 8.1 | Grand Mean = 7.8 |

This data structure allows an ANOVA to test for:

- Main Effect of Drug: Is there a significant difference between the row means (3.0, 9.8, 10.6)?

- Main Effect of Gender: Is there a significant difference between the column means (7.5, 8.1)?

- Interaction Effect (Drug x Gender): Does the effect of the drug depend on the patient's gender? For instance, is Drug A more effective for females while Drug B is more effective for males? [25]
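
The marginal means in Table 3, and the pattern that the interaction test examines, can be reproduced from the cell means alone. The following is a minimal sketch that assumes equal cell sizes (as in the design above); the variable names are illustrative.

```python
# Simulated cell means from Table 3 (mm Hg reduction); equal cell sizes assumed
cell_means = {
    "Placebo": {"Male": 3.2, "Female": 2.8},
    "Drug A": {"Male": 7.5, "Female": 12.1},
    "Drug B": {"Male": 11.8, "Female": 9.4},
}

drugs = list(cell_means)
genders = ["Male", "Female"]

row_means = {d: sum(cell_means[d].values()) / len(genders) for d in drugs}
col_means = {g: sum(cell_means[d][g] for d in drugs) / len(drugs) for g in genders}
grand_mean = sum(row_means.values()) / len(drugs)

# Interaction term for each cell: what remains after removing both main effects
interaction = {
    (d, g): cell_means[d][g] - row_means[d] - col_means[g] + grand_mean
    for d in drugs
    for g in genders
}

for d in drugs:
    diff = cell_means[d]["Female"] - cell_means[d]["Male"]
    print(f"{d}: row mean = {row_means[d]:.1f}, female - male = {diff:+.1f}")
```

The female-minus-male difference changes sign between Drug A and Drug B, which is the pattern an interaction test would evaluate. Whether it is statistically significant depends on the within-cell variability, which cell means alone do not provide.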

The Scientist's Toolkit: Research Reagent Solutions

Beyond the statistical concepts, conducting a robust ANOVA-based study requires specific materials and methodological tools.

Table 4: Essential Materials and Methodological Tools for ANOVA Experiments

| Item / Solution | Function in the Experiment |

|---|---|

| Statistical Software (e.g., R, SPSS) | To perform the complex calculations of ANOVA, generate F-statistics, p-values, and post-hoc tests [28] [26]. |

| Randomization Protocol | A method to randomly assign subjects to treatment groups to minimize selection bias and distribute extraneous variables evenly [27]. |

| Placebo | An inert substance used in the control group to account for the placebo effect, helping to isolate the true effect of the active drug [26]. |

| Standardized Measurement Protocol | A strict procedure for measuring the dependent variable (e.g., blood pressure) to ensure consistency and reduce measurement error across all subjects. |

| Blinding (Double-Blind Design) | A procedure where neither the subjects nor the experimenters know who is receiving which treatment, to prevent bias in the results [1]. |

Analysis of Variance (ANOVA) stands as a cornerstone of modern statistical science, bridging the visionary work of its creator, Sir Ronald Fisher, with cutting-edge applications in today's most data-intensive fields. This framework provides a robust methodological foundation for comparing multiple group means simultaneously, making it indispensable for researchers, scientists, and drug development professionals engaged in rigorous method comparison.

Historical Foundation: Sir Ronald Fisher's Revolutionary Work

The genesis of ANOVA is inextricably linked to Sir Ronald Aylmer Fisher (1890–1962), a British polymath widely regarded as the "Father of Modern Statistics" [29]. Fisher's work at the Rothamsted Experimental Station in England during the 1920s marked a pivotal moment in statistical history [30]. Confronted with vast amounts of agricultural data from crop experiments dating back to the 1840s, he sought to develop more sophisticated methods for analyzing complex experimental data [30] [31].

Fisher's revolutionary insight was recognizing that total variation in a dataset could be systematically partitioned into meaningful components. He introduced the term "variance" in a 1918 article on theoretical population genetics and developed its formal analysis [1]. His first application of ANOVA to data analysis was published in 1921 as Studies in Crop Variation I, which divided time series variation into components representing annual causes and slow deterioration [1]. This was followed in 1923 by Studies in Crop Variation II, written with Winifred Mackenzie, which studied yield variation across plots sown with different varieties and subjected to different fertilizer treatments [1].

ANOVA gained widespread recognition after Fisher included it in his seminal 1925 book Statistical Methods for Research Workers, which became one of the twentieth century's most influential books on statistical methods [30] [1]. Beyond the technique itself, Fisher pioneered the principles of experimental design, including randomization and randomized blocks, to minimize bias and control external variables [29]. He argued that experiments should be designed to ensure high validity in data collection, remarking in 1938 that "to call in the statistician after the experiment may be no more than asking him to perform a post-mortem examination: he may be able to say what the experiment died of" [29].

Core Concepts and Methodology

The Fundamental Principle of ANOVA

ANOVA operates on a deceptively simple but powerful principle: comparing the amount of variation between group means to the amount of variation within each group [1]. If the between-group variation is substantially larger than the within-group variation, it suggests the group means are likely different [1]. This comparison is quantified using Fisher's F-statistic, which represents the ratio of between-group variance to within-group variance [18] [24]:

F = Variance between groups / Variance within groups [32]

A larger F-value indicates that differences between group means are greater than what would be expected by chance alone [18]. The statistical significance of this F-value is determined by comparing it to critical values in the F-distribution, which Fisher also introduced [29].

Key ANOVA Terminology and Components

To effectively implement ANOVA, researchers must understand its core components and terminology:

- Dependent Variable: The outcome being measured, which is theorized to be affected by the independent variables [24].

- Independent Variable(s): The variables that are manipulated or that define the groups, and that may affect the dependent variable; in ANOVA terminology, these are called factors [24].

- Levels: The different values or categories of an independent variable [24].

- Null Hypothesis (H₀): The hypothesis that there is no difference between the group means (μ₁ = μ₂ = μ₃ = ... = μₖ) [33].

- Alternative Hypothesis (H₁): The hypothesis that at least one group mean differs significantly from the others [24].

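- Sum of Squares: The sum of squared deviations used to quantify variation [18]:

  - Total Sum of Squares (SST): variation of all observations around the grand mean.

  - Between-Groups Sum of Squares (SSB): variation of the group means around the grand mean.

  - Within-Groups Sum of Squares (SSW): variation of observations around their own group mean.

The relationship between these components is expressed as: SST = SSB + SSW [32].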
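
This identity follows from splitting each deviation from the grand mean into a within-group part and a between-group part. A short derivation is given below; the notation is assumed here for illustration, with x_{ij} denoting observation j in group i, n_i the size of group i, \bar{x}_i the group mean, and \bar{x} the grand mean.

```latex
\begin{aligned}
\mathrm{SST}
  &= \sum_{i=1}^{k}\sum_{j=1}^{n_i} (x_{ij} - \bar{x})^2
   = \sum_{i=1}^{k}\sum_{j=1}^{n_i}
     \bigl[(x_{ij} - \bar{x}_i) + (\bar{x}_i - \bar{x})\bigr]^2 \\
  &= \underbrace{\sum_{i=1}^{k}\sum_{j=1}^{n_i} (x_{ij} - \bar{x}_i)^2}_{\mathrm{SSW}}
   + \underbrace{\sum_{i=1}^{k} n_i (\bar{x}_i - \bar{x})^2}_{\mathrm{SSB}}
   + 2\sum_{i=1}^{k} (\bar{x}_i - \bar{x})
     \underbrace{\sum_{j=1}^{n_i} (x_{ij} - \bar{x}_i)}_{=\,0}
\end{aligned}
```

The cross term vanishes because deviations from a group's own mean sum to zero within that group, leaving SST = SSW + SSB.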

Types of ANOVA Models

ANOVA encompasses several classes of models suited to different experimental designs:

- Fixed-effects models (Class I): Applied when the experimenter applies one or more treatments to subjects to see whether response values change; the treatments are fixed and of specific interest [1].

- Random-effects models (Class II): Used when various factor levels are sampled from a larger population; the levels themselves are random variables [1].

- Mixed-effects models (Class III): Contain both fixed and random effects types, with appropriately different interpretations for each [1].
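
For the random-effects case, the quantity of interest is usually how much of the total variance is attributable to the randomly sampled factor. The sketch below uses the ANOVA (method-of-moments) estimator for a balanced one-way random-effects model; the function name and the batch data are hypothetical, and dedicated mixed-model software would normally be used for unbalanced or more complex designs.

```python
from statistics import mean


def variance_components_balanced(groups):
    """ANOVA (method-of-moments) variance components, balanced one-way random model.

    groups: list of equal-length lists, one per randomly sampled level (e.g. batch).
    Returns (between_component, within_component).
    """
    k = len(groups)
    n = len(groups[0])
    if any(len(g) != n for g in groups):
        raise ValueError("balanced design required: all groups must have equal size")

    grand = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]

    ms_between = n * sum((m - grand) ** 2 for m in group_means) / (k - 1)
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    ms_within = ss_within / (k * (n - 1))

    # E[MSB] = sigma2_within + n * sigma2_between, so solve for the between part;
    # negative estimates are truncated at zero.
    between = max((ms_between - ms_within) / n, 0.0)
    return between, ms_within


# Hypothetical assay results (% label claim) for three randomly chosen batches
batches = [
    [99.1, 99.4, 98.8, 99.3],
    [100.2, 100.6, 100.1, 100.3],
    [98.5, 98.9, 98.7, 98.3],
]
between, within = variance_components_balanced(batches)
total = between + within
print(f"between-batch share: {between / total:.1%}")
print(f"within-batch share:  {within / total:.1%}")
```

In a fixed-effects analysis the same mean squares would feed an F-test on the specific batches; treating batch as random instead asks how variable batches are in general.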

Figure 1: ANOVA Analysis Workflow

ANOVA in Modern Pharmaceutical Research and Drug Development

Key Innovations and Applications

The pharmaceutical industry has driven significant advancements in ANOVA applications, transforming it from a basic statistical technique to an advanced analytical tool that drives evidence-based decision-making [32].

Table 1: Modern ANOVA Innovations in Pharmaceutical Research

| Innovation | Key Application | Impact |

|---|---|---|

| Mixed Effects Models [32] | Multi-center trials, longitudinal studies | Accounts for hierarchical data structures, increases statistical power while controlling Type I error |

| Integration with Big Data Infrastructures [32] | Processing terabytes of patient data and genomic information | Detects subtle treatment effects invisible in smaller datasets |

| Real-time Analytics [32] | Drug safety monitoring, clinical trial management | Enables continuous assessment of accumulating data without inflating Type I error rates |

| Adaptive Trial Designs [32] | Clinical research with protocol modifications based on interim analyses | Reallocates participants to promising treatment arms, adjusts sample sizes based on observed effect sizes |

| AI-Driven Enhancements [32] | Identification of optimal transformation functions, detecting interaction effects | Increases sensitivity and specificity of treatment effect detection |

Experimental Protocols and Methodologies

Protocol for Metabolomic Studies Using ANOVA-Based Approaches

Recent research has evaluated multivariate ANOVA-based methods for determining relevant variables in experimentally designed metabolomic studies [34]. The protocol involves:

- Experimental Design: Creating studies with multiple factors (e.g., zebrafish embryos exposed to two endocrine disruptor chemicals, each at two concentration levels) [34].

- Data Collection: Using liquid chromatography coupled to mass spectrometry (LC-MS) to generate complex metabolomic datasets [34].

- Method Application: Implementing multiple ANOVA-based approaches:

- ASCA (ANOVA Simultaneous Component Analysis): Does not consider residuals for modelling ANOVA-decomposed matrices of effects [34].

- rMANOVA (regularized MANOVA): Allows correlation between variables without requiring all variances to be equal [34].

- GASCA (group-wise ANOVA-simultaneous component analysis): Uses group-wise sparsity in the presence of correlated variables to facilitate interpretation [34].

- Validation: Comparing results with traditional methods like partial least squares discriminant analysis (PLS-DA) to verify detected significant factors and relevant variables [34].

Protocol for Clinical Trial Analysis

In clinical trial settings, ANOVA implementation follows a structured approach:

- Trial Design: Establishing inclusion/exclusion criteria, randomization procedures, and blinding protocols [32].

- Data Collection: Measuring primary and secondary endpoints across treatment groups [18].

- Assumption Verification: Testing for normality, homogeneity of variance, and independence of observations [33].

- Model Selection: Choosing appropriate ANOVA design based on trial structure (one-way, factorial, repeated measures) [24].

- Analysis: Calculating F-statistics and p-values for main effects and interactions [18].

- Post-hoc Testing: If significant effects are found, conducting appropriate follow-up tests (Tukey's HSD, Bonferroni, etc.) to identify specific group differences [18].

Table 2: Comparison of Multivariate ANOVA Methods in Metabolomic Studies

| Method | Key Features | Advantages | Limitations |

|---|---|---|---|

| ASCA [34] | Does not consider residuals for modelling effects | Successful for high-dimensional data | Assumes equal variance and no correlation between variables |

| rMANOVA [34] | Intermediate between MANOVA and ASCA | Allows correlation between variables without requiring all variances to be equal | Requires careful parameter selection |

| GASCA [34] | Uses group-wise sparsity approach | More reliable relevant variable identification; handles correlated variables | Complex implementation |

Practical Implementation and Analysis

Assumptions and Requirements

Valid application of ANOVA requires verifying several statistical assumptions [33]:

- Normal Distribution: Data within each group should follow a normal distribution pattern, verifiable through histograms, Q-Q plots, or Shapiro-Wilk test [33].

- Independence of Observations: Each data point must remain independent of other observations [24] [33].

- Homogeneity of Variance: The variance within each group should remain approximately equal, testable using Levene's test [33].

When the data violate these assumptions, researchers should consider data transformations, non-parametric alternatives such as the Kruskal-Wallis test, or Welch's ANOVA for unequal variances [33].
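
To make the homogeneity-of-variance check concrete, the sketch below computes Levene's statistic in its median-centred form (often called the Brown-Forsythe variant), which is simply a one-way ANOVA F-ratio applied to absolute deviations from each group's median. The function name and data are hypothetical, and the sketch stops at the test statistic; a library routine such as `scipy.stats.levene` would also return the p-value.

```python
from statistics import mean, median


def levene_statistic(groups):
    """Levene's W with median centring (the Brown-Forsythe variant).

    W is the one-way ANOVA F-ratio computed on absolute deviations from each
    group's median. Under equal variances it is referred to F(k - 1, N - k).
    """
    # Absolute deviations from the group medians
    z = []
    for g in groups:
        med = median(g)
        z.append([abs(x - med) for x in g])

    k = len(z)
    n_total = sum(len(g) for g in z)
    z_grand = mean(v for g in z for v in g)
    z_means = [mean(g) for g in z]

    between = sum(len(g) * (m - z_grand) ** 2 for g, m in zip(z, z_means))
    within = sum((v - m) ** 2 for g, m in zip(z, z_means) for v in g)
    return (between / (k - 1)) / (within / (n_total - k))


# Hypothetical measurements: the third group is visibly more spread out
groups = [
    [10.1, 10.3, 9.9, 10.0, 10.2],
    [10.4, 10.6, 10.5, 10.3, 10.7],
    [8.0, 12.5, 10.2, 6.9, 13.1],
]
w = levene_statistic(groups)
df1, df2 = len(groups) - 1, sum(map(len, groups)) - len(groups)
print(f"Levene W = {w:.2f} on ({df1}, {df2}) degrees of freedom")
```

A large W relative to the F(k - 1, N - k) distribution suggests unequal variances, in which case Welch's ANOVA or a transformation is worth considering.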

Step-by-Step Calculation and Interpretation

The ANOVA calculation process involves several sequential steps [33]:

Calculate Sum of Squares Components:

- Total Sum of Squares (SST) = Σ(x - x̄)²

- Between-Groups Sum of Squares (SSB) = Σnᵢ(x̄ᵢ - x̄)²

- Within-Groups Sum of Squares (SSW) = SST - SSB

Determine Degrees of Freedom:

- Between groups (dfb) = k - 1

- Within groups (dfw) = N - k

- Total (dft) = N - 1

where k = number of groups and N = total sample size.

Calculate Mean Square Values:

- Mean Square Between (MSB) = SSB/dfb

- Mean Square Within (MSW) = SSW/dfw

Compute F-Statistic: F = MSB/MSW

Interpreting results involves examining the ANOVA table and considering both statistical and practical significance [33]. The p-value determines statistical significance (typically compared to α = 0.05), while effect size measures like eta-squared (η² = SSB/SST) provide context for practical applications [33].
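
The calculation steps above can be sketched from raw data as follows. This is a minimal illustration using only the Python standard library; the function name and the recovery data are hypothetical, and the p-value lookup against the F-distribution is left to statistical software.

```python
from statistics import mean


def one_way_anova(groups):
    """One-way ANOVA table quantities computed step by step from raw data."""
    all_values = [x for g in groups for x in g]
    k, n_total = len(groups), len(all_values)
    grand_mean = mean(all_values)

    # Step 1: sums of squares
    sst = sum((x - grand_mean) ** 2 for x in all_values)
    ssb = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ssw = sst - ssb

    # Step 2: degrees of freedom
    df_between, df_within = k - 1, n_total - k

    # Step 3: mean squares
    msb, msw = ssb / df_between, ssw / df_within

    # Step 4: F-statistic, plus eta-squared as an effect size
    return {
        "SSB": ssb, "SSW": ssw, "SST": sst,
        "df_between": df_between, "df_within": df_within,
        "MSB": msb, "MSW": msw,
        "F": msb / msw, "eta_squared": ssb / sst,
    }


# Hypothetical recovery (%) measured by three analytical methods
data = [
    [98.2, 99.1, 98.7, 98.9],
    [99.8, 100.4, 100.1, 99.9],
    [98.5, 98.8, 99.0, 98.3],
]
result = one_way_anova(data)
for name, value in result.items():
    print(f"{name}: {value:.3f}" if isinstance(value, float) else f"{name}: {value}")
```

Returning every intermediate quantity, rather than only F, makes it easy to lay the output out as a conventional ANOVA table and to report eta-squared alongside the significance test.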

Figure 2: ANOVA Variance Partitioning Logic

The Researcher's Toolkit: Essential Materials and Software

Table 3: Research Reagent Solutions for ANOVA-Based Experiments

| Item | Function | Application Context |

|---|---|---|

| Statistical Software (R, SAS, SPSS) [33] | Performs complex ANOVA calculations with various designs | All research contexts requiring statistical analysis |

| LC-MS Instrumentation [34] | Generates high-dimensional metabolomic data | Metabolomic studies, biomarker discovery |

| Data Visualization Tools | Creates diagnostic plots (Q-Q plots, residual plots) | Assumption checking, result interpretation |

| Randomization Protocols [29] | Ensures unbiased assignment to treatment groups | Clinical trials, experimental studies |

| Sample Size Calculation Tools | Determines adequate sample size for sufficient power | Study planning and design |

The ANOVA framework, from its origins in Sir Ronald Fisher's pioneering work to its contemporary applications, remains an indispensable tool for researchers conducting method comparisons. In pharmaceutical development and healthcare research, ANOVA has evolved from basic mean comparisons to sophisticated mixed models integrated with big data infrastructures and artificial intelligence [32]. These advancements have enhanced the precision of treatment effect detection, improved patient safety outcomes, and accelerated drug development timelines [32].

For today's researchers, scientists, and drug development professionals, mastering the ANOVA framework—from its fundamental principles to its modern implementations—provides a powerful approach for extracting meaningful insights from complex data. As Fisher himself demonstrated nearly a century ago, proper application of statistical reasoning remains essential for advancing scientific knowledge and improving human health outcomes.

Executing ANOVA: A Step-by-Step Guide for Method Comparison Studies

Analysis of Variance (ANOVA) is a family of statistical methods used to compare the means of two or more groups by analyzing the variance within and between these groups [1]. Developed by statistician Ronald Fisher in the early 20th century, ANOVA has become a cornerstone of modern experimental design, particularly in scientific fields such as biology, psychology, medicine, and drug development [1] [35]. The fundamental principle behind ANOVA is the partitioning of total observed variance into components attributable to different sources of variation, allowing researchers to test whether the differences between group means are statistically significant [1].

At its core, ANOVA compares the amount of variation between group means to the amount of variation within each group. If the between-group variation is substantially larger than the within-group variation, it suggests that the group means are likely different [1]. This comparison is formalized through an F-test, which produces a statistic that follows the F-distribution under the null hypothesis [1] [26]. The null hypothesis for ANOVA typically states that all population means are equal, while the alternative hypothesis states that at least one population mean is different from the others [6].

ANOVA offers significant advantages over conducting multiple t-tests when comparing more than two groups. Performing repeated t-tests increases the probability of committing a Type I error (falsely rejecting a true null hypothesis) due to the problem of multiple comparisons [36]. ANOVA controls this experiment-wise error rate by providing a single omnibus test for mean differences across all groups simultaneously [36] [37]. Following a significant ANOVA result, post-hoc tests can be conducted to determine which specific groups differ, while maintaining appropriate error control [6] [38].
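
The inflation of the Type I error rate under repeated t-tests can be illustrated numerically. The sketch below assumes the pairwise tests are independent, which is not strictly true when comparisons share groups, so the figures should be read as approximations; the function name is hypothetical.

```python
from math import comb


def familywise_error_rate(n_groups, alpha=0.05):
    """Chance of at least one false positive across all pairwise t-tests.

    Assumes the tests are independent, which pairwise comparisons sharing
    groups are not; treat the result as an approximation.
    """
    n_tests = comb(n_groups, 2)  # number of distinct pairs of groups
    return n_tests, 1 - (1 - alpha) ** n_tests


for k in (3, 4, 5, 6):
    m, fwer = familywise_error_rate(k)
    print(f"{k} groups: {m} pairwise tests, family-wise error rate ~ {fwer:.3f}")
```

With three groups the approximate family-wise rate is already about 14%, and with five groups (ten pairwise tests) it is about 40%, compared with the nominal 5% of a single omnibus F-test.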

Fundamental Concepts and Terminology

Key ANOVA Components

Understanding ANOVA requires familiarity with several fundamental concepts and terms that form the building blocks of this statistical method. The dependent variable, also called the response variable or outcome, is the continuous measure being studied that is expected to change as a result of experimental manipulations [6] [35]. This variable must be measured on an interval or ratio scale, such as weight, test scores, or reaction time [6] [37].

Independent variables, known as factors in ANOVA terminology, are the categorical variables that define the groups being compared [36] [35]. These factors are manipulated or controlled by the researcher to observe their effect on the dependent variable. Each factor consists of two or more levels, which represent the specific categories or conditions within that factor [36] [26]. For example, in a study comparing three different dosages of a drug, the factor "dosage" would have three levels: low, medium, and high.

The concepts of between-group variance and within-group variance are central to ANOVA's logic. Between-group variance measures how much the group means differ from each other and from the overall mean, reflecting the effect of the independent variable as well as random error [1]. Within-group variance, also called error variance, measures how much individual scores within each group differ from their group mean, representing random variability not explained by the independent variable [1]. The F-ratio, the test statistic for ANOVA, is calculated as the ratio of between-group variance to within-group variance [1] [26].

Types of Factors and Designs

In ANOVA, factors can be classified as either fixed or random effects. A fixed factor is one where the levels are specifically selected by the researcher and are of direct interest in themselves [38]. The conclusions drawn from the analysis apply only to these specific levels. In contrast, a random factor is one where the levels are randomly selected from a larger population of possible levels, and the researcher is interested in generalizing to the entire population of levels [35] [38].

Experimental designs in ANOVA can be categorized as crossed or nested. In crossed designs, every level of one factor appears in combination with every level of another factor [35]. For example, if all drug dosages are tested in both male and female participants, the factors are crossed. In nested designs, the levels of one factor appear only within specific levels of another factor [35]. For instance, if different researchers conduct the experiment in different cities, and each city has its own set of researchers, the researcher factor is nested within the city factor.
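
The distinction between crossed and nested factors can be checked mechanically from the combinations of levels that actually occur in a dataset. The sketch below is illustrative only; the function name, city names, and researcher labels are hypothetical.

```python
def classify_design(observations):
    """Classify two factors as 'crossed', 'nested', or 'neither'.

    observations: iterable of (level_of_A, level_of_B) pairs, one per run.
    'nested' means B is nested within A: each B level occurs under one A level.
    """
    pairs = set(observations)
    a_levels = {a for a, _ in pairs}
    b_levels = {b for _, b in pairs}

    # Fully crossed: every A level is combined with every B level
    if len(pairs) == len(a_levels) * len(b_levels):
        return "crossed"

    # Nested: no B level is shared between different A levels
    parents = {}
    for a, b in pairs:
        parents.setdefault(b, set()).add(a)
    if all(len(p) == 1 for p in parents.values()):
        return "nested"
    return "neither"


# Every dosage is tested in both sexes
dosage_by_sex = [(d, s) for d in ("low", "medium", "high") for s in ("male", "female")]

# Each researcher works in exactly one city
city_researcher = [("Boston", "R1"), ("Boston", "R2"), ("Chicago", "R3"), ("Chicago", "R4")]

print(classify_design(dosage_by_sex))
print(classify_design(city_researcher))
```

The classification matters for analysis because a nested factor has no interaction term with its parent factor; its variation is estimated within each level of the parent instead.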

Table 1: Key Terminology in ANOVA

| Term | Definition | Example |

|---|---|---|

| Factor | An independent variable with categorical levels | Drug dosage, teaching method |

| Levels | The specific categories or conditions of a factor | Low/medium/high dosage; CBT/medication/placebo |

| Between-Group Variance | Variation between the means of different groups | Differences in average recovery time between drug dosages |

| Within-Group Variance | Variation among subjects within the same group | Differences in recovery time among patients receiving the same dosage |

| Fixed Effects | Factors where levels are specifically selected | Comparison of three specific drug formulations |

| Random Effects | Factors where levels are randomly sampled from a population | Random selection of clinics from all clinics in a country |

One-Way ANOVA

Definition and Applications

One-way ANOVA is the simplest form of analysis of variance, used to compare the means of three or more independent groups determined by a single categorical factor [36] [37]. This statistical test determines whether there are any statistically significant differences between the means of the groups or if the observed differences are due to random chance [26]. The "one-way" designation indicates that there is only one independent variable (factor) being studied, though this variable can have multiple levels [39] [36].

This type of ANOVA is particularly useful in experimental situations where researchers want to compare the effects of different treatments, conditions, or categories on a continuous outcome variable [37]. For example, in pharmaceutical research, a one-way ANOVA could be used to compare the efficacy of three different dosages of a new drug and a placebo on blood pressure reduction [36]. In agricultural studies, it might be used to compare crop yields across four different fertilizer types [35]. In psychological research, it could help determine whether three different therapies produce different outcomes on depression scores [36] [26].

The one-way ANOVA is an extension of the independent samples t-test for situations with more than two groups [26]. While a t-test can only compare two means, one-way ANOVA can simultaneously compare three or more means, controlling the Type I error rate across all comparisons [36] [37]. After obtaining a significant overall F-test in one-way ANOVA, researchers typically conduct post-hoc tests to determine which specific group means differ from each other [36] [6].

Hypotheses and Assumptions

In a one-way ANOVA, two mutually exclusive hypotheses are tested. The null hypothesis (H₀) states that all group means are equal, implying that the independent variable has no effect on the dependent variable [37]. The alternative hypothesis (H₁) states that at least one group mean is significantly different from the others, suggesting that the independent variable does influence the dependent variable [6] [37]. These hypotheses can be expressed mathematically as:

- H₀: μ₁ = μ₂ = μ₃ = ... = μₖ

- H₁: At least one μᵢ differs from the others

For valid application of one-way ANOVA, several assumptions must be met. The assumption of normality requires that the dependent variable is normally distributed within each group [6] [26]. The assumption of homogeneity of variances (homoscedasticity) requires that the population variances in each group are equal [6] [37]. The assumption of independence dictates that observations are independent of each other, meaning the value of one observation does not influence another [36] [6]. Additionally, the dependent variable should be continuous (measured at the interval or ratio level), and groups should be categorical [35] [37].

While one-way ANOVA is generally robust to minor violations of normality and homogeneity of variances, particularly with equal sample sizes, severe violations can affect the validity of results [6]. When assumptions are violated, researchers may consider data transformations, non-parametric alternatives such as the Kruskal-Wallis test, or other robust statistical methods [36] [38].

Experimental Protocol and Statistical Analysis

To illustrate a typical one-way ANOVA experimental protocol, consider a pharmaceutical research scenario comparing the efficacy of three formulations of a new antihypertensive drug. The research question would be: "Do the three drug formulations differ in their effect on systolic blood pressure reduction?" The dependent variable is the reduction in systolic blood pressure (measured in mmHg), a continuous variable. The independent variable is drug formulation, with three categorical levels: Formulation A, Formulation B, and Formulation C.

The experimental design would involve random assignment of 150 hypertensive patients into three equal groups of 50. Each group receives one of the three formulations for eight weeks. Blood pressure measurements are taken at baseline and after the treatment period, with the reduction calculated for each patient. To ensure the validity of results, researchers would control for potential confounding variables such as age, sex, baseline blood pressure, and concomitant medications through proper randomization or statistical adjustment.

Statistical analysis begins with checking ANOVA assumptions. Normality can be assessed using Shapiro-Wilk tests or normal probability plots for each group [6]. Homogeneity of variances can be tested using Levene's test or Bartlett's test [6]. If assumptions are met, the one-way ANOVA is conducted, producing an ANOVA table with between-group and within-group sums of squares, degrees of freedom, mean squares, and the F-statistic with its corresponding p-value.
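The entries of the ANOVA table can be sketched in a few lines of standard-library Python. The function name and the blood pressure values below are hypothetical and chosen only to keep the arithmetic easy to follow; this is a minimal illustration of the partitioning, not a replacement for a validated statistics package.

```python
from statistics import mean


def one_way_anova(groups):
    """Return the pieces of a one-way ANOVA table for a list of groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    group_means = [mean(g) for g in groups]

    # Between-group SS: group means around the grand mean, weighted by n.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Within-group SS: observations around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between, df_within = k - 1, n_total - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "ss_between": ss_between, "ss_within": ss_within,
        "df_between": df_between, "df_within": df_within,
        "ms_between": ms_between, "ms_within": ms_within,
        "F": ms_between / ms_within,
    }


# Hypothetical systolic BP reductions (mmHg), five patients per formulation.
reductions = [
    [10.0, 12.0, 11.0, 13.0, 9.0],   # Formulation A
    [14.0, 15.0, 13.0, 16.0, 12.0],  # Formulation B
    [11.0, 10.0, 12.0, 9.0, 13.0],   # Formulation C
]
table = one_way_anova(reductions)
for name, value in table.items():
    print(f"{name:>10}: {value:.3f}")
```

The p-value is the upper-tail probability of the F distribution with (df_between, df_within) degrees of freedom, which requires a statistics library or published tables and is therefore left out of this sketch.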

A significant F-statistic (typically p < 0.05) indicates that at least one formulation differs from the others in its effect on blood pressure reduction, but it does not identify which one [6]. To identify which specific formulations differ, post-hoc tests such as Tukey's HSD, Bonferroni, or Scheffé's method are conducted [6] [38]. These tests control the family-wise error rate while comparing all possible pairs of group means. An effect size such as eta-squared (η²) should also be reported to gauge the practical significance of the findings, indicating how much of the variance in blood pressure reduction is accounted for by the drug formulation [6]. In a one-way design, partial eta-squared is identical to eta-squared because there is only one factor.
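To make the multiple-comparison problem concrete, the following sketch counts the pairwise comparisons for three formulations, shows how the family-wise error rate inflates without adjustment, and applies the Bonferroni threshold. The inflation formula assumes independent tests, which is a simplification, and the `eta_squared` helper is a hypothetical name introduced here for illustration.

```python
from itertools import combinations

formulations = ["A", "B", "C"]
alpha = 0.05

# All unordered pairs of formulations: (A, B), (A, C), (B, C).
pairs = list(combinations(formulations, 2))
m = len(pairs)

# Chance of at least one false positive across m unadjusted tests,
# under the simplifying assumption that the tests are independent.
uncorrected_fwer = 1 - (1 - alpha) ** m

# Bonferroni: test each pair at alpha / m so the family-wise rate stays <= alpha.
bonferroni_alpha = alpha / m


def eta_squared(ss_between, ss_within):
    """Proportion of total variance attributable to the factor."""
    return ss_between / (ss_between + ss_within)


print(f"pairwise comparisons: {m}")
print(f"uncorrected family-wise error rate: {uncorrected_fwer:.4f}")
print(f"Bonferroni per-comparison alpha: {bonferroni_alpha:.4f}")
```

Bonferroni is the simplest of the listed corrections to compute by hand but also the most conservative; Tukey's HSD is usually preferred when all pairwise comparisons are of interest.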

Table 2: One-Way ANOVA Experimental Design Example

| Design Aspect | Specification | Purpose/Rationale |
|---|---|---|
| Research Question | Do three drug formulations differ in blood pressure reduction? | Defines the objective of the study |
| Dependent Variable | Reduction in systolic BP (mmHg) | Continuous outcome measure |
| Independent Variable | Drug formulation (A, B, C) | Three-level categorical factor |
| Sample Size | 50 patients per group (150 total) | Provides adequate statistical power |
| Treatment Duration | 8 weeks | Standard period for antihypertensive effects |
| Control Variables | Age, sex, baseline BP, medications | Reduces confounding effects |
| Assumption Checks | Normality, homogeneity of variance | Ensures validity of ANOVA results |
| Post-hoc Tests | Tukey's HSD | Controls Type I error in multiple comparisons |

Two-Way ANOVA

Definition and Applications

Two-way ANOVA extends the one-way approach by simultaneously examining the effects of two independent categorical factors on a continuous dependent variable [40] [37]. This method allows researchers to assess not only the main effects of each factor but also the potential interaction between them [41] [37]. The "two-way" designation refers to the presence of two independent variables, each with two or more levels, creating a factorial design where all possible combinations of factor levels are studied [40] [35].

The interaction effect is a unique and valuable aspect of two-way ANOVA that cannot be examined in one-way ANOVA [41]. An interaction occurs when the effect of one factor on the dependent variable depends on the level of the other factor [41] [37]. For example, in a pharmaceutical study, a two-way ANOVA could examine how drug type (Factor A: Drug X, Drug Y, Placebo) and patient genotype (Factor B: Variant 1, Variant 2) influence treatment response [41]. An interaction would be present if Drug X works better for patients with Variant 1, while Drug Y works better for those with Variant 2.
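The crossover pattern described above can be seen directly in the cell means. The numbers in this sketch are hypothetical and serve only to show what an interaction looks like before any formal test is run.

```python
# Hypothetical mean treatment responses for each drug x genotype cell.
cell_means = {
    ("Drug X", "Variant 1"): 12.0, ("Drug X", "Variant 2"): 6.0,
    ("Drug Y", "Variant 1"): 5.0, ("Drug Y", "Variant 2"): 11.0,
    ("Placebo", "Variant 1"): 2.0, ("Placebo", "Variant 2"): 2.0,
}
drugs = ["Drug X", "Drug Y", "Placebo"]

# Simple effect of genotype within each drug: Variant 1 minus Variant 2.
genotype_effect = {
    drug: cell_means[(drug, "Variant 1")] - cell_means[(drug, "Variant 2")]
    for drug in drugs
}

# With no interaction, the genotype effect would be the same for every drug.
has_interaction_pattern = len(set(genotype_effect.values())) > 1

for drug, effect in genotype_effect.items():
    print(f"{drug}: Variant 1 - Variant 2 = {effect:+.1f}")
print("Genotype effect varies by drug:", has_interaction_pattern)
```

Differences among sample cell means only describe the pattern. Whether the interaction is statistically significant is decided by the interaction F-test, which weighs these differences against the within-cell variability.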

Two-way ANOVA is particularly valuable in drug development and scientific research because it provides a more comprehensive understanding of how multiple factors jointly influence outcomes [41]. It allows researchers to answer complex questions such as: "Does the effect of a drug depend on patient sex?" or "Does the efficacy of a treatment vary by dosage and administration route?" [37]. By examining interaction effects, researchers can identify subgroups that respond differently to treatments, enabling more personalized and effective interventions [41].

This statistical method also increases efficiency by studying two factors in a single experiment rather than conducting separate one-way ANOVAs for each factor [41]. Additionally, including both factors in the same model can increase statistical power: when the second factor explains a meaningful share of the variance in the dependent variable, that variance is removed from the error term, which makes the F-tests for the remaining effects more sensitive [40].

Hypotheses and Assumptions

In two-way ANOVA, three sets of hypotheses are tested simultaneously. First, for the main effect of Factor A, the null hypothesis states that all level means of Factor A are equal, while the alternative states that at least one level mean differs [37]. Second, for the main effect of Factor B, the null hypothesis states that all level means of Factor B are equal, with the alternative stating that at least one level mean differs [37]. Third, for the interaction effect between Factors A and B, the null hypothesis states that there is no interaction (the effect of Factor A is consistent across all levels of Factor B, and vice versa), while the alternative states that an interaction exists [41] [37].

The assumptions for two-way ANOVA are similar to those for one-way ANOVA but apply to each cell in the design [40]. The normality assumption requires that the dependent variable is normally distributed within each combination of factor levels (each cell) [40]. The homogeneity of variances assumption (homoscedasticity) requires that the population variances in each cell are equal [40]. The independence assumption dictates that observations are independent of each other [36]. Additionally, the design should ideally be balanced, with equal sample sizes in each cell [40]. Unbalanced designs can still be analyzed, but the main-effect tests then depend on which type of sums of squares (Type I, II, or III) is used, so the choice should be reported.

When the interaction effect is statistically significant, the main effects must be interpreted with caution, as the effect of one factor is not consistent across levels of the other factor [41]. In such cases, researchers typically focus on simple effects analysis, which examines the effect of one factor at each specific level of the other factor [41].

Experimental Protocol and Statistical Analysis

Consider a detailed experimental protocol for a two-way ANOVA in drug development research. The study investigates the joint effects of drug type and patient age group on cholesterol reduction. The research question is: "Do different statin drugs have different effects on cholesterol reduction across age groups?" The dependent variable is the percentage reduction in LDL cholesterol after 12 weeks of treatment. The two factors are: (1) Drug type, with three levels (Atorvastatin, Rosuvastatin, Simvastatin); and (2) Age group, with three levels (30-45 years, 46-60 years, 61-75 years).

The experiment uses a 3 × 3 factorial design, creating nine experimental conditions with 30 patients per cell (270 in total). Because age group is a patient characteristic and cannot be assigned, patients are first stratified by age group (90 per stratum) and then randomly assigned to one of the three drugs within each stratum, which keeps the cells balanced. The study is double-blinded, with neither patients nor clinicians knowing the drug assignment. LDL cholesterol measurements are taken at baseline and after 12 weeks of treatment.
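Stratified random assignment of this kind might look like the sketch below. The patient identifiers, the function name, and the fixed seed are all hypothetical; the sketch assumes 90 enrolled patients per age stratum, which follows from 30 patients in each of the three drug arms.

```python
import random
from collections import Counter

DRUGS = ["Atorvastatin", "Rosuvastatin", "Simvastatin"]
AGE_GROUPS = ["30-45", "46-60", "61-75"]


def assign_within_strata(patients_by_age, drugs, seed=2024):
    """Shuffle each age stratum and split it evenly across the drug arms."""
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    assignment = {}
    for age_group, patient_ids in patients_by_age.items():
        shuffled = list(patient_ids)
        rng.shuffle(shuffled)
        # Patients left over when the stratum size is not divisible by the
        # number of arms stay unassigned in this simplified sketch.
        per_arm = len(shuffled) // len(drugs)
        for arm_index, drug in enumerate(drugs):
            start, stop = arm_index * per_arm, (arm_index + 1) * per_arm
            for pid in shuffled[start:stop]:
                assignment[pid] = (age_group, drug)
    return assignment


# Hypothetical patient IDs: 90 enrolled per age stratum.
patients_by_age = {
    age: [f"{age}-P{i:03d}" for i in range(90)] for age in AGE_GROUPS
}
assignment = assign_within_strata(patients_by_age, DRUGS)
cell_counts = Counter(assignment.values())

for cell, count in sorted(cell_counts.items()):
    print(cell, count)
```

Randomizing within each stratum guarantees the balanced 30-per-cell layout, whereas a single unstratified shuffle would leave the age composition of each drug arm to chance.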

Statistical analysis begins with checking the two-way ANOVA assumptions. Normality is assessed using Shapiro-Wilk tests for each of the nine cells. Homogeneity of variances is tested using Levene's test across all cells. If assumptions are violated, appropriate data transformations or alternative statistical approaches are considered.

The two-way ANOVA is then conducted, producing an ANOVA table that partitions the variance into four components: the main effect of drug type, the main effect of age group, the interaction effect between drug type and age group, and the residual (error) variance [40]. Each effect is tested using an F-statistic. If the interaction effect is statistically significant, researchers proceed with simple effects analysis rather than interpreting the main effects directly [41]. For example, they might examine the effect of drug type within each age group separately.
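The four-way partition of variance can be sketched for a balanced design with the standard library alone. The function name is hypothetical, and the tiny 2 × 2 dataset (two drugs, two age groups, two patients per cell) is invented purely so the sums of squares are easy to verify by hand; it is not the 3 × 3 study described above.

```python
from statistics import mean


def two_way_anova_balanced(data):
    """Partition variance for a balanced two-factor design.

    `data` maps (level_a, level_b) -> list of observations; every cell
    must hold the same number of observations.
    """
    a_levels = sorted({a for a, _ in data})
    b_levels = sorted({b for _, b in data})
    n = len(data[(a_levels[0], b_levels[0])])
    if any(len(obs) != n for obs in data.values()):
        raise ValueError("this sketch only handles balanced designs")

    grand = mean([x for obs in data.values() for x in obs])
    a_mean = {a: mean([x for b in b_levels for x in data[(a, b)]]) for a in a_levels}
    b_mean = {b: mean([x for a in a_levels for x in data[(a, b)]]) for b in b_levels}
    cell_mean = {key: mean(obs) for key, obs in data.items()}

    ss = {
        "A": n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels),
        "B": n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels),
        # Interaction: what is left of each cell mean after both main effects.
        "AxB": n * sum(
            (cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
            for a in a_levels for b in b_levels
        ),
        "error": sum((x - cell_mean[key]) ** 2 for key, obs in data.items() for x in obs),
    }
    df = {
        "A": len(a_levels) - 1,
        "B": len(b_levels) - 1,
        "AxB": (len(a_levels) - 1) * (len(b_levels) - 1),
        "error": len(a_levels) * len(b_levels) * (n - 1),
    }
    ms_error = ss["error"] / df["error"]
    return {
        effect: {"ss": ss[effect], "df": df[effect],
                 "F": (ss[effect] / df[effect]) / ms_error}
        for effect in ("A", "B", "AxB")
    }


# Hypothetical LDL reductions (%): factor A is drug, factor B is age group.
ldl_reduction = {
    ("Atorvastatin", "30-45"): [31.0, 33.0],
    ("Atorvastatin", "61-75"): [33.0, 35.0],
    ("Rosuvastatin", "30-45"): [35.0, 37.0],
    ("Rosuvastatin", "61-75"): [41.0, 43.0],
}
results = two_way_anova_balanced(ldl_reduction)
for effect, row in results.items():
    print(f"{effect:>4}: SS={row['ss']:.1f}  df={row['df']}  F={row['F']:.1f}")
```

Each F-statistic divides the effect's mean square by the same error mean square, which is why all three tests share the residual degrees of freedom. The formulas used here are valid only when every cell has the same number of observations.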

Table 3: Two-Way ANOVA Experimental Design Example

| Design Aspect | Specification | Purpose/Rationale |
|---|---|---|
| Research Question | Do statin drugs have different effects on cholesterol across age groups? | Examines joint effects of two factors |
| Dependent Variable | LDL cholesterol reduction (%) | Continuous outcome measure |
| Factor 1 | Drug type (Atorvastatin, Rosuvastatin, Simvastatin) | Three-level categorical factor |
| Factor 2 | Age group (30-45, 46-60, 61-75 years) | Three-level categorical factor |
| Design | 3 × 3 factorial | All combinations of factor levels |
| Sample Size | 30 patients per cell (270 total) | Provides adequate power for interaction tests |
| Study Duration | 12 weeks | Standard period for lipid-lowering effects |
| Blinding | Double-blind | Reduces bias in outcome assessment |
| Primary Analysis | Interaction effect | Tests if drug effect differs by age group |

Factorial ANOVA Designs

Extending Beyond Two Factors

Factorial ANOVA refers to the general class of ANOVA designs that involve two or more categorical independent variables, extending beyond the two-way case to include three-way, four-way, and higher-order designs [6]. In these designs, the term "factorial" indicates that all possible combinations of the levels of each factor are included in the experiment [6] [35]. For example, a 2 × 3 × 2 factorial design would include two levels of the first factor, three levels of the second factor, and two levels of the third factor, resulting in 12 unique experimental conditions [35].

As the number of factors increases, so does the complexity of the analysis and interpretation. A three-way ANOVA, for instance, includes three main effects (one for each factor), three two-way interactions (for each pair of factors), and one three-way interaction (between all three factors) [35]. The three-way interaction tests whether the two-way interaction between any two factors differs across the levels of the third factor [35]. For example, in a study examining drug type, dosage, and patient age group, a three-way interaction would indicate that the interaction between drug type and dosage varies across different age groups.
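The bookkeeping for a factorial design can be checked with a short enumeration. The level labels below are hypothetical; the sketch lists every cell of a 2 × 3 × 2 design and counts the main effects and interactions that a full model would test.

```python
from itertools import combinations, product

# Hypothetical level labels for a 2 x 3 x 2 design.
factors = {
    "drug_type": ["Drug X", "Drug Y"],
    "dosage": ["low", "medium", "high"],
    "age_group": ["younger", "older"],
}

# Every combination of levels is one experimental cell.
cells = list(product(*factors.values()))

# Every non-empty subset of factors is one testable effect:
# single factors are main effects, larger subsets are interactions.
effects_by_order = {
    order: list(combinations(factors, order))
    for order in range(1, len(factors) + 1)
}
total_effects = sum(len(effects) for effects in effects_by_order.values())

print(f"cells: {len(cells)}")
for order, effects in effects_by_order.items():
    print(f"order {order}: {len(effects)} effect(s)")
print(f"total effects: {total_effects}")
```

With k factors a full factorial model contains 2^k − 1 effects, so a fourth factor would raise the count from 7 to 15 while also multiplying the number of cells by that factor's number of levels.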

Higher-order factorial designs (four-way ANOVA and above) are rarely used in practice because the interpretation becomes extremely complex, and the number of cells, and with it the required sample size, grows multiplicatively with each additional factor [36] [35]. These designs require large numbers of experimental cells and participants to maintain adequate statistical power, making them impractical for most research settings [35]. Furthermore, higher-order interactions (three-way and above) are often difficult to interpret meaningfully and may not correspond to theoretically meaningful effects [35].

Despite these challenges, factorial designs offer significant advantages when appropriately applied. They allow researchers to study multiple factors simultaneously in a single experiment, providing greater efficiency than studying each factor separately [35]. Factorial designs also enable the investigation of interactions between factors, which often reflect the complex reality of biological and psychological phenomena where multiple variables operate together rather than in isolation [41].

Applications in Drug Development

Factorial ANOVA designs have particular relevance in drug development and pharmaceutical research, where multiple factors often influence treatment outcomes. For example, a 2 × 2 × 2 factorial design might investigate drug formulation (standard vs. extended-release), dosage (low vs. high), and administration timing (morning vs. evening) on drug bioavailability [35]. Such designs allow researchers to optimize multiple aspects of a treatment simultaneously rather than through separate, sequential experiments.

In clinical trial design, factorial ANOVAs can help identify patient subgroups that respond differently to treatments by including factors such as genetic markers, disease severity, or comorbid conditions along with treatment type [41]. This approach supports the development of personalized medicine by revealing how patient characteristics moderate treatment effects [41]. For instance, a two-way ANOVA might reveal that a new antidepressant works significantly better for patients with a specific genetic profile but shows little advantage for those with a different profile.

Factorial designs also enable more efficient use of research resources. Rather than conducting separate studies for each factor of interest, researchers can examine multiple factors in a single experiment, reducing the total number of participants needed and accelerating the research timeline [35]. This efficiency is particularly valuable in early-phase clinical trials where multiple dosage levels and administration routes may be evaluated simultaneously before selecting the most promising combinations for later-phase trials.

Table 4: Comparison of ANOVA Types

| Characteristic | One-Way ANOVA | Two-Way ANOVA | Factorial ANOVA |

|---|---|---|---|

| Number of Factors | One independent variable | Two independent variables | Three or more independent variables |