Ensuring Accuracy in Mass Spectrometry: A Comprehensive Guide to Validating Ionization Parameters with Standard Reference Materials

Abstract

This article provides a complete framework for researchers and drug development professionals to validate ionization parameters in mass spectrometry using Standard Reference Materials (SRMs). It covers the foundational role of SRMs in achieving measurement accuracy and traceability, details methodological approaches for implementing Stable Isotope Dilution and Selected Reaction Monitoring, addresses common troubleshooting scenarios for parameter optimization, and establishes protocols for cross-laboratory method validation. By synthesizing current practices from forensics, clinical research, and environmental analysis, this guide empowers scientists to enhance data reliability, improve reproducibility, and meet stringent regulatory requirements in biomedical and pharmaceutical applications.

The Critical Role of Reference Materials in Mass Spectrometry Accuracy and Precision

In analytical chemistry and drug development, reliable measurements are the cornerstone of research reproducibility, regulatory compliance, and patient safety. The validation of ionization parameters, particularly in techniques like mass spectrometry, depends fundamentally on using appropriate standard reference materials. These materials ensure that instrumental responses accurately reflect analyte concentration and composition, which is critical when studying complex pharmaceutical compounds and their metabolites. Two distinct categories of standards play pivotal roles in this process: Certified Reference Materials (CRMs) and Internal Standards (IS). While both are essential for quality assurance, they serve fundamentally different purposes within the analytical workflow [1] [2].

CRMs provide the foundational traceability to international measurement systems, establishing accuracy through an unbroken chain of comparisons to SI units. In contrast, Internal Standards correct for variability introduced during sample preparation and analysis, compensating for matrix effects and instrumental drift. This guide provides a detailed comparison of these critical materials, focusing on their optimal application in validating ionization parameters for pharmaceutical research and drug development.

Understanding Certified Reference Materials (CRMs)

Definition and Key Characteristics

Certified Reference Materials (CRMs) are reference materials characterized by a metrologically valid procedure for one or more specified properties. They are accompanied by a certificate that provides the value of the specified property, its associated uncertainty, and a statement of metrological traceability [3]. CRMs represent the highest echelon of reference materials, produced under stringent accreditation standards like ISO 17034 to ensure accuracy, traceability, and reliability [1] [4].

The certification process involves rigorous testing for homogeneity and stability, with certified values determined through validated analytical methods on qualified instrumentation [5]. These materials enable the meaningful comparison of measurement results over time and geography, establishing metrological traceability when used to calibrate or verify measurement system performance [6].

Production and Certification Process

The production of CRMs follows a meticulously controlled process:

- Homogeneity Testing: Ensures consistency of the certified property throughout all units of the material [7].

- Stability Studies: Guarantees the material's properties remain consistent over time under specified storage conditions [7].

- Characterization: Multiple independent measurement methods are often employed to determine property values [1].

- Uncertainty Evaluation: A combined uncertainty budget is established for each certified value [1].

- Certification: Accredited organizations issue certificates detailing certified values, uncertainties, and traceability [7].

This rigorous process distinguishes CRMs from other reference materials and makes them indispensable for critical measurements requiring demonstrated accuracy and traceability.

Understanding Internal Standards (IS)

Definition and Primary Functions

Internal Standards are compounds added at a known concentration to every sample—both calibrators and unknowns—at the earliest possible stage of analysis [8]. Rather than relying on absolute response, calibration is based on the ratio of response between the analyte and the Internal Standard [8]. This approach compensates for various sources of variability that can affect analytical results.
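As a minimal sketch of ratio-based calibration, the example below fits a line to analyte/IS area ratios and back-calculates an unknown. The peak areas, concentrations, and function names are hypothetical, chosen only to illustrate the arithmetic; they are not taken from any cited method.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def quantify_with_is(analyte_area, is_area, slope, intercept):
    """Convert an analyte/IS area ratio into a concentration via the ratio calibration line."""
    ratio = analyte_area / is_area
    return (ratio - intercept) / slope

# Hypothetical calibrators: (concentration in ng/mL, analyte area, IS area).
calibrators = [
    (1.0, 1050.0, 10000.0),
    (5.0, 4900.0, 9800.0),
    (10.0, 10300.0, 10300.0),
    (50.0, 49500.0, 9900.0),
    (100.0, 101000.0, 10100.0),
]
conc = [c for c, _, _ in calibrators]
ratios = [a / i for _, a, i in calibrators]
slope, intercept = fit_line(conc, ratios)

# Assumed scenario: both analyte and IS signals drop by 40% in one injection.
# The ratio is unchanged, so the back-calculated concentration is unchanged too.
unsuppressed = quantify_with_is(25000.0, 10000.0, slope, intercept)
suppressed = quantify_with_is(25000.0 * 0.6, 10000.0 * 0.6, slope, intercept)
print(round(unsuppressed, 2), round(suppressed, 2))
```

The sketch assumes the analyte and Internal Standard are affected equally, which is the ideal case that stable isotope-labeled standards approximate.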

The primary function of Internal Standards is to correct for:

- Sample Preparation Variability: Complex preparation techniques (e.g., solid-phase extraction, liquid-liquid extraction) can introduce volumetric errors that Internal Standards compensate for [2].

- Matrix Effects: Components in the sample matrix can enhance or suppress ionization efficiency in techniques like mass spectrometry [2].

- Instrumental Drift: Mass spectrometers can experience sensitivity changes over time, which Internal Standards help correct [2].

Types of Internal Standards

Table 1: Common Types of Internal Standards Used in Analytical Chemistry

| Type | Description | Common Applications |
|---|---|---|
| Stable Isotope-Labeled | Deuterated (D), ^13^C-labeled, or ^15^N-labeled versions of the analyte [2] | Ideal for quantitative MS methods; nearly identical behavior to analyte [2] |
| Structural Analogues | Compounds with similar chemical structure but different mass-to-charge ratio (m/z) [2] | Used when isotope-labeled standards aren't available [2] |
| Surrogate Compounds | Compounds not structurally related but added to monitor processing efficiency [2] | Environmental and food testing with varying matrix effects [2] |

Comparative Analysis: CRMs vs. Internal Standards

Functional Differences and Applications

Table 2: Comprehensive Comparison Between CRMs and Internal Standards

| Characteristic | Certified Reference Materials (CRMs) | Internal Standards (IS) |
|---|---|---|
| Primary Function | Calibration, method validation, quality control [1] | Correct for variability in sample preparation and analysis [2] |
| Traceability | Traceable to SI units with documented uncertainty [1] [7] | No inherent metrological traceability |
| Certification | Produced under ISO 17034 with Certificate of Analysis [1] | No formal certification; selection based on chemical similarity |
| When Used | To generate calibration curves, as spike solutions [1] | Added to all samples before processing [8] |
| Measurement Basis | Absolute response or comparison to calibration curve [8] | Ratio of analyte response to IS response [8] |
| Uncertainty | Characterized and documented [1] | Not formally characterized |
| Cost Considerations | Higher cost due to rigorous certification [1] | Variable cost; stable isotope-labeled can be expensive |
| Ideal For | Regulatory compliance, high-precision quantification [1] | Methods with multiple preparation steps, matrix effects [8] |

Complementary Roles in Analytical Workflows

Despite their differences, CRMs and Internal Standards often play complementary roles in analytical methods. CRMs establish the fundamental accuracy and traceability of measurements, while Internal Standards control the precision and variability of the analytical process. This relationship is particularly important in complex analyses such as the determination of cannabinoids in cannabis extracts, where both CRMs and specialized Internal Standards like deuterated Δ9-THC are employed to ensure accurate quantification [5].

In research on natural products and dietary supplements, the combination of matrix-based CRMs and appropriate Internal Standards has enhanced experimental rigor and benefited the study of health effects [3]. The proper application of both materials strengthens the validity of research findings and supports reproducibility.

Experimental Protocols for Method Validation

Protocol 1: Using CRMs for Ionization Parameter Validation

Objective: To validate ionization efficiency and instrument response parameters in mass spectrometry using CRMs.

Materials and Reagents:

- CRM with certified values for target analytes [1]

- Appropriate solvent matching the CRM specification

- Matrix-matched blank samples (if assessing matrix effects)

Procedure:

- CRM Reconstitution: Precisely reconstitute the CRM according to the certificate instructions, noting expiration and stability constraints [1].

- Calibration Curve Preparation: Prepare a series of calibration solutions at a minimum of five concentration levels covering the expected sample range.

- Instrumental Analysis: Analyze calibration solutions using the optimized MS parameters, monitoring ionization efficiency for each concentration level.

- Data Analysis: Plot measured response against certified values. The calibration curve should demonstrate linearity with R² ≥ 0.99 and back-calculated concentrations within ±15% of certified values [3].

- Ionization Stability Assessment: Analyze mid-level calibration standards repeatedly over 4-6 hours to monitor ionization stability.

Validation Parameters:

- Accuracy: Percent difference from certified values should be ≤15% [3]

- Precision: Relative standard deviation (RSD) of repeated measurements ≤5%

- Ionization Stability: Signal RSD over time ≤10%
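The acceptance checks in Protocol 1 can be sketched as a short script. The calibration responses and stability signals below are hypothetical, and an unweighted linear fit is assumed for simplicity; a real method may require weighted regression.

```python
from statistics import mean, stdev

def linear_fit_r2(x, y):
    """Unweighted least-squares fit; returns slope, intercept, and R squared."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot

def percent_bias(measured, certified):
    """Signed percent difference of a measured value from its certified value."""
    return 100.0 * (measured - certified) / certified

def rsd_percent(values):
    """Relative standard deviation (sample standard deviation / mean) in percent."""
    return 100.0 * stdev(values) / mean(values)

def evaluate_protocol(certified, responses, stability_signals):
    """Apply the R squared >= 0.99, bias <= 15%, and stability RSD <= 10% criteria."""
    slope, intercept, r2 = linear_fit_r2(certified, responses)
    back_calc = [(r - intercept) / slope for r in responses]
    biases = [percent_bias(b, c) for b, c in zip(back_calc, certified)]
    stability_rsd = rsd_percent(stability_signals)
    return {
        "r2": r2,
        "max_abs_bias_pct": max(abs(b) for b in biases),
        "stability_rsd_pct": stability_rsd,
        "linearity_ok": r2 >= 0.99,
        "accuracy_ok": all(abs(b) <= 15.0 for b in biases),
        "stability_ok": stability_rsd <= 10.0,
    }

# Hypothetical five-level calibration (certified ng/mL vs. peak area)
# and repeated mid-level injections collected over several hours.
certified = [1.0, 5.0, 10.0, 50.0, 100.0]
responses = [980.0, 5100.0, 9900.0, 50500.0, 99800.0]
stability = [50100.0, 49800.0, 50900.0, 49200.0, 50400.0]
report = evaluate_protocol(certified, responses, stability)
print(report)
```

With an unweighted fit the lowest calibrator usually carries the largest relative bias, so it is the level most likely to fail the ±15% criterion.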

Protocol 2: Assessing Internal Standard Performance for Ionization Correction

Objective: To evaluate and validate Internal Standard effectiveness in correcting for ionization variability.

Materials and Reagents:

- Certified Reference Material for target analyte

- Candidate Internal Standard (stable isotope-labeled analog preferred) [2]

- Blank matrix samples

Procedure:

- Sample Preparation: Spike blank matrix with a constant concentration of CRM (mid-calibration level) and varying concentrations of Internal Standard.

- Extraction Efficiency: Process samples through the entire sample preparation workflow, including extraction and clean-up steps.

- Analysis: Analyze samples using LC-MS/MS or GC-MS, monitoring both analyte and Internal Standard signals.

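- Matrix Effect Evaluation: Prepare post-extraction spiked samples and neat-solvent standards at the same nominal concentration. Calculate the matrix effect as (post-extraction spiked signal / neat standard signal) × 100%, and extraction recovery as (pre-extraction spiked signal / post-extraction spiked signal) × 100% [2].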

- Ionization Compensation Assessment: Introduce deliberate variations (nebulizer gas flow, source temperature) while monitoring analyte and Internal Standard responses.

Acceptance Criteria:

- Internal Standard Recovery: 70-120% in all samples [9]

- Signal Ratio Stability: RSD of analyte/IS response ratio ≤5% despite deliberate parameter variations

- Matrix Effect Correction: Internal Standard should normalize matrix effects to within ±10% of ideal response
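The acceptance criteria above can be checked with a few ratios. This sketch assumes a common convention: matrix effect is the post-extraction spiked signal relative to a neat-solvent standard, and recovery is the pre-extraction spiked signal relative to the post-extraction spiked signal. All peak areas and names are hypothetical.

```python
from statistics import mean, stdev

def matrix_effect_pct(post_extraction_spike, neat_standard):
    """Signal in extracted matrix relative to neat solvent; 100% means no matrix effect."""
    return 100.0 * post_extraction_spike / neat_standard

def recovery_pct(pre_extraction_spike, post_extraction_spike):
    """Fraction of signal surviving the extraction workflow, in percent."""
    return 100.0 * pre_extraction_spike / post_extraction_spike

def rsd_pct(values):
    """Relative standard deviation in percent."""
    return 100.0 * stdev(values) / mean(values)

# Hypothetical peak areas for the analyte and its Internal Standard (IS).
neat = {"analyte": 100000.0, "is": 80000.0}
post = {"analyte": 72000.0, "is": 58400.0}
pre = {"analyte": 61200.0, "is": 50224.0}

me_analyte = matrix_effect_pct(post["analyte"], neat["analyte"])
me_is = matrix_effect_pct(post["is"], neat["is"])
# IS-normalized matrix effect: close to 100% when the IS tracks the analyte.
is_normalized_me = 100.0 * me_analyte / me_is
is_recovery = recovery_pct(pre["is"], post["is"])

# Hypothetical (analyte area, IS area) pairs recorded while deliberately
# varying source settings such as nebulizer gas flow and source temperature.
varied_runs = [(72000.0, 58400.0), (65000.0, 52600.0),
               (80000.0, 65100.0), (70000.0, 56500.0)]
ratio_rsd = rsd_pct([a / i for a, i in varied_runs])

checks = {
    "is_recovery_ok": 70.0 <= is_recovery <= 120.0,
    "ratio_stability_ok": ratio_rsd <= 5.0,
    "matrix_correction_ok": abs(is_normalized_me - 100.0) <= 10.0,
}
print(me_analyte, me_is, round(is_normalized_me, 1), is_recovery, checks)
```

In this invented data set the analyte shows about 28% suppression on its own, yet the IS-normalized value stays near 100% because the Internal Standard is suppressed to almost the same degree.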

Research Reagent Solutions

Table 3: Essential Materials for Ionization Validation Studies

| Reagent/Material | Function | Example Applications |
|---|---|---|
| Single-Component CRMs | Primary calibration and ionization efficiency reference [1] | Instrument calibration, fundamental ionization studies |
| Multi-Component CRMs | Simultaneous validation of multiple analytes [5] | High-throughput method development, panel analyses |
| Stable Isotope-Labeled Standards | Optimal Internal Standards for mass spectrometry [2] | Quantitative bioanalysis, metabolic studies |
| Matrix-Matched CRMs | Validation of methods in complex matrices [3] | Biological sample analysis, environmental testing |
| Structural Analogues | Alternative Internal Standards when isotopes unavailable [2] | Pharmaceutical impurity testing, forensic analysis |

Workflow Integration and Decision Framework

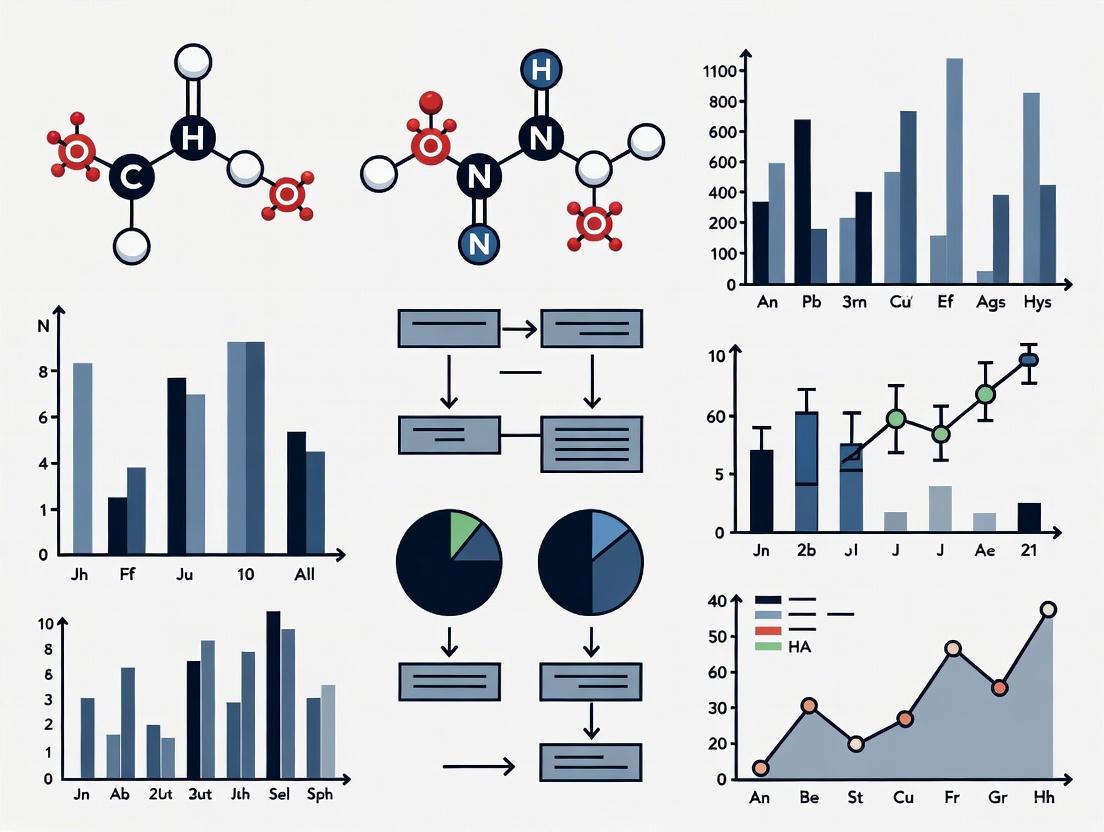

The following diagram illustrates the strategic decision process for implementing CRMs and Internal Standards in the validation of ionization parameters:

Strategic Implementation Guidance:

- CRM Selection: Choose a CRM that matches your analyte of interest in the same form as found in samples and is either already matrix-matched or can be appropriately matched during preparation [1].

- Internal Standard Decision: Internal Standards are particularly beneficial for methods with multiple sample preparation steps where volumetric recovery may vary, such as liquid-liquid extraction or solid-phase extraction [8].

- When to Avoid Internal Standards: For simple dilution-based methods with high-precision autosamplers, external standardization may be preferred as it simplifies the chromatogram and eliminates variability from Internal Standard addition and measurement [8].

Certified Reference Materials and Internal Standards serve distinct but complementary roles in validating ionization parameters for pharmaceutical research and drug development. CRMs provide the metrological foundation for accurate and traceable measurements, while Internal Standards control variability throughout the analytical process. The strategic implementation of both materials, following the experimental protocols and decision framework outlined in this guide, ensures robust method validation and reliable research outcomes. As the field advances, the continued development of matrix-matched CRMs and specialized Internal Standards will further enhance the precision and accuracy of ionization-based analytical techniques.

Ionization parameters, primarily represented by the acid dissociation constant (pKa), are fundamental molecular properties that dictate the behavior of pharmaceutical compounds in biological systems and analytical instruments. Inaccurate determination of these parameters can create a cascade of errors affecting drug discovery, development, and clinical application. This guide examines the critical consequences of relying on unvalidated ionization data and underscores the necessity of robust validation protocols using standard reference materials to ensure data integrity from the research bench to the patient's bedside.

The Fundamental Role of Ionization in Drug Properties

Ionization state influences nearly every aspect of a drug's performance. In biological systems, it determines lipophilicity, membrane permeability, and ultimately, bioavailability. A study of FDA-approved oral molecules found that approximately 70% are ionizable, a trend that has remained consistent over the past 40-50 years [10]. This prevalence highlights why accurate pKa characterization is indispensable throughout the pharmaceutical development pipeline.

The charge state of a molecule profoundly influences its lipophilicity and biopharmaceutical characteristics, affecting not only receptor affinity but also absorption, distribution, metabolism, excretion, and toxicity (ADMET) profiles [11]. For instance, basic compounds tend to show greater toxicity through mechanisms such as phospholipidosis and hERG channel binding, while acids often exhibit higher plasma protein binding, affecting volumes of distribution [11].
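To make the link between pKa and charge state concrete, the sketch below applies the Henderson-Hasselbalch relationship to a monoprotic acid or base. The pKa values are hypothetical and the function name is illustrative; the point is how a one-unit pKa error changes the predicted ionized fraction at physiological pH.

```python
def fraction_ionized(pka, ph, kind):
    """Ionized fraction of a monoprotic compound from the Henderson-Hasselbalch equation.

    kind is 'acid' (ionized form is the conjugate base, A-) or
    'base' (ionized form is the protonated species, BH+).
    """
    if kind == "acid":
        return 1.0 / (1.0 + 10.0 ** (pka - ph))
    if kind == "base":
        return 1.0 / (1.0 + 10.0 ** (ph - pka))
    raise ValueError("kind must be 'acid' or 'base'")

PLASMA_PH = 7.4

# Hypothetical basic compound: true pKa 8.4, but reported as 7.4.
true_fraction = fraction_ionized(8.4, PLASMA_PH, "base")
misreported_fraction = fraction_ionized(7.4, PLASMA_PH, "base")
print(f"true: {true_fraction:.3f}, misreported: {misreported_fraction:.3f}")
```

Under these assumed values the compound would be predicted to be half ionized instead of roughly 90% ionized, which is the kind of discrepancy that propagates into lipophilicity and permeability estimates.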

Consequences of Unvalidated Ionization Parameters

Compromised Drug Discovery and Lead Optimization

In early discovery stages, unvalidated ionization parameters can misdirect lead optimization efforts and prolong development timelines.

- Misguided Structure-Activity Relationships (SAR): When ionization is inaccurately characterized, medicinal chemists may modify molecular structures without understanding the true mediators of potency. If ionizable groups are incorrectly assumed to contribute to potency, project teams may unnecessarily protect that part of the molecule, restricting opportunities to enhance other properties [10].

- Inefficient Design-Make-Test-Analyze (DMTA) Cycles: Without accurate ionization data, multiple DMTA cycles may be wasted exploring structural modifications that ultimately prove unproductive due to charge state misunderstandings [10].

Table 1: Impact of Ionization Accuracy on Lead Optimization Decisions

| Scenario | Informed Decision with Validated pKa | Risk with Unvalidated pKa |
|---|---|---|
| Ionization influences bioactivity | Focus lead optimization on that lead series | Pursue suboptimal lead series with poor ionization properties |
| Charge state doesn't influence bioassay results | Modify structure to enhance ADMET without impacting potency | Make modifications that inadvertently reduce potency |
| Localized charge is potency-mediating | Protect that molecular region from modification | Waste cycles modifying critical ionizable groups |

Analytical Method Failures and Quality Control Issues

In analytical chemistry, inaccurate ionization parameters directly impact the reliability of chromatographic methods and quantitative analyses.

- Chromatographic Method Vulnerabilities: Chromatographers rely on pKa and related logD values to optimize HPLC and UHPLC separations. Knowing the ionic form(s) of the analytes and the pH of the mobile phase helps in selecting appropriate pH buffers and stationary phases. Unvalidated pKa values can lead to poor resolution, co-elution, and method failure [10].

- Ion Suppression Effects: In mass spectrometry, ion suppression presents a significant challenge when co-eluting compounds or matrix components compete with or block the ionization of target analytes. This phenomenon is particularly problematic in complex biological matrices and can lead to inaccurate quantification [12]. Without proper characterization of ionization behaviors, methods are vulnerable to these effects.

- Compromised Quality-by-Design (QbD): A QbD approach to analytical method development requires accurate physicochemical properties to design robust chromatographic methods. Unvalidated ionization parameters undermine this foundation [10].

Formulation Challenges and Product Stability Issues

Salt formation is a critical strategy for enhancing solubility and dissolution through pH adjustment, requiring precise knowledge of pKa values.

- Inappropriate Salt Selection: Without accurate pKa data, scientists cannot reliably identify suitable salt forms for clinical development, potentially leading to suboptimal bioavailability or stability issues [10].

- Physical Form Instability: The selection of stable physical forms depends on accurate ionization characteristics. Unvalidated parameters can result in form changes during storage or manufacturing, affecting product performance and shelf life [10].

Clinical Implications and Patient Safety Risks

The consequences of ionization inaccuracies extend beyond development into clinical application, with direct implications for patient safety.

- Variable Bioavailability: Inaccurate prediction of ionization behavior under different physiological pH conditions can lead to unexpected bioavailability variations between patients, potentially resulting in subtherapeutic dosing or toxicity.

- Drug-Drug Interactions: Unvalidated ionization parameters may fail to predict interactions when multiple drugs compete for absorption sites or metabolic pathways influenced by charge state.

- Forensic and Clinical Toxicology Limitations: In drug-facilitated crimes, rapid detection of substances like benzodiazepines in residues is crucial. Techniques like Extractive-Liquid Sampling Electron Ionization-Mass Spectrometry (E-LEI-MS) enable direct analysis of samples without pretreatment, but their reliability depends on accurate ionization characteristics of target compounds [13].

Experimental Validation: Methodologies and Protocols

Chromatographic Method Validation Protocol

To ensure accuracy in analytical methods, the following protocol validates ionization parameters for HPLC/UHPLC applications:

- Mobile Phase Preparation: Prepare buffer solutions at pH values spanning the predicted pKa (±2 pH units) using appropriate buffers (phosphate, acetate, ammonium formate).

- Column Selection: Select stationary phases with demonstrated stability across the pH range being tested.

- Retention Time Monitoring: Inject analyte standards at each pH condition and monitor retention time shifts. Retention changes most steeply with pH near pH = pKa, where the retention-versus-pH curve has its inflection point.

- Comparison with Standards: Use certified reference materials with known pKa values to validate the method under identical conditions.

- Data Analysis: Plot retention factor (k) against mobile phase pH and fit to appropriate models to determine experimental pKa.
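The data-analysis step can be sketched as a fit of retention factor against pH. The example assumes a monoprotic analyte whose observed retention factor is a weighted average of two limiting values (fully low-pH form and fully high-pH form), and it uses a simple grid search over pKa rather than a dedicated fitting library. The data are synthetic and every name is illustrative.

```python
from statistics import mean

def high_ph_fraction(ph, pka):
    """Fraction of a monoprotic analyte present in its high-pH (deprotonated) form."""
    return 1.0 / (1.0 + 10.0 ** (pka - ph))

def fit_pka(ph_values, k_values, step=0.01):
    """Grid-search pKa; for each candidate, solve the two limiting k values by linear regression.

    Model: k(pH) = k_low + (k_high - k_low) * high_ph_fraction(pH, pKa)
    Returns (pKa, k at low pH limit, k at high pH limit).
    """
    lo, hi = min(ph_values), max(ph_values)
    n_steps = int(round((hi - lo) / step))
    best = None
    for i in range(n_steps + 1):
        pka = lo + i * step
        a = [high_ph_fraction(ph, pka) for ph in ph_values]
        a_bar, k_bar = mean(a), mean(k_values)
        saa = sum((ai - a_bar) ** 2 for ai in a)
        sak = sum((ai - a_bar) * (ki - k_bar) for ai, ki in zip(a, k_values))
        slope = sak / saa
        k_low = k_bar - slope * a_bar
        sse = sum((ki - (k_low + slope * ai)) ** 2 for ai, ki in zip(a, k_values))
        if best is None or sse < best[0]:
            best = (sse, pka, k_low, k_low + slope)
    _, pka, k_low_ph, k_high_ph = best
    return pka, k_low_ph, k_high_ph

# Synthetic, noise-free data for a hypothetical acid: pKa 4.76,
# k = 8.0 when fully protonated and k = 1.0 when fully ionized.
ph_values = [2.76 + 0.5 * i for i in range(9)]
k_values = [8.0 + (1.0 - 8.0) * high_ph_fraction(ph, 4.76) for ph in ph_values]

pka, k_low_ph, k_high_ph = fit_pka(ph_values, k_values)
print(round(pka, 2), round(k_low_ph, 2), round(k_high_ph, 2))
```

The value recovered this way is an apparent pKa for the mobile-phase conditions used, which is one reason to run a reference compound with a known pKa under identical conditions.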

Table 2: Key Research Reagent Solutions for Ionization Validation

| Reagent/Material | Function | Application Context |
|---|---|---|
| Synthetic Chemical Standards | Instrument qualification, calibration, metabolite identification | Targeted metabolomics, method validation [14] |
| Matrix Reference Materials | Quality control, method validation | Bioanalytical method development, biomarker studies [14] |
| Isotopically Labelled Standards | Internal standards for quantification | LC-MS/MS method development, compensating for ion suppression [14] [12] |
| Certified Reference Materials (CRMs) | Method standardization, proficiency testing | Regulated environments, quality assurance [14] |
| Buffer Solutions (various pH) | Mobile phase preparation, pKa determination | Chromatographic method development [10] |

Mass Spectrometry Ionization Efficiency Assessment

Different ionization techniques show varying susceptibilities to matrix effects and ion suppression:

- Electrospray Ionization (ESI) Optimization: ESI is particularly prone to matrix effects but excels for polar to moderately polar compounds. Optimize sheath gas flow rate, sheath gas temperature, nebulizer pressure, and vaporizer temperature based on analyte properties [15].

- Atmospheric Pressure Photoionization (APPI) Application: APPI complements ESI, excelling for nonpolar and moderately polar analytes. It often demonstrates superior matrix tolerance compared to ESI due to its different ionization pathway [15].

- Ion Suppression Testing: Use post-column infusion methods to identify regions of ion suppression in chromatographic runs. Incorporate stable isotope-labeled internal standards to compensate for suppression effects [12].

- Alternative Ionization Techniques: Emerging techniques like Extractive Electrospray Ionization (EESI) enable direct analysis of complex matrices with minimal sample pretreatment, offering high tolerance to dirty matrices [16].
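For the post-column infusion test mentioned above, one way to flag suppression regions is to compare the infused-analyte signal against a baseline level. The sketch below uses hypothetical trace data, takes the median of the trace as the baseline, and uses an arbitrary 80% threshold; all three are assumptions to adapt to the method at hand.

```python
from statistics import median

def suppression_windows(times, signal, baseline, threshold=0.8):
    """Return (start, end) time windows where signal falls below threshold * baseline."""
    windows = []
    start = None
    previous_time = None
    for t, s in zip(times, signal):
        suppressed = s < threshold * baseline
        if suppressed and start is None:
            start = t  # entering a suppressed region
        elif not suppressed and start is not None:
            windows.append((start, previous_time))  # region ended at the prior point
            start = None
        previous_time = t
    if start is not None:
        windows.append((start, previous_time))  # trace ended while still suppressed
    return windows

def elutes_in_suppression(retention_time, windows):
    """True if an analyte's retention time falls inside any flagged window."""
    return any(start <= retention_time <= end for start, end in windows)

# Hypothetical infusion trace recorded while a blank matrix extract is injected.
times = [0.5 * i for i in range(11)]  # minutes
signal = [1000.0, 990.0, 1010.0, 600.0, 450.0, 700.0,
          980.0, 1005.0, 995.0, 760.0, 1000.0]
baseline = median(signal)

windows = suppression_windows(times, signal, baseline)
print(windows)
print(elutes_in_suppression(2.1, windows), elutes_in_suppression(3.4, windows))
```

If a target analyte elutes inside a flagged window, adjusting the gradient or improving sample clean-up is typically considered before relying on the Internal Standard alone.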

Diagram 1: Consequences of unvalidated ionization parameters across pharmaceutical development.

Standard Reference Materials: The Foundation for Accuracy

The use of certified reference materials provides the necessary foundation for validating ionization parameters throughout method development and application.

Current Practices and Identified Gaps

A recent survey within the metabolomics community revealed critical insights into standard usage and needs:

- Synthetic chemical standards are primarily used for instrument qualification (83%), calibration (78%), and metabolite identification (74%) [14].

- Matrix reference materials are mainly applied for quality control (52%) and method validation (44%) [14].

- There is strong demand for more standards, particularly for metabolite identification and quantification, with cost being a major barrier, especially for isotopically labelled standards and certified reference materials [14].

Recommended Standardization Workflow

Diagram 2: Standardization workflow for validating ionization parameters.

The consequences of unvalidated ionization parameters permeate every stage of pharmaceutical development and clinical application, from misguided lead optimization to compromised patient safety. Accurate determination and validation of pKa values and related ionization parameters are not merely academic exercises but fundamental requirements for efficient drug development and reliable analytical methods.

The path forward requires increased adoption of standardized reference materials, implementation of robust validation protocols, and greater awareness of ionization-related pitfalls across the research community. By prioritizing accuracy in ionization parameter determination, pharmaceutical scientists can mitigate risks, enhance efficiency, and ultimately deliver safer, more effective medicines to patients.

Standard Reference Materials (SRMs) serve as the metrological foundation for reliable analytical measurements across scientific disciplines, providing an unbroken chain of traceability to international standards. These certified artifacts enable researchers to quantify measurement uncertainty, validate instrument performance, and establish confidence in analytical results. This guide examines the fundamental principles by which SRMs establish measurement certainty, with particular emphasis on their application in validating ionization parameters in mass spectrometry-based assays. Through comparative evaluation of SRM types, experimental protocols, and data analysis frameworks, we provide researchers with practical methodologies for implementing traceability in quantitative analyses.

Standard Reference Materials (SRMs) are certified artifacts with well-characterized composition or properties that provide the metrological link between routine measurements and recognized standards. As defined by the International Organization for Standardization (ISO), traceability represents the "property of a measurement result whereby it can be related to a stated reference through an unbroken chain of comparisons, all having stated uncertainties" [17]. In practical terms, SRMs function as transfer standards that allow laboratories to assert traceability to relevant measurement scales maintained by national metrology institutes like the National Institute of Standards and Technology (NIST).

The hierarchy of reference materials begins with primary standards issued by authorized bodies, with Certified Reference Materials (CRMs) occupying the second-highest level in this hierarchy [1]. SRMs represent a specific class of CRMs distributed by NIST, carrying a federally registered trademark to distinguish them from commercial alternatives [17]. These materials provide the highest level of accuracy, lowest uncertainties, and direct traceability to SI units through rigorous certification processes.

For researchers validating ionization parameters, SRMs deliver three essential components: (1) metrological traceability to SI units through NIST references; (2) certified values with well-defined uncertainties; and (3) matrix-matched composition when necessary to account for sample-specific effects [18] [1]. This combination enables meaningful comparison of measurement results across different laboratories, instruments, and time periods, forming the foundation for reproducible research in drug development and analytical sciences.

The Metrological Framework of Traceability

Traceability to SI Units

The traceability chain for chemical measurements follows a hierarchical path that ultimately links to the seven base units of the International System of Units (SI). For chemical measurements, the mole serves as the base unit for amount of substance, while other SI units like the kilogram (mass), meter (length), and second (time) provide the foundation for related measurements [17]. SRMs create the critical connection between routine laboratory measurements and these primary standards through an unbroken chain of comparisons, each with documented uncertainties.

Table: SI Base Units Relevant to Chemical Measurements

| Base Quantity | Name | Symbol |
|---|---|---|
| Length | meter | m |
| Mass | kilogram | kg |
| Time | second | s |
| Amount of substance | mole | mol |
| Electric current | ampere | A |
| Thermodynamic temperature | kelvin | K |

This formalized system dates back to the Convention du Mètre of 1875, which established the framework for international measurement standardization [17]. The system ensures that a measurement of potassium concentration in clinical samples, for instance, can be directly compared to a certified value for a potassium SRM, with known uncertainty, and through it to the mole definition itself.

Uncertainty Quantification

A defining characteristic of SRMs is their comprehensive uncertainty quantification. According to the Guide to the Expression of Uncertainty in Measurement (GUM), measurement uncertainty (MU) is a "non-negative parameter characterizing the dispersion of the quantity values being attributed to a measurand" [19]. In practical terms, uncertainty provides an interval of values within which the true value is believed to lie with a stated probability.

The basic parameter of measurement uncertainty is the standard deviation, denoted as standard measurement uncertainty (u). For SRMs, the combined standard measurement uncertainty (u~c~) incorporates multiple uncertainty sources, while the expanded measurement uncertainty (U) represents the combined standard uncertainty multiplied by a coverage factor (k), typically k=2 for approximately 95% confidence [19].

When commercial manufacturers produce traceable reference materials, the stated uncertainty cannot be smaller than that of the NIST SRM used for comparison, as each comparison step introduces additional uncertainty components. For example, if a commercial CRM is certified against a NIST SRM with a standard uncertainty of 15 µg/mL, and the manufacturer's process has a standard deviation of 25 µg/mL, the expanded uncertainty (with k=2) would be calculated as: √(25² + 15²) × 2 ≈ 58 µg/mL [17].
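The arithmetic in this example can be reproduced with a root-sum-of-squares combination. The sketch assumes the uncertainty components are uncorrelated standard uncertainties in the same units; the function names are illustrative.

```python
import math

def combined_standard_uncertainty(*components):
    """Root-sum-of-squares combination of uncorrelated standard uncertainties."""
    return math.sqrt(sum(u ** 2 for u in components))

def expanded_uncertainty(components, k=2.0):
    """Combined standard uncertainty multiplied by the coverage factor k."""
    return k * combined_standard_uncertainty(*components)

# Values from the example: manufacturer's process (25 µg/mL) and NIST SRM (15 µg/mL).
u_c = combined_standard_uncertainty(25.0, 15.0)
U = expanded_uncertainty([25.0, 15.0], k=2.0)
print(f"u_c = {u_c:.2f} µg/mL, U (k=2) = {U:.2f} µg/mL")
```

Because the components add in quadrature, the result can never be smaller than the largest single component, which is why a derived CRM cannot claim a lower uncertainty than the SRM it was compared against.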

SRMs in Analytical Measurement Systems

Establishing Traceability in Spectrophotometry

NIST's Traceability in Molecular Spectrophotometry program exemplifies how SRMs provide traceability for optical measurements. This program develops, certifies, and recertifies SRMs for verifying transmittance (absorbance) and wavelength scales of spectrophotometers across ultraviolet (UV), visible (VIS), and near-infrared (NIR) spectral regions [18].

UV/visible transmittance traceability is established through the second-generation High Accuracy Spectrophotometer (HAS II), while wavelength traceability links to recognized atomic transitions that serve as secondary length standards [18]. These SRMs enable researchers to verify the transmittance and wavelength accuracy of spectrophotometric detection systems, which in turn affects detection sensitivity and quantitative accuracy.

Table: Spectrophotometry SRMs and Their Applications

| SRM Number | Description | Certification Range | Primary Application |
|---|---|---|---|
| SRM 930x | Glass Filters for Spectrophotometry | 440 nm to 635 nm | Verification of transmittance scale in visible region |
| SRM 2031x | Metal on Fused Silica Filters | 240 nm to 635 nm | UV and visible transmittance verification |
| SRM 2034 | Holmium Oxide Solution Wavelength Standard | 240 nm to 650 nm | Wavelength scale calibration at 14 absorption bands |
| SRM 2035x | UV-Vis-NIR Wavelength/Wavenumber Standard | 334 nm to 1,946 nm | Wavelength verification across multiple regions |

Role in Mass Spectrometry and Ionization Validation

In mass spectrometry, SRMs provide critical validation for ionization efficiency and instrument response. Selected Reaction Monitoring (also abbreviated SRM, where the acronym denotes the scan mode rather than a reference material) and Multiple Reaction Monitoring (MRM) mass spectrometry techniques rely on reference materials to establish quantification workflows for proteins and metabolites in complex biological samples [20] [21]. These targeted approaches use signature peptides as stoichiometric representatives of target proteins, with stable isotope-labeled standards enabling precise quantification.

The stable isotope dilution (SID)-SRM-MS approach exemplifies how reference materials establish traceability in ionization-based measurements [20]. In this method:

- Signature peptides unique to the target protein are selected

- Stable isotope standards (SIS) with identical sequence but heavier isotopes are synthesized

- A known amount of SIS peptide is spiked into the sample

- The MS response ratio between native and SIS peptides enables precise quantification

This methodology compensates for variations in ionization efficiency, sample preparation losses, and instrument performance, thereby establishing measurement certainty through internal standardization [20] [22].
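As a minimal sketch of the ratio calculation described above, the Python snippet below converts a native-to-SIS peak-area ratio into an amount. The function name, the femtomole units, and the peak areas are illustrative assumptions rather than values from the cited protocol, and the calculation assumes the native and SIS peptides respond identically in the ion source.

```python
def quantify_by_isotope_dilution(native_area: float, sis_area: float,
                                 sis_spiked_fmol: float) -> float:
    """Estimate the native peptide amount (fmol) from the light/heavy area ratio."""
    if sis_area <= 0:
        raise ValueError("SIS peak area must be positive")
    return (native_area / sis_area) * sis_spiked_fmol


# Hypothetical peak areas for one sample, with 50 fmol of SIS peptide spiked in.
clean = quantify_by_isotope_dilution(2.0e5, 1.0e5, 50.0)

# The same sample with 40% ion suppression applied equally to both forms:
# the absolute areas fall, but the ratio (and so the result) is unchanged.
suppressed = quantify_by_isotope_dilution(2.0e5 * 0.6, 1.0e5 * 0.6, 50.0)

print(f"clean: {clean:.1f} fmol, suppressed: {suppressed:.1f} fmol")
```

The second call illustrates why the ratio, rather than the raw native signal, is the quantity carried forward: any loss that affects both forms equally cancels.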

Comparative Analysis of Reference Material Types

Certified Reference Materials vs. Reference Standards

Understanding the distinction between Certified Reference Materials (CRMs) and reference standards is essential for selecting appropriate materials for measurement traceability. CRMs represent the highest category of reference materials, characterized by rigorous certification processes and comprehensive uncertainty documentation.

Table: Comparison of Certified Reference Materials vs. Reference Standards

| Feature | Certified Reference Materials (CRMs) | Reference Standards |

|---|---|---|

| Accuracy | Highest level of accuracy | Moderate level of accuracy |

| Traceability | Directly traceable to SI units | ISO-compliant |

| Certification | Includes detailed Certificate of Analysis | May include certificate |

| Uncertainty | Comprehensive uncertainty budget | Limited uncertainty information |

| Cost | Higher | More cost-effective |

| Ideal Application | Regulatory compliance, method development, high-precision work | Routine testing, qualitative analysis, method monitoring |

CRMs should be used when establishing initial method validity, generating calibration curves, or as spike solutions for standard additions. Reference standards are suitable for ongoing method verification, qualitative analysis, or situations where cost considerations preclude CRM usage [1].

Discontinued and Active NIST SRMs

The NIST SRM portfolio evolves based on technological advancements and availability of commercial alternatives. Several historically important SRMs have been discontinued in favor of commercially produced equivalent products, though recertification services for existing filters continue.

Active SRMs include:

- SRM 2031x-series: Metal on fused silica filters for UV/visible transmittance verification, certified at ten wavelengths from 240 nm to 635 nm [18]

- SRM 931x: Liquid absorbance filters containing nickel-cobalt solutions in break-open ampoules [18]

- SRM 2035x: Ultraviolet-Visible-Near-Infrared wavelength/wavenumber transmission standard, certified for seven absorbance bands in the NIR region [18]

Discontinued SRMs (with recertification still available) include SRM 930x (neutral density glass filters), SRM 1930, and SRM 2930 (extended range glass filters) [18]. This evolution reflects the maturing of the commercial reference material sector while maintaining NIST's role in providing the highest-order references.

Experimental Protocols for Traceability Establishment

Measurement Uncertainty Estimation Protocol

Establishing measurement certainty requires systematic estimation of measurement uncertainty (MU). The top-down approach utilizing quality control (QC) data provides a practical framework for clinical and analytical laboratories [19].

Step 1: Defining the Measurand. Clearly specify the quantity intended to be measured, including:

- Chemical entity and its form (e.g., arsenite vs. arsenate)

- Matrix specification (e.g., plasma, urine, water)

- Kind-of-quantity (e.g., amount-of-substance concentration)

Step 2: Estimating Imprecision. Determine intermediate imprecision (u~Imp~) under intermediate precision conditions across multiple runs, incorporating variations from:

- Calibrator and reagent batch changes

- Different operators

- Instrument maintenance cycles

- Environmental fluctuations

Step 3: Assessing Bias and its Uncertainty. When bias correction is applied, estimate the uncertainty of bias correction (u~Bias~) using:

u~Bias~ = √(u~Ref~² + u~Rep~²)

Where u~Ref~ is the uncertainty of the reference material value, and u~Rep~ is the standard error of the mean of replicate measurements of the reference material [19].

Step 4: Combining Uncertainty Components. Calculate the combined standard uncertainty of the procedure (u~Proc~):

- If bias is not significant or not evaluated: u~Proc~ = u~Imp~

- If bias is corrected: u~Proc~ = √(u~Imp~² + u~Bias~²)

Step 5: Expressing Expanded Uncertainty. Report the expanded uncertainty (U) using an appropriate coverage factor (typically k = 2 for approximately 95% confidence):

U = k × u~Proc~

SRM-Based Validation of Ionization Parameters

For mass spectrometry applications, SRMs provide a mechanism to validate ionization efficiency and instrument response. The following protocol outlines the SID-SRM-MS assay development process for quantifying low-abundance signaling proteins [20]:

Step 1: Selection of High-Responding Signature Peptides

- Identify 3-5 proteotypic peptide candidates per target protein

- Utilize prior LC-MS/MS data, public repositories (PeptideAtlas, GPMDB), or computational prediction tools

- Apply selection criteria: uniqueness to protein, length (5-25 amino acids), absence of modification sites, and tryptic ends

Step 2: Stable Isotope Standard (SIS) Peptide Synthesis

- Synthesize crude, unpurified SIS peptides with 13C/15N labels

- Ensure identical physicochemical properties to native peptides

- Validate co-elution and fragmentation pattern consistency

Step 3: Transition Optimization

- Directly infuse synthetic peptide mixture into triple quadrupole MS

- Optimize collision energy for each precursor-product ion transition

- Select 3-5 most favorable transitions per signature peptide

- Validate detectability and specificity in tryptic digest of cell extract

Step 4: Assay Qualification

- Synthesize highly pure light and heavy forms of selected signature peptides

- Evaluate sensitivity and linear dynamic range using standard addition approach

- Determine limit of detection (LOD) and limit of quantification (LOQ)

- Validate precision (typically <15-20% CV) and specificity

Step 5: Implementation for Quantitative Analysis

- Spike known amount of SIS peptides into samples

- Perform LC-SRM-MS analysis with scheduled transition monitoring

- Calculate protein concentration from native/SIS peptide ratio

- Establish measurement traceability through SRM-based calibration

The Scientist's Toolkit: Essential Research Reagents

Table: Key Reference Materials for Measurement Traceability

| Research Reagent | Function | Application Context |

|---|---|---|

| NIST SRM 2031x | UV/visible transmittance verification | Validation of spectrophotometer performance for concentration measurements |

| Holmium Oxide Solutions | Wavelength scale calibration | Verification of wavelength accuracy in spectrophotometers |

| Stable Isotope-labeled Peptides | Internal standards for quantification | Compensation for ionization efficiency variations in MS-based proteomics |

| Matrix-matched CRMs | Method validation in complex matrices | Accounting for matrix effects in environmental, clinical, or food samples |

| Single-element Standard Solutions | Instrument calibration | Establishment of calibration curves for elemental analysis |

| Quality Control Materials | Ongoing method verification | Monitoring measurement system performance over time |

Visualization of Uncertainty Components

Standard Reference Materials provide the fundamental link between routine laboratory measurements and internationally recognized standards, establishing the traceability chain essential for measurement certainty. Through well-characterized certified values with comprehensive uncertainty budgets, SRMs enable researchers to validate instrument performance, quantify measurement reliability, and compare results across time and geography. The experimental protocols and comparative frameworks presented in this guide offer practical approaches for implementing SRM-based traceability in analytical measurements, with particular relevance for ionization parameter validation in drug development research. As measurement technologies advance, the continued evolution of SRM portfolios will maintain their critical role in supporting reproducible scientific research across diverse disciplines.

In the field of analytical chemistry, particularly in mass spectrometry-based assays for drug development, the validation of ionization parameters is a critical step to ensure data accuracy and reproducibility. Ionization efficiency can be significantly compromised by matrix effects, particularly ion suppression, where co-eluting compounds interfere with the ionization of target analytes, leading to reduced detector response and erroneous quantitation [23] [24]. To control these variables and validate method performance, scientists rely on well-characterized reference materials. This guide objectively compares three cornerstone reference material types—NIST Standard Reference Materials (SRMs), isotopically-labeled compounds, and matrix-matched standards—empowering researchers to select the optimal tools for their specific validation challenges.

At a Glance: Comparison of Reference Material Types

The table below summarizes the core characteristics, primary applications, and key performance data of the three reference material types.

Table 1: Overview of Reference Material Types for Ionization Validation

| Reference Material Type | Core Characteristics & Certification | Primary Applications in Validation | Reported Uncertainty & Performance Data |

|---|---|---|---|

| NIST SRMs | Metrologically traceable certified values (CVs) and reference values [25]; values established using two or more independent methods [26]; accompanied by a certificate of analysis with stated uncertainty [25]. | Establishing measurement traceability [25]; system suitability testing and quality control [3] [27]; benchmarking laboratory performance via cross-lab comparisons [25]. | Uncertainties typically <2% for radioactivity SRMs [28]; PFAS in SRM 1957 have non-certified reference values due to isomeric complexity [25]. |

| Isotopically-Labeled Compounds | Stable isotopes (e.g., 2H, 13C, 15N) replace atoms in the analyte [29]; nearly identical chemical and physical properties to the unlabeled analyte [23]; no inherent certified value, used as an internal calibrant. | Internal standardization to correct for ion suppression and variable recovery [23]; metabolic pathway elucidation (Metabolic Flux Analysis, MFA) [29]; improving metabolite annotation in mass spectrometry [30]. | In MFA, isotopomer distributions are used to determine reaction fluxes [29]; correction accuracy depends on matching the analyte's ionization efficiency. |

| Matrix-Matched Standards | Authentic or artificial matrix spiked with analytes [3]; can be characterized in-house or obtained as CRMs (e.g., NIST SRM 1957) [25]; mimics the analytical challenges of the test sample. | Assessing accuracy and precision in the presence of matrix effects [3]; correcting for ion suppression when an exact matrix match is used [23]; validating sample preparation protocols and extraction efficiency. | Method precision and accuracy are determined during validation [3]; effectiveness depends on the consistency of the test sample matrix [23]. |

Detailed Experimental Protocols for Use

Protocol for Validating an Analytical Method with NIST SRMs

This procedure uses a NIST SRM to test the bias and precision of an analytical method, using lead in paint analysis (SRM 2569) as an example [27].

- Step 1: Material Reconstitution and Handling. If the SRM is in a different physical state (e.g., freeze-dried serum), reconstitute it exactly as specified in the certificate [25]. For paint film SRMs, avoid frequent handling to prevent deterioration [27].

- Step 2: Data Collection. Analyze the SRM using your standard method. To account for material heterogeneity, perform measurements at three or more independent locations on the material and record the results [27].

- Step 3: Data Analysis. Calculate the median of the replicate measurements. Compare this median value to the certified value on the SRM certificate. The certificate provides the certified value and its expanded uncertainty [27].

- Step 4: Assessing Method Bias. A method is considered to have significant bias if the difference between the median measured value and the certified value is larger than the combined uncertainty of the measurement and the SRM. Document this bias for method correction or improvement [27].
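Steps 3 and 4 reduce to a short comparison, sketched below in Python. The sketch assumes the measurement and certificate uncertainties are both expanded uncertainties at the same coverage level and that they combine in quadrature; the readings, certified value, and units are hypothetical and are not taken from the SRM 2569 certificate.

```python
import math
import statistics


def assess_bias(measurements, certified_value, u_certified, u_measurement):
    """Return (median, bias, is_significant) for replicate SRM measurements."""
    median = statistics.median(measurements)
    bias = median - certified_value
    # Combined uncertainty of the measurement and the SRM (quadrature sum).
    combined = math.hypot(u_certified, u_measurement)
    return median, bias, abs(bias) > combined


# Hypothetical readings at three locations on the film (mg/cm^2).
median, bias, significant = assess_bias(
    [0.98, 1.02, 1.01], certified_value=1.00,
    u_certified=0.05, u_measurement=0.04,
)
print(f"median={median:.2f}, bias={bias:+.2f}, significant={significant}")
```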

Protocol for Detecting and Compensating for Ion Suppression

Ion suppression is a critical matrix effect in LC-MS that can be detected and mitigated using the following approaches [23] [24].

Experiment A: Post-Column Infusion.

- Connect a syringe pump containing a solution of the analyte to the LC effluent via a "tee" union, post-column.

- Infuse the analyte at a constant rate while injecting a blank, prepared sample matrix (e.g., plasma) into the LC system.

- Monitor the MS signal. A drop in the otherwise stable signal indicates the retention time at which ion-suppressing compounds are eluting and ionizing [23] [24].
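Reading the infusion trace can be partly automated. The Python sketch below flags retention-time windows where the infused signal falls below a chosen fraction of its baseline. The baseline estimate (median of the first few points), the 80% threshold, and the trace values are assumptions made for illustration, not part of the cited procedure.

```python
import statistics


def suppression_windows(times, signal, baseline_points=5, drop_fraction=0.8):
    """Return (start, end) time windows where signal < drop_fraction * baseline."""
    baseline = statistics.median(signal[:baseline_points])
    threshold = drop_fraction * baseline
    windows = []
    start = end = None
    for t, s in zip(times, signal):
        if s < threshold:
            if start is None:
                start = t  # first suppressed point of a new window
            end = t
        elif start is not None:
            windows.append((start, end))  # signal recovered: close the window
            start = None
    if start is not None:
        windows.append((start, end))  # trace ended while still suppressed
    return windows


# Hypothetical infusion trace sampled every 0.5 min (arbitrary intensity units).
times = [i * 0.5 for i in range(12)]
signal = [100, 101, 99, 100, 100, 98, 60, 40, 55, 97, 100, 99]
windows = suppression_windows(times, signal)
print(windows)
```

Analytes whose retention times fall inside a flagged window are candidates for chromatographic adjustment or additional sample cleanup.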

Experiment B: Post-Extraction Spike.

- Prepare three samples: i) analyte in pure solvent, ii) blank matrix extract spiked with analyte, and iii) blank matrix spiked with analyte and taken through the full sample preparation process.

- Analyze all three and compare the peak responses. A reduced response in (ii) compared to (i) indicates ion suppression, while a difference between (ii) and (iii) indicates losses from sample preparation [23].
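The three-sample comparison is often expressed as percentages. The following Python sketch assumes the common convention of reporting matrix effect as (ii)/(i), recovery as (iii)/(ii), and overall process efficiency as (iii)/(i); the function name and peak areas are hypothetical.

```python
def matrix_effect_and_recovery(neat_area, post_spike_area, pre_spike_area):
    """Return (matrix_effect_pct, recovery_pct, process_efficiency_pct).

    neat_area:       (i)   analyte in pure solvent
    post_spike_area: (ii)  blank matrix extract spiked after extraction
    pre_spike_area:  (iii) blank matrix spiked before the full preparation
    """
    matrix_effect = 100.0 * post_spike_area / neat_area      # <100% means suppression
    recovery = 100.0 * pre_spike_area / post_spike_area      # loss in sample preparation
    process_efficiency = 100.0 * pre_spike_area / neat_area  # both effects combined
    return matrix_effect, recovery, process_efficiency


# Hypothetical peak areas for the three samples.
me, re_, pe = matrix_effect_and_recovery(1.0e6, 7.5e5, 6.0e5)
print(f"matrix effect={me:.0f}%, recovery={re_:.0f}%, process efficiency={pe:.0f}%")
```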

Compensation Strategy: Internal Standardization with Isotopic Labels.

- Spike the sample with a stable isotope-labeled analog of the analyte (e.g., 13C- or 2H-labeled) before any preparation steps.

- Process the sample and analyze by LC-MS/MS.

- The labeled internal standard will co-elute with the native analyte and experience nearly identical ion suppression and extraction losses. The analyte-to-internal standard response ratio is used for quantification, effectively correcting for the suppression [23].

The workflow below illustrates the decision-making process for selecting the appropriate reference material based on the analytical challenge and the stage of method development.

Decision Workflow for Selecting Reference Materials

The Scientist's Toolkit: Essential Research Reagents

Successful validation of ionization parameters requires a suite of reliable reagents and materials. The following table details key items and their functions.

Table 2: Essential Research Reagents for Ionization Validation

| Tool/Reagent | Function in Validation | Key Characteristics & Examples |

|---|---|---|

| Certified Reference Material (CRM) | Serves as a metrological anchor to assess method accuracy and establish traceability to SI units [3]. | e.g., NIST SRM 1957 (Human Serum) with reference values for PFAS, PCBs [25]. |

| Stable Isotope-Labeled Internal Standard | Corrects for analyte loss during preparation and ion suppression during MS analysis [23]. | 13C-, 15N-, or 2H-labeled version of the analyte; nearly identical chemical behavior [29]. |

| Matrix-Matched Quality Control Material | Monitors analytical performance and checks for matrix effects over time; can be prepared in-house [3]. | Homogenized, stable material matching test samples; should be well-characterized. |

| Post-Column Infusion Setup | Diagnoses the chromatographic location and profile of ion suppression effects [23] [24]. | Syringe pump, "tee" union, and standard solution for continuous infusion during LC run. |

| Calibration Standard Solutions | Generates the primary calibration curve for quantitation; purity is critical [25]. | Can be prepared from neat materials or purchased as certified solutions (e.g., NIST RM 8446 for PFAS) [25]. |

NIST SRMs, isotopically-labeled compounds, and matrix-matched standards are complementary tools, each with a distinct and critical role in validating ionization parameters. NIST SRMs provide the foundational metrological traceability and are the definitive choice for assessing a method's fundamental accuracy. Isotopically-labeled internal standards are the most practical solution for routinely compensating for the pervasive challenge of ion suppression in quantitative LC-MS/MS. Finally, matrix-matched standards are indispensable for evaluating a method's performance within the complex, real-world context of the sample matrix. By understanding their unique strengths and applications, scientists can design more robust validation protocols, leading to more reliable and reproducible analytical data in drug development.

In analytical chemistry and particularly in the validation of ionization parameters for mass spectrometry, understanding the distinction between accuracy and precision is fundamental to generating reliable data. While these terms are often used interchangeably in colloquial language, they represent distinct concepts in scientific measurement. Accuracy refers to how close a measurement is to the true or accepted value of the quantity being measured, indicating the correctness of the result [31] [32]. In contrast, precision refers to the reproducibility of measurements—how close repeated measurements are to one another, regardless of their proximity to the true value [31] [33]. This distinction becomes critically important when validating ionization parameters using standard reference materials, as both characteristics must be optimized to ensure data quality.

The relationship between accuracy and precision can be visualized through the classic bullseye analogy [34] [32]. Imagine four scenarios: (1) darts tightly clustered in the bullseye represent both high accuracy and high precision; (2) darts tightly clustered away from the bullseye represent high precision but low accuracy; (3) darts scattered randomly but centered around the bullseye represent high accuracy but low precision; and (4) darts scattered randomly away from the bullseye represent neither accuracy nor precision. In the context of ionization parameter validation, this analogy helps researchers distinguish between consistent but potentially biased results (precise but inaccurate) versus correct but highly variable results (accurate but imprecise).

Table 1: Key Differences Between Accuracy and Precision

| Aspect | Accuracy | Precision |

|---|---|---|

| Definition | Closeness to true value | Closeness between repeated measurements |

| Focus | Correctness | Consistency/repeatability |

| Error Type | Systematic error/bias | Random error |

| Dependency | Requires known reference value | Independent of true value |

| Quantification | Percent error, bias | Standard deviation, relative standard deviation |

Theoretical Framework: Accuracy, Precision, and Measurement Uncertainty

The Role of Systematic and Random Errors

The concepts of accuracy and precision are intrinsically linked to different types of measurement errors. Systematic errors affect accuracy by consistently biasing measurements in one direction, often due to equipment calibration issues, methodological flaws, or environmental factors [32]. These errors are particularly problematic in ionization parameter validation as they can lead to inaccurate quantification of analytes, even when measurements appear consistent. Random errors, on the other hand, affect precision by creating unpredictable variations in measurements, resulting from instrument limitations, environmental fluctuations, or operator techniques [32]. In mass spectrometry, random errors might manifest as variations in signal intensity across replicate injections of the same sample.

The International Organization for Standardization (ISO) provides formal definitions that further refine these concepts. According to ISO standards, trueness (a component of accuracy) describes the closeness of agreement between the average of a large number of test results and the true or accepted reference value [33]. Precision, meanwhile, is decomposed into repeatability (closeness of agreement under identical conditions) and reproducibility (closeness of agreement under different conditions) [33] [32]. When validating ionization parameters, both repeatability and reproducibility assessments are essential—repeatability ensures method stability under controlled conditions, while reproducibility confirms robustness across expected variations in instrumentation, operators, and environments.

Quantifying Accuracy and Precision

Accuracy is typically quantified using percent error or bias, calculated by comparing measured values to certified reference values [31] [34]:

% error = (|measured value − reference value| / reference value) × 100%

For a measurement value A with uncertainty δA, the related percent uncertainty is defined as:

% unc = (δA / A) × 100% [31]

Precision is commonly expressed through standard deviation (σ) or relative standard deviation (RSD), also known as coefficient of variation [32]. For a set of n measurements with mean x̄, the standard deviation is calculated as:

σ = √[Σ(xi - x̄)² / (n-1)] [32]

The RSD is then derived as:

RSD = (σ / x̄) × 100% [32]

These quantitative measures become essential when evaluating ionization parameters, as they provide objective criteria for comparing different parameter sets and selecting optimal configurations.
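As a brief illustration, the Python sketch below computes these metrics for a set of replicate measurements of a reference material. The replicate values and certified value are hypothetical, and bias is reported with its sign so that the direction of the error is visible.

```python
import statistics


def accuracy_and_precision(replicates, certified_value):
    """Return (mean, bias_pct, sd, rsd_pct) for replicate measurements."""
    mean = statistics.mean(replicates)
    sd = statistics.stdev(replicates)  # sample SD, n - 1 in the denominator
    bias_pct = 100.0 * (mean - certified_value) / certified_value
    rsd_pct = 100.0 * sd / mean
    return mean, bias_pct, sd, rsd_pct


# Hypothetical replicate results for a CRM certified at 10.5 (arbitrary units).
mean, bias_pct, sd, rsd_pct = accuracy_and_precision(
    [9.8, 10.1, 10.0, 9.9, 10.2], certified_value=10.5
)
print(f"mean={mean:.2f}, bias={bias_pct:+.2f}%, SD={sd:.3f}, RSD={rsd_pct:.2f}%")
```

In this example the replicates agree closely with each other (low RSD) while sitting below the certified value, which is the "precise but inaccurate" case from the bullseye analogy.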

Experimental Design: Validating Ionization Parameters Using Reference Materials

Selection and Application of Certified Reference Materials

Certified Reference Materials (CRMs) play an indispensable role in validating ionization parameters by providing traceability to international standards and known quantitative values [35] [36]. CRMs are homogeneous, stable materials with certified property values, accompanied by documented uncertainty and metrological traceability to the International System of Units (SI) [35]. In the context of ionization parameter validation for mass spectrometry, appropriate CRM selection should consider several factors: chemical relevance to expected analytes, availability, stability under analytical conditions, toxicological properties, and analytical compatibility with the intended platforms [37].

Recent research demonstrates innovative applications of CRMs across various analytical domains. In environmental analysis, newly developed soil CRMs for perfluorooctanoic acid (PFOA) and perfluorooctane sulfonate (PFOS) enable validation of ionization parameters for these challenging analytes [35]. Similarly, in medical device analysis, carefully selected polymer additive reference standards facilitate robust non-targeted analysis of extractables and leachables [37]. The metabolomics community has demonstrated particular sophistication in CRM usage, with surveys showing that 83% of laboratories employ synthetic chemical standards for instrument qualification, 78% for calibration, and 74% for metabolite identification [14].

Table 2: Certified Reference Material Applications in Analytical Validation

| Application Area | CRM Type | Validation Purpose | Key Metrics |

|---|---|---|---|

| Environmental Analysis | Soil CRMs with certified PFOA/PFOS values [35] | Ionization parameter optimization for trace contaminants | Accuracy: 94-106% Recovery; Precision: RSD < 5.5% |

| Medical Device Safety | Polymer additive reference standards [37] | Non-targeted analysis method validation | Relative Response Factor (RRF) variance |

| Metabolomics | Synthetic chemical standards, matrix reference materials [14] | Instrument qualification, calibration, identification | Method-specific uncertainty factors |

Methodologies for Ionization Parameter Validation

A robust experimental protocol for validating ionization parameters using reference materials should incorporate both targeted and non-targeted approaches, depending on the analytical objectives [14] [37]. The following workflow represents a comprehensive approach:

The experimental workflow begins with careful CRM selection based on analytical requirements, followed by sample preparation using validated protocols to maintain integrity. Ionization parameter optimization typically involves systematic variation of key parameters (e.g., spray voltage, sheath gas temperature, capillary temperature) while monitoring signal response, stability, and mass accuracy using CRMs. Data acquisition should include sufficient replicates under both identical and varied conditions to assess repeatability and reproducibility. Finally, accuracy and precision assessments against certified values and across replicates provide quantitative validation metrics.

For mass spectrometry applications, critical ionization parameters typically include:

- Ion source parameters: Spray voltage, nebulizer gas pressure, drying gas flow rate and temperature

- Mass analyzer parameters: Resolution settings, collision energies, mass calibration

- Sample introduction parameters: Flow rate, injection volume, column temperature (for LC-MS)

Each parameter set should be evaluated using CRMs with matrices matching actual samples to ensure relevant performance data. The optimal parameter combination achieves the best balance between sensitivity (signal intensity), specificity (minimal interference), accuracy (deviation from certified values <5%), and precision (RSD <10-15% depending on concentration level) [35] [37].
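One way to apply these acceptance criteria is to score each candidate parameter set against the CRM and keep only those that pass. The Python sketch below is a hypothetical illustration: the spray-voltage labels, replicate values, and the choice to rank passing sets by RSD are assumptions, and the default limits simply mirror the 5% and 15% figures quoted above.

```python
import statistics


def evaluate_parameter_set(replicates, certified_value):
    """Return (bias_pct, rsd_pct) for CRM replicates acquired with one parameter set."""
    mean = statistics.mean(replicates)
    bias_pct = 100.0 * (mean - certified_value) / certified_value
    rsd_pct = 100.0 * statistics.stdev(replicates) / mean
    return bias_pct, rsd_pct


def select_parameter_sets(results, certified_value,
                          max_bias_pct=5.0, max_rsd_pct=15.0):
    """Keep parameter sets meeting both limits, ordered by increasing RSD."""
    passing = []
    for label, replicates in results.items():
        bias_pct, rsd_pct = evaluate_parameter_set(replicates, certified_value)
        if abs(bias_pct) < max_bias_pct and rsd_pct < max_rsd_pct:
            passing.append((label, bias_pct, rsd_pct))
    return sorted(passing, key=lambda row: row[2])


# Hypothetical CRM results (ng/g) at three spray voltages; certified value 50.0.
crm_results = {
    "3.0 kV": [44.0, 45.5, 43.8],
    "3.5 kV": [49.5, 50.4, 50.9],
    "4.0 kV": [48.0, 56.0, 47.0],
}
ranked = select_parameter_sets(crm_results, certified_value=50.0)
for label, bias_pct, rsd_pct in ranked:
    print(f"{label}: bias={bias_pct:+.1f}%, RSD={rsd_pct:.1f}%")
```

Sensitivity and specificity are not scored here; in practice they would enter as additional criteria alongside bias and RSD.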

Comparative Analysis: Experimental Data Interpretation

Accuracy and Precision Metrics in Practice

When evaluating experimental data from ionization parameter validation, researchers must interpret both accuracy and precision metrics in the context of their analytical requirements. The following table illustrates typical performance expectations across different application domains:

Table 3: Performance Standards Across Application Domains

| Application Domain | Accuracy Requirement (% of certified value) | Precision Requirement (RSD) | Key Challenges |

|---|---|---|---|

| Pharmaceutical QC | 98-102% | < 2% | Matrix effects, regulatory compliance |

| Environmental Monitoring | 85-115% (method-dependent) | < 15% at LOQ | Low concentrations, complex matrices |

| Metabolomics (Targeted) | 90-110% | < 10% | Wide concentration range, structural diversity |

| Metabolomics (Non-Targeted) | Qualitative identification | < 20-30% | Unknown identification, semi-quantitation |

Recent studies highlight the practical implications of these metrics. In environmental analysis, newly developed soil CRMs for PFOA and PFOS demonstrated method accuracy of 94-106% and precision (RSD) of 4.1-5.5% when using appropriate ionization parameters [35]. In medical device safety assessment, the uncertainty factor (UF) used to calculate the Analytical Evaluation Threshold (AET) depends directly on the relative standard deviation of response factors from reference standards—highlighting how precision directly impacts safety thresholds [37].

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful validation of ionization parameters requires carefully selected reference materials and reagents. The following essential materials represent core components of the analytical chemist's toolkit for method validation:

Table 4: Essential Research Reagent Solutions for Ionization Parameter Validation

| Reagent Type | Function | Example Applications |

|---|---|---|

| Certified Reference Materials (CRMs) | Provide traceable accuracy benchmarks and precision assessment | Instrument calibration, method validation, proficiency testing [35] [36] |

| Isotope-Labeled Internal Standards | Correct for matrix effects and ionization efficiency variations | Quantitative accuracy improvement, especially in complex matrices [14] [35] |

| Matrix-Matched Reference Materials | Assess method performance in realistic sample contexts | Evaluation of matrix effects on ionization efficiency [14] [35] |

| Tuning and Calibration Solutions | Optimize and verify instrument performance | Daily performance verification, system suitability testing [36] |

| Quality Control Materials | Monitor method performance over time | Ongoing verification of accuracy and precision, batch acceptance criteria [14] |

Advanced Concepts: Uncertainty Factors and Order of Accuracy

Uncertainty Quantification in Analytical Measurements

A sophisticated understanding of measurement uncertainty is essential for proper interpretation of accuracy and precision data. The uncertainty factor (UF) represents a critical concept in non-targeted analysis, particularly relevant when validating ionization parameters for unknown compound detection. As defined in ISO 10993-18 for medical device evaluation, the UF accounts for analytical uncertainty in screening methods and is calculated as [37]:

UF = 1 / (1 - RSD)

Where RSD is the relative standard deviation of the response factors from the reference standard database, expressed as a fraction (so the expression is defined only for RSD < 1). This relationship demonstrates mathematically how precision (expressed as RSD) directly impacts the confidence in quantitative estimates: as precision decreases (higher RSD), the uncertainty factor increases. Because the AET is divided by the UF, a larger UF lowers the AET, so the screening method must detect and report compounds at lower concentrations to remain protective [37].
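A short Python sketch of the calculation follows. The response factors are hypothetical, and the RSD is taken as a fraction of the mean so that the formula UF = 1 / (1 - RSD) applies directly.

```python
import statistics


def uncertainty_factor(rsd: float) -> float:
    """UF = 1 / (1 - RSD), with RSD expressed as a fraction in [0, 1)."""
    if not 0.0 <= rsd < 1.0:
        raise ValueError("RSD must be a fraction in the range [0, 1)")
    return 1.0 / (1.0 - rsd)


def rsd_of_response_factors(response_factors) -> float:
    """Relative standard deviation (as a fraction) of a set of response factors."""
    return statistics.stdev(response_factors) / statistics.mean(response_factors)


# Hypothetical relative response factors for a small reference standard set.
response_factors = [0.6, 0.9, 1.0, 1.1, 1.4]
rsd = rsd_of_response_factors(response_factors)
print(f"RSD={rsd:.3f}, UF={uncertainty_factor(rsd):.2f}")
print(f"UF at RSD=0.5: {uncertainty_factor(0.5):.1f}")
```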

Order of Accuracy in Numerical Methods

While primarily applied to computational methods, the concept of order of accuracy provides valuable insights for analytical chemists validating ionization parameters. The order of accuracy describes how the error (E) of a measurement or calculation decreases as a parameter (h), such as step size or resolution, is refined [38]. The relationship is expressed as:

E(h) = Ch^n

Where C is a constant and n is the order of accuracy [38]. In the context of ionization parameter optimization, this concept can be extended to understand how method performance improves as parameters are progressively refined. Higher-order methods deliver dramatically improved accuracy with modest parameter refinement, but only when operating within their appropriate domain (typically with small "step sizes" or incremental changes) [38].
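Given errors measured at two refinement levels, the order n can be estimated by dividing the two instances of E(h) = Ch^n so that C cancels, which gives n = ln(E1/E2) / ln(h1/h2). The Python sketch below applies this to hypothetical values; it assumes both errors lie in the regime where the power-law model holds.

```python
import math


def observed_order(h_coarse, e_coarse, h_fine, e_fine):
    """Estimate n in E(h) = C * h**n from errors at two refinement levels."""
    return math.log(e_coarse / e_fine) / math.log(h_coarse / h_fine)


# Hypothetical errors generated from E(h) = 2 * h**2.
n = observed_order(h_coarse=0.1, e_coarse=0.02, h_fine=0.05, e_fine=0.005)
print(f"observed order of accuracy: {n:.2f}")
```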

The following diagram illustrates the relationship between error, parameter refinement, and order of accuracy:

The relationship between accuracy and precision represents more than a theoretical distinction—it embodies a fundamental principle of analytical science with direct implications for ionization parameter validation. Through strategic implementation of certified reference materials, systematic experimental design, and comprehensive data interpretation, researchers can move beyond mere tight clustering of results to achieve genuine approximation of true values. The integration of accuracy and precision assessment into method validation protocols ensures that analytical data supports robust scientific conclusions, regulatory compliance, and ultimately, public safety in applications ranging from pharmaceutical development to environmental monitoring. As analytical technologies evolve, maintaining this disciplined approach to measurement quality will continue to underpin advancements across the scientific spectrum.

Implementation Strategies: SRM Protocols for Targeted and Untargeted Analysis

Stable Isotope Dilution Selected Reaction Monitoring (SID-SRM) represents the gold standard for precise and accurate quantification of target molecules in complex biological samples, particularly in the field of proteomics. This powerful methodology combines the exceptional specificity of mass spectrometric detection with the analytical rigor of isotope dilution quantification, establishing itself as an essential tool for researchers requiring absolute quantification of proteins and peptides. SID-SRM addresses fundamental limitations of non-targeted proteomics approaches, which often struggle with detecting low-abundance molecules amid complex sample matrices, resulting in inadequate sensitivity and poor reproducibility [39].

The core principle of SID-SRM integrates two sophisticated analytical concepts: the use of stable isotope-labeled internal standards and the selective monitoring of specific ion transitions. In practice, known quantities of synthetically produced, stable isotope-labeled analogs of the target analytes (typically peptides in proteomics) are added to samples prior to processing. These internal standards, which are chemically identical to their endogenous counterparts but distinguishable by mass spectrometry, enable precise normalization throughout sample preparation and analysis. The Selected Reaction Monitoring component then provides exceptional selectivity by configuring mass spectrometers to monitor only specific precursor-to-product ion transitions unique to the target analytes, effectively filtering out interfering signals from complex sample matrices [39].

Within the broader context of validating ionization parameters using standard reference materials, SID-SRM serves as a critical validation methodology. The technique provides a robust framework for assessing and verifying ionization efficiency, matrix effects, and instrument performance through the use of well-characterized isotope-labeled standards. This application is particularly valuable in drug development, where accurate quantification of pharmacologically relevant proteins is essential for biomarker verification, pharmacokinetic studies, and therapeutic monitoring. The exceptional reproducibility and reliability of SID-SRM have established it as the preferred method when analytical rigor is paramount, especially in regulated environments where method validation is required [39].

Methodological Comparison of Targeted Quantification Approaches

SID-SRM: The Benchmark Technique

SID-SRM operates on a triple quadrupole mass spectrometer platform, where the first quadrupole (Q1) selects specific precursor ions derived from the target peptide, the second quadrupole (Q2) functions as a collision cell to fragment these ions, and the third quadrupole (Q3) monitors specific fragment ions unique to the target analyte. This two-stage mass filtering provides exceptional selectivity, effectively eliminating chemical noise and isobaric interferences that commonly plague other LC-MS techniques. The stable isotope-labeled internal standards, typically incorporating heavy isotopes such as 13C, 15N, or a combination thereof, are added at the earliest possible stage of sample preparation, ideally before protein digestion, to account for and correct variability in digestion efficiency, recovery, and ionization [39].
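The Q1/Q3 pairing described above can be sketched as a small data structure, together with the m/z offset that separates a labeled standard from its native counterpart. This is a minimal illustration, not a vendor method format: it assumes the standard carries a fully labeled C-terminal lysine (13C6,15N2) or arginine (13C6,15N4), and the `Transition` class, the `heavy_counterpart` helper, and the example m/z values are hypothetical placeholders.

```python
from dataclasses import dataclass, replace

# Monoisotopic mass shifts in Da for fully labeled C-terminal residues
# (assumed labeling scheme: 13C6,15N2-lysine and 13C6,15N4-arginine).
HEAVY_SHIFT_DA = {"K": 8.014199, "R": 10.008269}


@dataclass(frozen=True)
class Transition:
    peptide: str        # amino acid sequence, one-letter code
    q1_mz: float        # precursor m/z selected in Q1
    q3_mz: float        # fragment m/z monitored in Q3
    precursor_z: int    # precursor charge state
    fragment_z: int     # fragment charge state
    fragment_ion: str   # fragment label, e.g. "y6" or "b3"


def heavy_counterpart(light: Transition) -> Transition:
    """Return the transition for the isotope-labeled analog of `light`."""
    shift = HEAVY_SHIFT_DA[light.peptide[-1]]
    q1 = light.q1_mz + shift / light.precursor_z
    # y-ions contain the C-terminus and therefore carry the label;
    # b-ions do not, so their m/z is unchanged.
    if light.fragment_ion.startswith("y"):
        q3 = light.q3_mz + shift / light.fragment_z
    else:
        q3 = light.q3_mz
    return replace(light, q1_mz=q1, q3_mz=q3)


# Placeholder values for illustration only; they are not computed
# from the sequence.
light = Transition("PEPTIDEK", 500.0, 700.0, 2, 1, "y6")
heavy = heavy_counterpart(light)
print(f"light {light.q1_mz:.4f} -> {light.q3_mz:.4f}")
print(f"heavy {heavy.q1_mz:.4f} -> {heavy.q3_mz:.4f}")
```

Because the label sits on the C-terminal residue, only y-type fragments shift in Q3, which is one practical reason y-ions are convenient for paired light/heavy monitoring.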

The quantification power of SID-SRM stems from the nearly identical physicochemical properties shared by the native analyte and its isotope-labeled counterpart. These analogs co-elute during chromatography, exhibit nearly identical ionization efficiencies, and generate equivalent fragment ions, yet remain distinguishable by mass spectrometry due to their mass difference. This enables the internal standard to track the native analyte throughout the entire analytical process, correcting for losses during sample preparation, matrix-induced ionization suppression, and instrument variability. The resulting analyte-to-internal standard response ratio provides a stable foundation for precise quantification, typically yielding coefficients of variation below 15% and often below 10% for well-optimized assays [39].
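The stabilizing effect of the response ratio can be illustrated with a short calculation. The sketch below assumes single-point quantification against a known spike amount and equal response of the native and labeled forms, which is a simplification of calibration-curve quantification. The function names and the peak areas are synthetic, chosen only to show how the ratio cancels run-to-run signal variation.

```python
from statistics import mean, stdev


def quantify_fmol(light_area: float, heavy_area: float, heavy_spiked_fmol: float) -> float:
    """Estimate the native analyte amount from the light/heavy area ratio."""
    return light_area / heavy_area * heavy_spiked_fmol


def cv_percent(values: list[float]) -> float:
    """Coefficient of variation (sample standard deviation / mean) in percent."""
    return 100.0 * stdev(values) / mean(values)


# Synthetic replicate injections: (light area, heavy area). The absolute
# signal drifts between runs (e.g. ion suppression), but both forms are
# affected equally.
replicates = [(1000.0, 2000.0), (800.0, 1600.0), (1200.0, 2400.0)]
spike_fmol = 100.0  # assumed amount of labeled standard added per sample

raw_light = [light for light, _ in replicates]
amounts = [quantify_fmol(light, heavy, spike_fmol) for light, heavy in replicates]

print(f"CV of raw light areas:       {cv_percent(raw_light):.1f}%")
print(f"CV of ratio-derived amounts: {cv_percent(amounts):.1f}%")
```

In this idealized data set the raw areas vary by 20% while the ratio-derived amounts do not vary at all; real assays fall between these extremes.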

Comparative Analysis with Alternative Techniques

Table 1: Technical Comparison of Targeted Proteomics Quantification Methods

| Parameter | SID-SRM | PRM | DIA/SWATH | Western Blot |
|---|---|---|---|---|
| Quantification Type | Absolute (with standards) | Relative or Absolute | Mostly Relative | Relative |
| Precision (CV%) | 5-15% | 8-20% | 15-30% | 15-50% |
| Dynamic Range | 3-4 orders of magnitude | 3-4 orders of magnitude | 2-3 orders of magnitude | 1-2 orders of magnitude |
| Multiplexing Capacity | Moderate (dozens to ~100 targets) | Moderate (similar to SRM) | High (thousands of targets) | Low (typically 1-3 targets) |
| Selectivity | Excellent (two stages of mass selection) | Excellent (high-resolution isolation and detection) | Good (chromatographic deconvolution required) | Variable (antibody dependent) |
| Throughput | Medium | Medium | High | Low |
| Internal Standard Integration | Built-in to methodology | Possible but less established | Challenging | Not applicable |
| Antibody Requirement | No | No | No | Yes |

Parallel Reaction Monitoring (PRM) represents a technological evolution of SRM that operates on high-resolution mass spectrometers. While SRM monitors predefined fragment ions on a triple quadrupole instrument, PRM acquires full fragment ion spectra for selected precursors on instruments like Orbitrap or Q-TOF platforms. This approach offers greater flexibility in post-acquisition data analysis, as researchers can theoretically extract any fragment ion from the acquired data without predefining transitions. PRM typically provides improved selectivity due to higher mass resolution and accuracy, with studies demonstrating superior anti-background interference capabilities compared to conventional SRM [39].

Data-Independent Acquisition (DIA) methods, such as SWATH-MS, represent a different paradigm that systematically fragments all ions within sequential isolation windows across the full mass range. This comprehensive approach generates complex datasets containing information on virtually all detectable analytes, creating a permanent digital record of the sample that can be mined retrospectively. SWATH-MS combines the advantages of DIA with high-resolution targeted data extraction, enabling the quantification of thousands of proteins in a single analysis without predefining targets. However, this comprehensiveness comes with trade-offs in sensitivity and dynamic range compared to targeted approaches like SID-SRM, particularly for low-abundance analytes [39].
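The idea of sequential isolation windows can be made concrete with a short sketch. The precursor range of 400-1200 m/z and the fixed 25 m/z window width used below are illustrative assumptions, not values from the source, and `isolation_windows` is a hypothetical helper. Real acquisition schemes often use variable-width or overlapping windows.

```python
def isolation_windows(mz_start: float, mz_end: float, width: float) -> list[tuple[float, float]]:
    """Tile [mz_start, mz_end] with consecutive fixed-width isolation windows."""
    if width <= 0:
        raise ValueError("width must be positive")
    windows = []
    lower = mz_start
    while lower < mz_end:
        upper = min(lower + width, mz_end)  # clip the last window to the range
        windows.append((lower, upper))
        lower = upper
    return windows


# Assumed acquisition range and window width, for illustration only.
windows = isolation_windows(400.0, 1200.0, 25.0)
print(len(windows), "windows per cycle")
print("first:", windows[0], "last:", windows[-1])
```

Every precursor falling inside a window is fragmented together, which is why DIA spectra are more complex than SRM traces and require deconvolution during data extraction.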

Experimental Protocols for SID-SRM

Method Development and Validation Protocol

The development of a robust SID-SRM assay begins with the careful selection of proteotypic peptides, that is, peptides that uniquely represent the target protein and exhibit favorable physicochemical properties for LC-MS analysis. These peptides should ideally be 7-20 amino acids in length, contain no missed cleavage sites, and exclude chemically unstable residues (e.g., methionine, N-terminal glutamine) and known sites of post-translational modification. Following peptide selection, preliminary experiments using synthetic peptides identify optimal precursor ions and fragment ions, typically prioritizing y-ions that are abundant and unique to the peptide. For each peptide, 3-5 transitions are initially monitored; these are subsequently refined to the 2-3 best transitions for final quantification based on signal intensity and specificity [39].
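The sequence-level selection rules above lend themselves to a simple screening function. The sketch below is an assumption-laden illustration: it checks only length, methionine, N-terminal glutamine, and tryptic missed cleavages (an internal K or R not followed by P). It does not test uniqueness to the target protein or known modification sites, which require a proteome database. The function name and the example sequences are hypothetical.

```python
import re


def screen_peptide(peptide: str) -> list[str]:
    """Return the list of selection criteria that `peptide` fails (empty = pass)."""
    problems = []
    if not 7 <= len(peptide) <= 20:
        problems.append("length outside 7-20 residues")
    if "M" in peptide:
        problems.append("contains methionine (oxidation-prone)")
    if peptide.startswith("Q"):
        problems.append("N-terminal glutamine (cyclization-prone)")
    # Trypsin cleaves after K/R except before P, so a K or R followed by
    # any residue other than P indicates a missed cleavage site.
    if re.search(r"[KR][^P]", peptide):
        problems.append("internal missed cleavage site")
    return problems


for candidate in ["LVNEVTEFAK", "QLVNEVTEFAK", "LVMEVTEFAK", "LVNEKTEFAK", "LVNEK"]:
    failures = screen_peptide(candidate)
    print(candidate, "->", "pass" if not failures else "; ".join(failures))
```

Returning the failed criteria rather than a bare true/false makes it easier to see why a candidate was rejected when few peptides are available for a given protein.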

The stable isotope-labeled internal standards are crucial components that should mirror the native peptides as closely as possible, typically incorporating 13C and/or 15N atoms on C-terminal lysine or arginine residues to ensure identical chromatographic behavior and ionization efficiency. These standards are synthesized with high isotopic purity (>98%) and quantified precisely to enable accurate spiking. Method validation includes assessment of linearity (typically R² > 0.99), lower limit of quantification, precision (intra- and inter-day CV < 15-20%), accuracy (85-115% of expected values), and selectivity in the presence of matrix components. Additional validation parameters include stability assessments, dilution integrity, and determination of the assay's dynamic range, which typically spans 3-4 orders of magnitude [39].
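The acceptance criteria listed above can be expressed as simple checks. The following sketch assumes an unweighted linear calibration, whereas many bioanalytical methods use 1/x or 1/x² weighting, and applies the 15% CV and 85-115% accuracy limits quoted in the text. The function names and the example data are synthetic and purely illustrative.

```python
from statistics import mean, stdev


def r_squared(x: list[float], y: list[float]) -> float:
    """Coefficient of determination for an unweighted straight-line fit."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy ** 2 / (sxx * syy)


def qc_level_passes(measured: list[float], nominal: float,
                    max_cv: float = 15.0,
                    accuracy_limits: tuple[float, float] = (85.0, 115.0)) -> bool:
    """Check precision (CV%) and accuracy (% of nominal) for one QC level."""
    cv = 100.0 * stdev(measured) / mean(measured)
    accuracy = 100.0 * mean(measured) / nominal
    return cv <= max_cv and accuracy_limits[0] <= accuracy <= accuracy_limits[1]


# Synthetic calibration data: nominal concentration vs. light/heavy area ratio.
conc = [1.0, 5.0, 10.0, 50.0, 100.0]
ratio = [0.011, 0.049, 0.102, 0.495, 1.010]
print("linearity acceptable:", r_squared(conc, ratio) > 0.99)

# Synthetic QC replicates back-calculated at a nominal 10 ng/mL.
print("QC level acceptable:", qc_level_passes([9.5, 10.0, 10.5], nominal=10.0))
```

A looser CV limit, such as the 20% mentioned in the text for some assays, can be passed through `max_cv` rather than hard-coded.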

Sample Preparation and Analysis Workflow

Table 2: Key Research Reagent Solutions for SID-SRM

| Reagent Category | Specific Examples | Function in SID-SRM Workflow |
|---|---|---|
| Stable Isotope-Labeled Standards | AQUA peptides, PSAQ standards, Full-length protein standards | Internal standards for precise quantification; correct for sample preparation losses and ionization variability |
| Digestion Enzymes | Trypsin, Lys-C | Protein cleavage into measurable peptides; trypsin most commonly used for its specificity and reliability |
| Reduction/Alkylation Reagents | Dithiothreitol (DTT), Iodoacetamide | Protein denaturation and cysteine modification for consistent digestion |
| Chromatography Columns | C18 reverse-phase columns (e.g., 75 μm ID, 15-25 cm length) | Peptide separation prior to MS analysis; reduces matrix effects |
| Mobile Phase Additives | Formic acid, Acetonitrile, Methanol | LC solvent system for optimal peptide separation and ionization |
| Quality Control Materials | Standard Reference Materials, Pooled quality control samples | Method performance verification and batch-to-batch monitoring |

The sample preparation workflow for SID-SRM begins with the precise addition of stable isotope-labeled standards to the biological sample immediately upon collection or following protein extraction. For absolute quantification, the amount of internal standard added should approximate the expected endogenous levels. Following standard addition, proteins are denatured, reduced, and alkylated using standard protocols, then digested using a specific protease (typically trypsin) under controlled conditions. The resulting peptide mixture is desalted using solid-phase extraction, concentrated, and reconstituted in an appropriate LC-MS compatible solvent [39].

Chromatographic separation is typically performed using nanoflow or conventional high-performance liquid chromatography with reverse-phase C18 columns, employing gradient elution with water/acetonitrile mobile phases containing 0.1% formic acid. The mass spectrometric analysis is conducted on a triple quadrupole instrument operated in SRM mode, with dwell times optimally adjusted to ensure sufficient data points across chromatographic peaks (typically 10-15 points per peak). Data processing involves integration of the extracted ion chromatograms for both native and isotope-labeled peptides, calculation of peak area ratios, and interpolation from a calibration curve prepared using authentic standards analyzed in the same batch [39].
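The dwell-time adjustment mentioned above follows from simple arithmetic: the number of points per peak fixes the cycle time, and the cycle time is shared among all concurrently monitored transitions. The sketch below is a rough budget under stated assumptions. The `pause_ms` inter-transition overhead is a hypothetical parameter whose value is instrument-specific, and the peak width and transition count are example figures only.

```python
def dwell_time_ms(peak_width_s: float, points_per_peak: int,
                  concurrent_transitions: int, pause_ms: float = 0.0) -> float:
    """Dwell time per transition that yields the requested points per peak."""
    cycle_time_ms = 1000.0 * peak_width_s / points_per_peak
    dwell = cycle_time_ms / concurrent_transitions - pause_ms
    if dwell <= 0:
        raise ValueError("too many concurrent transitions for this cycle time")
    return dwell


# Example figures (assumptions): 30 s wide peaks, 12 points per peak,
# 50 transitions monitored at the same time.
print(f"{dwell_time_ms(30.0, 12, 50):.1f} ms per transition")
```

The same relationship shows why retention-time scheduling helps: reducing the number of transitions monitored at any moment increases the dwell time available to each without sacrificing points per peak.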

Experimental Data Supporting SID-SRM Superiority

Quantitative Performance Metrics

The exceptional analytical performance of SID-SRM is demonstrated through extensive method validation data across numerous applications. In comparative studies evaluating quantification of candidate biomarker proteins in plasma, SID-SRM has consistently demonstrated inter-assay precision of 5-15% CV, significantly outperforming antibody-based methods like Western blotting (typically 15-50% CV) and label-free approaches (20-40% CV). The accuracy of SID-SRM, as determined by recovery experiments using spiked proteins in complex matrices, typically ranges from 85-115%, even at concentrations near the lower limit of quantification. This level of precision and accuracy is maintained across the assay's dynamic range, which typically spans 3-4 orders of magnitude, enabling reliable quantification of analytes from low ng/mL to μg/mL concentrations in biological matrices [39].

The sensitivity advantage of SID-SRM becomes particularly evident when analyzing low-abundance proteins in challenging matrices. In studies focused on quantifying signaling proteins in cell lysates, SID-SRM has demonstrated detection limits in the attomole range, substantially lower than what can be typically achieved with DIA methods like SWATH. This sensitivity stems from the efficient noise rejection inherent in the two-stage mass filtering process, which dramatically improves signal-to-noise ratios compared to less selective acquisition methods. When directly compared to PRM, SID-SRM typically shows comparable sensitivity for most applications, though PRM may offer advantages for certain analytes due to its higher resolution and mass accuracy [39].

Applications in Pharmaceutical and Clinical Research

The application of SID-SRM in drug development spans multiple critical areas, including pharmacokinetic studies of biotherapeutics, biomarker verification, and analysis of pharmacodynamic markers. In one representative study quantifying monoclonal antibodies in serum, SID-SRM results correlated strongly with ELISA (R² = 0.98) while offering advantages in multiplexing capacity and specificity. For biomarker verification, SID-SRM has emerged as the method of choice for transitioning from discovery-phase findings to validated assays, with the National Cancer Institute's Clinical Proteomic Tumor Analysis Consortium (CPTAC) frequently employing SID-SRM for cross-platform verification of candidate biomarkers [39].